Permabit Albireo SANblox is a purpose-built data reduction appliance designed to unlock more capacity from Fibre Channel SANs. Permabit estimates users will see at least a 6:1 reduction in the data footprint that resides on the SAN, which substantially changes the value proposition of a storage investment. SANblox offers deduplication and compression along with thin provisioning. All of the data reduction is done inline: the SANblox appliance simply slips in ahead of and virtualizes the SAN, and both the SAN and the application are oblivious to its presence. SANblox works with any FC storage regardless of disk configuration; hard drive, hybrid and all-flash solutions will all see the same reduction in data footprint.

A 6X data reduction is commonly accepted as a good mark for standard enterprise mixed application workloads. Depending on how the storage is used, though, the numbers can go much higher. VDI use cases can drive the benefit of SANblox skyward by an order of magnitude, and IT shops that keep multiple copies of databases for development, for instance, will see huge data footprint reductions. In fact, simply being able to spin off copies of data for development purposes may enable new business processes where the cost of deploying complete data sets was previously too high.

For its part, Permabit has been in the deduplication business for a long time. While data reduction wasn’t widely popular outside of backup appliances until recently, flash-based appliances have driven the concept to more mainstream workloads. The deduplication technology behind many of those all-flash appliances is more likely than not a Permabit solution. Deduplication isn’t everywhere, though: hard drive arrays and even most hybrids simply aren’t built with that concept in mind, and even many flash arrays offer a limited set of data reduction services. Permabit opens up these services via the SANblox appliance, giving new or existing storage a new set of tricks.

The Permabit Albireo SANblox is shipping now with an MSRP that varies depending on the storage vendor the unit is paired with and on promotional pricing. Pricing arguments work out best when the capacity is large enough to get economies of scale. Permabit included a pricing example to show how traditional flash storage stacks up against an environment with SANblox:

- Cost for 60 TBs raw: $720,000

- Cost after data protection overhead: $12/GB

- Cost for SANblox 6:1 capacity savings: $70,000

- Cost for 10TB after data protection overhead: $120,000

- Total cost before discounts: $190,000

- Effective cost per GB (storage + SANblox) before discounts: $3.16

- Net savings: 74%
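
The arithmetic behind this example can be checked with a short sketch. It assumes decimal units (1TB = 1,000GB) and that both scenarios deliver the same 60TB of capacity; the variable names are illustrative and not taken from Permabit.

```python
# Figures taken from Permabit's pricing example above.
GB_PER_TB = 1000  # assumption: decimal units

target_tb = 60
flash_only_cost = 720_000     # 60TB of flash at $12/GB
sanblox_cost = 70_000         # SANblox providing 6:1 capacity savings
reduced_flash_cost = 120_000  # 10TB of flash at $12/GB

total_with_sanblox = sanblox_cost + reduced_flash_cost
effective_per_gb = total_with_sanblox / (target_tb * GB_PER_TB)
net_savings = 1 - total_with_sanblox / flash_only_cost

print(f"Total with SANblox: ${total_with_sanblox:,}")
print(f"Effective cost per GB: ${effective_per_gb:.2f}")
print(f"Net savings: {net_savings:.0%}")
```

Rounded to the cent, the effective cost comes out at about $3.17/GB; the $3.16 in Permabit's example appears to be the same value truncated rather than rounded.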

Permabit Albireo SANblox Specifications

- CPU: Intel Xeon E5-1650v2

- RAM: 128 GB

- FC Ports: 4 x 16 Gb (Emulex)

- Max. Usable Capacity: 256 TiB

- Max. Supported LUNs: 256

- Random IO (4K IOPS):

  - Read: 230,000

  - Write: 111,000

  - Mixed RW70: 180,000

- Sequential Throughput:

  - Read: 1045MB/s

  - Write: 800MB/s

- Min Latency:

  - Read: 300us

  - Write: 400us

- Reliability: All data/metadata is written to backend storage before writes are acknowledged. No data is cached on SANblox.

- Availability: Seamless High Availability provides transparent failover in under 30s.

- Serviceability: SMTP alerting and transparent upgrades of software and hardware components.

- Physical Characteristics:

  - Form Factor: 1U rackmount

  - Width: 17.2” (437 mm)

  - Weight: 38lbs (16.5kg)

- Power:

  - Voltage: 100-240V, 50-60 Hz

  - Watts: 330

  - Amps: 4.5 max

- Operating Temperature: 10°C to 35°C (50°F to 95°F)

- Operating Relative Humidity: 8% to 90% (non-condensing)

- Certifications:

  - Electromagnetic Emissions: FCC Class A, ICES-003, CISPR 22 Class A, AS/NZS CISPR 22 Class A, EN 61000-3-2/-3-3, VCCI:V-3, KN22 Class A

  - Electromagnetic Immunity: CISPR 24, KN 24, (EN 61000-4-2, EN 61000-4-3, EN 61000-4-4, EN 61000-4-5, EN 61000-4-6, EN 61000-4-8, EN 61000-4-11)

- Power Supply Efficiency: 80 Plus Gold Certified

Deduplication

Deduplication is simply the process of preventing duplicate data from taking up valuable space in primary storage. The difference between buying a data reduction appliance specifically for backup as opposed to one designed for primary storage may be confusing to some buyers. Primary storage with data reduction is designed to optimize performance for random access to fixed-size blocks of data. To hit that performance, primary storage data reduction works on fixed chunks of data, typically a larger number of smaller blocks (though this varies by vendor). A deduplicating backup appliance, on the other hand, focuses on sequential throughput in order to speed up the backup and restore processes. With that sequential focus, backup dedupe appliances are able to process large streams of data and write them to media with variable chunk sizes. This means the appliance can use larger chunks and therefore has fewer chunks to keep track of; the downside is that if only a small amount of data needs to be read back, the entire chunk must still be read.

As far as deduplication goes, there are two main ways to carry it out: inline or post-process. Inline deduplication simply means that as data moves toward its target, duplicates are found and never written. Because it is inline, caches and tiers of faster storage in hybrid arrays all benefit from an increase in effective capacity. This both saves disk space and reduces writes to flash media (flash can only take so many writes before it begins to degrade). On top of these benefits, inline deduplication also allows for immediate replication for data protection. The downside of inline deduplication is a performance hit at write time that is almost unavoidable.
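
To make the inline idea concrete, here is a minimal toy sketch of a fixed-block, inline deduplicating store. It is an illustration only and not Permabit's algorithm: the class name, the use of SHA-256 fingerprints, and the in-memory dictionaries standing in for backend storage are all assumptions, and it omits details such as reclaiming blocks after overwrites.

```python
import hashlib

BLOCK_SIZE = 4096  # fixed 4K blocks (assumed granularity for this sketch)


class InlineDedupStore:
    """Toy inline deduplicating block store (illustration only)."""

    def __init__(self):
        self.blocks = {}         # fingerprint -> block data (stands in for the backend)
        self.lba_map = {}        # logical block address -> fingerprint
        self.backend_writes = 0  # number of blocks actually written to the backend

    def write(self, lba, data):
        if len(data) != BLOCK_SIZE:
            raise ValueError("writes must be exactly one block")
        fingerprint = hashlib.sha256(data).digest()
        if fingerprint not in self.blocks:
            # Only previously unseen content reaches the backend.
            self.blocks[fingerprint] = data
            self.backend_writes += 1
        # Duplicates only update the logical-to-physical mapping.
        self.lba_map[lba] = fingerprint

    def read(self, lba):
        return self.blocks[self.lba_map[lba]]

    def reduction_ratio(self):
        # Logical blocks stored per unique physical block.
        return len(self.lba_map) / len(self.blocks)


store = InlineDedupStore()
common_block = bytes(BLOCK_SIZE)  # zero-filled block standing in for shared data
for lba in range(6):
    store.write(lba, common_block)
store.write(6, b"\x01" * BLOCK_SIZE)

print(store.backend_writes)     # 2 unique blocks written
print(store.reduction_ratio())  # 3.5 (7 logical blocks / 2 unique blocks)
```

The fingerprint lookup on every write is where the write-time cost of inline deduplication comes from; in exchange, the duplicate blocks never consume backend capacity or flash write cycles.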

Post-process deduplication means the deduplication process begins once the data has hit its storage target or a storage cache. While this can avoid the initial performance hit at write time, it introduces other issues. For one, duplicates take up storage space while they wait for the deduplication process to begin (or to catch up, if it is always running). If the data is sent to a cache first, the cache can rapidly fill up. As a result, hybrid arrays may only see capacity savings at the lowest tier. Writing everything to the storage media before dedupe can also take a larger toll on flash. And while the initial performance hit may be skipped, the deduplication process will still consume resources once it begins.

Performance is usually the largest concern from a vendor perspective, as vendors don’t want their appliance running slower than their competitors’ (even though it will use less disk space overall). The performance hit and the overall cap on performance come from a combination of the resources available and the specific software used within the given appliance. While performance can also be a major concern for customers, they are also worried about data loss, as the deduplication process changes how data is stored versus how it was initially written.

So where does Permabit fit among these approaches to deduplication? Permabit sits in front of a SAN and deduplicates data as it moves toward its target. Permabit uses an inline, multi-core scalable, low-memory-overhead method of deduplication. Looking specifically at the device we are testing, the Permabit Albireo SANblox can index data for deduplication at 4K granularity in a primary storage environment. It can take 256TB of provisioned LUNs and present them as 2.5PB of logical storage, yet it does so with only 128GB of RAM. This allows the device to tackle two aspects of performance, both by reading back smaller chunks of data and by using fewer resources. Another way Permabit addresses performance is by having its software embedded into an appliance. Permabit states that customers that use this method have seen performance greater than 600,000 IOPS.
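
A rough back-of-the-envelope calculation shows why the memory figure is notable. The sketch below assumes binary units (TiB, GiB) and, unrealistically, that all of the appliance's RAM is available for the deduplication index; it says nothing about how Permabit actually structures its index.

```python
KIB = 1024
GIB = 1024 ** 3
TIB = 1024 ** 4

physical_capacity = 256 * TIB  # maximum usable capacity from the spec sheet
block_size = 4 * KIB           # 4K deduplication granularity
ram = 128 * GIB                # total appliance RAM (upper bound for any index)

physical_blocks = physical_capacity // block_size
ram_per_block = ram / physical_blocks

print(f"4K blocks to track: {physical_blocks:,}")
print(f"RAM available per block: {ram_per_block:.1f} bytes")
```

Under these assumptions there are at most about 2 bytes of RAM per physical 4K block, far less than a typical 32-byte cryptographic fingerprint, so a naive in-memory table with one full hash per block would not fit.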

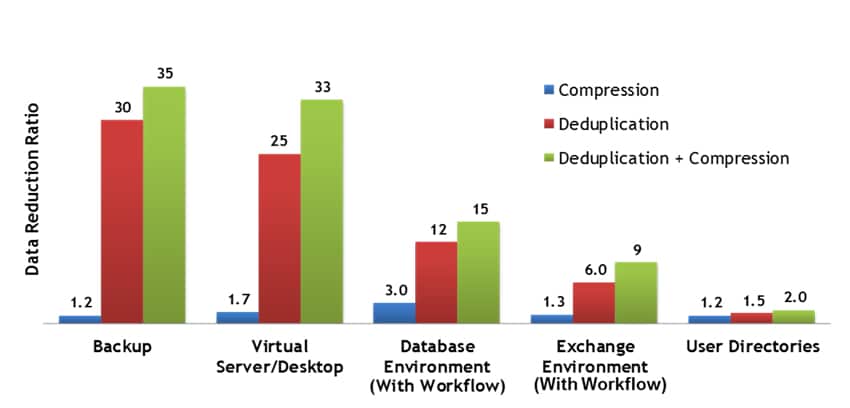

It is easy for any company to say that its product (in this case deduplication) is wonderful at what it does. But it is always better when some proof can be provided in a context that can be understood by customers and by vendors looking to combine Permabit with their SAN appliances. A few years ago Permabit ran a study with Enterprise Strategy Group (ESG). The study looked at data reduction ratios in various environments and compared compression alone, deduplication alone, and compression and deduplication combined.

Setup and Configuration

The SANblox appliance is a 1U server that essentially inserts itself into the data path for LUNs that get routed through. Of course not all LUNs have to go through the SANblox. SANblox units are usually deployed in HA pairs, and depending on needs or capabilities of the underlying storage, multiple HA pairs can be used to address any storage or performance requirement.

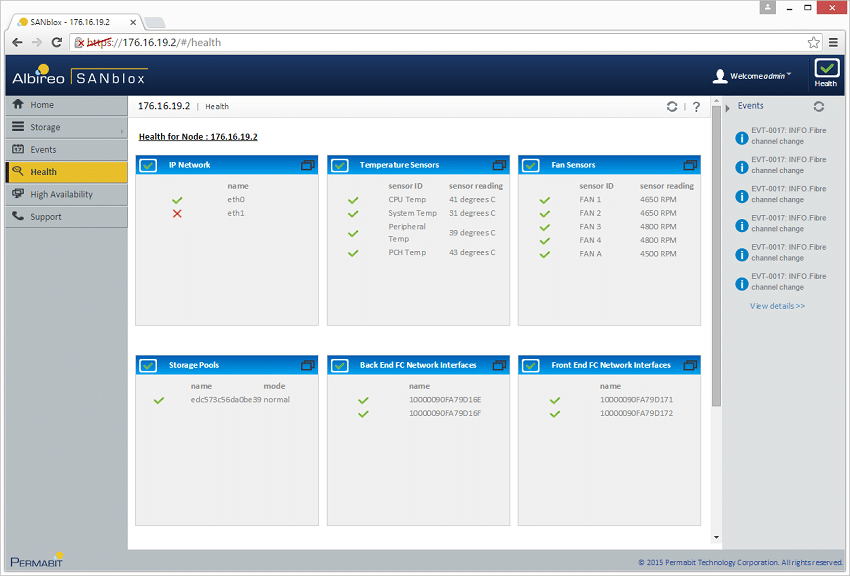

Getting the SANblox online is quick and easy. You assign the system two IP addresses: one for IPMI, and a second for the web management and SSH interface. When it comes online, you grab the WWNs for the two backend FC ports (the ones that will connect to your storage system) and use those to create a separate FC zone.

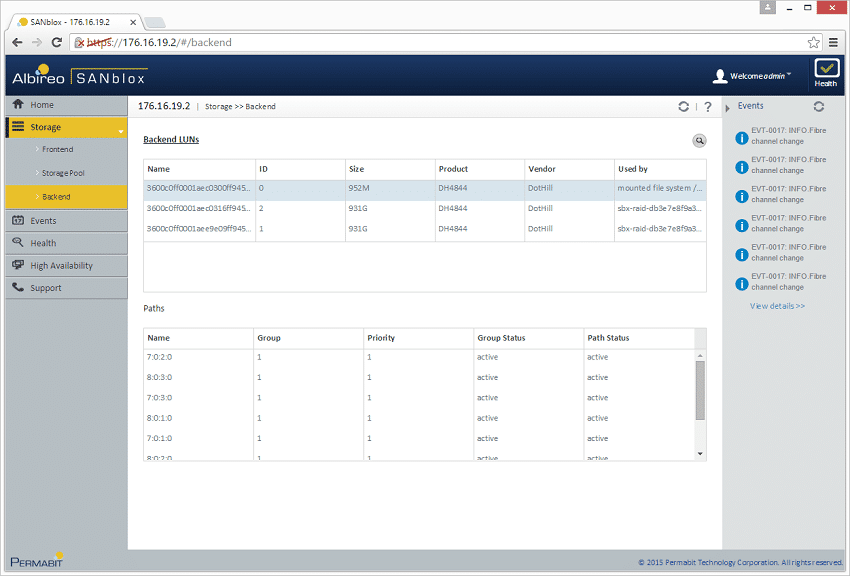

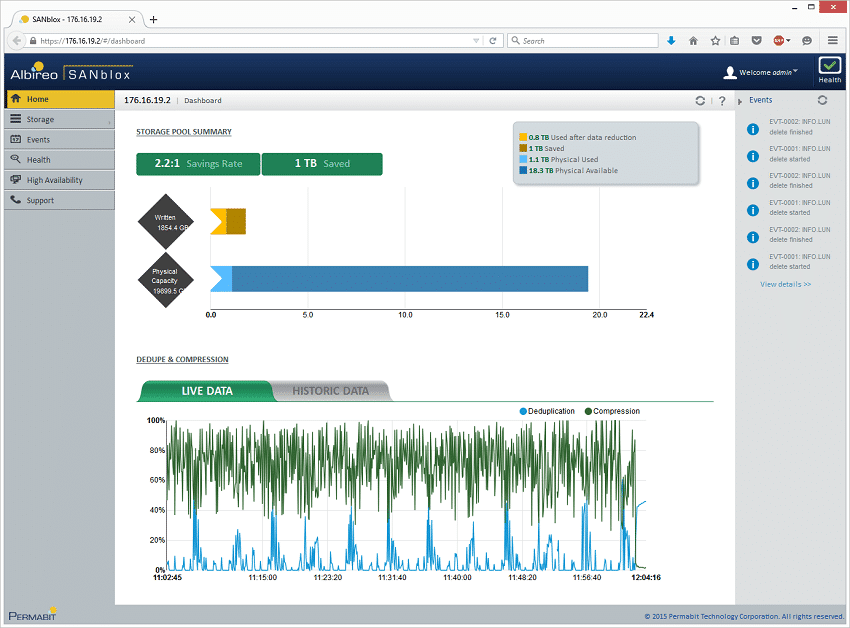

At the array level you provision your storage so that you have one 1GB LUN for device settings and multiple LUNs for your primary data storage. All of the metadata is also stored on these volumes; the SANblox does not persist any data within the appliance, which is made possible by its synchronous, inline functionality. For our screenshot examples we used our DotHill Ultra48 array, configuring one 1GB LUN for SANblox settings and two 1TB LUNs for the SANblox storage pool.

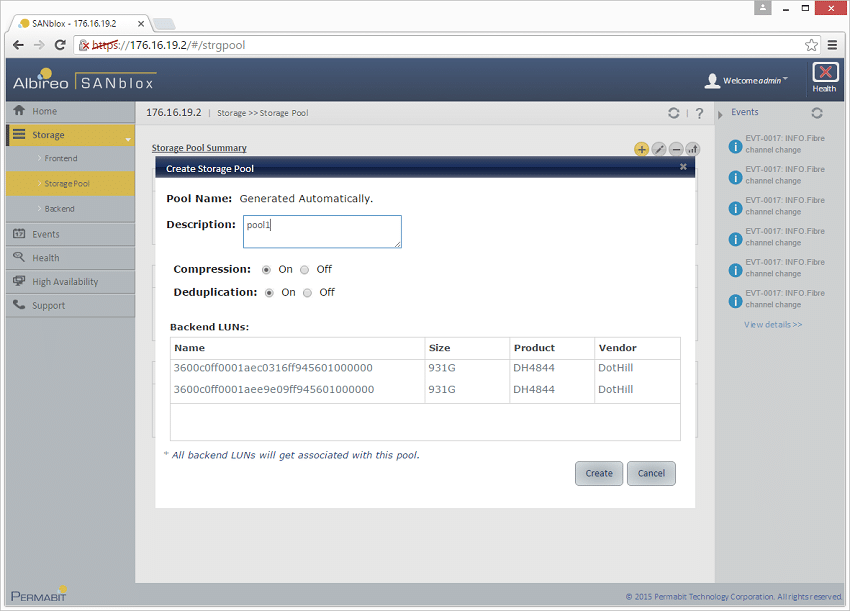

With the storage configured, the SANblox automatically detects the 1GB LUN and uses it for device settings, and treats the other LUNs as candidates for storage pool creation. In this case it groups all of them together when creating the pool and lets you select whether you want dedupe on or off, as well as compression on or off.

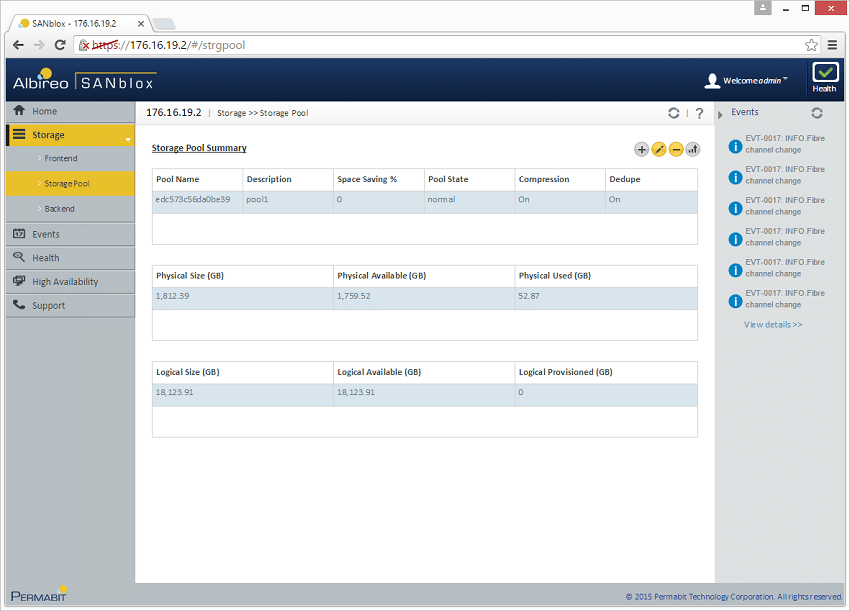

With the pool created, the Permabit SANblox by default lets users address the physical storage at a 10:1 logical-to-physical ratio, so 1TB raw becomes 10TB of usable space when creating volumes. In our case it mapped the 1.8TB of raw storage as 18TB of usable storage that we could assign.
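
The relationship between the default 10:1 addressable size and the data reduction actually achieved can be sketched as follows. The function names are hypothetical and simply express the arithmetic; they are not part of the SANblox interface.

```python
def logical_capacity_tb(raw_tb, overcommit=10):
    """Logical space exposed for a given amount of raw storage."""
    return raw_tb * overcommit


def physical_needed_tb(logical_written_tb, reduction_ratio):
    """Physical space consumed after data reduction."""
    return logical_written_tb / reduction_ratio


raw_tb = 1.8
logical_tb = logical_capacity_tb(raw_tb)
print(f"{raw_tb}TB raw -> {logical_tb:.0f}TB addressable")

# If every volume were written full, how much physical space would be needed?
for ratio in (6, 10):
    needed = physical_needed_tb(logical_tb, ratio)
    # Small tolerance so floating-point rounding does not flip the break-even case.
    status = "fits" if needed <= raw_tb + 1e-9 else "exceeds raw capacity"
    print(f"{ratio}:1 reduction needs {needed:.1f}TB physical ({status})")
```

In other words, the logical space is thinly provisioned: if the volumes were completely filled with data that only reduces 6:1, the pool would need more physical capacity than the 1.8TB behind it.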

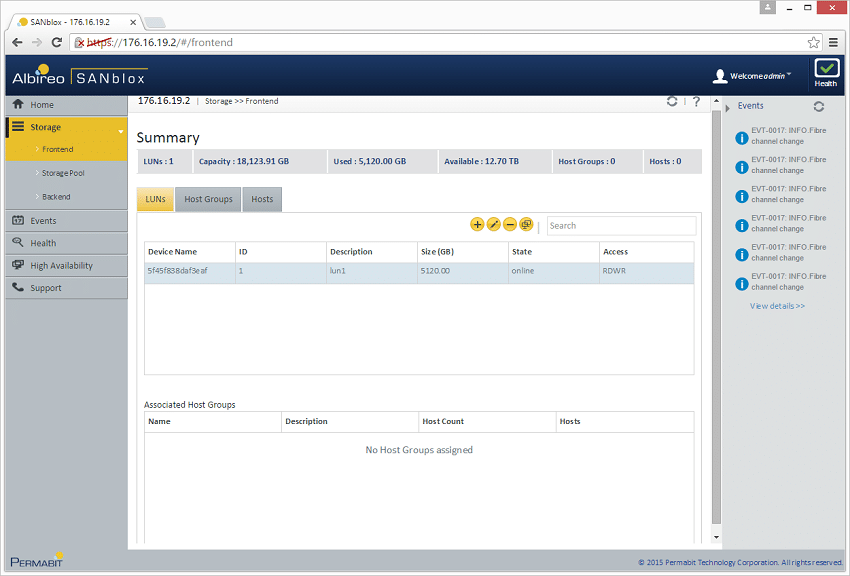

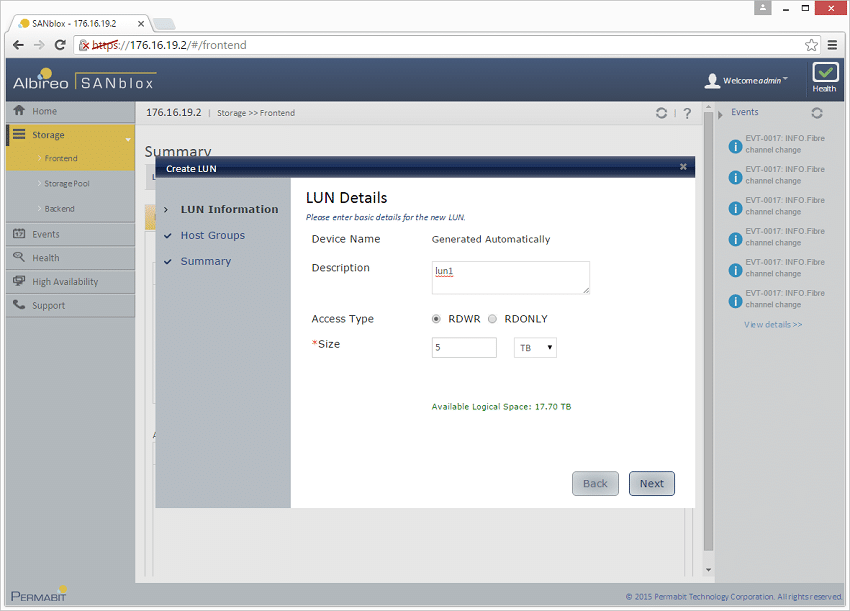

With the underlying storage sorted out, the rest of the interface works much like that of a basic storage array. You can create LUNs, assign them to hosts or host groups, and define rules such as read-only or read/write access.

Performance

Not all high-performance storage offers deduplication. The X-IO ISE G3 family of flash arrays is a good example; the recently reviewed X-IO ISE 860 is designed largely as a performance play. X-IO made the conscious decision not to layer on too many features, all of which require more RAM and CPU and diminish the ability of the array to deliver leading-edge performance. That said, there are use cases where applications must trade off performance for capacity, and with the cost of flash still relatively high on a per-TB basis, deduplication can alter the economics of performance storage dramatically enough to address the cost concern while retaining high performance characteristics. With this as the backdrop, we deployed the SANblox in front of the ISE 860 to gauge its capabilities. With the primary focus being how deduplication affected application performance, we leveraged our Microsoft SQL Server, MySQL Sysbench and VMware VMmark testing environments to stress a single SANblox appliance. Each of these tests operates with multiple simultaneous workloads hitting a given storage array at the same time, giving a data reduction system such as the Permabit SANblox a fantastic opportunity to reduce the data footprint of the deployed workload.

One important element to understand when it comes to deduplication and performance is that when you reduce your data footprint, you also increase the I/O load on your backend storage. Throughput in many cases can be reduced, since you are sending much less data than before, but small-block random I/O requests increase substantially. This is one reason data reduction and flash go so well together, but it also means that at a certain point you can and will still saturate your backend storage in certain scenarios. Luckily, the SANblox’s patented technology keeps data reduction overhead to a minimum, leaving room to scale or to use the array natively for other applications. For large environments or platforms that have a lot of I/O potential, users can scale the number of SANblox appliances for increased performance and capacity. While we were given a single appliance to review, we would most likely have seen higher measured performance with two pairs working together instead of just one.

StorageReview’s Microsoft SQL Server OLTP testing protocol employs the current draft of the Transaction Processing Performance Council’s Benchmark C (TPC-C), an online transaction processing benchmark that simulates the activities found in complex application environments. The TPC-C benchmark comes closer than synthetic performance benchmarks to gauging the performance strengths and bottlenecks of storage infrastructure in database environments.

This test uses SQL Server 2014 running on Windows Server 2012 R2 guest VMs, being stressed by Dell’s Benchmark Factory for Databases. While our traditional usage of this benchmark has been to test large 3,000-scale databases on local or shared storage, in this iteration we focus on spreading out four 1,500-scale databases evenly across our X-IO ISE 860 to better illustrate the aggregate performance inside a 4-node VMware cluster.

Second Generation SQL Server OLTP Benchmark Factory LoadGen Equipment

- Dell PowerEdge R730 VMware ESXi vSphere Virtual Client Hosts (2)

  - Four Intel E5-2690 v3 CPUs for 124GHz in cluster (Two per node, 2.6GHz, 12-cores, 30MB Cache)

  - 512GB RAM (256GB per node, 16GB x 16 DDR4, 128GB per CPU)

  - SD Card Boot (Lexar 16GB)

  - 2 x Mellanox ConnectX-3 InfiniBand Adapter (vSwitch for vMotion and VM network)

  - 2 x Emulex 16Gb dual-port FC HBA

  - 2 x Emulex 10GbE dual-port NIC

  - VMware ESXi vSphere 6.0 / Enterprise Plus 4-CPU

- Dell PowerEdge R730 Virtualized SQL 4-node Cluster

  - Eight Intel E5-2690 v3 CPUs for 249GHz in cluster (Two per node, 2.6GHz, 12-cores, 30MB Cache)

  - 1TB RAM (256GB per node, 16GB x 16 DDR4, 128GB per CPU)

  - SD Card Boot (Lexar 16GB)

  - 4 x Mellanox ConnectX-3 InfiniBand Adapter (vSwitch for vMotion and VM network)

  - 4 x Emulex 16Gb dual-port FC HBA

  - 4 x Emulex 10GbE dual-port NIC

  - VMware ESXi vSphere 6.0 / Enterprise Plus 8-CPU

Each SQL Server VM is configured with two vDisks, one 100GB for boot and one 500GB for the database and log files. From a system resource perspective, we configured each VM with 16 vCPUs, 64GB of DRAM and leveraged the LSI Logic SAS SCSI controller.

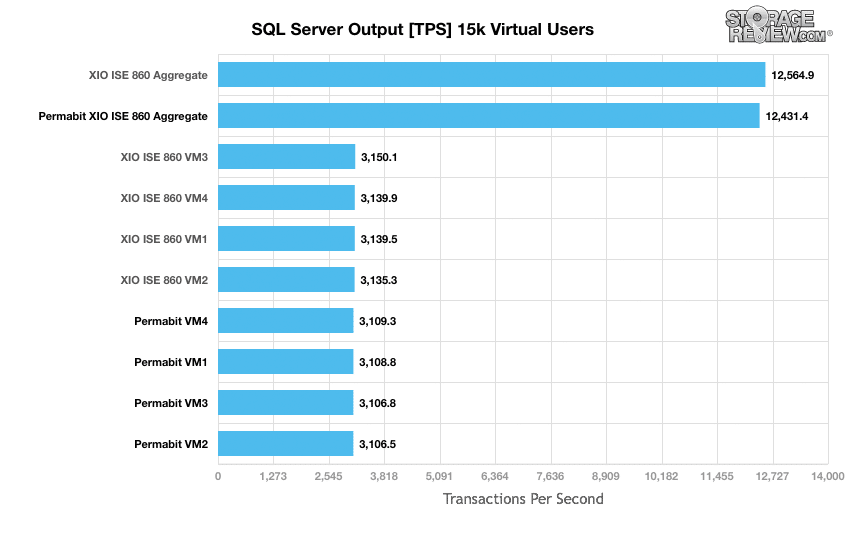

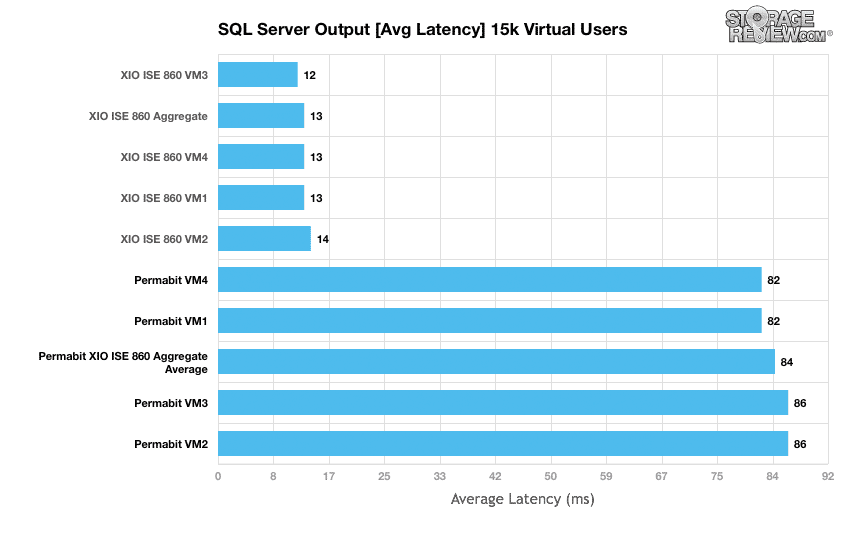

Looking at the change in TPS between running our SQL TPC-C workload directly on the X-IO ISE 860 and running it through the Permabit SANblox, the drop was fairly small, from 12,564TPS to 12,431TPS.

Changing the focus from transactional performance to latency, though, we see the impact of data reduction on our workload. With workloads operating through the SANblox, average latency increased from 13ms to 84ms, roughly a 6.5x jump. Permabit explained that we may be nearing maximum load for a single SANblox pair and that slightly reducing the workload or adding a second SANblox could reduce the latency average significantly.

The Sysbench OLTP benchmark runs on top of Percona MySQL leveraging the InnoDB storage engine operating inside a CentOS installation. To align our tests of traditional SAN with newer hyper-converged gear, we’ve shifted many of our benchmarks to a larger distributed model. The primary difference is that instead of running one single benchmark on a bare-metal server, we now run multiple instances of that benchmark in a virtualized environment. To that end, we deployed 4 and 8 Sysbench VMs on the X-IO ISE 860, 1-2 per node, and measured the total performance seen on the cluster with all operating simultaneously. We plotted how 4 and 8VMs operated on both the flash array raw, as well as through the Permabit SANblox.

Dell PowerEdge R730 Virtualized Sysbench 4-node Cluster

- Eight Intel E5-2690 v3 CPUs for 249GHz in cluster (Two per node, 2.6GHz, 12-cores, 30MB Cache)

- 1TB RAM (256GB per node, 16GB x 16 DDR4, 128GB per CPU)

- SD Card Boot (Lexar 16GB)

- 4 x Mellanox ConnectX-3 InfiniBand Adapter (vSwitch for vMotion and VM network)

- 4 x Emulex 16Gb dual-port FC HBA

- 4 x Emulex 10GbE dual-port NIC

- VMware ESXi vSphere 6.0 / Enterprise Plus 8-CPU

Each Sysbench VM is configured with three vDisks, one for boot (~92GB), one with the pre-built database (~447GB) and the third for the database that we will test (400GB). From a system resource perspective, we configured each VM with 16 vCPUs, 64GB of DRAM and leveraged the LSI Logic SAS SCSI controller.

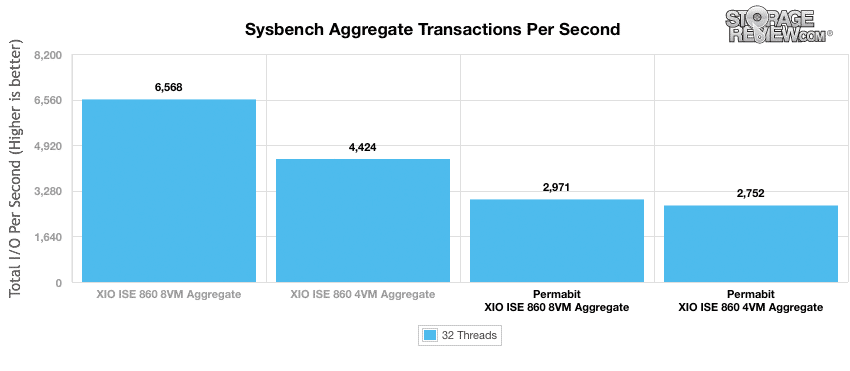

Our Sysbench test measures average TPS (Transactions Per Second), average latency, as well as average 99th percentile latency at a peak load of 32 threads.

With Sysbench running natively on the X-IO ISE 860 with an 8VM workload, we measured an aggregate of 6,568TPS across the cluster. With the SANblox added into the mix, that dropped to 2,971TPS. With a load of 4VMs, we saw less of a drop, going from 4,424TPS down to 2,752TPS. The overhead of operating through the data reduction appliance came to 55% and 38% respectively. One critical point, though, is that this overhead doesn’t affect LUNs served from the storage array directly. Because the SANblox is an external system, users can opt to route higher-priority traffic to the array itself, albeit without the cost benefits of data reduction.
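
The overhead percentages follow directly from the TPS figures. As a quick check, here is a small sketch; the helper name `overhead_pct` is ours and purely illustrative.

```python
def overhead_pct(native_tps, appliance_tps):
    """Share of native throughput lost when running through the appliance."""
    return (1 - appliance_tps / native_tps) * 100


# Aggregate Sysbench TPS: (native on the ISE 860, through the SANblox)
results = {
    "8VM": (6568, 2971),
    "4VM": (4424, 2752),
}

for name, (native, via_sanblox) in results.items():
    print(f"{name}: {overhead_pct(native, via_sanblox):.0f}% overhead")
```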

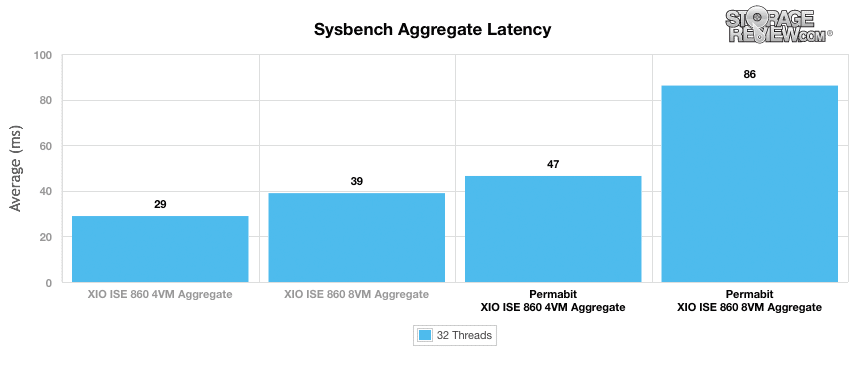

Comparing average latency between our configurations, we saw 4VM average latency increase from 29 to 47ms, while 8VM average latency picked up from 39 to 86ms.

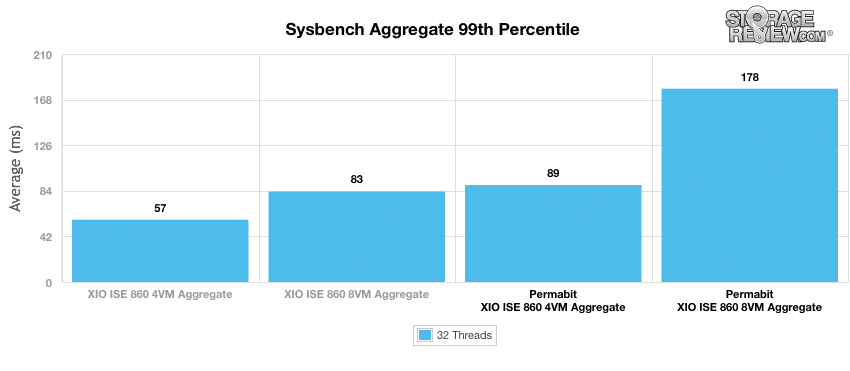

Looking at 99th percentile latency with the SANblox added into our environment, we measured an increase from 57 to 89ms with 4VMs and 83 to 178ms with 8VMs.

VMmark Performance Analysis

As with all of our Application Performance Analysis we attempt to show how products perform in a live production environment compared to the company’s claims on performance. We understand the importance of evaluating storage as a component of larger systems, most importantly how responsive storage is when interacting with key enterprise applications. In this test we use the VMmark virtualization benchmark by VMware in a multi-server environment.

VMmark by its very design is a highly resource intensive benchmark, with a broad mix of VM-based application workloads stressing storage, network and compute activity. When it comes to testing virtualization performance, there is almost no better benchmark for it, since VMmark looks at so many facets, covering storage I/O, CPU, and even network performance in VMware environments.

Dell PowerEdge R730 VMware VMmark 4-node Cluster Specifications

- Dell PowerEdge R730 Servers (x4)

- CPUs: Eight Intel Xeon E5-2690 v3 2.6GHz (12C/24T)

- Memory: 64 x 16GB DDR4 RDIMM

- Emulex LightPulse LPe16002B 16Gb FC Dual-Port HBA

- Emulex OneConnect OCe14102-NX 10Gb Ethernet Dual-Port NIC

- VMware ESXi 6.0

ISE 860 G3 (20×1.6TB SSDs per DataPac)

- Before RAID: 51.2TB

- RAID 10 Capacity: 22.9TB

- RAID 5 Capacity: 36.6TB

- List Price: $575,000

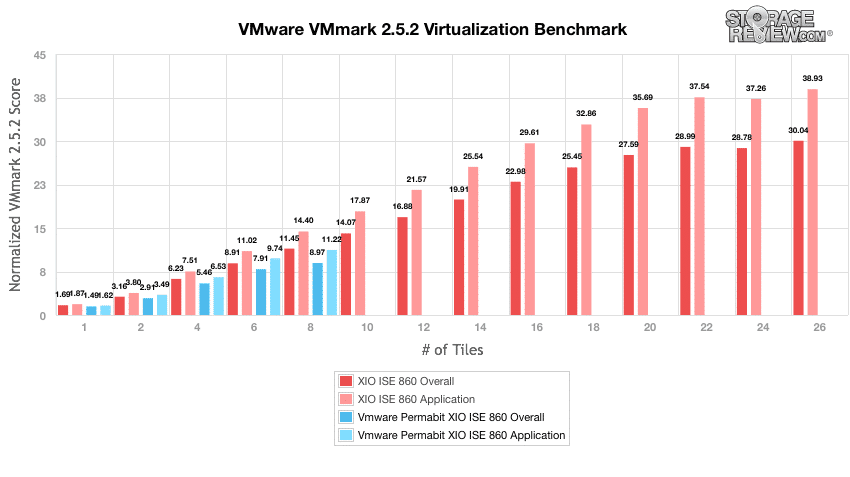

When configuring the Permabit SANblox for testing with VMware’s VMmark, we optimized the way data was distributed. Traditionally with a given array, VMs are deployed in an “all or nothing” configuration, meaning that all data is moved onto the storage array being tested. Because the SANblox sits in front of the storage device, we were able to use the storage directly for some write-intensive workloads and route the majority of the OS disks and VMmark workloads, where deduplication savings were greatest, through the SANblox. In our specific configuration we migrated all VMs onto the SANblox, with the exception of the individual 40GB Mailserver mailbox vDisks, which we positioned on the X-IO ISE 860 directly.

With our optimized configuration, we were able to reach a total of 8 tiles with VMmark using the Permabit SANblox in front of the X-IO ISE 860. This compares to a peak of 26 tiles we previously measured when hosted directly on the array. From a performance standpoint, running our workload through the SANblox had an overhead of roughly 70%. In terms of data reduction, though, the space consumed stayed flat at roughly the footprint of a single tile; migrating additional tiles onto the array had no appreciable impact on space consumed. This is one scenario where having a second HA pair of SANblox appliances would improve overall performance.

Conclusion

The Permabit Albireo SANblox is an easy-to-deploy appliance that offers tremendous benefit by vastly reducing an organization’s data footprint. Permabit states that the Albireo SANblox can be dropped in front of any Fibre Channel SAN and that customers can see up to a 6:1 reduction in data footprint. All of the data reduction happens inline, with the SAN unaware that the SANblox exists. Along with the typical 6:1 data reduction, the SANblox also offers thin provisioning and compression. Permabit is a longstanding and widely respected name in deduplication and can help customers see potentially huge footprint reductions depending on the workload.

On its face, deduplication sounds wonderful. Organizations can take full advantage of their purchased storage instead of letting it fill up with duplicates, and even older disk-based storage can find new life. The fact that the Permabit Albireo SANblox works regardless of the storage configuration behind it is another strong reason to consider it. The biggest drawback to deduplication is that performance must take a hit, and in some instances that hit can be pretty significant. Instead of viewing this as a deal breaker, potential customers should realize that while performance takes a hit compared to raw all-flash, it is still faster than traditional HDD storage arrays in a similar price bracket.

If ultra-high performance and extremely low latency are needed more than full utilization of the storage investment, then an organization should skip deduplication. However, if an enterprise can take the performance hit and still function within its defined parameters, then by all means it should look into a device such as the Permabit Albireo SANblox. There is a compromise as well: a third option would be to run the less performance-critical data (such as development) through SANblox, while letting production data go straight to the array with no deduplication. A similar line of thinking needs to go into how one looks at our performance results. The comparison is less “look how much better the X-IO performs without the SANblox” and more a way to present the type of performance one could expect when applying deduplication to a SAN.

As noted, whether to add the appliance to a storage stack depends on a number of variables. Ultimately what Permabit offers is an extension of storage capacity and longevity, especially where the workloads don’t have a high performance need. In today’s IT environment, where tasks like frequently spinning up databases for development are becoming standard practice, the SANblox enables this with no data footprint penalty. Integration into the enterprise is simple as well, and should tuning and customization be required, the appliance allows for it.

Pros

- Simple integration into storage architecture

- Aligns with modern development practices

- Can be turned on/off by LUN

Cons

- Deduplication has overhead; latency-sensitive applications may need to bypass the appliance

Bottom Line

Permabit Albireo SANblox integrates easily into existing systems and performs inline data reduction, enabling organizations to utilize the full potential of their storage investments. The data reduction can be turned on or off, or applied to only certain workloads, in order to maximize both performance and capacity.
