In computer storage, a cache is a physical component placed “in front” of a primary device that transparently stores copies of data so that it can be accessed quickly later on, since reading from the cache is usually faster than reading from the data’s primary location.

With caching software, specific data is automatically selected and stored in the cache during transfers to and from the primary device. When an application accesses that data again, it is served from the cache noticeably faster. Usually, the most frequently used data (so-called “hot data”) is chosen for caching, because that is where the benefit is greatest: it reduces latency and increases IOPS for read-intensive workloads, which boosts overall system performance.
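To make the idea of keeping hot data close at hand concrete, here is a minimal sketch of a read cache in Python. It assumes a least-recently-used (LRU) eviction policy, which is only one possible way to pick what stays cached; real caching software typically uses more elaborate heuristics. The names `ReadCache` and `primary` are hypothetical and exist only for this illustration, and a plain dictionary stands in for the slow primary device.

```python
from collections import OrderedDict


class ReadCache:
    """Tiny LRU read cache in front of a slower backing store (illustrative only)."""

    def __init__(self, backing_store, capacity):
        self.backing_store = backing_store  # stands in for the primary device
        self.capacity = capacity            # number of blocks the cache can hold
        self.blocks = OrderedDict()         # block id -> data, least recently used first
        self.hits = 0
        self.misses = 0

    def read(self, block_id):
        if block_id in self.blocks:
            # Fast path: the block is already cached.
            self.hits += 1
            self.blocks.move_to_end(block_id)  # mark as most recently used
            return self.blocks[block_id]
        # Slow path: fetch from the primary device, then keep a copy.
        self.misses += 1
        data = self.backing_store[block_id]
        self.blocks[block_id] = data
        if len(self.blocks) > self.capacity:
            self.blocks.popitem(last=False)    # evict the least recently used block
        return data


# A read-heavy access pattern in which blocks 1 and 2 are "hot".
primary = {i: f"block-{i}" for i in range(100)}
cache = ReadCache(primary, capacity=4)
for block_id in [1, 2, 1, 1, 3, 2, 1, 50, 1, 2]:
    cache.read(block_id)

print(cache.hits, cache.misses)  # prints "6 4"
```

In this toy run, six of the ten reads never touch the primary device. That is the effect a cache drive aims for at much larger scale: the more often the same blocks are read, the larger the share of requests served at cache speed.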

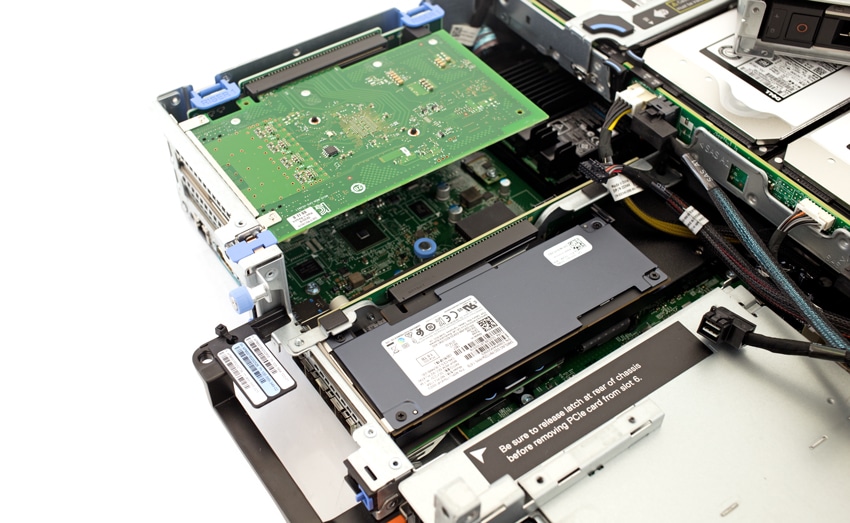

Because of their superior read and write speeds, SSDs are by far the most popular devices for caching. Systems such as Network Attached Storage (NAS) solutions sometimes have a slot for a cache drive because of how often data on these servers is accessed. Storage arrays also commonly ship in hybrid configurations, with HDDs used for capacity and SSDs used for acceleration. Hybrids can be all-flash as well, with lower-cost flash used as the capacity pool and higher-performing flash used as a cache.
