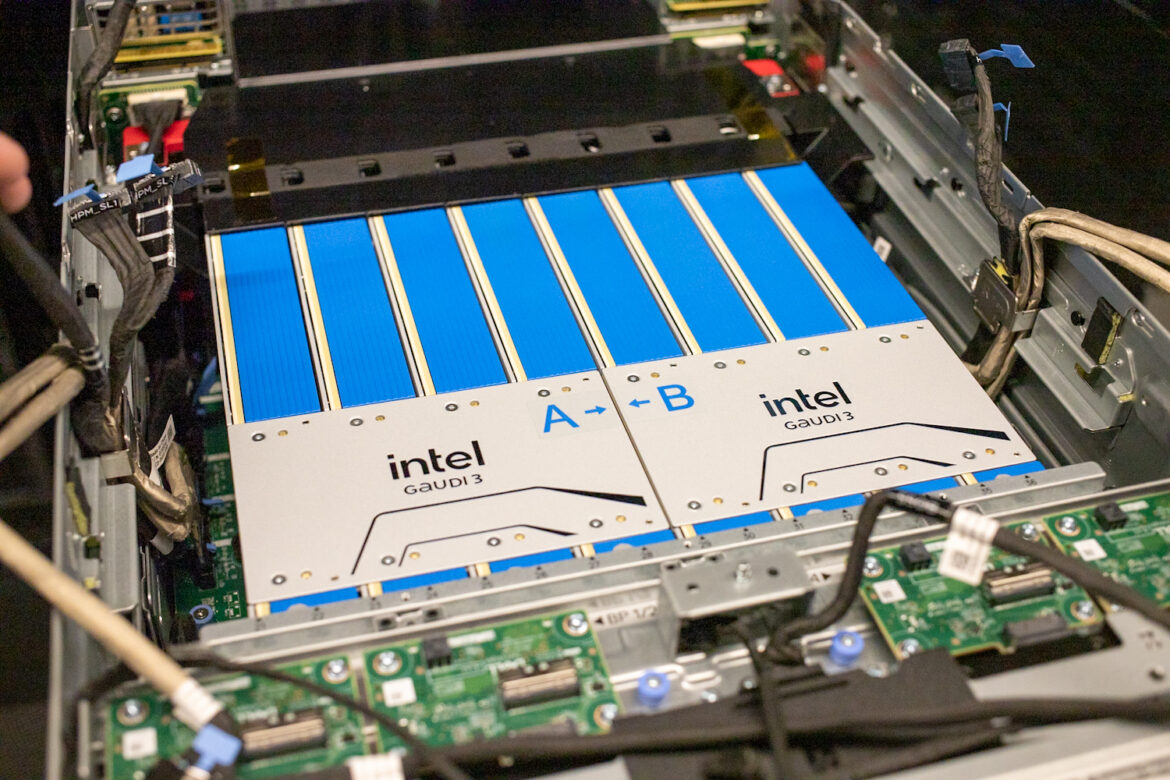

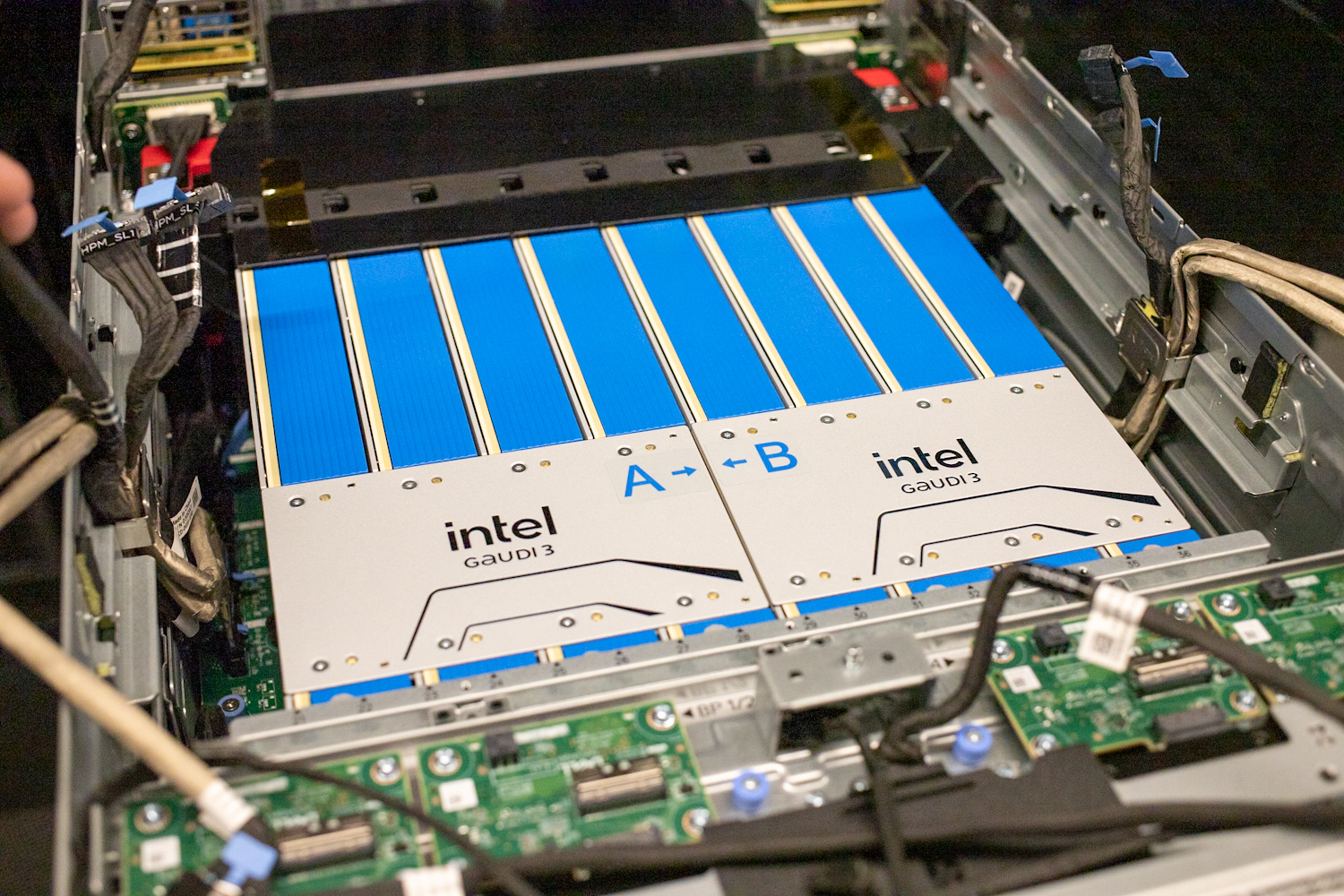

Dell PowerEdge XE7740 is now available with up to eight Intel Gaudi 3 PCIe AI accelerators.

Dell Technologies has announced general availability of the PowerEdge XE7740, making it the first vendor to deliver an integrated server featuring the newly released Intel Gaudi 3 PCIe AI accelerator. Positioned as a 4U powerhouse, the XE7740 combines the ease of integration of Dell’s R-Series with the high-density scalability of the XE platform and is purpose-built to accelerate enterprise AI adoption.

This launch signals Dell’s strategy to define the next generation of AI infrastructure. By pairing the performance of Gaudi 3 with Dell’s proven server architecture, the XE7740 is designed to bring price-to-performance leadership, enterprise reliability, and configurability to organizations increasingly tasked with operationalizing AI.

Flexible, High-Performance AI Infrastructure

The PowerEdge XE7740 supports up to 16 single-width or eight 600 W double-wide PCIe add-in cards, with flexible configuration options tailored to diverse workloads. For enterprises running large-scale AI training or high-throughput inference, the system can be populated with up to eight Intel Gaudi 3 PCIe accelerators. For even more demanding scenarios, two groups of four accelerators can be bridged together using RoCE v2. This bridging enables peer-to-peer connections between accelerators, a critical factor for large models with higher parameter counts and larger memory footprints.

Networking flexibility is equally important. Dell provides a 1:1 accelerator-to-NIC ratio, supported by eight full-height PCIe slots and an integrated OCP module, which suits distributed inference deployments. This design keeps a compute-rich server from becoming I/O-constrained while making efficient use of PCIe lanes. Operating within a 10 kW per-rack power envelope, the XE7740 brings high-density AI compute to the majority of today’s enterprise data centers without requiring wholesale infrastructure retrofits.
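The power figures above lend themselves to a quick back-of-the-envelope check. The Python sketch below multiplies the accelerator count by the 600 W card rating cited above and subtracts the total from a 10 kW rack budget. It is an illustration, not a Dell sizing tool: `platform_watts` (CPUs, memory, fans, NICs, and power-supply losses) is a hypothetical placeholder you would replace with measured or vendor-published figures, and the 2,000 W used in the example is an assumed value, not a specification.

```python
# Rough power-budget sketch for an XE7740-style server.
# Only the 600 W card rating, the eight-card maximum, and the 10 kW rack
# envelope come from the article; everything else is a caller-supplied
# assumption.

ACCELERATOR_WATTS = 600        # double-wide add-in card rating cited above
ACCELERATORS_PER_SERVER = 8    # maximum Gaudi 3 PCIe cards per server
RACK_BUDGET_WATTS = 10_000     # 10 kW per-rack envelope cited above


def accelerator_watts(count: int = ACCELERATORS_PER_SERVER,
                      watts_each: int = ACCELERATOR_WATTS) -> int:
    """Total add-in-card power for `count` accelerators."""
    return count * watts_each


def rack_headroom(platform_watts: int,
                  servers: int = 1,
                  budget: int = RACK_BUDGET_WATTS) -> int:
    """Watts left in the rack budget after `servers` fully populated servers.

    `platform_watts` is a hypothetical per-server figure for everything other
    than the accelerators; a negative result means the budget is exceeded.
    """
    per_server = accelerator_watts() + platform_watts
    return budget - servers * per_server


if __name__ == "__main__":
    print(accelerator_watts())                  # 4800
    print(rack_headroom(platform_watts=2000))   # 3200
```

With these assumed numbers, one fully populated server leaves about 3.2 kW of headroom, while a second identical server would overshoot the 10 kW envelope; real planning should use measured draw rather than nameplate ratings.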

Seamless Integration Into Enterprise Environments

Cooling and integration are often stumbling blocks for the rollout of AI at scale. Dell has approached this with its Smart Cooling technology, enabling the XE7740 to run heavy AI jobs in standard air-cooled racks rather than mandating costly upgrades to liquid or immersion cooling solutions.

The XE7740 is optimized for real-world AI adoption, supporting today’s most widely adopted large language models and foundation models, including Llama 3/4, DeepSeek, Phi-4, Qwen3, Falcon3, and more. Enterprises can expect smooth integration with PyTorch, Hugging Face, and other popular frameworks.

Enterprise AI Use Cases

The XE7740 is designed for real applications in production AI environments, spanning industries where high-performance inferencing and adaptation are business-critical, including:

- Financial services: Fraud detection, real-time risk modeling, and algorithmic trading workloads that demand low-latency inference on secure, on-premises compute.

- Healthcare & life sciences: From genome sequencing pipelines to diagnostic imaging, efficiency and compliance drive model deployment in constrained environments.

- Retail/e-commerce: The XE7740 delivers the horsepower for personalization engines and recommendation systems, balancing cost, responsiveness, and secure data handling.

- Manufacturing & telecom: Predictive maintenance, defect detection, and network optimization benefit from scalable edge-to-core AI compute.

- Multimodal applications: Speech recognition, image analysis, and multimodal LLMs are supported with seamless accelerator bridging across Gaudi 3 stacks.

Making AI More Accessible

Enterprises often cite cost unpredictability and infrastructure limitations as primary barriers to the adoption of AI. The XE7740 removes those obstacles by providing:

- Cost efficiency: Better price-to-performance than GPU-centric alternatives, reducing total cost of ownership.

- Scalability: Modular infrastructure that allows organizations to start small and scale up incrementally as workloads evolve.

- Compatibility: Engineered for existing data center environments, avoiding the expense of new power and cooling topologies.

Additionally, by keeping workloads on-premises, enterprises sidestep high cloud utilization charges and egress fees, a growing pain point for organizations constrained by the economics of cloud AI.

Preparing Enterprises for the Next Era of AI

The PowerEdge XE7740 reflects Dell’s strategy and commitment to future-proof enterprise AI infrastructure. Businesses deploying this platform are investing in a system that supports today’s fine-tuning, multimodal inference, and data-intensive analytics, while also preparing for the rapid evolution of foundation models and generative AI.

By delivering unmatched flexibility, an ecosystem-ready design, and broad accelerator support, Dell positions the XE7740 as a cornerstone for enterprise AI innovation. Organizations adopting this platform are equipped not just for today’s workloads, but also for whatever tomorrow’s AI landscape holds.

The Dell PowerEdge XE7740, powered by Intel Gaudi 3 PCIe accelerators, offers a balanced combination of flexibility, efficiency, and enterprise-grade reliability, enabling organizations to operationalize AI at scale while keeping costs predictable and infrastructure demands practical.

Engage with StorageReview

Newsletter | YouTube | Podcast iTunes/Spotify | Instagram | Twitter | TikTok | RSS Feed