Dell adds significant updates to the AI Data Platform, designed to help customers turn distributed, siloed data into fast, repeatable AI outcomes.

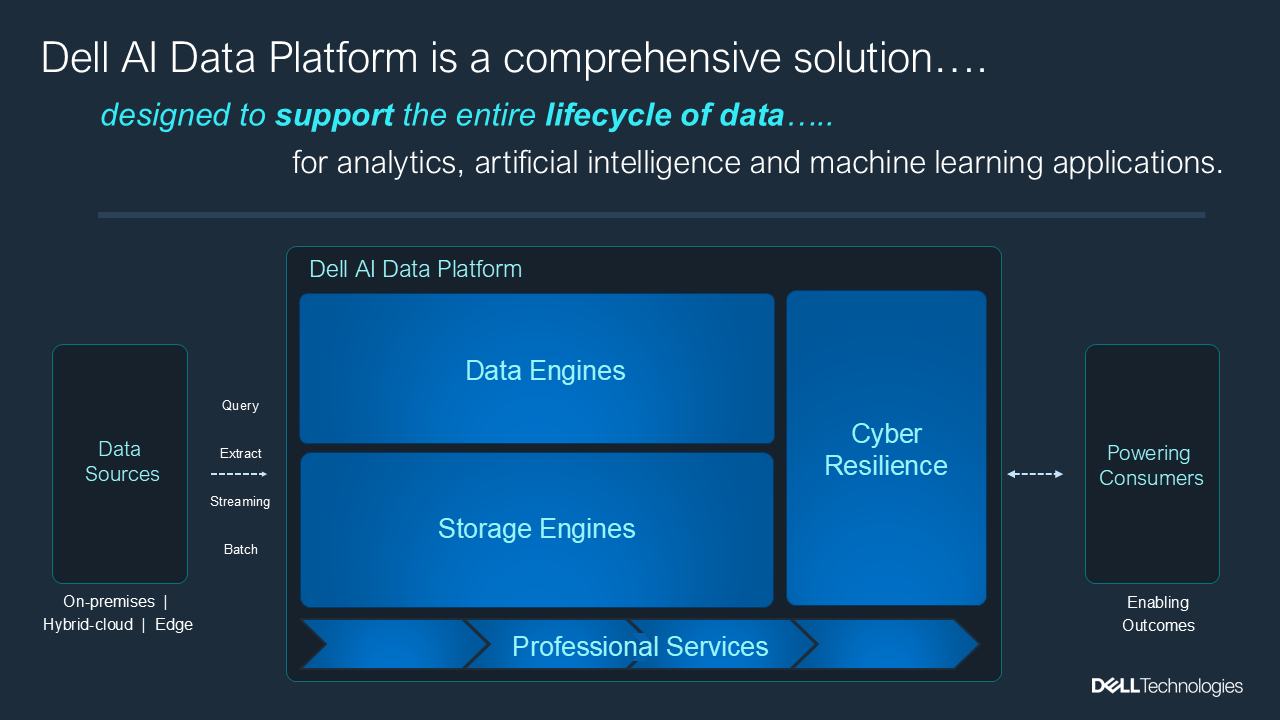

Dell Technologies has announced a significant update to its AI Data Platform, part of the broader Dell AI Factory initiative. The improvements are designed to help organizations turn distributed, siloed data into fast, repeatable AI outcomes. As enterprise data volumes continue to grow with AI adoption, businesses need architectures that bring together security, flexibility, and scalable performance. Dell’s approach centers on creating a unified, open, and modular foundation that bridges isolated data silos while enabling advanced workloads such as training, fine-tuning, retrieval-augmented generation (RAG), and inference.

Unlocking the Value of Siloed Data for AI Workloads

The Dell AI Data Platform’s architecture decouples data storage from processing, removing performance and management bottlenecks. This separation allows different teams, such as data scientists, DevOps engineers, and IT administrators, to use the same data platforms across multiple AI workflows without re-architecting infrastructure each time. The platform also integrates directly with the NVIDIA AI Data Platform reference design, providing organizations with optimized interoperability and acceleration across Dell and NVIDIA technologies.

Dell structures the platform around four key pillars: storage engines for data placement and movement, data engines for real-time insights, built-in cyber resilience, and intelligent data management services. Together, these components create a flexible, scalable, and secure foundation for organizations looking to operationalize AI at scale.

High-Performance Storage Engines for Modern AI Pipelines

The backbone of the Dell AI Data Platform is built on the PowerScale and ObjectScale storage families. Both have been enhanced to deliver faster data throughput, improved security, and support for multiprotocol access, capabilities critical for training, fine-tuning, inference, and RAG pipelines where latency and data locality directly impact model accuracy and iteration speed.

Dell PowerScale combines NAS-like simplicity with the massive parallelism required by modern AI architectures. Recent updates integrate tightly with new NVIDIA platforms, including GB200 and GB300 NVL72, and deliver seamless compatibility with existing application stacks. This alignment ensures that enterprises running GPU-intensive workloads can scale linearly while maintaining performance consistency. The PowerScale F710, which earned NVIDIA Cloud Partner certification, exemplifies this by supporting over 16,000 GPUs in a single system while consuming up to five times less rack space, substantially improving data center density and resource efficiency.

Complementing PowerScale, Dell ObjectScale delivers S3-compatible, high-performance object storage built for large-scale AI data workloads. Customers can deploy it as a purpose-built appliance or as a software-defined stack on Dell PowerEdge servers. Performance benchmarking shows ObjectScale now operates up to eight times faster than prior-generation all-flash configurations, a direct boost for data-hungry AI training and analytics workflows.

Dell is also introducing S3 over RDMA in an upcoming tech preview, with significant performance gains: up to 230% higher throughput, 80% lower latency, and 98% lower CPU usage versus traditional S3. These improvements help reduce data movement overhead, freeing system resources for GPU processing and inference. In addition, enhanced AWS S3 integration, bucket-level compression, and small-object performance tuning offer up to 19% higher throughput and 18% lower latency for 10KB object operations, which matters in the high-churn, small-file environments common in AI and edge applications.
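To put those percentages in perspective, the short sketch below converts "X% higher" and "X% lower" figures into multipliers relative to a baseline. The helper names are ours, chosen for illustration. The inputs are the "up to" figures quoted above, not measurements we have reproduced.

```python
def higher_by(percent: float) -> float:
    """Multiplier for 'X% higher': 230% higher means 3.3x the baseline."""
    return (100 + percent) / 100


def lower_by(percent: float) -> float:
    """Fraction of the baseline that remains after an 'X% lower' change."""
    return (100 - percent) / 100


# Vendor-quoted "up to" figures for S3 over RDMA versus traditional S3.
rdma_throughput_x = higher_by(230)   # throughput as a multiple of baseline
rdma_latency_frac = lower_by(80)     # latency as a fraction of baseline
rdma_cpu_frac = lower_by(98)         # CPU usage as a fraction of baseline

# Vendor-quoted "up to" figures for 10KB object operations.
small_obj_throughput_x = higher_by(19)
small_obj_latency_frac = lower_by(18)

print(f"S3 over RDMA: {rdma_throughput_x:.1f}x throughput, "
      f"{rdma_latency_frac:.0%} of baseline latency, "
      f"{rdma_cpu_frac:.0%} of baseline CPU")
```

Read this way, "230% higher throughput" is 3.3 times the baseline rather than 2.3 times, and "98% lower CPU usage" means the same transfer would need roughly one fiftieth of the CPU time.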

Intelligent Data Engines for Real-Time AI Activation

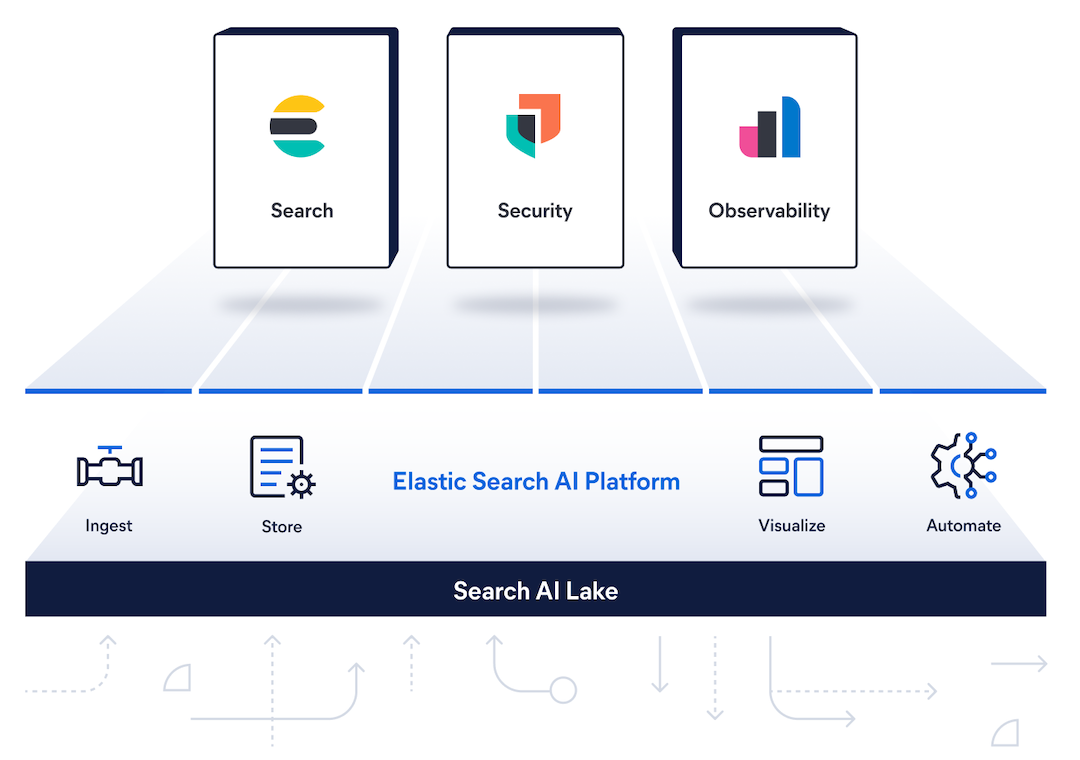

The Dell AI Data Platform’s data engines are designed to make raw data immediately usable for AI, analytics, and decision-making. Developed in collaboration with partners including NVIDIA, Elastic, and Starburst, these engines integrate data search, analytics, and semantic understanding into a unified operational layer.

The new Data Search Engine, powered by Elastic, provides natural language search across enterprise data sources. Users can query datasets conversationally, which suits RAG, semantic search, and generative AI use cases. The engine integrates with MetadataIQ to enrich file-level awareness, indexing billions of files on PowerScale and ObjectScale through metadata tagging and discovery. This capability lets developers build RAG applications with tools like LangChain that ingest only new or changed data, minimizing indexing costs and keeping vector databases accurate.
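The idea of re-indexing only new or changed data can be illustrated with a minimal sketch. It does not use MetadataIQ, Elastic, or LangChain APIs. Instead, it assumes a hypothetical store of per-file content fingerprints and compares it against the current file contents to decide which files need re-embedding and which should be dropped from the vector store. The function and variable names are illustrative only.

```python
import hashlib


def fingerprint(content: bytes) -> str:
    """Stable content fingerprint used to detect changes."""
    return hashlib.sha256(content).hexdigest()


def plan_reindex(indexed: dict[str, str], current: dict[str, bytes]):
    """Compare stored fingerprints with current file contents.

    indexed: path -> fingerprint recorded at the last indexing run
    current: path -> file content as it exists now
    Returns (to_embed, to_delete, new_state).
    """
    new_state = {path: fingerprint(data) for path, data in current.items()}
    # New or modified files: fingerprint is missing or differs.
    to_embed = sorted(p for p, h in new_state.items() if indexed.get(p) != h)
    # Files that disappeared should be removed from the vector store.
    to_delete = sorted(p for p in indexed if p not in new_state)
    return to_embed, to_delete, new_state


# Hypothetical example: one unchanged file, one edited, one new, one removed.
previous = {
    "docs/a.txt": fingerprint(b"alpha"),
    "docs/b.txt": fingerprint(b"beta"),
    "docs/old.txt": fingerprint(b"obsolete"),
}
now = {
    "docs/a.txt": b"alpha",
    "docs/b.txt": b"beta v2",
    "docs/c.txt": b"gamma",
}
to_embed, to_delete, state = plan_reindex(previous, now)
print(to_embed)   # files to re-embed
print(to_delete)  # files to drop from the index
```

In this example only `docs/b.txt` and `docs/c.txt` would be re-embedded, which is where the indexing cost savings come from. A production system would more likely detect changes from file metadata than by hashing full contents.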

The Data Analytics Engine, created with Starburst, extends this by enabling federated queries across spreadsheets, databases, cloud warehouses, and lakehouses, all without data migration. The new Agentic Layer leverages large language models (LLMs) to automate core data management tasks, including documentation, insight generation, and SQL augmentation. By embedding AI directly into database workflows, organizations can move from raw data ingestion to actionable outputs faster.
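For readers unfamiliar with federated queries, the sketch below shows the general pattern: a single SQL statement joins tables that live in separate data sources, and nothing is copied between them first. It uses Python's built-in `sqlite3` module with two independent in-memory databases as stand-ins. This is an analogy only, not the Starburst engine or its connector syntax, and the table and column names are made up.

```python
import sqlite3


def revenue_by_customer() -> list[tuple[str, float]]:
    # "main" stands in for one source, e.g. an operational database.
    conn = sqlite3.connect(":memory:")
    # A second, independent database stands in for a separate warehouse.
    conn.execute("ATTACH DATABASE ':memory:' AS warehouse")

    conn.execute("CREATE TABLE main.customers (id INTEGER PRIMARY KEY, name TEXT)")
    conn.execute("CREATE TABLE warehouse.orders (customer_id INTEGER, total REAL)")
    conn.executemany("INSERT INTO main.customers VALUES (?, ?)",
                     [(1, "Acme"), (2, "Globex")])
    conn.executemany("INSERT INTO warehouse.orders VALUES (?, ?)",
                     [(1, 120.0), (1, 80.0), (2, 50.0)])

    # One query spans both sources; neither table is migrated first.
    rows = conn.execute(
        """
        SELECT c.name, SUM(o.total) AS revenue
        FROM main.customers AS c
        JOIN warehouse.orders AS o ON o.customer_id = c.id
        GROUP BY c.name
        ORDER BY revenue DESC
        """
    ).fetchall()
    conn.close()
    return rows


print(revenue_by_customer())
```

A real federated engine does the same thing across heterogeneous systems, and it typically pushes filters down to each source so that only the needed rows cross the network.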

An essential addition is the MCP Server for the Data Analytics Engine, which introduces multi-agent coordination and native support for AI application development. Teams can operationalize generative AI and data analytics workflows together while maintaining model governance and lineage tracking, both of which are key for regulated or large-scale enterprise environments. Additionally, unified vector store access allows seamless RAG and AI search functions across Iceberg, Dell’s Data Search Engine, PostgreSQL with pgvector, and other repositories.

GPU-Accelerated Vector Search with Dell and NVIDIA

Dell’s integration of the AI Data Platform with NVIDIA cuVS brings GPU acceleration to enterprise vector search. This advancement allows organizations to perform hybrid keyword and vector-based searches with exceptional performance and scalability. Using GPU acceleration, queries run faster, similarity scoring improves, and large vector stores can be processed in real time, all within a secure, on-premises environment that meets enterprise governance standards.
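As a rough illustration of what hybrid keyword and vector search means, the sketch below blends a simple keyword-overlap score with cosine similarity over embeddings. It is a CPU-only, brute-force toy in plain Python and does not use cuVS or any Dell API. GPU-accelerated libraries replace the brute-force loop with approximate nearest-neighbor indexes. The scoring formula, the `alpha` weight, and the sample documents are assumptions made for illustration.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def keyword_score(query: str, text: str) -> float:
    """Fraction of query terms that appear in the document text."""
    terms = set(query.lower().split())
    words = set(text.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0


def hybrid_search(query, query_vec, docs, alpha=0.5, k=3):
    """Rank docs by alpha * keyword score + (1 - alpha) * vector similarity.

    docs is a list of (doc_id, text, embedding) tuples.
    """
    scored = []
    for doc_id, text, vec in docs:
        score = alpha * keyword_score(query, text) + (1 - alpha) * cosine(query_vec, vec)
        scored.append((score, doc_id))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc_id for _, doc_id in scored[:k]]


# Toy corpus with two-dimensional "embeddings" for readability.
corpus = [
    ("a", "gpu vector search", [1.0, 0.0]),
    ("b", "storage backup policy", [0.0, 1.0]),
    ("c", "gpu cluster storage", [0.7, 0.7]),
]
print(hybrid_search("gpu search", [1.0, 0.0], corpus, k=2))
```

Setting `alpha` closer to 1 favors exact term matches, while values closer to 0 favor semantic similarity. Tuning that balance is the main design choice in any hybrid ranking scheme.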

This GPU-accelerated hybrid search bridges Dell’s storage and data engines, closing the gap between storage infrastructure and AI inference. With cuVS and Dell’s managed infrastructure, IT teams gain a turnkey, high-performance search solution optimized for both speed and security, capable of supporting the newest enterprise-scale LLMs and multimodal workloads.

The Bigger Picture: A Unified Platform for Enterprise AI

The expanded Dell AI Data Platform underscores Dell Technologies’ ongoing shift toward AI-native infrastructure. By aligning its servers, storage, and data engines through open collaboration with partners like NVIDIA, Elastic, and Starburst, Dell is positioning itself not just as a storage vendor but as a full-stack solutions provider for modern AI and analytics.
