NVIDIA is expanding its professional GPU lineup with new hardware aimed at both large-scale data centers and compact workstations. At SIGGRAPH 2025, the company unveiled the RTX PRO 6000 Blackwell Server Edition for enterprise servers, alongside two new desktop GPUs: the RTX PRO 4000 Small Form Factor (SFF) Edition and the RTX PRO 2000 Blackwell. The announcements highlight a push to deliver Blackwell architecture performance across a wide range of professional workloads, from AI model training and industrial simulation to engineering design and media production. Alongside the hardware, NVIDIA and its partners are emphasizing integrated AI software platforms that enable these GPUs to accelerate everything from generative AI to robotics and physical AI applications.

NVIDIA RTX PRO 6000 Blackwell Server Edition: New Power for the Data Center

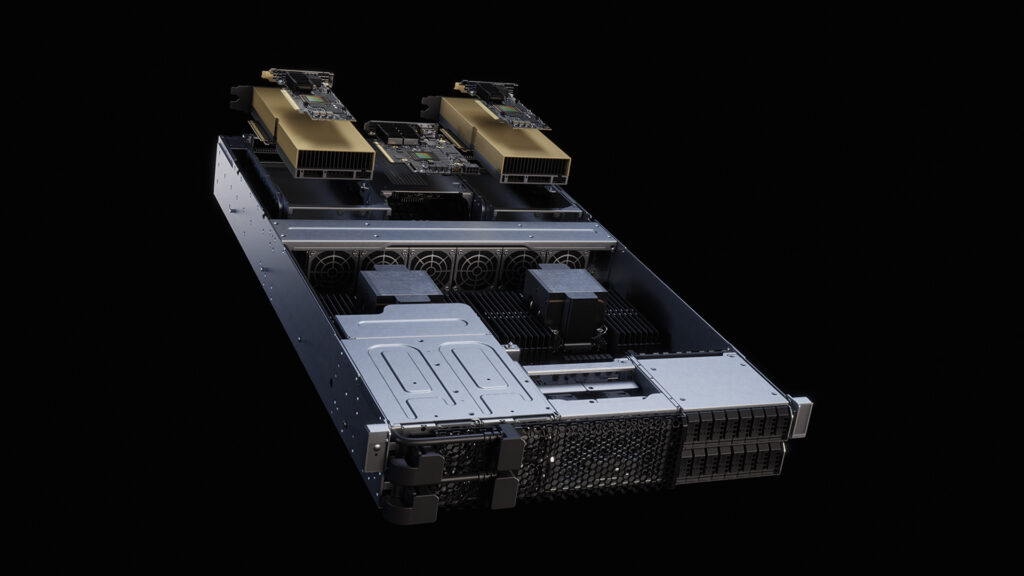

NVIDIA has confirmed that its RTX PRO 6000 Blackwell Server Edition GPU will soon be available in a broad range of mainstream enterprise servers, bringing its latest GPU architecture to the 2U rack system (one of the most widely deployed server form factors). These new configurations aim to help organizations transition from traditional CPU-only data center setups to GPU-accelerated platforms.

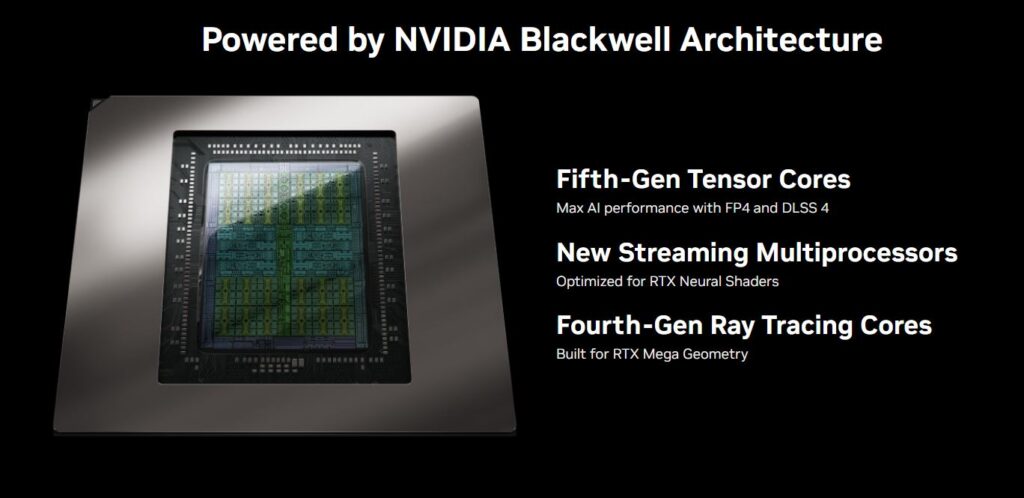

The RTX PRO 6000 is built on NVIDIA’s Blackwell architecture and packs 24,064 CUDA cores, allowing it to tackle a wide variety of demanding workloads. It includes 752 fifth-generation Tensor Cores to speed up AI training and inference, along with 188 fourth-generation RT Cores for real-time ray tracing. On paper, it delivers 117 teraflops of single-precision (FP32) performance, 3.7 petaflops of FP4 AI performance, and 354.5 teraflops of RT Core performance. That combination equips the card for jobs that need both intense computation and high-end rendering.

Memory capacity stands at 96GB of GDDR7 with ECC to protect data integrity during processing. With a 512-bit memory interface and 1,597 GB/s of bandwidth, the GPU can move large datasets quickly, which is essential for simulation, AI model training, and high-resolution visualization. The design supports vGPU and up to four Multi-Instance GPU (MIG) partitions, each with 24GB of dedicated memory, so isolated workloads can run simultaneously on the same physical card. Video and image processing are accelerated by four NVENC encoders, four NVDEC decoders, and four JPEG engines.
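As a back-of-envelope check on these figures, peak memory bandwidth equals bus width times per-pin data rate, divided by 8 bits per byte. The short Python sketch below works that relation backwards from NVIDIA's published 512-bit and 1,597 GB/s numbers and splits the 96GB evenly across four MIG instances. The implied per-pin rate is our own derivation rather than an NVIDIA specification, the even split is an assumption, and the helper function names are hypothetical.

```python
# Back-of-envelope arithmetic on published RTX PRO 6000 Server Edition specs.
# Function names are hypothetical helpers for illustration only.

def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak bandwidth (GB/s) = bus width (bits) x per-pin data rate (Gb/s) / 8 bits per byte."""
    return bus_width_bits * data_rate_gbps / 8


def implied_data_rate_gbps(bandwidth_gbs: float, bus_width_bits: int) -> float:
    """Invert the formula above to estimate the per-pin data rate (an estimate, not an official spec)."""
    return bandwidth_gbs * 8 / bus_width_bits


def mig_slice_gb(total_gb: float, partitions: int) -> float:
    """Memory per MIG instance, assuming an even split of total GPU memory."""
    return total_gb / partitions


if __name__ == "__main__":
    rate = implied_data_rate_gbps(1597, 512)
    print(f"Implied per-pin data rate: {rate:.2f} Gb/s")              # prints 24.95 Gb/s
    print(f"Check: {peak_bandwidth_gbs(512, rate):.0f} GB/s")         # prints 1597 GB/s
    print(f"Memory per MIG instance: {mig_slice_gb(96, 4):.0f} GB")   # prints 24 GB
```

The even 24GB split matches the per-partition figure NVIDIA lists, which suggests the four MIG instances divide the card's memory evenly.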

Security features include support for confidential computing and secure boot with root of trust, and the card connects to the host over a PCI Express 5.0 x16 interface. It provides four DisplayPort 2.1 outputs, measures 4.4 inches high by 10.5 inches long, and occupies two slots. Cooling is passive, and power is delivered through a single PCIe CEM5 16-pin connector, with consumption configurable up to 600 watts.

Cisco, Dell Technologies, HPE, Lenovo, and Supermicro will be among the first vendors to ship the new 2U RTX PRO Servers, each supporting various configurations of RTX PRO 6000 Blackwell GPUs. The platform is designed to accelerate a broad range of workloads, including AI model training and inference, data analytics, scientific simulation, photorealistic rendering, industrial robotics, and other forms of “physical AI” development.

| Specification | NVIDIA RTX PRO 6000 Blackwell Server Edition |
|---|---|
| GPU Architecture | NVIDIA Blackwell Architecture |
| CUDA Parallel Processing Cores | 24,064 |
| NVIDIA Tensor Cores | 752 (5th Gen) |
| NVIDIA RT Cores | 188 (4th Gen) |
| Single-Precision Performance (FP32) | 117 TFLOPS |
| Peak FP4 AI Performance | 3.7 PFLOPS |
| RT Core Performance | 354.5 TFLOPS |
| GPU Memory | 96 GB GDDR7 with ECC |
| Memory Interface | 512-bit |
| Memory Bandwidth | 1,597 GB/s |
| Power Consumption | Up to 600 W (configurable) |
| Multi-Instance GPU | Up to 4 MIG instances @ 24 GB |
| NVENC / NVDEC / JPEG | 4x / 4x / 4x |
| Confidential Compute | Supported |
| Secure Boot with Root of Trust | Yes |
| Graphics Bus | PCI Express 5.0 x16 |
| Display Connectors | 4x DisplayPort 2.1 |
| Form Factor | 4.4″ (H) x 10.5″ (L), dual slot |
| Thermal Solution | Passive |
| Power Connector | 1x PCIe CEM5 16-pin |

NVIDIA claims significant performance gains over the previous-generation L40S GPU. The fifth-generation Tensor Cores and second-generation Transformer Engine, with FP4 precision support, deliver up to six times faster inference, while fourth-generation RTX graphics technology provides up to four times higher rendering and visualization throughput.

In addition to a boost in performance, these new RTX PRO 6000 systems have been engineered for efficiency. NVIDIA notes up to 18 times higher energy efficiency compared to CPU-only 2U servers, which is especially relevant for space- and power-constrained facilities. This balance of speed and efficiency is crucial for workloads such as large-scale simulation, synthetic data generation, and robotics training, where time-to-result is critical.

HPE will integrate the GPU into its ProLiant Compute lineup, including the DL385 Gen11 with support for two RTX PRO 6000 GPUs in the new 2U RTX PRO form factor, and the DL380a Gen12 supporting up to eight GPUs in a 4U design. These systems will work with HPE’s Private Cloud AI platform, which adds support for NVIDIA’s latest AI models, including Nemotron for agentic AI, Cosmos Reason for robotics, and updated video search and summarization tools.

Shipments of these server models begin on September 2, 2025.

NVIDIA RTX PRO 4000 SFF and RTX PRO 2000 Blackwell GPUs: Compact Workstation Acceleration

Alongside the server-focused launch, NVIDIA is expanding its desktop GPU lineup with two new Blackwell-based models aimed at professional workstations: the RTX PRO 4000 SFF Edition and the RTX PRO 2000. Both are designed to bring the benefits of next-generation AI and graphics acceleration to compact, energy-efficient workstations across industries such as engineering, media production, and architecture.

The RTX PRO 4000 SFF Edition occupies half the physical space of a standard GPU while retaining fourth-generation RT Cores and fifth-generation Tensor Cores. Compared with its predecessor, it offers up to 2.5 times higher AI performance, 1.7 times faster ray tracing, and 1.5 times greater memory bandwidth, all within a 70W power envelope.

The RTX PRO 2000 targets mainstream design and AI tasks, with NVIDIA claiming up to 1.6x faster 3D modeling, 1.4x faster CAD performance, and 1.6x quicker rendering speeds over the previous generation. It also brings notable jumps in generative AI workloads, with a 1.4x increase in image creation speed and a 2.3x improvement in text generation, allowing for more rapid prototyping and iteration.

According to NVIDIA, real-world testing by organizations such as the Mile High Flood District, the Government of Cantabria’s Geospatial Office, and design studios like Studio Tim Fu demonstrates the card’s utility in handling large datasets, geospatial workloads, and AI-driven design workflows. In benchmarks from engineering firm Thornton Tomasetti, the RTX PRO 2000 ran structural analysis nearly three times faster than the prior generation and more than 27 times faster than CPU-based methods.

Availability for both models is expected later this year through major OEMs and distribution partners.

SIGGRAPH Focus: Workstations in the Age of Physical AI

At SIGGRAPH, NVIDIA emphasized the role of its RTX PRO workstation lineup (including the new RTX PRO 4000 SFF and RTX PRO 2000) in powering professional AI and simulation workflows. Beyond the hardware, NVIDIA is positioning its software ecosystem as a core driver for adoption.

The NVIDIA AI Enterprise suite offers tools for developing and scaling AI across infrastructure, while the Cosmos platform delivers optimized foundation models for robotics, automation, and edge AI. The Omniverse platform remains central for 3D simulation, collaborative design, and digital twin workflows, integrating directly with the hardware’s AI capabilities.

This mix of compact, efficient GPUs and a well-developed AI software stack shows how much the industry is changing. AI-accelerated computing is no longer something that only happens in massive data centers. Smaller workstations can now handle the same kinds of jobs, from generative AI content creation to robotics simulation, that used to demand much larger and more power-hungry systems.

Dell Expands AI Data Platform with NVIDIA and Elastic

Dell also announced updates to the Dell AI Data Platform, built with NVIDIA and Elastic to handle the entire AI workflow, from data ingestion to inference. A new unstructured data engine, powered by Elastic’s vector search, adds semantic retrieval and hybrid keyword search for faster, more accurate access to large-scale unstructured datasets. The engine is GPU-accelerated and works alongside the platform’s existing components, including a federated SQL engine, large-scale data processing, and high-speed AI-ready storage.
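Hybrid search of this kind generally runs a keyword query and a vector-similarity query separately, then merges the two ranked lists. The toy Python sketch below illustrates one common merging method, reciprocal rank fusion (RRF), on a four-document corpus with hand-made two-dimensional "embeddings". The documents, vectors, and function names are all hypothetical, and this is not necessarily how Dell or Elastic implement the feature.

```python
import math

# Hypothetical toy corpus and 2-D "embeddings" (real systems use learned, high-dimensional vectors).
DOCS = {
    "a": "flood report for pump station",
    "b": "river gauge telemetry",
    "c": "flood map layer",
    "d": "storm drain inspection",
}
DOC_VECS = {"a": [0.6, 0.6], "b": [0.1, 0.9], "c": [0.9, 0.1], "d": [0.8, 0.3]}


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(u, v))
    norm = math.sqrt(sum(x * x for x in u)) * math.sqrt(sum(y * y for y in v))
    return dot / norm if norm else 0.0


def keyword_ranking(query, docs):
    """Rank documents by how many query terms they contain (ties broken by id)."""
    terms = set(query.lower().split())
    scores = {doc_id: len(terms & set(text.lower().split())) for doc_id, text in docs.items()}
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


def vector_ranking(query_vec, doc_vecs):
    """Rank documents by cosine similarity to the query vector (ties broken by id)."""
    scores = {doc_id: cosine(query_vec, vec) for doc_id, vec in doc_vecs.items()}
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists: each list adds 1 / (k + rank) to a document's fused score."""
    fused = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] = fused.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(fused, key=lambda doc_id: (-fused[doc_id], doc_id))


if __name__ == "__main__":
    kw = keyword_ranking("flood report", DOCS)     # ['a', 'c', 'b', 'd']
    vec = vector_ranking([1.0, 0.0], DOC_VECS)     # ['c', 'd', 'a', 'b']
    print(reciprocal_rank_fusion([kw, vec]))       # prints ['c', 'a', 'd', 'b']
```

Document `c` ranks second on keywords and first on vector similarity, so RRF places it first overall, ahead of `a`, which tops only the keyword list. The constant `k=60` is a commonly used value that dampens the influence of any single list.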

The platform now integrates with the NVIDIA AI Data Platform reference design, offering a validated architecture that combines storage, compute, networking, and AI software. The first hardware to feature this setup will be the Dell PowerEdge R7725, a 2U server with the NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs mentioned above. These systems target workloads such as large language model inference, engineering simulation, and AI reasoning with models like NVIDIA NeMo and Cosmos.

Dell reports that the RTX PRO 6000 can deliver up to six times higher LLM token throughput, double the engineering simulation performance, and support for four times as many concurrent MIG users compared with the previous generation. Pairing the R7725 with the updated platform offers a turnkey option for faster inference, responsive semantic search, and larger AI workloads without custom system design.

At SIGGRAPH 2025, Dell is demonstrating these capabilities alongside its Dell Pro Max PC lineup, which will soon add the compact Dell Pro Max with GB10 AI developer workstation.

HPE Brings RTX PRO 6000 Blackwell to ProLiant Servers

Lastly, HPE announced additions to its NVIDIA AI Computing by HPE portfolio, bringing the RTX PRO 6000 Blackwell Server Edition GPU to its ProLiant Compute servers. Two configurations will be available: the ProLiant DL385 Gen11 can house up to two RTX PRO 6000 GPUs in the new 2U RTX PRO Server form factor, while the ProLiant DL380a Gen12 supports up to eight GPUs in a 4U design, with shipments starting September 2, 2025.

These servers are designed for a wide range of workloads, from generative and agentic AI to robotics, industrial applications, simulation, and visual computing. Gen12 ProLiant models include layered security features such as HPE Integrated Lights Out (iLO) 7 with Silicon Root of Trust, a secure enclave, and quantum-resistant firmware signing. HPE Compute Ops Management adds centralized automation for deployment and maintenance, cutting server management time and reducing downtime.

HPE also previewed the next generation of its Private Cloud AI platform, co-developed with NVIDIA. The update will support RTX PRO 6000 GPUs, offer seamless scalability across GPU generations, and include features like air-gapped management and enterprise multi-tenancy. The platform will add compatibility with NVIDIA’s latest Nemotron reasoning models for agentic AI, the Cosmos Reason vision language model for robotics and physical AI, and the updated Blueprint for Video Search and Summarization (VSS 2.4). These additions aim to help enterprises quickly deploy AI agents capable of processing and analyzing large volumes of video and visual data.
