HPE has rolled out a wave of updates across its NVIDIA AI Computing by HPE portfolio. The enhancements target the full lifecycle of AI development within enterprise infrastructure, from data ingestion and training to real-time inferencing and operational management. The updates emphasize flexibility, scalability, and developer readiness, starting with new capabilities in HPE Private Cloud AI.

New Capabilities in HPE Private Cloud AI Aim to Reduce Developer Friction

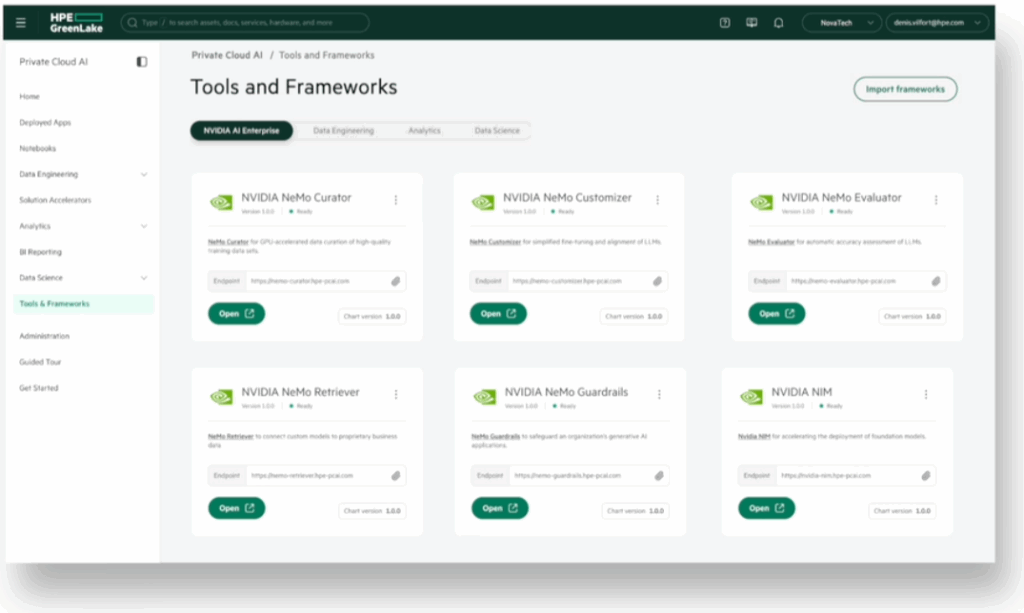

A central update comes to HPE Private Cloud AI, a full-stack solution developed in collaboration with NVIDIA. The platform enables organizations to run generative AI (GenAI) and agentic workloads on-premises or in hybrid environments, and will now support feature branch model updates from NVIDIA AI Enterprise.

This means developers can test new AI frameworks, microservices, and SDKs without affecting stable production models, an approach that mirrors what’s commonly done in modern software development. It offers a safer environment for experimentation while maintaining the robustness required for enterprise-scale AI.

HPE Private Cloud AI will also support the NVIDIA Enterprise AI Factory validated design. This alignment gives organizations a clearer path to building AI solutions using NVIDIA’s tested reference designs, making it easier to scale reliably and consistently.

New SDK for Alletra Storage Streamlines Data Workflows for Agentic AI

HPE is also rolling out a new software development kit (SDK) for its Alletra Storage MP X10000 system, designed to work with the NVIDIA AI Data Platform. This SDK makes it easier for organizations to connect their data infrastructure to NVIDIA’s AI tools.

The main goal is to help manage unstructured data (e.g., documents, images, or videos) that often needs to be cleaned up and organized before it can be used in AI projects. The SDK supports tasks such as tagging data with metadata, indexing it for faster searches, and preparing it for AI model training and inference.

It also uses RDMA (Remote Direct Memory Access) technology to speed up data transfers between storage and GPUs, which helps improve performance when handling large AI workloads.

In addition, the Alletra X10000’s modular design lets organizations scale capacity and performance independently, so they can size their setup to specific project needs. By combining these tools, HPE and NVIDIA aim to give organizations more efficient ways to access and process data across on-premises systems and cloud-based environments.

HPE Servers Secure Top Spots in AI Benchmarks

HPE’s ProLiant Compute DL380a Gen12 server recently secured top rankings in 10 MLPerf Inference: Datacenter v5.0 benchmarks. These tests evaluated performance across demanding AI models, including GPT-J for language tasks, Llama2-70B for large-scale generative AI, ResNet50 for image classification, and RetinaNet for object detection. The results reflect the server’s current configurations, which include NVIDIA H100 NVL, H200 NVL, and L40S GPUs.

HPE ProLiant Compute DL380a Gen12

Building on that momentum, HPE plans to expand the server’s capabilities by offering configurations with up to 10 NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs, starting June 4. This addition is expected to further enhance the server’s suitability for enterprise AI applications like multimodal inference, simulation-based AI (often called physical AI), model fine-tuning, and advanced design or media workflows.

The DL380a Gen12 offers two cooling options to handle high-demand workloads: traditional air cooling and direct liquid cooling (DLC). The DLC configuration draws on HPE’s long-standing expertise in thermal management to help maintain system stability and performance during sustained compute-heavy operations.

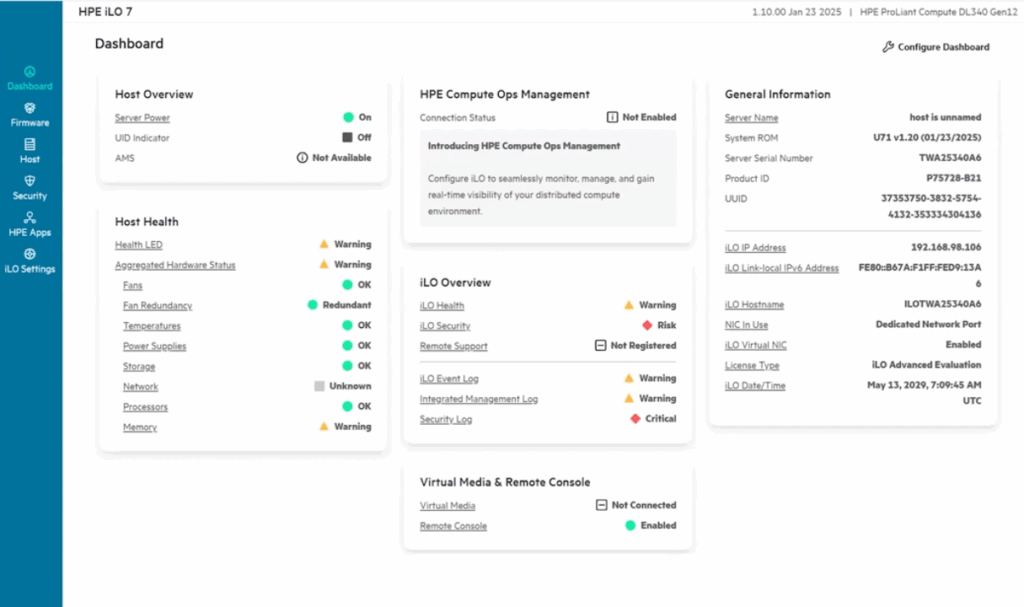

The server includes HPE’s Integrated Lights Out (iLO) 7 management engine, which provides hardware-level security protections built on Silicon Root of Trust. It is among the first server platforms designed for post-quantum cryptographic readiness, and it meets the FIPS 140-3 Level 3 standard, an advanced certification for cryptographic security.

On the management front, HPE Compute Ops Management offers automated lifecycle tools that track system health, flag potential issues early, and provide energy usage insights through AI-driven analytics.

Beyond the DL380a, other HPE servers also ranked highly. The ProLiant Compute DL384 Gen12, equipped with the dual-socket NVIDIA GH200 NVL2, ranked first in four MLPerf v5.0 tests, including tests on large-scale models such as Llama2-70B and Mixtral-8x7B. Meanwhile, the HPE Cray XD670, featuring eight NVIDIA H200 SXM GPUs, led in 30 benchmark scenarios spanning large language models and vision-based AI tasks. Across its lineup, HPE systems claimed the top spot in more than 50 test categories, offering third-party validation of the company’s AI infrastructure capabilities.

OpsRamp Expands to Cover New GPU Class for Infrastructure Observability

On the operational side, HPE is extending support for its OpsRamp software to manage environments using the upcoming NVIDIA RTX PRO 6000 Blackwell GPUs. This expansion enables IT teams to maintain visibility over their AI infrastructure, from GPU utilization and thermal loads to memory usage and power draw.

The software brings full-stack observability to hybrid AI environments and allows teams to proactively automate responses, optimize job scheduling, and manage resource allocation based on historical trends. With growing enterprise investment in AI, these observability and optimization tools are becoming important for running reliable and cost-effective AI deployments.

Availability

Feature branch support for NVIDIA AI Enterprise in HPE Private Cloud AI is expected by summer 2025. The HPE Alletra Storage MP X10000 SDK, including support for direct memory access to NVIDIA-accelerated infrastructure, is also slated for availability in summer 2025. The HPE ProLiant Compute DL380a Gen12 server, configured with NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs, will be available to order starting June 4, 2025. HPE’s OpsRamp software support will be available in alignment with the release of the RTX PRO 6000 GPUs, to help manage and optimize them.

With these updates, HPE is further strengthening its AI infrastructure portfolio, focusing on scalable, manageable environments that can support the full range of AI workloads without overcomplicating operations.