At the 2025 OCP EMEA Summit, Google delivered pivotal announcements on the physical infrastructure supporting modern data centers. The message was clear: as AI workloads scale, so too must the capabilities of power, cooling, and mechanical systems.

AI’s insatiable appetite for power is no longer theoretical. Google projects that, by 2030, machine learning deployments will demand more than 500 kW per IT rack. This surge is driven by the relentless push for higher rack densities, where every millimeter is packed with tightly interconnected “xPUs” (GPUs, TPUs, CPUs). Meeting these requirements calls for a fundamental shift in power distribution: higher-voltage DC, with power components and battery backup moved outside the rack. And with this shift comes a new industry buzzword.

Power Delivery

Google’s first major announcement revisited a decade of data center power delivery progress. Ten years ago, Google championed the move to 48 volts direct current (VDC) within IT racks, significantly improving power distribution efficiency over the legacy 12 VDC standard. The industry followed, scaling rack power from 10 kW to 100 kW. The next transition, from 48 VDC to +/-400 VDC, allows IT racks to scale from 100 kW to 1 megawatt (MW).
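To see why the voltage step matters, consider the current a rack must draw. The short Python sketch below applies I = P / V and the I²R conduction-loss relation. It treats +/-400 VDC as 800 V pole-to-pole and holds conductor resistance constant; both are simplifying assumptions for illustration, not details taken from the Mt. Diablo specification.

```python
# Idealized DC distribution sketch: I = P / V, and conduction loss P_loss = I^2 * R.
# ASSUMPTIONS: "+/-400 VDC" is modeled as 800 V pole-to-pole, and conductor
# resistance R is held constant, so only the *relative* loss ratio is meaningful.


def bus_current(power_w: float, voltage_v: float) -> float:
    """Current (A) drawn from a DC bus delivering power_w at voltage_v."""
    return power_w / voltage_v


def relative_conduction_loss(power_w: float, v_low: float, v_high: float) -> float:
    """Ratio of I^2*R loss at v_low to the loss at v_high, same power and same R."""
    return (bus_current(power_w, v_low) / bus_current(power_w, v_high)) ** 2


if __name__ == "__main__":
    cases = [
        ("100 kW @ 48 V", 100e3, 48.0),
        ("1 MW @ 48 V", 1e6, 48.0),
        ("1 MW @ 800 V (+/-400 VDC)", 1e6, 800.0),
    ]
    for label, power_w, volts in cases:
        print(f"{label}: {bus_current(power_w, volts):,.0f} A")

    ratio = relative_conduction_loss(1e6, 48.0, 800.0)
    print(f"48 V vs 800 V conduction-loss ratio: {ratio:.0f}x")
```

Under these idealized assumptions, a 1 MW rack would need roughly 20,800 A at 48 V but only 1,250 A at 800 V, and for the same conductors the resistive loss drops by a factor of about 278.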

The Mt. Diablo project, a collaboration between Meta, Microsoft, and the OCP community, aims to standardize electrical and mechanical interfaces at 400 VDC. This voltage selection isn’t arbitrary; it leverages the robust supply chain built for electric vehicles, unlocking economies of scale, streamlined manufacturing, and improved quality.

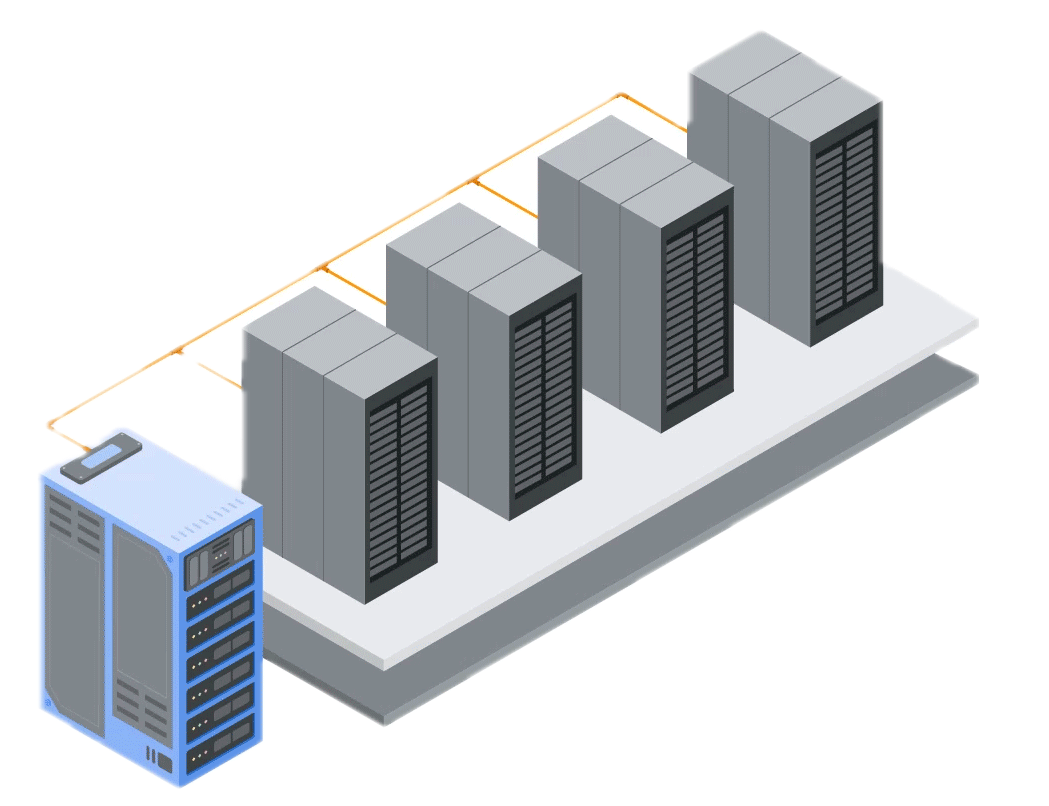

+/-400 VDC power delivery: AC-to-DC sidecar power rack

The first tangible result is an AC-to-DC sidecar power rack, which decouples power components from the IT rack. This architecture delivers a roughly 3% boost in end-to-end efficiency and frees up the entire rack for compute hardware. Looking ahead, Google and its partners are exploring direct, high-voltage DC distribution throughout the data center, promising even greater density and efficiency.
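The announcement does not state the baseline behind the roughly 3% gain. As a hedged illustration only, the sketch below reads it as three percentage points on a hypothetical 90% end-to-end baseline and computes the upstream power saved for a 1 MW IT load, using input power = IT load / efficiency.

```python
# What a ~3-point end-to-end efficiency gain means in upstream power.
# ASSUMPTIONS: the "roughly 3%" is read as percentage points, and the 90%
# baseline efficiency is a hypothetical placeholder, not a published figure.


def input_power_w(it_load_w: float, efficiency: float) -> float:
    """Upstream power needed to deliver it_load_w at a given end-to-end efficiency."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return it_load_w / efficiency


if __name__ == "__main__":
    it_load_w = 1e6                       # 1 MW rack, the upper bound cited for +/-400 VDC
    baseline_eff = 0.90                   # hypothetical baseline efficiency
    improved_eff = baseline_eff + 0.03    # baseline plus three percentage points

    saved_w = input_power_w(it_load_w, baseline_eff) - input_power_w(it_load_w, improved_eff)
    print(f"Upstream power saved: {saved_w / 1e3:.1f} kW")
```

Under these assumptions the saving is about 36 kW per 1 MW rack; a different baseline, or reading the 3% as a relative gain, changes the figure.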

The Thermal Challenge

As chip power consumption soars, from 100 W CPUs to accelerators exceeding 1,000 W, thermal management has become mission-critical. The industry has responded with a wave of innovation, but the challenge is clear: higher chip densities mean higher cooling demands.

Liquid cooling has emerged as the only viable solution at scale. Water’s thermal properties far outstrip air’s: for a given temperature rise, it can carry about 4,000 times more heat per unit volume, and its thermal conductivity is roughly 30 times higher. Google has already deployed liquid cooling at gigawatt scale, supporting over 2,000 TPU Pods with 99.999% uptime over the past seven years. Liquid-cooled servers occupy about half the volume of their air-cooled counterparts because cold plates replace bulky heatsinks. This has enabled Google to double chip density and quadruple the scale of its liquid-cooled TPU v3 supercomputers compared to the air-cooled TPU v2 generation.
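The volumetric advantage follows from the heat-balance relation Q = ρ · c_p · V̇ · ΔT. The sketch below uses approximate room-temperature textbook properties and an illustrative 1,000 W accelerator with a 10 K coolant temperature rise (both chosen for illustration, not taken from Google’s designs) to compare the flow each fluid needs.

```python
# Coolant flow needed to carry away a heat load: Q = rho * c_p * V_dot * dT,
# so V_dot = Q / (rho * c_p * dT).
# ASSUMPTIONS: approximate room-temperature property values; the 1,000 W load
# and 10 K temperature rise are illustrative choices.
from dataclasses import dataclass


@dataclass(frozen=True)
class Fluid:
    name: str
    density_kg_m3: float
    cp_j_kg_k: float

    @property
    def volumetric_heat_capacity(self) -> float:
        """J/(m^3*K): heat carried per cubic meter per kelvin of temperature rise."""
        return self.density_kg_m3 * self.cp_j_kg_k


WATER = Fluid("water", 997.0, 4180.0)
AIR = Fluid("air", 1.2, 1005.0)  # near sea level, about 20 C


def required_flow_l_min(heat_w: float, fluid: Fluid, delta_t_k: float) -> float:
    """Volumetric flow (liters per minute) needed to absorb heat_w with a delta_t_k rise."""
    m3_per_s = heat_w / (fluid.volumetric_heat_capacity * delta_t_k)
    return m3_per_s * 1000.0 * 60.0


if __name__ == "__main__":
    heat_w, delta_t_k = 1000.0, 10.0
    for fluid in (WATER, AIR):
        print(f"{fluid.name}: {required_flow_l_min(heat_w, fluid, delta_t_k):,.1f} L/min")
    ratio = WATER.volumetric_heat_capacity / AIR.volumetric_heat_capacity
    print(f"water/air volumetric heat capacity ratio: {ratio:,.0f}")
```

With these property values the ratio comes out near 3,500, the same order of magnitude as the ~4,000 figure quoted; the exact number depends on the air temperature and pressure assumed. Water needs under 1.5 L/min for the 1,000 W load, while air needs thousands of liters per minute.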

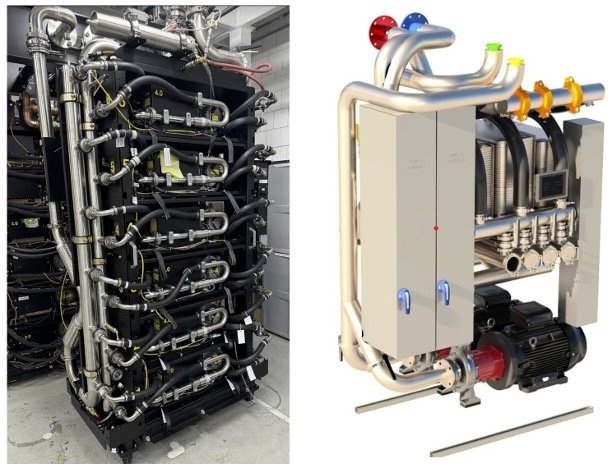

Project Deschutes CDU: 4th gen in deployment, 5th gen in concept

From TPU v3 to TPU v5 and now Ironwood, Google’s approach has evolved to use in-row coolant distribution units (CDUs). These CDUs isolate the rack liquid loop from the facility loop, delivering a controlled, high-performance cooling environment. Project Deschutes, Google’s CDU architecture, features redundant pumps and heat exchangers, achieving 99.999% availability since 2020.
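Two pieces of arithmetic help put the availability figure in context: what 99.999% means in downtime per year, and why redundant pumps raise availability. The sketch below assumes independent failures and 1-of-2 redundancy (one healthy unit carries the full load); the 99.9% per-pump value is hypothetical, and this simple model is not Google’s actual reliability analysis for Project Deschutes.

```python
# Availability arithmetic for a redundant component such as a CDU pump.
# ASSUMPTIONS: failures are independent, one healthy unit can carry the full
# load (1-of-N redundancy), and the per-unit availability value is hypothetical.

MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes, ignoring leap years


def annual_downtime_min(availability: float) -> float:
    """Expected minutes of downtime per year at a given availability."""
    return (1.0 - availability) * MINUTES_PER_YEAR


def parallel_availability(unit_availability: float, units: int = 2) -> float:
    """System is up if at least one of `units` identical, independent units is up."""
    return 1.0 - (1.0 - unit_availability) ** units


if __name__ == "__main__":
    print(f"99.999% availability -> {annual_downtime_min(0.99999):.2f} min/yr of downtime")
    pump = 0.999  # hypothetical single-pump availability
    print(f"Two redundant pumps at {pump:.1%} each: {parallel_availability(pump):.6f}")
```

Five nines works out to roughly 5.3 minutes of downtime per year, and under the independence assumption two 99.9% pumps in parallel reach 99.9999%. Real systems have common-mode failures, so this is an upper-bound style estimate.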

StorageReview has tracked the evolution of liquid cooling from the outset, covering innovators like CoolIT, Submer, JetCool, and DUG Nomad.

Accelerating Industry Adoption

Later this year, Google will contribute Project Deschutes CDU to OCP, sharing system details, specifications, and best practices to accelerate the adoption of liquid cooling at scale. The contribution will include guidance on design for enhanced cooling performance, manufacturing quality, reliability, deployment speed, serviceability, operational best practices, and insights into ecosystem supply chain advancements.

The rapid pace of AI hardware innovation demands that data centers prepare for the next wave of change. The industry’s move toward +/-400 VDC, catalyzed by the Mt. Diablo specification, is a significant step forward. Google urges the community to adopt the Project Deschutes CDU design and to draw on Google’s deep liquid-cooling expertise to meet the demands of tomorrow’s AI infrastructure.
