Earlier this year, Intel launched its Xeon 6 processors with Performance Cores (P-cores), providing industry-leading performance for the broadest data center and network infrastructure workloads and best-in-class efficiency to create an unmatched server consolidation opportunity. At Computex 2025, Intel unveiled its latest Xeon 6 processors, featuring significant advancements in data center and networking portfolios.

128 P-Core Intel Xeon 6

The Intel® Xeon® 6700/6500 series processors equipped with P-cores are ideal for modern data centers, providing an excellent balance between performance and energy efficiency. They deliver, on average, 1.4 times better performance than the previous generation across various enterprise workloads. The Xeon 6 series also serves as a foundational central processing unit (CPU) for AI systems, functioning exceptionally well alongside a GPU as a host node CPU.

Compared to 5th Generation AMD EPYC processors, Xeon 6 offers up to 1.5 times better on-chip AI inference performance while using one-third fewer cores. These processors also excel in performance-per-watt efficiency, enabling an average consolidation ratio of 5:1 when replacing 5-year-old servers, with the potential for up to 10:1 in certain use cases. According to Intel, this translates into a 68% reduction in total cost of ownership (TCO). Notable innovations include the introduction of Priority Core Turbo (PCT) and Speed Select Technology – Turbo Frequency (SST-TF).
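To make the consolidation figures concrete, here is a rough back-of-the-envelope sketch. The fleet size of 100 servers and the $1M baseline TCO are hypothetical values chosen only for illustration, and the helper names are not from Intel; only the 5:1, 10:1, and 68% figures come from the claims above.

```python
import math

def servers_needed(old_servers: int, ratio: float) -> int:
    """New servers required when `ratio` old servers collapse into one."""
    return math.ceil(old_servers / ratio)

def tco_after(old_tco: float, reduction: float = 0.68) -> float:
    """Remaining cost after applying a fractional TCO reduction."""
    return old_tco * (1.0 - reduction)

if __name__ == "__main__":
    fleet = 100  # hypothetical fleet of 5-year-old servers
    print(servers_needed(fleet, 5))     # average 5:1 case
    print(servers_needed(fleet, 10))    # best-case 10:1
    print(round(tco_after(1_000_000)))  # hypothetical $1M baseline TCO
```

Under these assumptions, a 100-server fleet would shrink to 20 servers at 5:1 or 10 servers at 10:1, and a $1M baseline would fall to roughly $320,000. Real results depend on workload mix and utilization.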

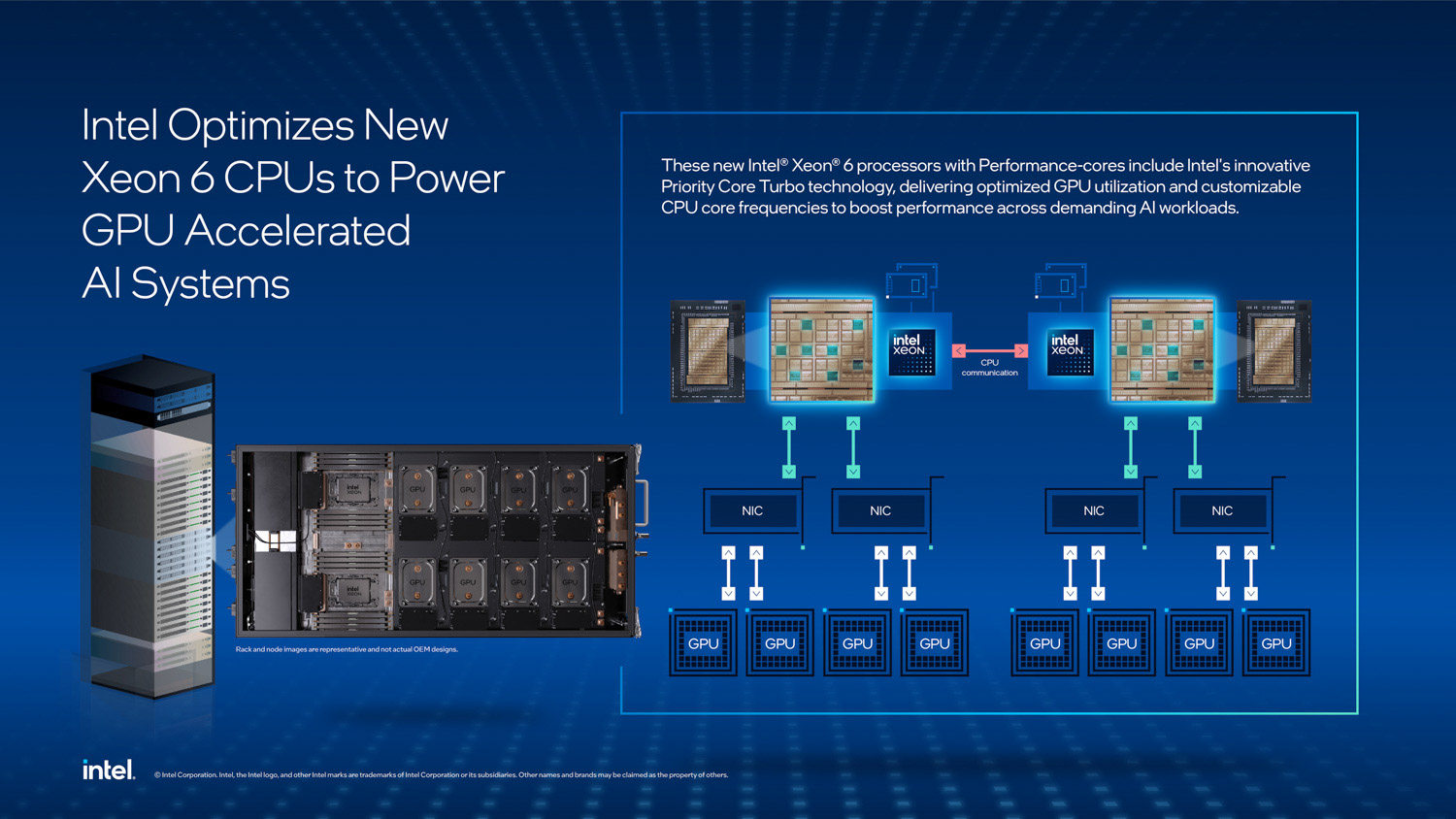

Intel’s Priority Core Turbo technology introduces dynamic prioritization within the CPU, allowing high-priority cores to operate at elevated turbo frequencies, while lower-priority cores remain at base clock. This optimizes processing for serialized and latency-sensitive AI operations that are essential in feeding data to GPUs efficiently, a significant step in reducing CPU-GPU bottlenecks in AI pipelines.
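As a loose illustration of how software might take advantage of this, the sketch below pins a latency-sensitive process to a chosen set of cores using Linux CPU affinity. This is an assumption about usage, not Intel's documented workflow: which cores are designated high-priority is set by platform configuration, so the `PRIORITY_CORES` list here is purely hypothetical, as are the helper names.

```python
import os

# Hypothetical core IDs assumed to be configured as high-priority.
PRIORITY_CORES = [0, 1, 2, 3]

def pick_priority_cores(available, priority_hint):
    """Return the hinted cores that are actually available, sorted."""
    return sorted(set(available) & set(priority_hint))

def pin_to_cores(cores):
    """Pin the calling process to `cores` where the OS supports it (Linux)."""
    if cores and hasattr(os, "sched_setaffinity"):
        os.sched_setaffinity(0, cores)  # 0 means the current process
        return True
    return False

if __name__ == "__main__":
    available = range(os.cpu_count() or 1)
    cores = pick_priority_cores(available, PRIORITY_CORES)
    print("pinned" if pin_to_cores(cores) else "not pinned", cores)
```

The idea is that a GPU data-feeding process would run only on the cores able to reach the higher turbo frequencies, leaving background work on the remaining cores.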

The new Xeon 6 CPUs offer up to 128 P-cores, increased memory bandwidth with support for MRDIMMs and CXL, and 30% faster memory speeds than AMD’s latest EPYC platforms in two-DIMMs-per-channel (2DPC) configurations. According to Intel, the Xeon 6700P achieves 5,200 MT/s RDIMM speeds, whereas AMD’s latest competing EPYC platform tops out at 4,000 MT/s in equivalent configurations.
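The 30% figure follows directly from the two quoted transfer rates, as the short check below shows. The per-channel bandwidth estimate assumes a 64-bit (8-byte) data path per memory channel, which is an assumption added here rather than something stated by Intel, and it is a theoretical peak, not a measured result.

```python
def percent_faster(new_mts: float, old_mts: float) -> float:
    """Relative speed advantage of new_mts over old_mts, in percent."""
    return (new_mts / old_mts - 1.0) * 100.0

def peak_gb_per_s(mts: float, bus_bytes: int = 8) -> float:
    """Theoretical peak per channel: MT/s x bytes per transfer, in GB/s.

    bus_bytes=8 assumes a 64-bit data path per channel (an assumption).
    """
    return mts * bus_bytes / 1000.0

XEON_6700P_MTS = 5200  # RDIMM speed at 2DPC, per Intel
EPYC_MTS = 4000        # competing platform at 2DPC, per Intel

if __name__ == "__main__":
    print(round(percent_faster(XEON_6700P_MTS, EPYC_MTS), 1))
    print(peak_gb_per_s(XEON_6700P_MTS), peak_gb_per_s(EPYC_MTS))
```

Total platform bandwidth also depends on the number of memory channels per socket, which this per-channel comparison deliberately leaves out.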

In addition to memory improvements, the processors provide up to 20% more PCIe lanes, enabling greater throughput for I/O-heavy workloads such as AI inference, training, and high-speed networking. Intel has also emphasized enterprise-grade reliability, availability, and serviceability (RAS) in the new lineup, ensuring uptime for mission-critical deployments.

A notable enhancement is support for Intel Advanced Matrix Extensions (AMX) with FP16 precision, enabling more efficient CPU-side AI processing and model preconditioning before handing off workloads to accelerators.

In a statement, Karin Eibschitz Segal, Intel’s interim Data Center Group General Manager, highlighted the collaboration with NVIDIA. She stated, “These new Xeon SKUs showcase the exceptional performance of Intel Xeon 6, making it the ideal CPU for next-generation GPU-accelerated AI systems. We are excited to strengthen our partnership with NVIDIA to provide one of the highest-performing AI systems in the industry.”

Powering NVIDIA DGX B300

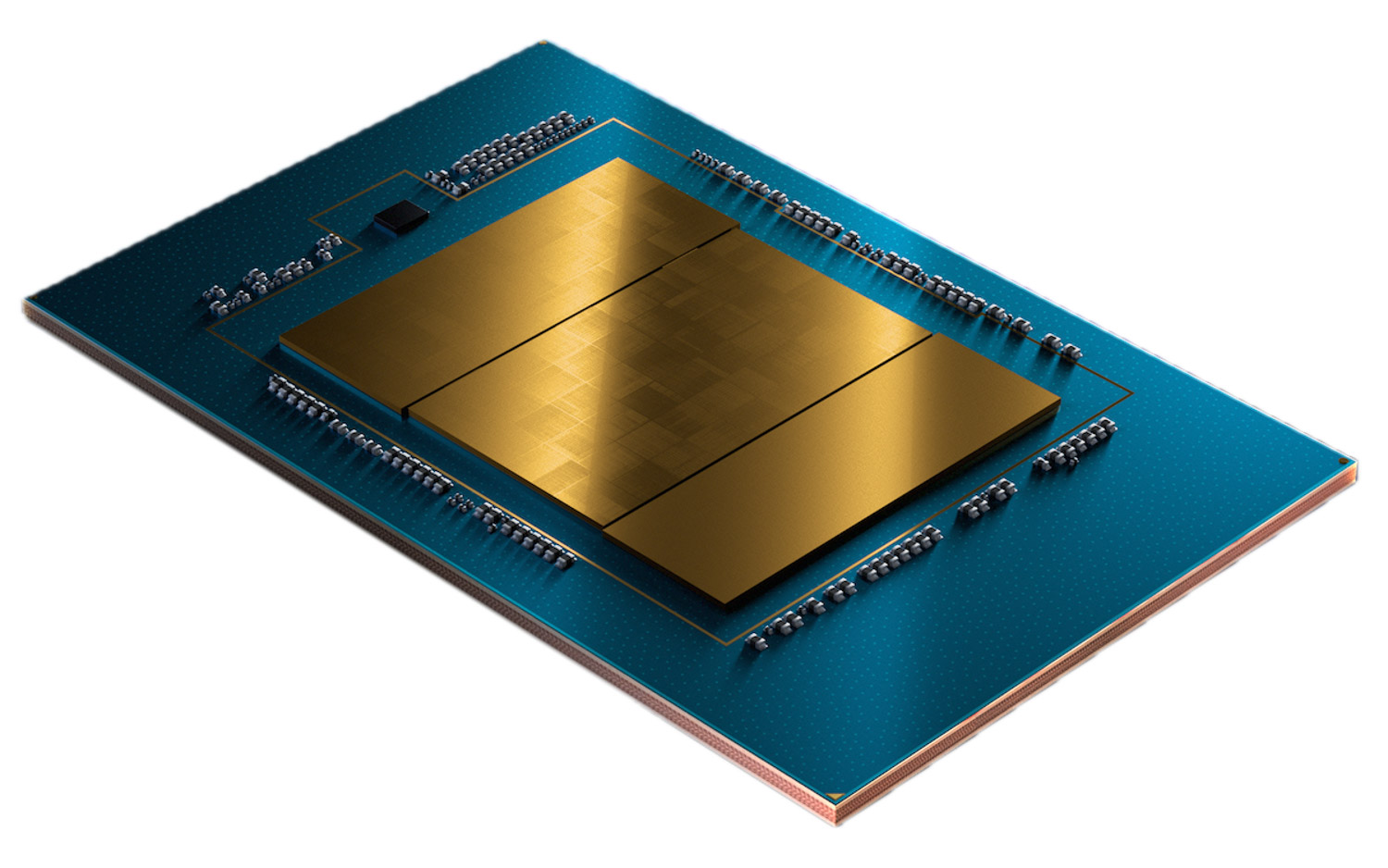

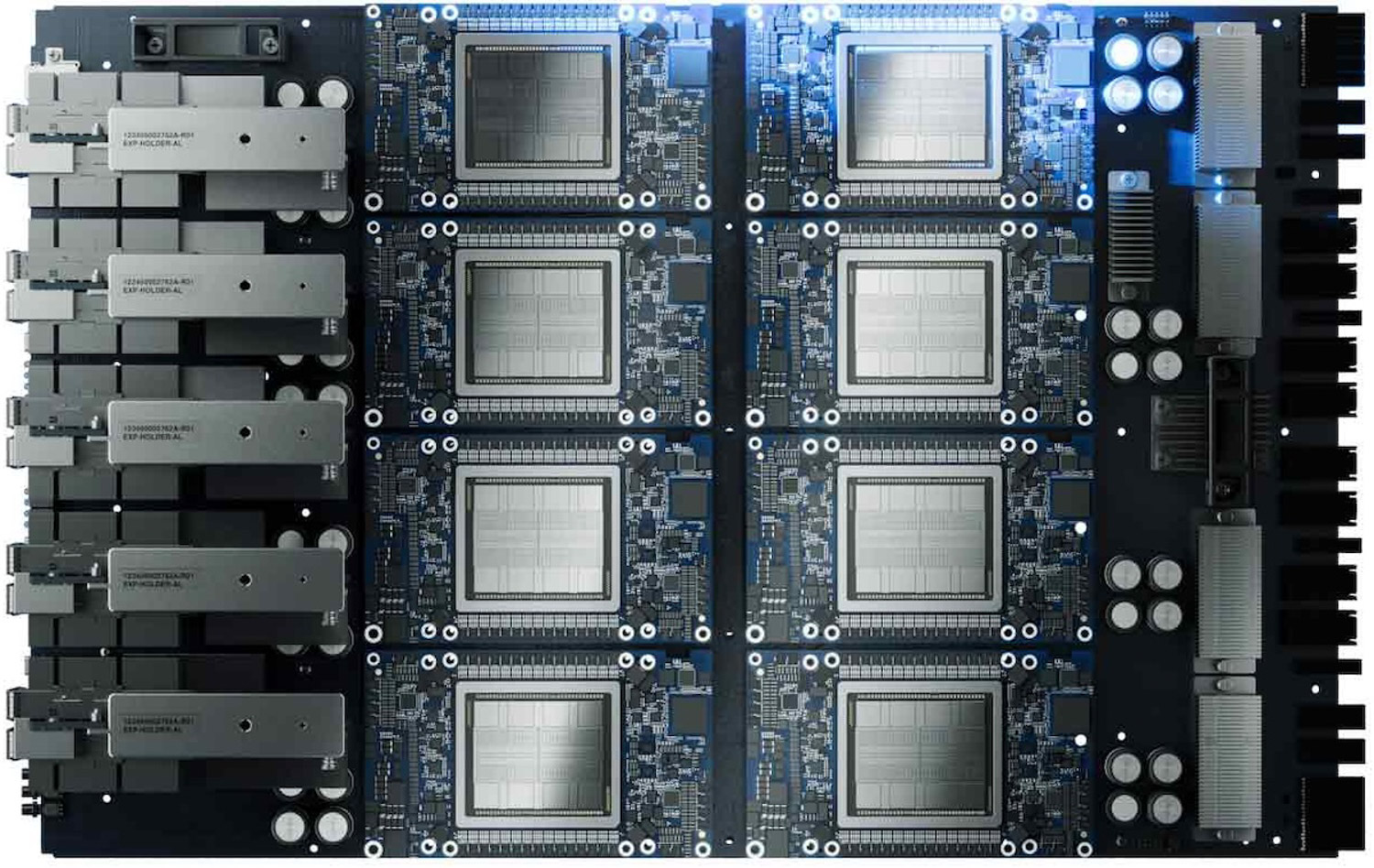

The Intel Xeon 6776P is already used as the host processor in the NVIDIA DGX B300, the latest version of NVIDIA’s flagship AI infrastructure system. The Xeon 6776P plays a critical role in orchestrating data management and coordinating with GPUs, which is essential as AI models continue to increase in complexity and size.

To understand the scale of the platform Intel is powering, here’s a look at NVIDIA’s new DGX B300 specifications.

| Specifications Overview | NVIDIA DGX B300 AI System |
|---|---|
| GPUs | NVIDIA Blackwell Ultra GPUs |
| CPU | Intel® Xeon® 6776P Processors |
| Total GPU Memory | 2.3TB |
| AI Performance | 72 PFLOPS FP8 (Training); 144 PFLOPS FP4 (Inference) |
| Networking | 8x OSFP (ConnectX-8 VPI, up to 800Gb/s); 2x dual-port QSFP112 (BlueField-3, up to 400Gb/s) |
| Management Network | 1GbE onboard NIC + 1GbE BMC |
| Storage | OS: 2x 1.9TB NVMe M.2; Internal: 8x 3.84TB NVMe E1.S |
| Power Consumption | ~14kW |

As AI workloads continue to strain infrastructure, Intel’s Xeon 6 P-core offerings mark a strategic step toward balancing CPU and GPU performance at scale. Available starting today, the new CPUs are positioned to support a wide range of AI-driven applications, from LLM inference and training to real-time analytics in modern data centers.

Performance for Modern Telecommunications Networks

The Intel Xeon 6 for network and edge is a system-on-chip (SoC) designed for high performance and power efficiency. It uses Intel’s built-in accelerators to enhance virtualized radio access networks (vRAN), media, artificial intelligence (AI), and network security workloads. This design addresses the increasing demand for network and edge solutions in an AI-driven world. Thanks to Intel vRAN Boost, the Xeon 6 SoCs provide up to 2.4 times the RAN capacity and a 70% improvement in performance-per-watt compared to earlier generations. The Xeon 6 is also the industry’s first server SoC to feature a built-in media accelerator, the Intel Media Transcode Accelerator, which delivers up to 14 times the performance-per-watt of the Intel Xeon 6538N.

As 5G technology and artificial intelligence stand ready to transform connectivity, conventional network optimization approaches are proving insufficient. To capitalize on the capabilities of next-generation networks, telecom operators are embracing innovations such as network slicing, AI-enhanced radio controllers, and cloud-native architectures. Using Intel’s unified Xeon platform, operators can adjust workloads, reduce costs, and create scalable networks that respond in real time to changing customer demands and traffic patterns. This integration enables a more resilient and agile network infrastructure.

Intel Xeon 6 SoC highlights include:

- Webroot CSI upload model inference is up to 4.3x faster than Intel Xeon D-2899NT.

- AI RAN performance per core is improved by up to 3.2x compared with the previous generation with vRAN Boost.

- A 38-core system supports INT8 inferencing on up to 38 simultaneous camera streams on a video edge server.

New Advanced Ethernet Solutions

Intel also unveiled two new Ethernet controllers and network adapter product lines to address the growing demands of enterprise, telecommunications, cloud, high-performance computing (HPC), edge, and AI applications. Initial availability includes dual-port 25GbE PCIe and OCP 3.0-compliant adapters, with additional configurations expected this year.

- The Intel Ethernet E830 Controllers and Network Adapters deliver up to 200GbE bandwidth, flexible port configurations, and advanced precision time capabilities, including Precision Time Measurement (PTM). These adapters are optimized for high-density virtualized workloads and offer robust security features and performance.

- The Intel Ethernet E610 Controllers and Network Adapters provide 10GBASE-T connectivity optimized for control plane operations. The E610 series offers outstanding power efficiency, advanced manageability, and comprehensive security features that simplify network administration and help ensure network integrity.
