The Lenovo ThinkSystem SR675 V3 server, powered by NVIDIA’s new L40S GPUs, took the stage at SIGGRAPH in Los Angeles, becoming a focal point in NVIDIA’s plans to advance global AI rollouts and bring generative AI applications such as intelligent chatbots, search, and summarization tools to users across industries.

The new ThinkSystem was showcased as an NVIDIA OVX server and will soon integrate the recently announced NVIDIA L40S GPUs. This collaboration is expected to help revolutionize AI implementations, enabling next-generation AI, immersive metaverse simulations, and cognitive decisions on a large scale.

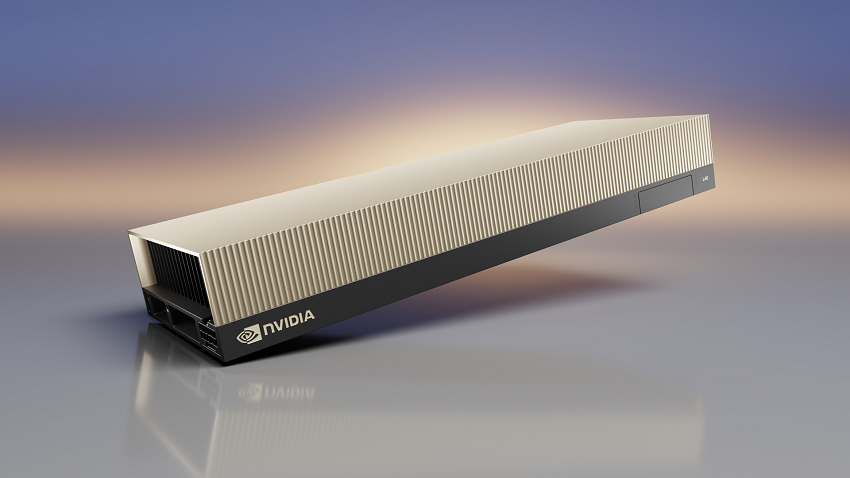

NVIDIA L40S GPUs

The newly unveiled NVIDIA L40S GPU stands as a powerful, universal data center processor, purpose-built to accelerate compute-intensive applications, including AI training and inference, 3D designs and visualization, video processing, and industrial digitalization with the NVIDIA Omniverse platform. It boasts breakthrough multi-workload acceleration for large language model (LLM) inference and retraining, graphics, and video applications.

The L40S GPU empowers the next generation of AI-enabled audio, speech, 2D, video, and 3D applications and is a crucial component for the upcoming NVIDIA Omniverse OVX 3.0 platforms, offering high fidelity and accurate digital twins.

The NVIDIA L40S GPU will be a key asset in NVIDIA OVX systems. Each L40S is built on the NVIDIA Ada Lovelace GPU architecture and carries 48GB of memory, and each server can house up to eight L40S GPUs. The GPU also includes fourth-generation Tensor Cores and an FP8 Transformer Engine, delivering 1.45 petaflops of tensor processing power.
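As a quick back-of-the-envelope check, the aggregate GPU memory of a fully populated server follows from the per-GPU capacity and the GPU count. The sketch below uses only the two figures quoted in the text (48GB per GPU, up to eight GPUs per server); the function name is illustrative, not part of any NVIDIA or Lenovo tooling.

```python
def aggregate_gpu_memory_gb(gpus_per_server: int, memory_per_gpu_gb: int) -> int:
    """Total GPU memory in one server, assuming all GPUs are identical."""
    return gpus_per_server * memory_per_gpu_gb


# Figures from the article: up to eight L40S GPUs, 48GB each.
total_gb = aggregate_gpu_memory_gb(gpus_per_server=8, memory_per_gpu_gb=48)
print(f"{total_gb} GB of GPU memory in a fully populated server")  # 384 GB
```

This is a simple sum. It does not imply that the memory is addressable as a single pool; workloads larger than 48GB would still need to be split across GPUs.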

Compared to the previous-generation NVIDIA A100 Tensor Core GPU, the L40S demonstrates up to 1.2 times more generative AI inference performance and up to 1.7 times faster training performance for handling complex AI workloads with billions of parameters and multiple data modalities.

The L40S GPU is designed to cater to a wide range of professional workflows. It includes 142 third-generation RT Cores, delivering 212 teraflops of ray-tracing performance, ideal for high-fidelity professional visualization workflows like real-time rendering, product design, and 3D content creation.

Additionally, the L40S’s 18,176 CUDA cores provide nearly 5x the single-precision floating-point (FP32) performance of the NVIDIA A100 GPU, ensuring computational demands for engineering and scientific simulations are met with accelerated efficiency.
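The "nearly 5x" figure can be sanity-checked with simple arithmetic. The peak FP32 numbers below do not come from this article; they are assumed from NVIDIA's public datasheets (roughly 91.6 teraflops for the L40S and 19.5 teraflops for the A100), so treat them as illustrative inputs rather than measured results.

```python
# Assumed peak FP32 throughput in teraflops, taken from NVIDIA's public
# datasheets rather than from this article; verify before relying on them.
L40S_FP32_TFLOPS = 91.6
A100_FP32_TFLOPS = 19.5


def speedup(new_tflops: float, old_tflops: float) -> float:
    """Ratio of peak throughput between two GPUs."""
    return new_tflops / old_tflops


ratio = speedup(L40S_FP32_TFLOPS, A100_FP32_TFLOPS)
print(f"L40S vs A100 peak FP32: {ratio:.1f}x")
```

Peak throughput ratios like this describe theoretical limits; real application speedups depend on memory bandwidth, precision, and how well the workload maps to the hardware.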

ThinkSystem SR675 V3 Configurations

Lenovo is strategically positioned to support the L40S with its new lineup of servers optimized for the new GPUs. By combining fully simulated digital twins with generative AI, Lenovo aims to improve its business processes and design outcomes. The collaboration between Lenovo and NVIDIA on the NVIDIA OVX system facilitates the construction and operation of virtual worlds, extending the system’s capabilities to generative AI and providing powerful performance for data centers with AI workloads.

The ThinkSystem SR675 V3 offers three server configurations in one 3U footprint. It supports an NVIDIA HGX H100 4-GPU system with NVLink and Lenovo Neptune hybrid liquid cooling, as well as 4- or 8-GPU configurations that feature NVIDIA L40S, NVIDIA H100 80GB, or NVIDIA H100 NVL GPUs.

Lenovo sees these developments as a significant leap in simplifying AI rollout, making it accessible to organizations of all sizes, and driving transformative intelligence across all industries.

ThinkSystem SR675 V3 Configurations: Base, Dense, and HGX Modules

In the realm of AI/HPC server configurations, understanding the trade-offs and benefits of different configurations is crucial for optimization and specific workload requirements. The Lenovo ThinkSystem SR675 V3 offers flexibility with its Base, Dense, and HGX module configurations, each designed for particular needs.

Base Module

The Base Module configuration caters to organizations looking for a balanced blend of GPU support and storage capability. It can accommodate up to 4 double-wide, full-height, full-length (FHFL) GPUs, utilizing PCIe Gen5 x16 connections. This offers decent parallel processing capabilities for AI and deep learning workloads.

Storage-wise, the Base Module supports up to 8x 2.5” Hot Swap SAS/SATA/NVMe drives, providing a balance between storage volume and speed.

Dense Module

For organizations that prioritize parallel GPU processing, the Dense Module can support up to 8 double-wide, full-height, full-length GPUs. Each GPU uses PCIe Gen5 x16 on a PCIe switch, thereby maximizing the number of GPUs on a single server for enhanced parallel processing.

When it comes to storage, the Dense Module is versatile. It supports up to 6x EDSFF E1.S NVMe SSDs or up to 4x EDSFF E3.S 1T NVMe HS SSDs. This configuration is ideal for scenarios where intense data processing tasks require more GPUs at the cost of some storage flexibility.

HGX Module

The HGX Module configuration is specialized, targeting high-performance needs with specific GPU requirements. It leverages NVIDIA HGX H100 with 4 NVLink-connected SXM5 GPUs. These SXM5 GPUs might limit the overall number you can fit in the chassis, but they offer superior chip performance, making them ideal for demanding AI and deep learning tasks.

In terms of storage, the HGX Module is optimized for speed. It can house up to 4x 2.5” Hot Swap NVMe SSDs or up to 4x EDSFF E3.S 1T NVMe HS SSDs.
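The three module descriptions can be summarized as a small lookup table. The sketch below is only an illustration of the GPU and storage limits quoted in this article; the class, field, and function names are hypothetical and do not correspond to any Lenovo configurator or API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModuleConfig:
    name: str
    max_gpus: int
    gpu_form_factor: str              # "PCIe" or "SXM5"
    storage_options: tuple[str, ...]  # alternatives, as listed in the article


# Values transcribed from the module descriptions in this article.
MODULES = (
    ModuleConfig("Base", 4, "PCIe",
                 ("8x 2.5-inch hot-swap SAS/SATA/NVMe",)),
    ModuleConfig("Dense", 8, "PCIe",
                 ("6x EDSFF E1.S NVMe", "4x EDSFF E3.S 1T NVMe")),
    ModuleConfig("HGX", 4, "SXM5",
                 ("4x 2.5-inch hot-swap NVMe", "4x EDSFF E3.S 1T NVMe")),
)


def candidate_modules(gpu_count: int, form_factor: str) -> list[str]:
    """Names of modules that can hold gpu_count GPUs of the given form factor."""
    return [m.name for m in MODULES
            if m.gpu_form_factor == form_factor and gpu_count <= m.max_gpus]


print(candidate_modules(8, "PCIe"))  # ['Dense']
print(candidate_modules(4, "PCIe"))  # ['Base', 'Dense']
print(candidate_modules(4, "SXM5"))  # ['HGX']
```

A filter like this only narrows the options by GPU count and form factor. Choosing between the Base and Dense modules for a 4-GPU PCIe build would still come down to the storage layout each one offers.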

Closing Thoughts

Each module configuration of the ThinkSystem SR675 V3 has its unique strengths. While the SXM5 GPUs in the HGX Module offer unparalleled performance, organizations requiring more parallel processing can opt for the Dense Module to fit more PCIe GPUs. However, this comes at the cost of a lower TDP per GPU and potentially lower per-GPU performance. The Base Module provides a middle ground, offering a balance of GPU support and storage options. As always, the best choice depends on the specific requirements and constraints of the task at hand, and we would never kick any of these configurations out of our lab.
