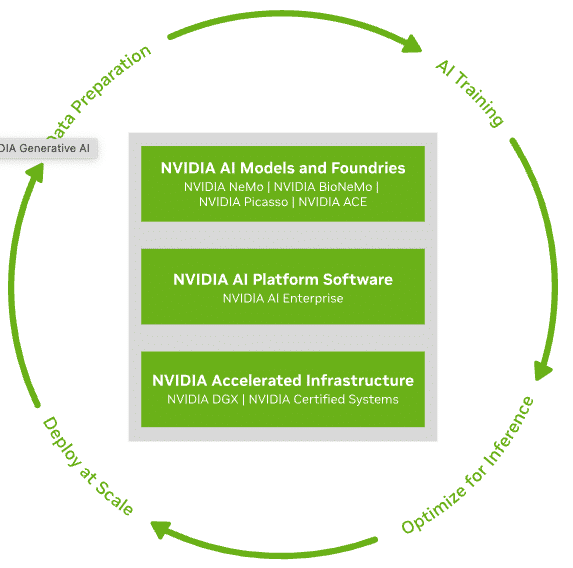

It’s been a busy week for NVIDIA, and the company isn’t done yet. NVIDIA is rolling out an AI foundry service on Microsoft Azure, giving businesses a new way to build custom generative AI models.

Here’s what NVIDIA’s AI foundry service is bringing to the table:

- NVIDIA AI Foundation Models: These are your AI building blocks.

- NVIDIA NeMo Framework and Tools: The toolkit for fine-tuning your AI.

- NVIDIA DGX Cloud AI Supercomputing Services: The computing power that turns your AI ideas into working models.

Together, these elements let businesses build their own AI models for uses such as intelligent search, summarization, and content generation, and then deploy them with NVIDIA AI Enterprise software.

Customized Models for Generative AI-powered Applications

NVIDIA’s AI foundry service can customize models for generative AI-powered applications across industries, including enterprise software, telecommunications, and media. When customized models are ready to deploy, enterprises can use a technique called retrieval-augmented generation (RAG) to connect their models with their enterprise data and access new insights.
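
To make the RAG idea concrete, here is a minimal sketch of the retrieve-then-augment step: score stored documents against a question, then prepend the best matches to the prompt that goes to the model. It is not NVIDIA’s implementation. The bag-of-words cosine similarity stands in for the embedding model and vector database a production system would use, and the documents and helper names are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words term counts (a stand-in for real embeddings)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = tokenize(query)
    ranked = sorted(docs, key=lambda d: cosine(q, tokenize(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, docs: list[str], k: int = 2) -> str:
    """Augment the user's question with retrieved enterprise context."""
    context = "\n".join(f"- {d}" for d in retrieve(query, docs, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Hypothetical enterprise documents used only for illustration.
DOCS = [
    "Refunds are processed within 14 days of receiving the returned item.",
    "Our support desk is open Monday to Friday, 9am to 5pm.",
    "Enterprise customers get a dedicated account manager.",
]

if __name__ == "__main__":
    # The resulting prompt would then be sent to the customized model.
    print(build_prompt("How long do refunds take?", DOCS, k=1))
```

The key design point is that the model itself is unchanged; fresh enterprise data reaches it only through the prompt, so updating the document store updates the answers without retraining.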

NVIDIA’s service isn’t just for show; it’s practical. Industry leaders SAP SE, Amdocs, and Getty Images are already building custom models with it. SAP is using the service for its AI copilot, Joule, and Amdocs is enhancing its amAIz framework for telecom AI solutions.

NVIDIA CEO Jensen Huang highlighted the service’s ability to tailor AI using a company’s unique data, while Microsoft CEO Satya Nadella emphasized pushing AI innovation on Azure with NVIDIA’s help.

Nemotron-3 8B

NVIDIA offers a variety of AI foundation models, including the versatile Nemotron-3 8B family, available in both the Azure AI model catalog and NVIDIA NGC. These models support multilingual use and a range of applications. Community models optimized for NVIDIA accelerated computing, such as Meta’s Llama 2 models, are also available on NVIDIA NGC and are coming to the Azure AI model catalog soon.

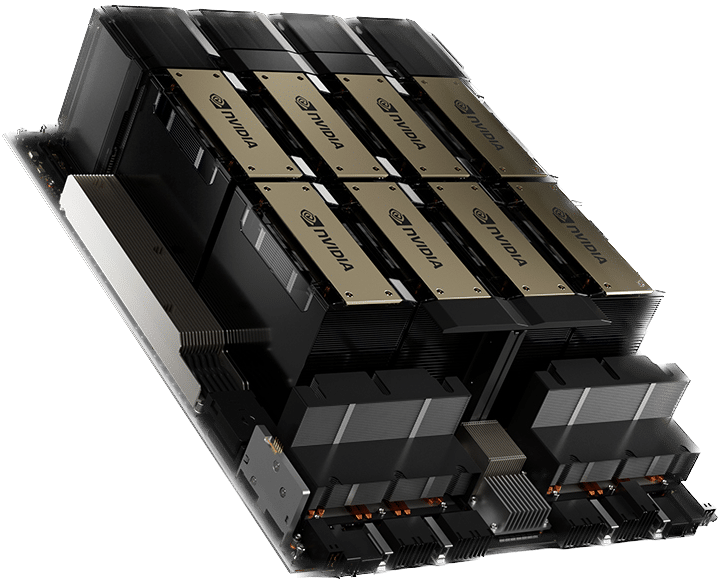

NVIDIA DGX Cloud Hits Azure Marketplace

NVIDIA DGX Cloud AI supercomputing is now available on Azure Marketplace. Customers can rent instances that scale to thousands of NVIDIA Tensor Core GPUs, and the service comes with NVIDIA AI Enterprise software, including NeMo, to speed LLM customization.

NVIDIA AI Enterprise is also integrated into Azure Machine Learning, giving users a stable, secure platform for AI development and deployment, and it is available separately on Azure Marketplace as well.

NVIDIA’s AI foundry service on Azure isn’t just an update; it’s an innovative approach to custom AI development.

NVIDIA and Microsoft Amp Up Azure with Advanced AI Capabilities

Microsoft is upping its AI game on Azure with new H100-based virtual machines and plans to add the NVIDIA H200 Tensor Core GPU. Announced at Microsoft Ignite, the new NC H100 v5 VM series is billed as the industry’s first cloud instances featuring NVIDIA H100 NVL GPUs. The H100 NVL pairs two PCIe-based H100 GPUs connected by NVIDIA NVLink, delivering nearly 4 petaflops of AI compute and 188GB of high-speed HBM3 memory. According to NVIDIA, it offers up to 12x the performance of the previous generation on GPT-3 175B, making it well suited to inference and mainstream training workloads.

Integrating NVIDIA H200 Tensor Core GPU Into Azure

Microsoft plans to add the NVIDIA H200 Tensor Core GPU to Azure next year. The upgrade is designed to serve larger models without increasing latency, which makes it well suited to LLMs and other generative AI models. The H200 stands out with 141GB of HBM3e memory and 4.8 TB/s of peak memory bandwidth, substantially more memory and bandwidth than the H100.
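
Why does memory bandwidth matter so much for latency? A common back-of-the-envelope argument: when an LLM generates text one token at a time at batch size 1, every weight must be read from memory for each new token, so bandwidth $B$ sets a floor on per-token time given $W$ bytes of weights. The sketch below assumes a hypothetical 70-billion-parameter model stored at 2 bytes per parameter (about 140 GB of weights) and ignores KV-cache reads and compute time, so it is a rough upper bound on speed, not an NVIDIA figure.

$$
t_{\text{token}} \;\gtrsim\; \frac{W}{B} = \frac{140\ \text{GB}}{4.8\ \text{TB/s}} = \frac{140}{4800}\ \text{s} \approx 29\ \text{ms}
\quad\Longrightarrow\quad \text{at most} \approx 34\ \text{tokens/s}
$$

Under this model, raising bandwidth lowers the latency floor proportionally, which is why a higher-bandwidth GPU can host larger models without a latency penalty.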

Microsoft is also expanding its NVIDIA-accelerated offerings with the NCC H100 v5, a new confidential VM. These Azure confidential VMs, equipped with NVIDIA H100 Tensor Core GPUs, help protect the confidentiality and integrity of data and applications while they are in use. Combining this security with the H100’s acceleration, the NCC H100 v5 VMs will soon be available in private preview.

These advancements by NVIDIA and Microsoft mark a significant leap in Azure’s cloud computing and AI capabilities, offering unprecedented power and security for demanding AI workloads.

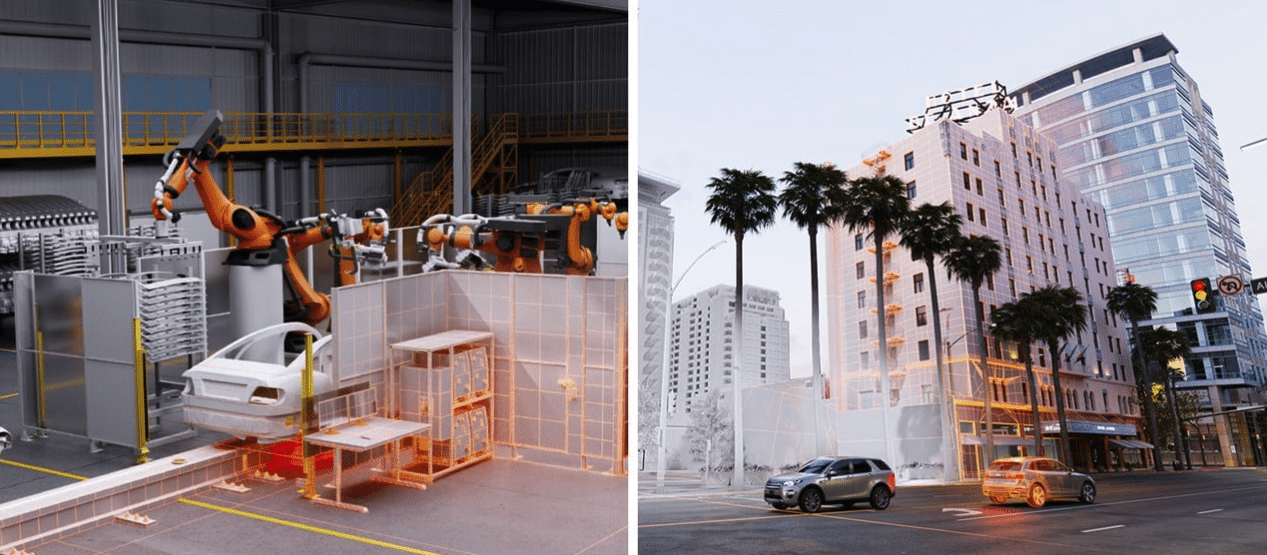

NVIDIA Boosts Automotive Digitalization with New Simulation Engines on Omniverse Cloud

NVIDIA is stepping up its game in the automotive industry by introducing two new simulation engines on Omniverse Cloud: the virtual factory simulation engine and the autonomous vehicle (AV) simulation engine. Hosted on Microsoft Azure, Omniverse Cloud is transforming how automotive companies handle their product lifecycle, shifting from physical, manual processes to software-driven, AI-enhanced digital systems.

Virtual Factory Simulation Engine: A Game-Changer for Automakers

This engine is a toolkit that lets factory planning teams connect and collaborate on large-scale industrial datasets in real time. Design teams can build virtual factories and share their work seamlessly. Planning factories virtually first can improve production quality and throughput, and it saves significant time and money by avoiding changes after construction.

Key features include compatibility with existing software like Autodesk Factory Planning and Siemens’ NX, enhancing collaboration across various platforms. T-Systems and SoftServe already leverage this engine to develop custom virtual factory applications.

AV Simulation Engine: Revolutionizing Autonomous Vehicle Development

The AV simulation engine is designed to deliver high-fidelity sensor simulation for developing next-generation AV architectures. It lets developers test autonomous systems in a virtual environment, exercising layers of the vehicle stack such as perception, planning, and control.

Because these advanced, unified AV architectures depend on high-quality sensor data, NVIDIA integrates its DRIVE Sim and Isaac Sim sensor simulation pipelines into the engine, providing realistic simulation of cameras, radars, lidars, and other sensors.

Accelerating Digital Transformation

The virtual factory simulation engine is now available on Azure Marketplace, offering NVIDIA OVX systems and managed Omniverse software. The AV simulation engine is expected to follow soon. Enterprises can also deploy Omniverse Enterprise on optimized Azure virtual machines, further streamlining digitalization in the automotive sector.

NVIDIA’s new simulation engines on Omniverse Cloud mark a significant step in automotive digitalization, offering powerful tools for virtual factory planning and AV development.

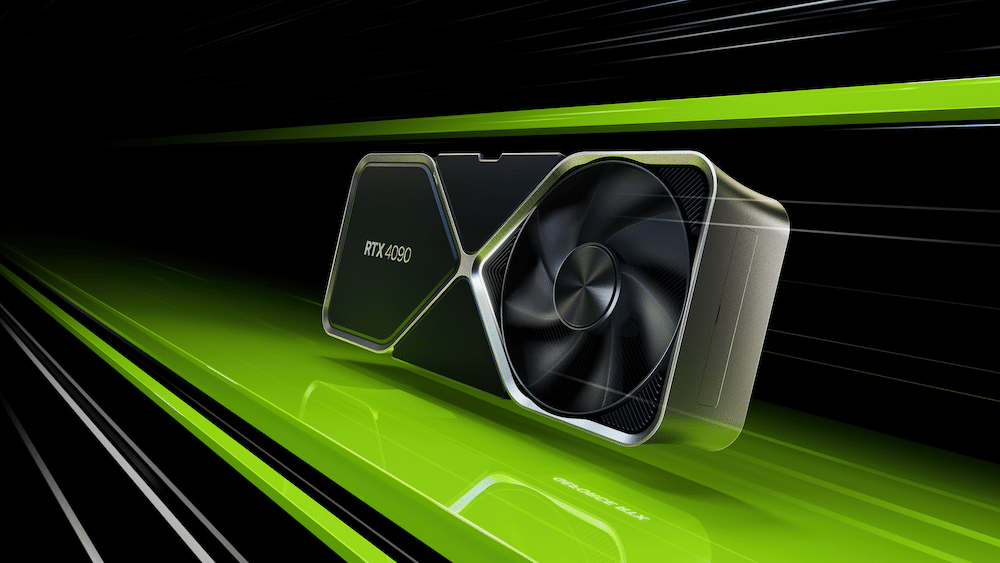

AI Revolution Hits Windows 11: NVIDIA’s Big Leap with RTX GPUs

NVIDIA is reshaping the Windows 11 landscape, using the power of RTX GPUs to bring new capabilities to gamers, creators, and everyday PC users. With more than 100 million Windows PCs already equipped with RTX GPUs, NVIDIA’s latest innovations are poised to boost productivity and creativity.

NVIDIA is also updating TensorRT-LLM, its open-source software for accelerating AI inference. The update extends support to new large language models and makes demanding AI workloads more accessible on desktops and laptops with RTX GPUs that have at least 8GB of VRAM. TensorRT-LLM for Windows will also soon be compatible with OpenAI’s Chat API, so many developer projects and applications will be able to run locally on RTX PCs instead of in the cloud, letting users keep sensitive data on their Windows 11 PCs.
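
Chat API compatibility matters because applications already speak a fixed request format: a JSON body with a `model` name and a list of `messages`, each with a `role` and `content`. The sketch below builds such a request using only the Python standard library. The local URL, port, and model name are hypothetical placeholders; the actual address and model identifiers of NVIDIA’s local endpoint are not given here and may differ.

```python
import json
import urllib.request

# Hypothetical address of a local, OpenAI-compatible chat endpoint.
# The real host, port, and path exposed on an RTX PC may differ.
LOCAL_URL = "http://localhost:8000/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "local-llm") -> urllib.request.Request:
    """Build a Chat Completions-style POST request aimed at a local server."""
    payload = {
        "model": model,  # hypothetical model identifier
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": prompt},
        ],
        "temperature": 0.2,
    }
    return urllib.request.Request(
        LOCAL_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request and return the first reply's text.

    Requires a compatible server to actually be running at LOCAL_URL.
    """
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Summarize this contract clause.")
    # Print the target without sending, so this runs with no server present.
    print(req.get_method(), req.full_url)
```

Because the payload has the same shape a cloud client would send, moving an existing app to local inference should, in principle, mostly come down to changing the base URL, which is how the sensitive prompt text can stay on the PC.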

NVIDIA is also introducing AI Workbench, a unified toolkit designed to streamline AI development on PCs and workstations. It simplifies creating, testing, and customizing pretrained generative AI models and LLMs, helping developers manage their AI projects and tailor models to specific use cases.

NVIDIA and Microsoft are also releasing DirectML enhancements that accelerate popular foundation models such as Llama 2. The collaboration gives developers more options for cross-vendor deployment while improving performance.

The upcoming TensorRT-LLM v0.6.0 release promises up to 5x faster inference and adds support for more popular LLMs, including Mistral 7B and Nemotron-3 8B. Versions of these models will run on GeForce RTX 30 and 40 Series GPUs with 8GB of memory or more, bringing fast local LLM capabilities to even some of the most portable Windows devices.
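
A quick way to see why the 8GB figure is significant is to estimate how much memory a model’s weights need at different numeric precisions. The sketch below is a weights-only estimate: it assumes a fixed 1.5GB allowance (a hypothetical figure) for the KV cache, activations, and runtime overhead, and the article does not state which precision TensorRT-LLM uses for these models, so the 4-bit case is an assumption, not a description of NVIDIA’s builds.

```python
def weight_memory_gb(params_billion: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes."""
    bytes_total = params_billion * 1e9 * bits_per_param / 8
    return bytes_total / 1e9


def fits(
    params_billion: float,
    bits_per_param: int,
    vram_gb: float,
    headroom_gb: float = 1.5,  # hypothetical allowance for KV cache and runtime
) -> bool:
    """True if the weights plus the assumed headroom fit in the given VRAM."""
    return weight_memory_gb(params_billion, bits_per_param) + headroom_gb <= vram_gb


if __name__ == "__main__":
    # Nominal sizes for 7B- and 8B-class models; exact parameter counts vary.
    for name, params in [("7B model", 7.0), ("8B model", 8.0)]:
        for bits in (16, 8, 4):
            gb = weight_memory_gb(params, bits)
            print(f"{name} @ {bits}-bit: {gb:.1f} GB of weights, "
                  f"fits in 8 GB: {fits(params, bits, 8.0)}")
```

At 16-bit precision a 7B model needs about 14GB for its weights alone, well beyond an 8GB card; reduced-precision formats are what bring models of this size within reach of mainstream RTX GPUs under these assumptions.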

The new TensorRT-LLM release and optimized models will be available on NVIDIA’s GitHub repository and at ngc.nvidia.com.

With these innovations, NVIDIA is not just enhancing the PC experience for its vast user base; it’s paving the way for a new era in AI-enhanced computing on Windows 11, marking a pivotal moment in the intersection of technology and everyday life.
