NVIDIA has made a splash in artificial intelligence (AI) and high-performance computing with its latest unveiling, the NVIDIA GH200 Grace Hopper Superchip. The new chip posted outstanding results in the MLPerf benchmarks, demonstrating NVIDIA’s strength in both cloud and edge AI.

A Superchip that Speaks Volumes

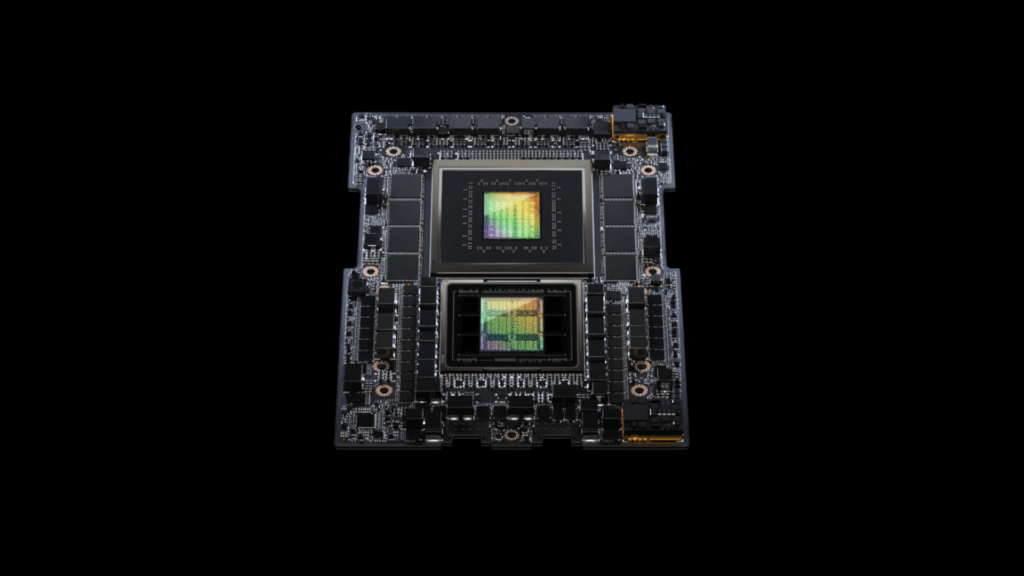

The GH200 Superchip is no ordinary chip. It combines a Hopper GPU with a Grace CPU, providing more memory and bandwidth along with the ability to automatically shift power between the CPU and GPU for peak performance. This integration lets the chip balance power against performance, so AI applications get the resources they need when they need them.

Exceptional MLPerf Results

MLPerf benchmarks are a respected industry standard, and NVIDIA’s GH200 didn’t disappoint. Not only did the superchip run all the data center inference tests, but it also showcased the versatility of NVIDIA’s AI platform, extending its scope from cloud operations to the edges of the network.

NVIDIA’s H100 GPUs performed strongly as well. HGX H100 systems, each equipped with eight H100 GPUs, showed superior throughput across all the MLPerf Inference tests. This highlights the capabilities of the H100 GPUs for tasks such as computer vision, speech recognition, medical imaging, recommendation systems, and large language models (LLMs).

TensorRT-LLM: Amplifying Inference Performance

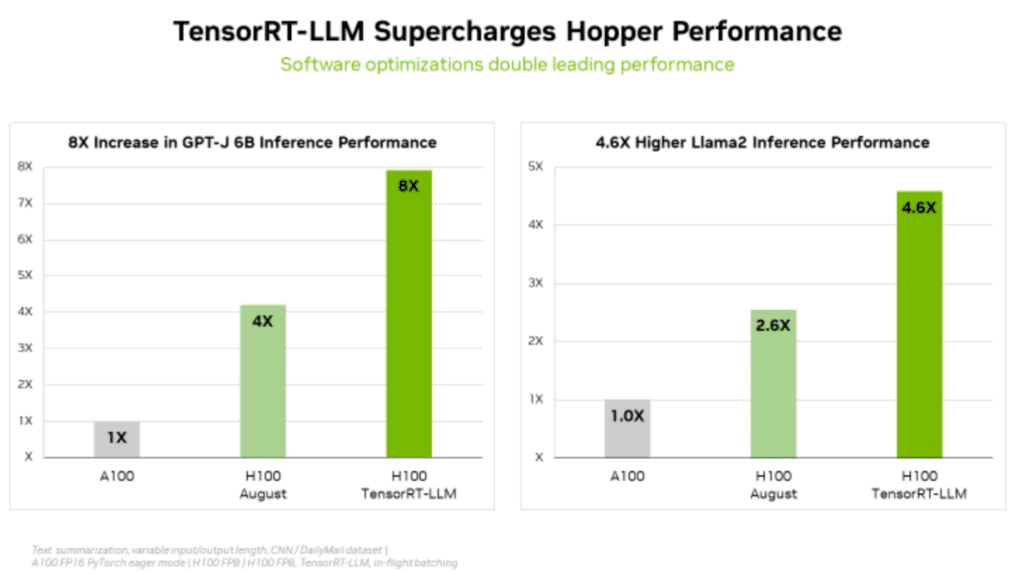

NVIDIA has long been at the forefront of continuous innovation, and TensorRT-LLM is a testament to that. TensorRT-LLM is generative AI software, available as an open-source library, that accelerates inference. Although it wasn’t submitted to MLPerf in time for the August evaluation, it holds promise, letting users boost the performance of their H100 GPUs at no additional cost. Partners such as Meta, Cohere, and Grammarly have benefited from NVIDIA’s work on LLM inference, underscoring the importance of such software developments in AI.

L4 GPUs: Bridging Mainstream Servers and Performance

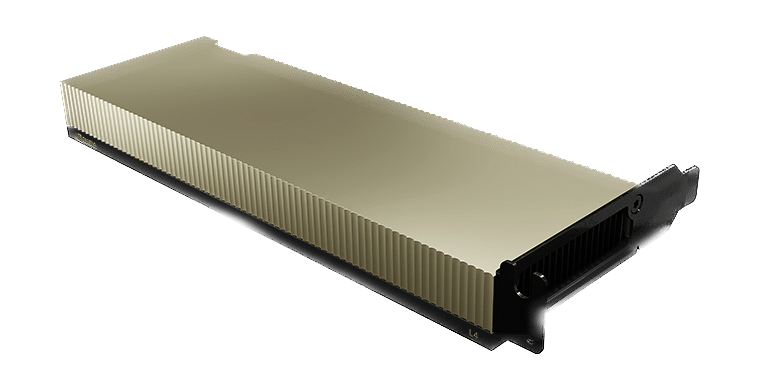

In the latest MLPerf benchmarks, the L4 GPUs performed well across a wide range of workloads. Packaged in compact accelerators, they delivered up to six times the performance of CPUs with higher power ratings. Dedicated media engines, working together with CUDA software, give the L4 GPU an edge, especially in computer vision tasks.

Pushing the Boundaries: Edge Computing and More

The advances are not limited to cloud computing. NVIDIA’s focus on edge computing is evident in the Jetson Orin system-on-module, which showed object detection performance gains of up to 84% compared to its previous versions.
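To put a percentage gain like this in perspective, it helps to convert it into a speedup factor. The short Python sketch below does that arithmetic. The function names and the baseline throughput of 100 samples per second are hypothetical and chosen only for illustration; only the 84% upper bound comes from the results quoted above.

```python
def speedup_factor(percent_gain: float) -> float:
    """Convert a percentage improvement into a multiplicative speedup."""
    return 1.0 + percent_gain / 100.0


def projected_throughput(baseline: float, percent_gain: float) -> float:
    """Throughput after applying a percentage gain to a baseline value."""
    return baseline * speedup_factor(percent_gain)


if __name__ == "__main__":
    # 84 is the "up to" figure from the article. The baseline of 100
    # samples/s is a made-up number, not a measured MLPerf result.
    print(f"speedup: {speedup_factor(84):.2f}x")
    print(f"throughput: {projected_throughput(100.0, 84):.1f} samples/s")
```

In other words, an 84% gain means the module does a little under twice the work in the same time, not 84 times as much.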

MLPerf: A Transparent Benchmarking Standard

MLPerf remains an objective benchmark that users around the world rely on when making purchasing decisions. The participation of cloud service giants like Microsoft Azure and Oracle Cloud Infrastructure, along with renowned system manufacturers like Dell, Lenovo, and Supermicro, underlines the significance of MLPerf in the industry.

In conclusion, NVIDIA’s recent performance in the MLPerf benchmarks reinforces its leadership position in the AI sector. With a broad ecosystem, continuous software innovation, and a commitment to delivering high-quality performance, NVIDIA is indeed shaping the future of AI.

For a more in-depth technical dive into NVIDIA’s accomplishments, refer to the linked technical blog. Those keen on replicating NVIDIA’s benchmarking success can access the software from the MLPerf repository and the NVIDIA NGC software hub.
