NVIDIA Hopper architecture took center stage during NVIDIA GTC with the focus to power the next wave of AI data centers. Named for Grace Hopper, a pioneering U.S. computer scientist, the next-generation accelerated computing platform delivers an order of magnitude performance over its predecessor, NVIDIA Ampere.

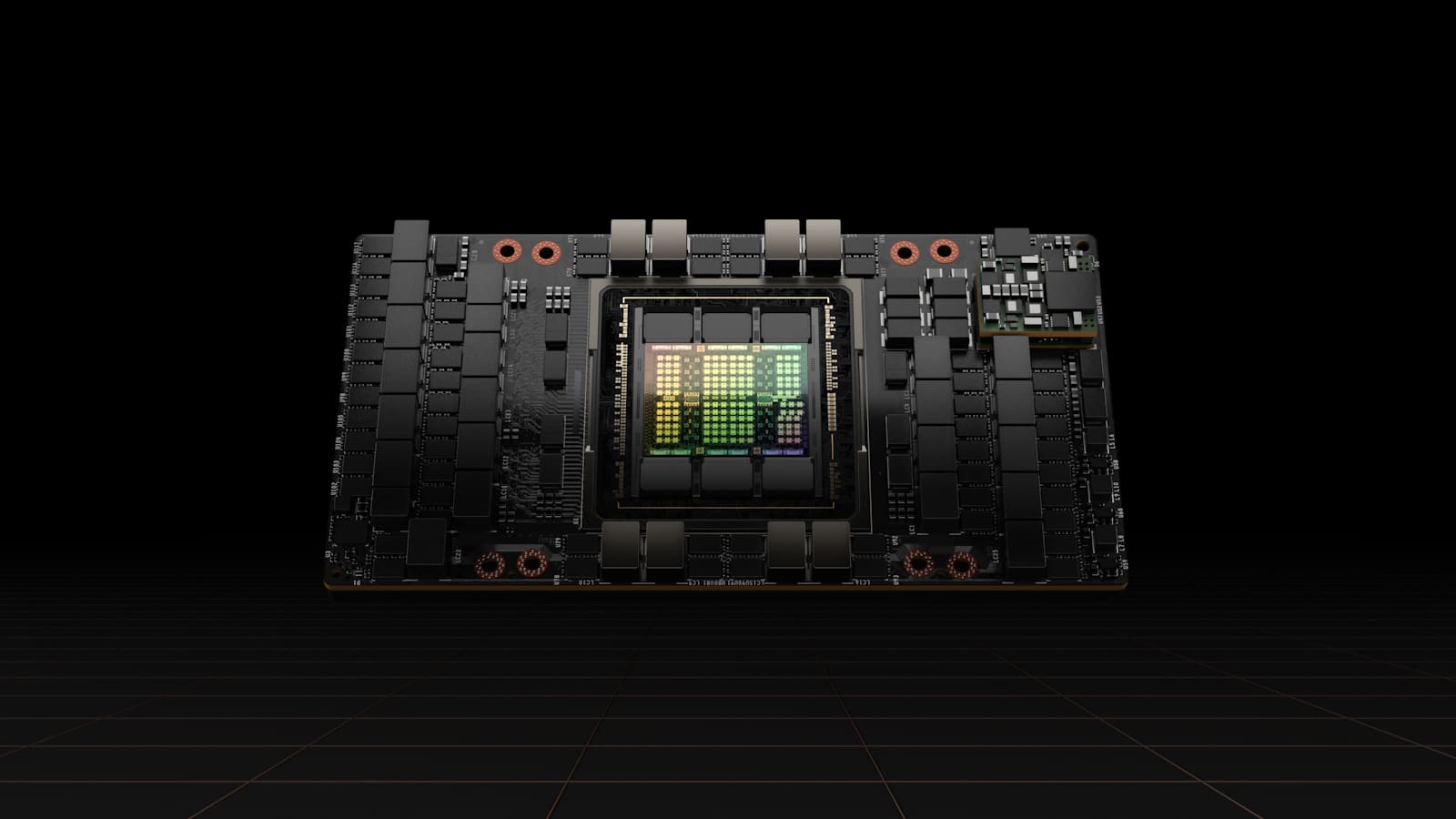

NVIDIA also announced its first Hopper-based GPU, the NVIDIA H100, packed with 80 billion transistors. Touted as the world’s largest and most powerful accelerator, the H100 features a Transformer Engine and a highly scalable NVIDIA NVLink interconnect for advancing gigantic AI language models, deep recommender systems, genomics, and complex digital twins.

“Data centers are becoming AI factories — processing and refining mountains of data to produce intelligence,” said Jensen Huang, founder and CEO of NVIDIA. “NVIDIA H100 is the engine of the world’s AI infrastructure that enterprises use to accelerate their AI-driven businesses.”

H100 Technology

Built using a cutting-edge TSMC 4N process designed for NVIDIA’s accelerated compute needs, H100 features significant advances to accelerate AI, HPC, memory bandwidth, interconnect, and communication, including nearly 5TB/s external connectivity. The Hopper H100 is the first GPU to support PCIe Gen5 and utilize HBM3, (High Bandwidth Memory 3) enabling 3TB/s of memory bandwidth. Twenty H100 GPUs can sustain the equivalent of the entire world’s internet traffic, making it possible for customers to deliver advanced recommender systems and large language models running inference on data in real-time.

The choice for natural language processing, the Transformer Engine is one of the most important deep learning models ever invented. The H100 accelerator’s Transformer Engine is built to speed up these networks as much as 6x versus the previous generation without losing accuracy.

With Multi-Instance GPU (MIG) technology, the Hopper architecture allows a single GPU to be partitioned into seven smaller, fully isolated instances to handle different types of jobs. By extending MIG capabilities by up to 7x over the previous generation, the Hopper architecture offers secure multi-tenant configurations in cloud environments across each GPU instance.

The H100 is the world’s first accelerator with confidential computing capabilities to protect AI models and customer data during processing. Customers can also apply confidential computing to federated learning for privacy-sensitive industries like healthcare, financial services, and shared cloud infrastructures.

The 4th-Generation NVIDIA NVLink combines with a new external NVLink Switch extending it as a scale-up network beyond the server, connecting up to 256 H100 GPUs at 9x higher bandwidth versus the previous generation using NVIDIA HDR Quantum InfiniBand.

NVIDIA H100 can be deployed in virtually all data centers, including on-premises, cloud, hybrid-cloud, and edge, and is expected to be available later this year.

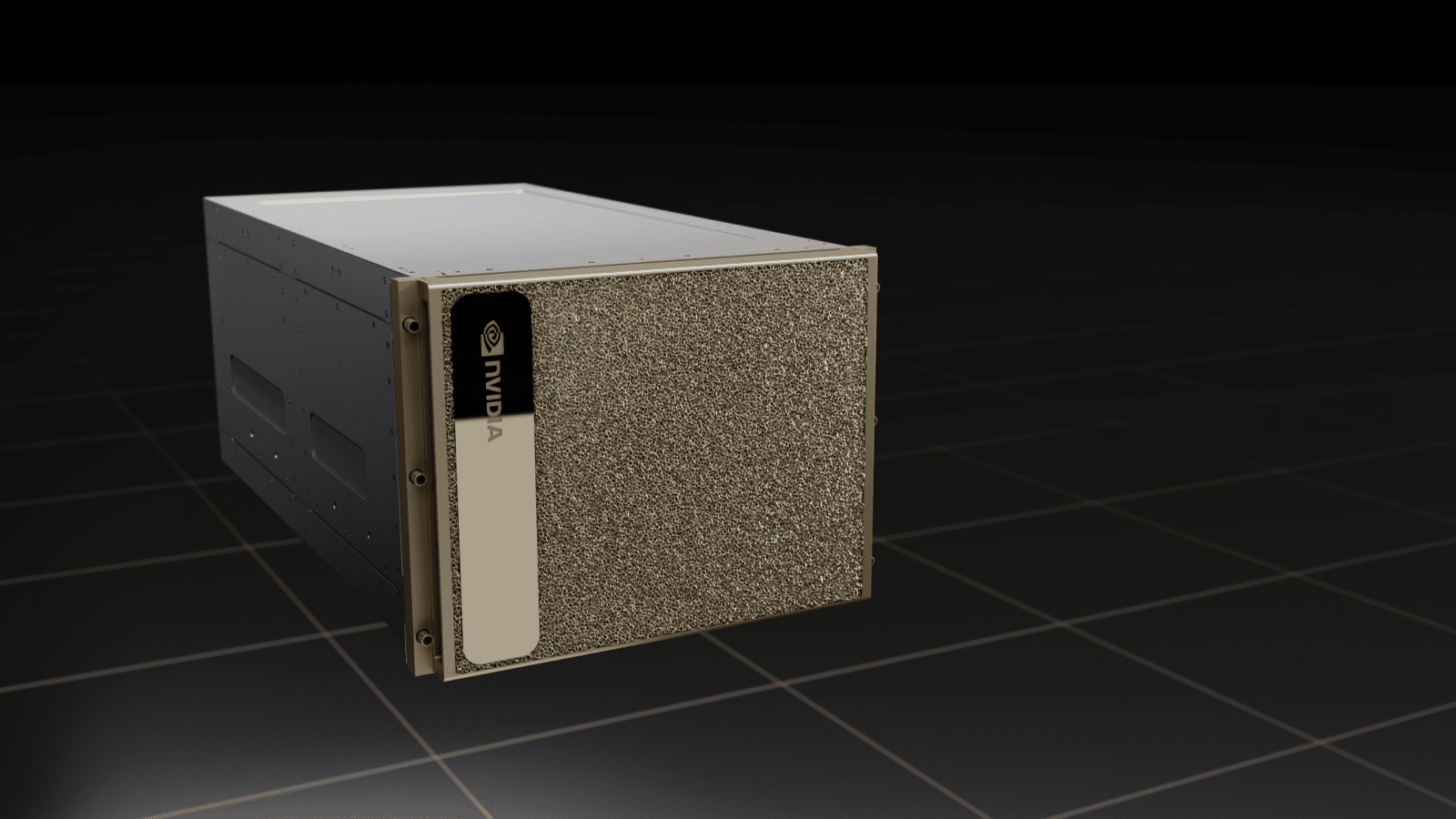

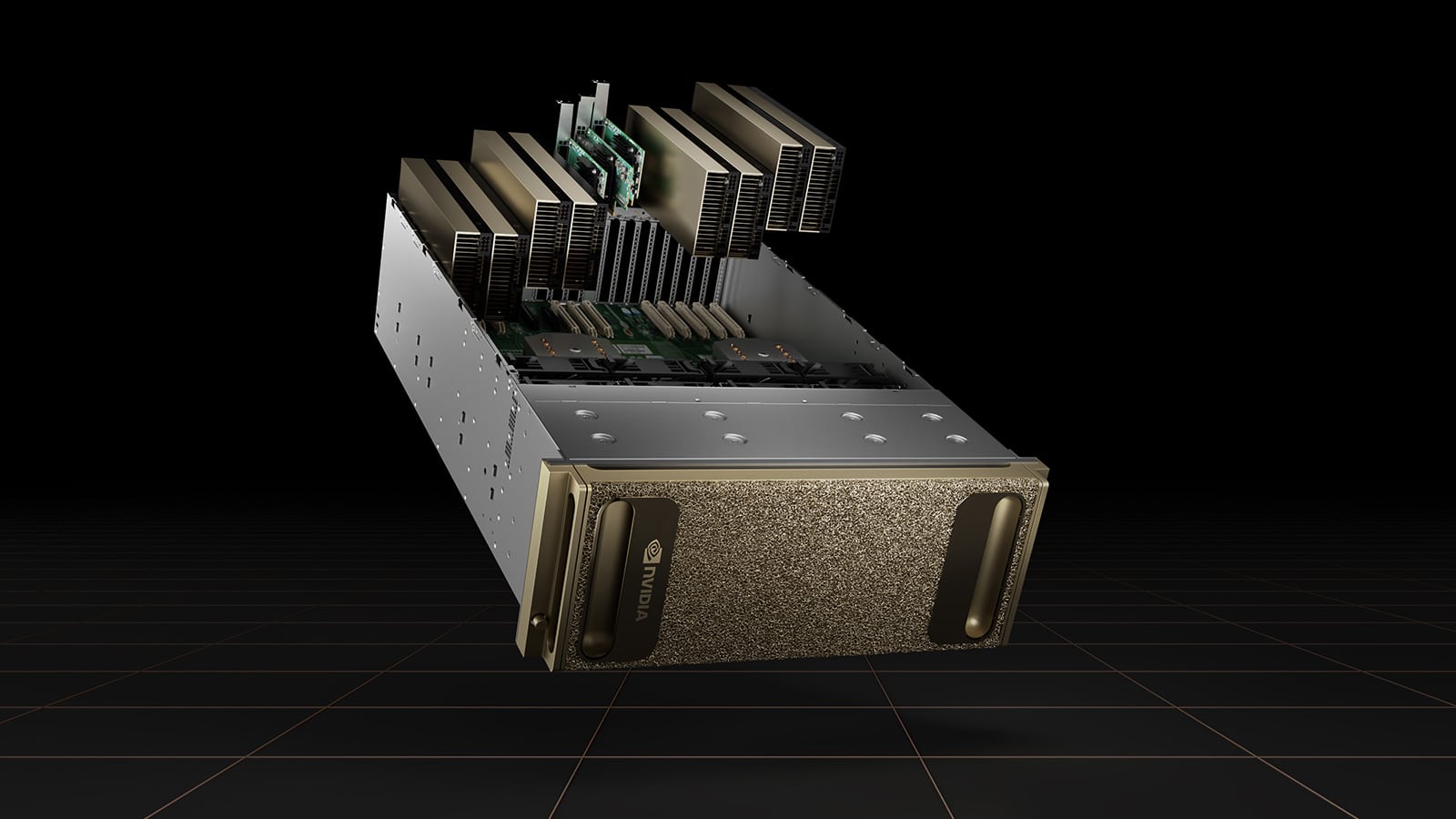

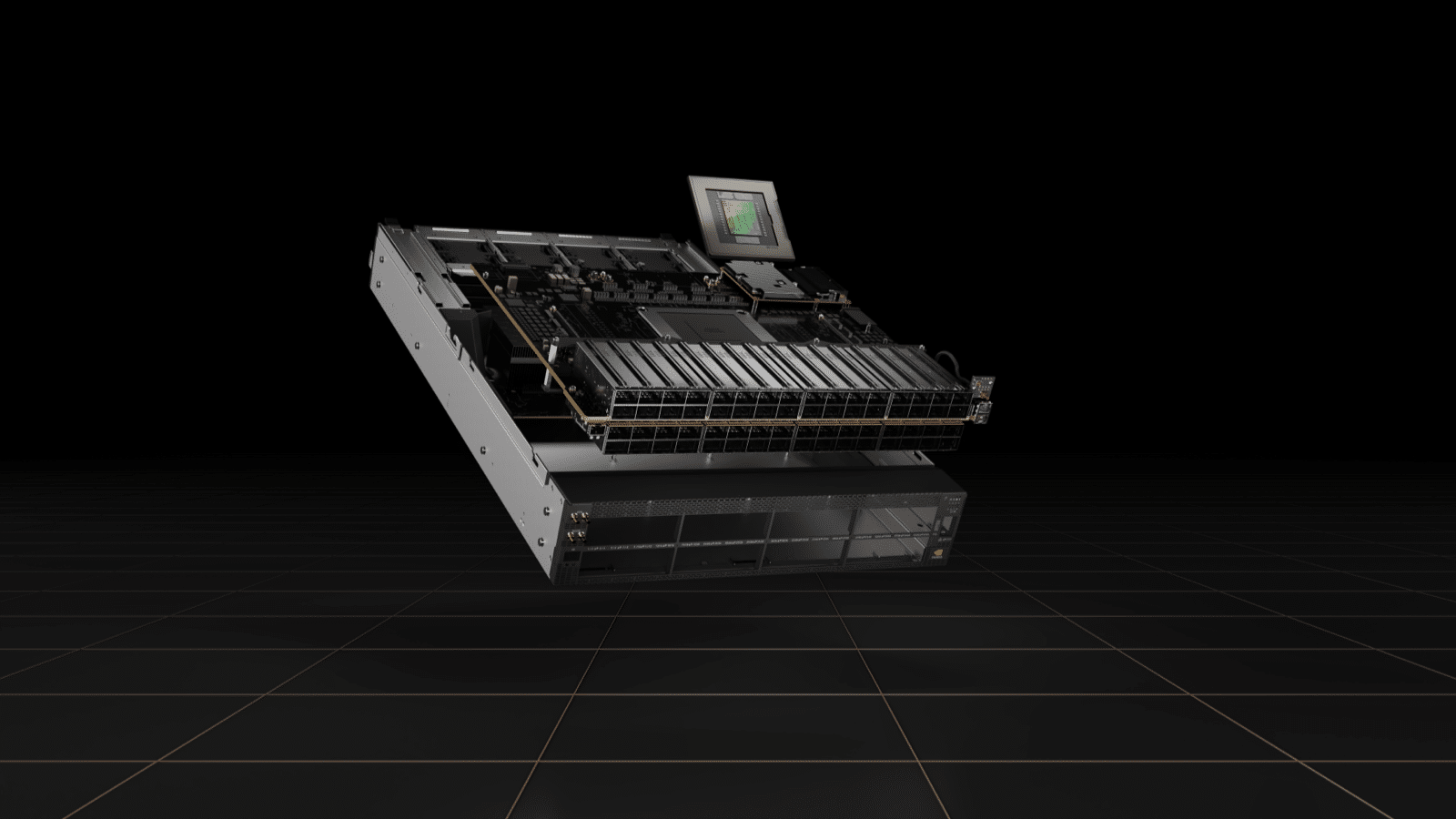

DGX H100 System

NVIDIA announced the fourth-generation DGX system, DGX H100, featuring eight H100 GPUs delivering 32 petaflops of AI performance at new FP8 precision, providing the scale to meet the massive compute requirements of large language models, recommender systems, healthcare research, and climate science.

Each DGX H100 system packs eight H100 GPUs, connected as one by fourth-generation NVLink, delivering 900GB/s connectivity, an increase of 1.5x more than the prior generation. NVIDIA’s NVLink is a low-latency, lossless GPU-to-GPU interconnect that includes resiliency features, such as link-level error detection and packet replay mechanisms to guarantee successful data delivery.

In addition to the fourth-generation NVLink, the H100 also introduces the new NVLink Network interconnect. This scalable version of NVLink enables GPU-to-GPU communication of up to 256 GPUs across multiple compute nodes. NVIDIA also introduced third-generation NVSwitch technology that includes switches both inside and outside nodes to connect multiple GPUs in servers, clusters, and data center environments. A node with the new NVSwitch provides 64 ports of NVLinks to accelerate multi-GPU connectivity, nearly doubling the total switch throughput from 7.2 Tbits/s to 13.6 Tbits/s. NVSwitch enables all eight of the H100 GPUs to connect over NVLink. An external NVLink Switch can network up to 32 DGX H100 nodes in the next-generation NVIDIA DGX SuperPOD supercomputers.

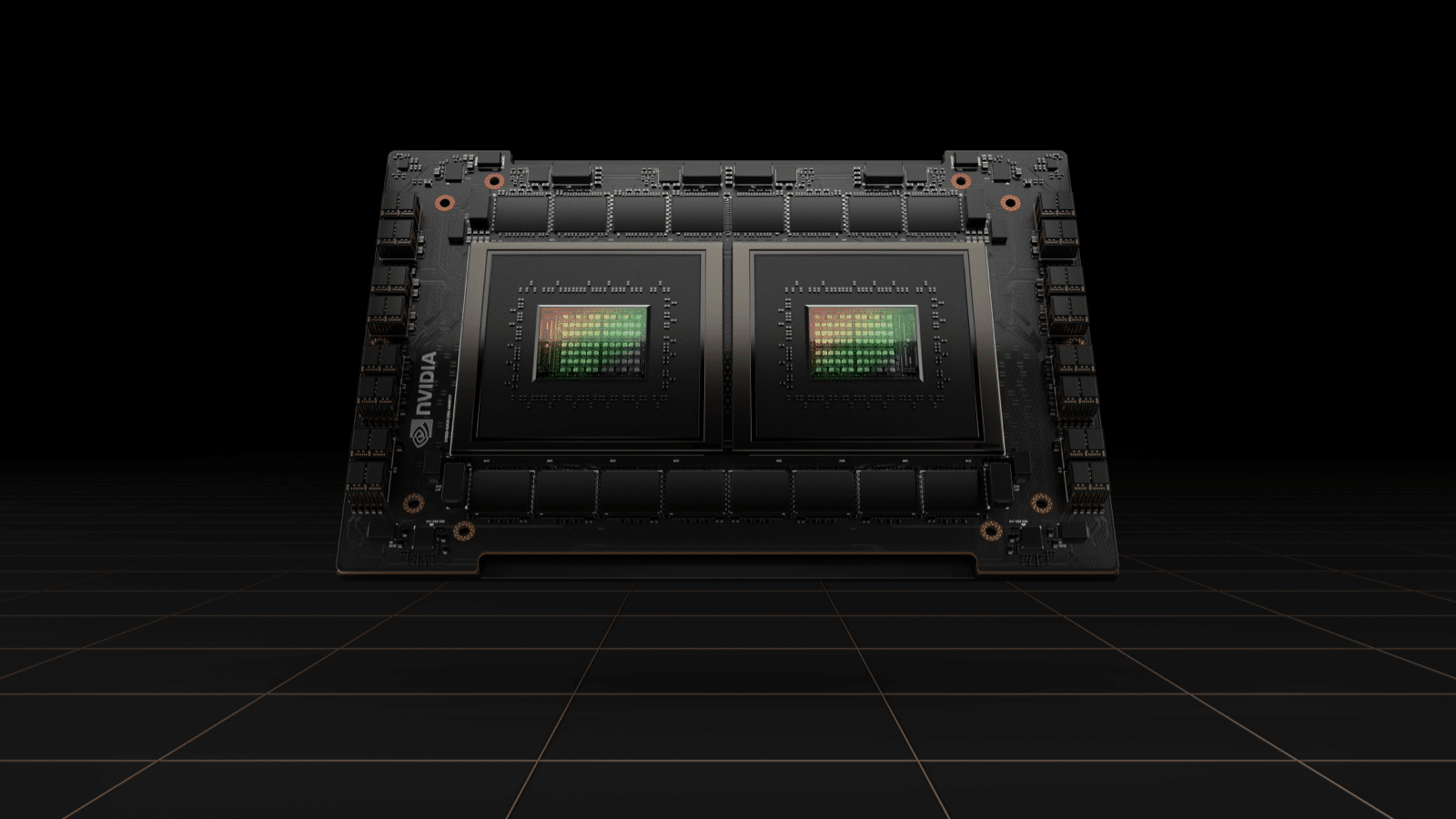

Grace CPU Superchip

NVIDIA announced Grace CPU Superchip, packed with 144 Arm cores in a single socket. This is the first Arm Neoverse-based discrete data center CPU designed for AI infrastructure and high-performance computing, delivering twice the memory bandwidth and energy efficiency.

The memory subsystem consists of LPDDR5x memory with Error Correction Code for balance of speed and power consumption. The LPDDR5x memory subsystem doubles the bandwidth of traditional DDR5 designs at 1 TB/s while consuming dramatically less power.

The Grace CPU Superchip will run all of NVIDIA’s computing software stacks, including NVIDIA RTX, NVIDIA HPC, NVIDIA AI, and Omniverse. The Grace CPU Superchip along with NVIDIA ConnectX-7 NICs offer the flexibility to be configured into servers as standalone CPU-only systems or as GPU-accelerated servers with one, two, four, or eight Hopper-based GPUs, allowing customers to optimize performance for their specific workloads while maintaining a single software stack.

Omniverse Computing System

Keeping with the data center focus, NVIDIA announced NVIDIA OVX, a computing system designed to power large-scale digital twins. A digital twin is a virtual world that’s connected to the physical world. NVIDIA OVX is designed to operate complex digital twin simulation that will run within NVIDIA Omniverse, a real-time physically accurate world simulation and 3D design collaboration platform.

Combining high-performance GPU-accelerated compute, graphics and AI with low-latency, high-speed storage access, the OVX system will provide the performance required for creating digital twins with real-world accuracy. OVX can simulate complex digital twins for modeling buildings, factories, cities, and the world.

The OVX server includes eight NVIDIA A40 GPUs, three ConnectX-6 DX 200Gbps NICs, 1TB memory and 16TB NVMe storage. The OVX system scales from a single pod of eight OVX servers to an OVX SuperPOD with 32 servers connected via NVIDIA Spectrum-3 switch or multiple OVX SuperPODs.

Jetson AGX Orin Developer Kit

NVIDIA also announced the availability of the Jetson AGX Orin Developer kit, a compact, energy-efficient AI supercomputer for advanced robotics, autonomous machines, and next-gen embedded and edge computing. The specs for the Jetson AGX Orin are impressive, delivering 275 trillion operations per second, over 8x the processing power of the previous model, while still maintaining a palm-sized form factor. Featuring the NVIDIA Ampere architecture GPU, Arm Cortex-A78AE CPUs, next-gen deep learning and vision accelerators, faster memory bandwidth, high-speed interfaces, and multimodal sensor, the Jetson AGX Orin can feed multiple, concurrent AI application pipelines.

Customers using Jetson AGX Orin can leverage the full NVIDIA CUDA-X accelerated computing stack, with 60 updates to its collection of libraries, tools, and technologies. They will also have full access to NVIDIA JetPack SDK, pre-trained models from the NVIDIA NGC catalog, and the latest frameworks and tools for application development and optimization, such as NVIDIA Isaac on Omniverse, NVIDIA Metropolis, and NVIDIA TAO Toolkit.

NVIDIA Spectrum-4

Staying focused on the data center, NVIDIA announced its NVIDIA Spectrum-4 Ethernet platform. The next-gen switch delivers 400Gbps end-to-end with 4x higher switching throughput than previous generations. The Spectrum-4 includes ConnectX-7 SmartNIC, BlueField-3 DPLU, and DOCA data center infrastructure software.

Built for AI, the Spectrum-4 switches allow nanosecond precision, accelerate, simplify, and secure the network fabric with 2x faster per-port bandwidth, 4x fewer switches, and 40 percent lower power consumption than previous generations. With 51.2 Tbps aggregate ASIC bandwidth support 128 ports of 400GBE, adaptive routing, and enhanced congestion control mechanisms, Spectrum-4 optimizes RDMA over Converged Ethernet fabrics, dramatically accelerating data centers.

Wrap up

NVIDIA’s GTC event was packed with new products updates to software, performance, and speed. Plenty of focus on the data center, but also addressing the autonomous mobile robot (AMR) audience and, of course, edge. The show’s highlight was the Hopper H100 GPU, but that one product tied in so many of the other announcements. NVIDIA has put all the press releases and blog highlights here, and it’s worth a look.

Amazon

Amazon