Generative AI applications create impressive text responses, but those responses are sometimes accurate and other times completely off-topic. These applications are powered by large language models (LLMs) capable of answering complex queries and creating works of art, literature, and more. It is critical to ensure that they generate text responses that are accurate, appropriate, on-topic, secure, and current.

NVIDIA has released open-source software to help developers keep those generative AI applications on track. NeMo Guardrails helps ensure that AI apps respond appropriately, accurately, and securely. The NeMo Guardrails software includes all the code, examples, and documentation businesses need to feel confident deploying AI apps that generate text.

Enterprise Implications

Enterprises have been hesitant to adopt LLMs because of concerns about safety, security, and the potential for these models to generate inappropriate or biased content. NeMo Guardrails is designed to mitigate those risks and help enterprises overcome their hesitation. As a toolkit for building safe and trustworthy LLM conversational systems, it lets developers define programmable rules that govern how an application interacts with users, helping LLM-based applications adhere to enterprise standards and policies.

The topical, safety, and security guardrails supported by NeMo Guardrails address several of the main concerns we have been hearing from enterprises: maintaining topical focus, preventing misinformation, and blocking inappropriate or malicious responses. By offering a security model for LLM-based applications and mitigating their risks, NeMo Guardrails gives enterprises greater confidence to adopt this powerful technology.

Hopefully, the release of NeMo Guardrails will serve as a catalyst for wider enterprise adoption of LLMs, bridging the gap between the capabilities of AI technology and the safety and security requirements of the business world.

Safe, On-Topic, Secure

Many industries are adopting LLMs as they roll out AI applications that will ultimately answer customer questions, summarize lengthy documents, write software, and accelerate drug design. With such a diverse audience, safety in generative AI is a significant concern, and NVIDIA NeMo Guardrails has been designed to work with all LLMs, including OpenAI’s ChatGPT, to help keep users safe.

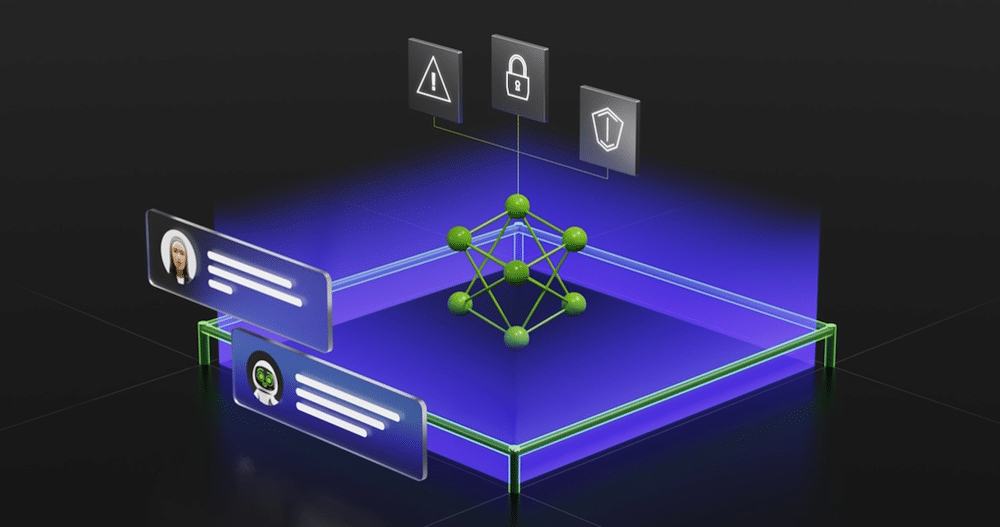

Developers can use NeMo Guardrails to set up three kinds of boundaries:

- Topical guardrails: Prevent apps from veering off course into undesired areas, such as keeping customer service assistants from answering questions about the weather.

- Safety guardrails: Ensure apps respond with accurate, appropriate information by filtering out unwanted language and ensuring that references are made only to credible sources.

- Security guardrails: Restrict apps to connecting only to external third-party applications known to be safe.
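To make the three categories concrete, here is a minimal Python sketch of how such checks might wrap a customer-service assistant. It is not the NeMo Guardrails API: the keyword sets, the allowlisted host `api.example-store.com`, and the functions `topical_check`, `safety_check`, `security_check`, and `guarded_reply` are hypothetical, and simple keyword matching stands in for the model-based checks a real deployment would likely use. The safety check here covers only filtering of unwanted language, not verification of sources.

```python
from typing import Optional
from urllib.parse import urlparse

# Hypothetical policy for a customer-service assistant (illustrative values only).
OFF_TOPIC_KEYWORDS = {"weather", "forecast", "election"}
BLOCKED_TERMS = {"idiot", "stupid"}
TRUSTED_HOSTS = {"api.example-store.com"}


def _words(text: str) -> set[str]:
    """Split on whitespace, strip surrounding punctuation, and lowercase each word."""
    return {w.strip("?.,!").lower() for w in text.split()}


def topical_check(question: str) -> bool:
    """Topical rail: reject questions that mention an off-topic keyword."""
    return _words(question).isdisjoint(OFF_TOPIC_KEYWORDS)


def safety_check(response: str) -> bool:
    """Safety rail (language filtering only): reject drafts with blocked terms."""
    return _words(response).isdisjoint(BLOCKED_TERMS)


def security_check(url: str) -> bool:
    """Security rail: allow outbound calls only to allowlisted hosts."""
    return urlparse(url).hostname in TRUSTED_HOSTS


def guarded_reply(question: str, draft_response: str,
                  tool_url: Optional[str] = None) -> str:
    """Run the three checks before returning the model's draft response."""
    if not topical_check(question):
        return "Sorry, I can only help with store-related questions."
    if tool_url is not None and not security_check(tool_url):
        return "Sorry, I can't access that service."
    if not safety_check(draft_response):
        return "Sorry, I can't provide that response."
    return draft_response
```

For example, `guarded_reply("What's the weather tomorrow?", "Sunny.")` returns the off-topic refusal before the draft response is even inspected, which mirrors the customer-service example in the list above.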

NeMo Guardrails doesn’t require software developers to be experts in machine learning or become data scientists. New rules can be created quickly with just a few lines of code.

Open Source and Available on GitHub

NVIDIA is adding NeMo Guardrails to the NVIDIA NeMo framework, which provides everything users need to train and tune language models. Much of the NeMo framework is already available on GitHub, and because it is part of the NVIDIA AI Enterprise software platform, enterprises can also get it as a complete, supported package.

NeMo is also a part of NVIDIA AI Foundations, a family of cloud services for businesses looking to create and run custom generative AI models based on their own datasets and domain knowledge.

Built on Colang

NeMo Guardrails is built on Colang, a modeling language and associated runtime for conversational AI. Colang provides a readable and extensible interface that lets users define or control the behavior of conversational bots with natural language.

Guardrails are created in Colang files by defining canonical forms, messages, and flows. Because these definitions are written in natural language, guardrails are simple to construct and help keep the bot on task. Several examples on GitHub address common patterns for implementing guardrails.
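The relationship between canonical forms, messages, and flows can be illustrated with a toy interpreter in Python. This is a hypothetical sketch, not the Colang runtime: the dictionaries `USER_FORMS`, `BOT_MESSAGES`, and `FLOWS` and the functions `to_canonical_form` and `respond` are illustrative names, and `difflib` string similarity is swapped in for the model-based matching NeMo Guardrails performs when mapping a user message to a canonical form.

```python
import difflib
from typing import Optional

# Roughly analogous to Colang definitions such as:
#   define user ask about weather
#     "what's the weather like"
#   define flow weather
#     user ask about weather
#     bot refuse off topic

# User canonical forms and example messages for each (illustrative values).
USER_FORMS = {
    "express greeting": ["hello", "hi there", "good morning"],
    "ask about weather": ["what's the weather like", "will it rain today"],
}

# Bot canonical forms and the message the bot sends for each.
BOT_MESSAGES = {
    "express greeting": "Hello! How can I help with your order?",
    "refuse off topic": "Sorry, I can only help with store-related questions.",
}

# Flows: a matched user form maps to the bot form the bot should reply with.
FLOWS = {
    "express greeting": "express greeting",
    "ask about weather": "refuse off topic",
}


def to_canonical_form(message: str) -> Optional[str]:
    """Map a raw user message to the canonical form of its closest example."""
    text = message.lower().strip("?!. ")
    example_to_form = {ex: form for form, exs in USER_FORMS.items() for ex in exs}
    match = difflib.get_close_matches(text, list(example_to_form), n=1, cutoff=0.6)
    return example_to_form[match[0]] if match else None


def respond(message: str) -> Optional[str]:
    """Follow the flow for the matched user form; None means no flow applied."""
    form = to_canonical_form(message)
    if form is None or form not in FLOWS:
        return None  # in this sketch, an app could fall back to the LLM here
    return BOT_MESSAGES[FLOWS[form]]
```

Calling `respond("What's the weather like?")` follows the flow for `ask about weather` and returns the refusal message, while a message that matches no canonical form returns `None`. Keeping the examples, bot messages, and flows as separate tables mirrors how the three kinds of definitions are kept separate in a Colang file.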

Amazon

Amazon