At the VMware Explore 2023 event, VMware unveiled new Private AI offerings aimed at promoting the adoption of generative AI within enterprises and harnessing the potential of trusted data. VMware Private AI is an architectural approach that balances the benefits of AI with the practical privacy and compliance requirements of organizations.

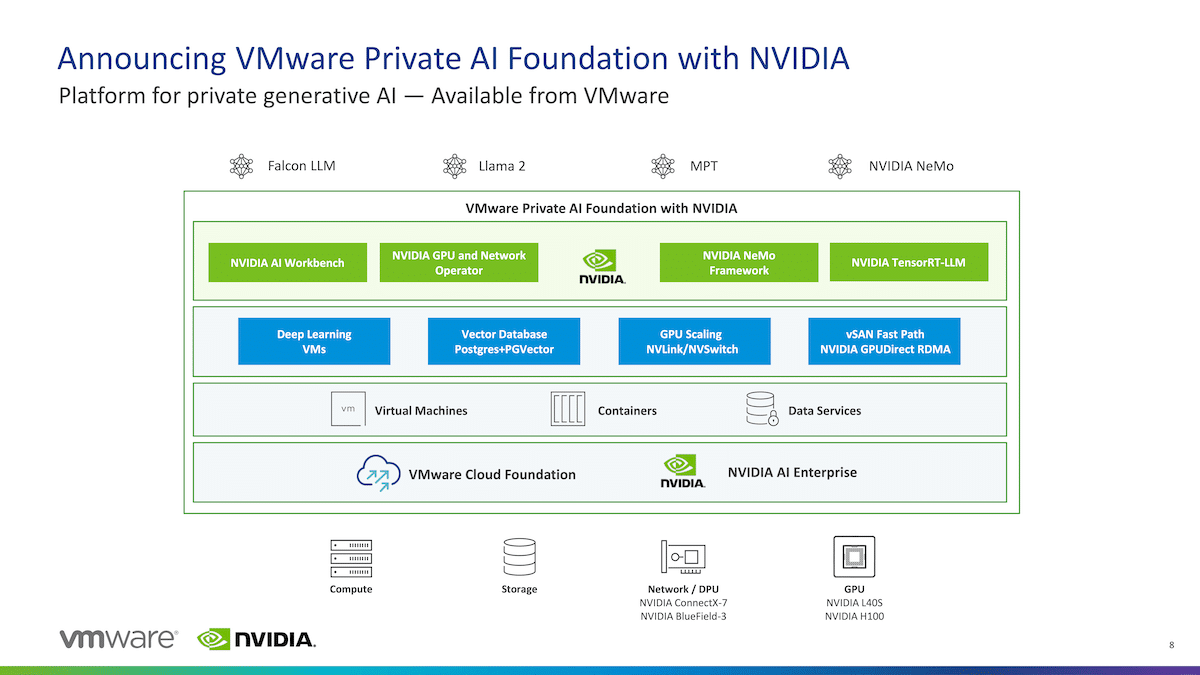

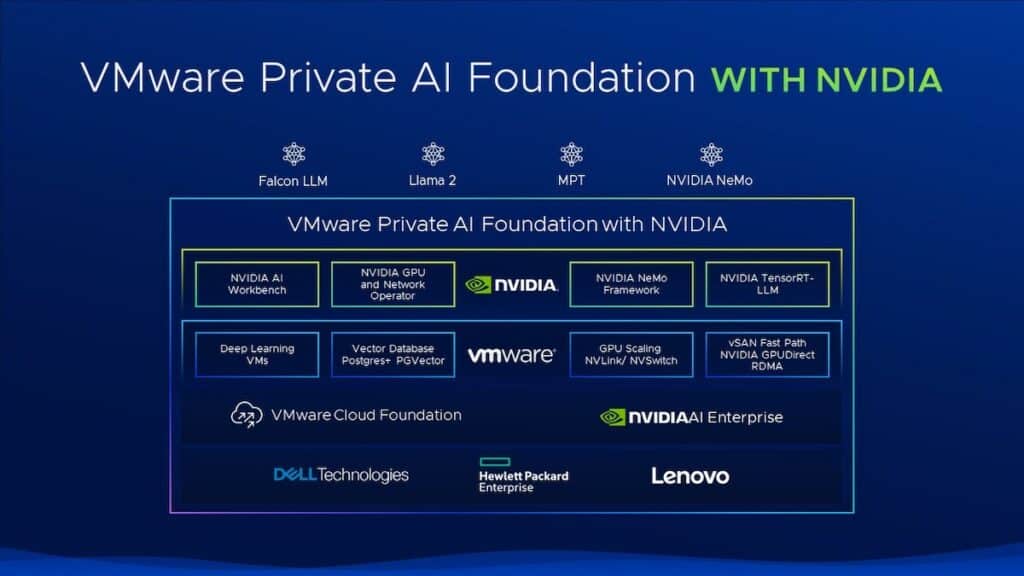

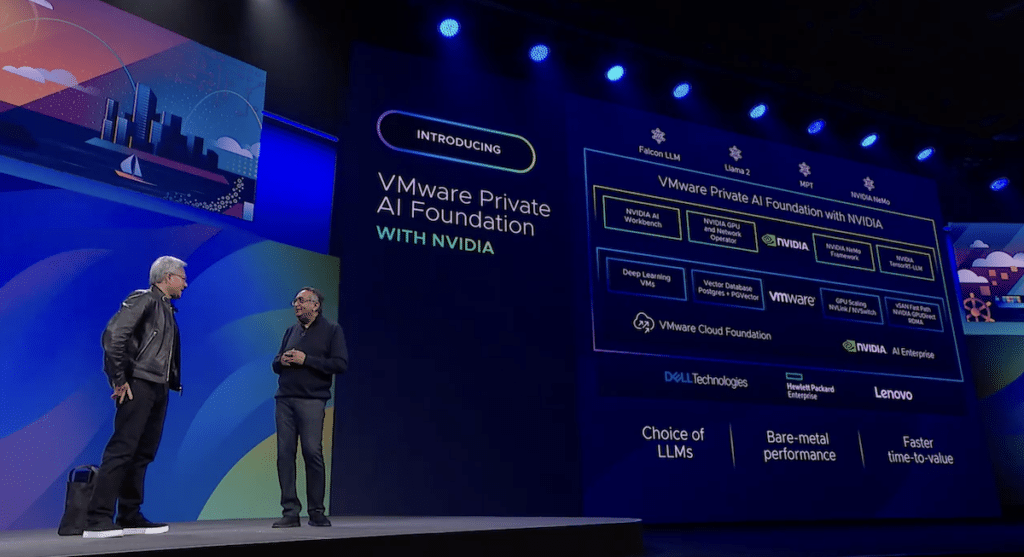

VMware Private AI Foundation with NVIDIA will enable enterprises to customize models and run generative AI applications, including intelligent chatbots, assistants, search, and summarization. The platform will be a fully integrated solution featuring generative AI software and accelerated computing from NVIDIA, built on VMware Cloud Foundation and optimized for AI.

Extending the two companies’ strategic partnership, VMware Private AI Foundation with NVIDIA gives enterprises that run on VMware’s cloud infrastructure the tools to adopt generative AI. The offering integrates VMware’s Private AI architecture with NVIDIA AI Enterprise software and accelerated computing, providing a turnkey solution for deploying generative AI applications across data centers, public clouds, and the edge.

To achieve business benefits faster, enterprises seek to streamline the development, testing, and deployment of generative AI applications. VMware Private AI Foundation with NVIDIA will enable enterprises to harness this capability, customizing large language models, producing more secure and private models for their internal usage, and offering generative AI as a service to their users, securely running inference workloads at scale.

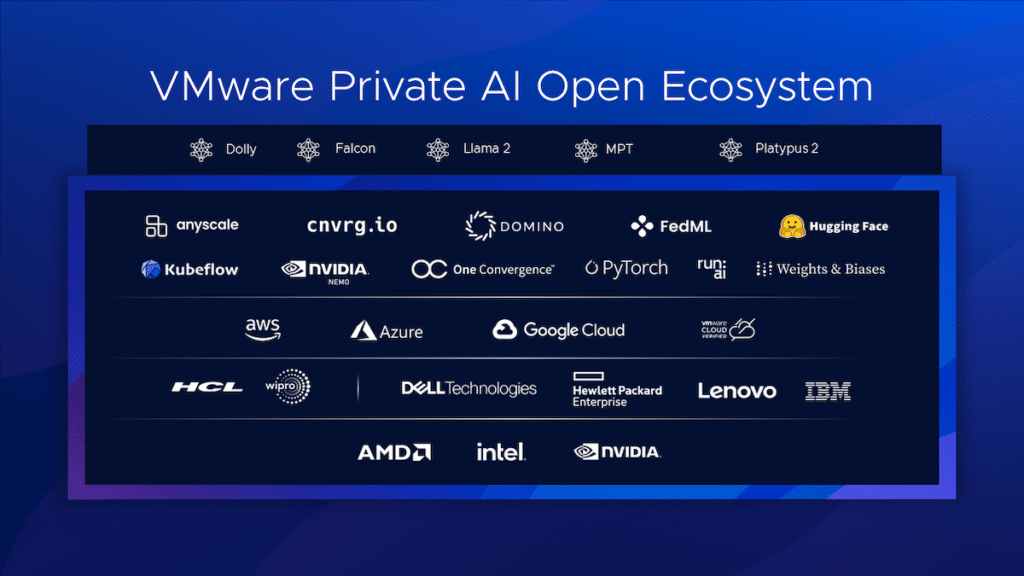

The platform is expected to include integrated AI tools that let enterprises run proven models trained on their private data cost-efficiently. With the VMware Private AI Reference Architecture for Open Source, customers can pursue their desired AI outcomes using top-tier open-source software technologies that cater to present and future requirements.

The platform is expected to be built on VMware Cloud Foundation and NVIDIA AI Enterprise software, and to offer several benefits. From a privacy perspective, customers can run AI services adjacent to where their data lives, on an architecture that preserves data privacy and enables secure access.

Enterprises will have a wide choice of where to build and run their models, from NVIDIA NeMo to Llama 2. That choice extends to OEM hardware configurations and, in the future, public cloud and service provider offerings.

Performance is expected to equal or exceed bare metal, with the platform running on NVIDIA accelerated infrastructure. GPU scaling optimizations in virtualized environments will enable AI workloads to scale up to 16 vGPUs/GPUs in a single VM and across multiple nodes to speed generative AI model fine-tuning and deployment.

Maximizing the usage of all compute resources across GPUs, DPUs, and CPUs is expected to lower overall costs while creating a pooled resource environment that can be shared across teams. VMware vSAN Express Storage Architecture (ESA) will deliver performance-optimized NVMe storage and support GPUDirect storage over RDMA, providing direct I/O transfer from storage to GPUs without involving the CPU.

The deep integration between vSphere and NVIDIA NVSwitch will enable multi-GPU models to execute without inter-GPU bottlenecks, delivering accelerated networking.

All these benefits will mean little if deployment takes too long and time-to-value is delayed. vSphere Deep Learning VM images and an image repository enable rapid prototyping by offering a stable turnkey solution image that includes pre-installed frameworks and performance-optimized libraries.

NVIDIA NeMo, an end-to-end, cloud-native framework included in NVIDIA AI Enterprise (the operating system of the NVIDIA AI platform), is a platform feature. NeMo allows enterprises to build, customize, and deploy generative AI models virtually anywhere, combining customization frameworks, guardrail toolkits, data curation tools, and pre-trained models to offer enterprises an easy, cost-effective, and efficient path to generative AI adoption.

For deploying generative AI in production, NeMo uses TensorRT for Large Language Models (TRT-LLM), which accelerates and optimizes inference performance for the latest LLMs on NVIDIA GPUs. With NeMo, VMware Private AI Foundation with NVIDIA enables enterprises to pull in their data to build and run custom generative AI models on VMware’s hybrid cloud infrastructure.

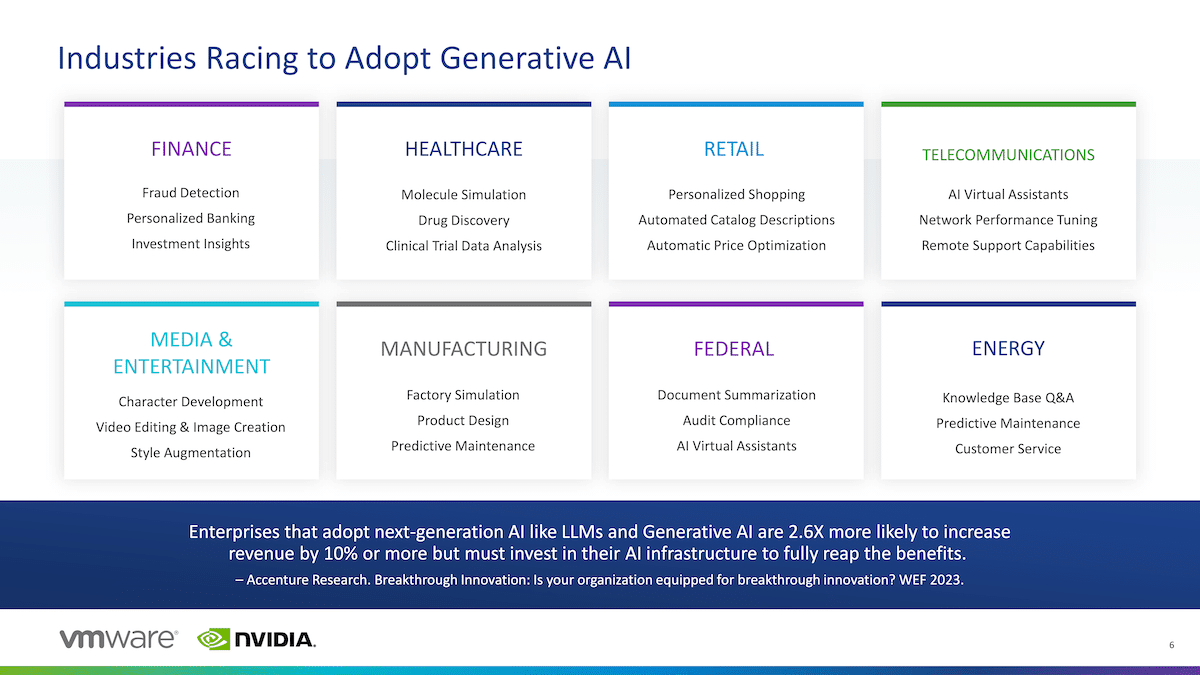

VMware Private AI Foundation extends AI capabilities and models to where the enterprise data is generated, processed, and consumed, irrespective of whether it’s within a public cloud, enterprise data center, or at the edge. By introducing these new offerings, VMware aims to enable customers to merge flexibility and control, propelling a new generation of AI-powered applications. These applications hold the potential to significantly enhance worker productivity, trigger transformation across key business functions, and drive substantial economic impact: a McKinsey report projects generative AI to contribute up to $4.4 trillion annually to the global economy.

Crucial to the success of this evolution is the establishment of a multi-cloud environment, as it paves the way for private yet widely distributed data utilization. VMware’s multi-cloud strategy empowers enterprises with greater flexibility in building, customizing, and deploying AI models using private data while ensuring security and resiliency across varied environments.

Raghu Raghuram, CEO of VMware, emphasizes that the viability of generative AI hinges on maintaining data privacy and minimizing IP risks. VMware Private AI addresses these concerns, enabling organizations to harness their trusted data for efficient AI model building and execution within a multi-cloud environment.
