The Fusion-io ioDrive2 Duo is a full-height, half-length application accelerator that, when paired with SLC NAND, offers 1.2TB of low-latency, high-endurance storage for today's most demanding applications. While it is branded as a second-generation product, the naming is somewhat misleading, as Fusion-io has long been a pioneer in the memory-as-storage space with its assorted ioMemory products. That experience shows not just in product development and spec sheet highlights, but all the way through to management as well; with ioSphere, Fusion-io boasts the most robust drive management software suite on the market. Still, pretty software and proven drive design are only part of the equation. Enterprises deploy these products with one goal in mind: reduce application response times by attacking storage system latency. The SLC iteration of the ioDrive2 brings laser focus to this issue, offering read access latency of 47µs and write access latency of 15µs. This compares to read access latency of 68µs for the MLC-based ioDrive2 (both share the same write latency), and while a difference of roughly 20µs doesn't sound like much, it can be a virtual eternity for applications that have been tuned for flash storage.

Beyond the spec-sheet gains in latency and throughput, Fusion-io has been hard at work making several other material improvements to the platform in this latest generation. The prior-generation ioDrive devices had a feature called FlashBack, which allowed the drive to continue operating in the event of a NAND failure, and ioDrive2 application accelerators build on it with a new version dubbed Adaptive FlashBack. Adaptive FlashBack increases NAND die failure tolerance, keeping the drive online and data secure in the unlikely event of multiple NAND failures. In such an event, the ioDrive2 can remap and recover without going offline.

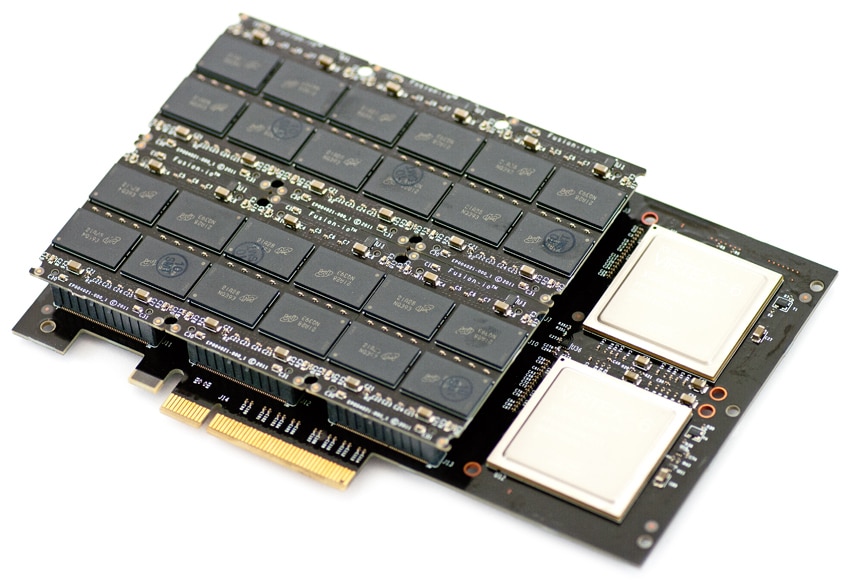

Fusion-io has also rolled out a new NAND controller and firmware build for the ioDrive2 family. The benefits here are largely performance-related (better throughput and improved latency), but there is a NAND compatibility benefit as well. Fusion-io has also updated its VSL software to 3.2.x, which, in combination with the new NAND controller, gives the ioDrive2 improved performance at smaller block sizes. While on the subject of NAND, Fusion-io made a hardware architecture change that puts the NAND on its own modules, separate from the NAND controller, making for a simpler design. The net benefit is that Fusion-io can more quickly support new NAND or NAND packaging while leveraging the same PCB layout.

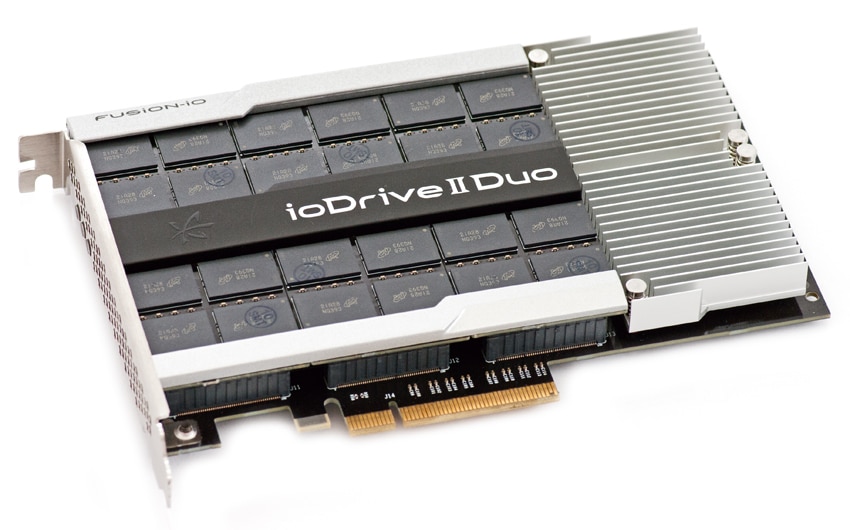

While we'll dive into the hardware in more depth in the design section below, a brief overview of the Fusion-io architecture is instructive here. Like the Virident FlashMAX II we previously reviewed, the ioDrive2 design uses an FPGA and offloads most of the NAND management duties to the host CPU. While other designs like Micron's P320h rely on an on-board controller for most of these duties, Fusion-io prefers to take advantage of the powerful and often underutilized CPU within the host system. This design also tends to provide a more direct path to the storage, reducing storage latency. Like the FlashMAX II, the Duo uses twin controllers, but it diverges in using six daughter boards that contain only NAND, rather than combining the NAND and controllers on individual PCBs. Unlike the Virident solution, though, which presents a single volume, Fusion-io with VSL 3.2.2 presents four 300GB drives to the system. Users can address each volume on its own, but combining them into a single volume requires software RAID. It's also worth noting that the Fusion-io Duo cards are full-height, half-length, making them larger than the more universally compatible half-height, half-length cards that Virident, Micron, and others offer. That said, most Tier 1 1U and 2U servers on the market today easily accommodate FHHL cards, making card shape relevant only in fringe cases.
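To make the software RAID point concrete, here is a minimal Python sketch of how a RAID 0 (striping) layer could map an offset on the combined volume onto the four 300GB volumes. The 64 KiB chunk size and all names are assumptions for illustration only; real implementations such as Linux md also reserve space for metadata, which this sketch ignores.

```python
# Hypothetical RAID 0 address mapping across the four volumes the Duo
# presents under VSL 3.2.2. Chunk size is an assumed value, not a
# Fusion-io recommendation; metadata/superblock space is ignored.
from typing import NamedTuple

CHUNK_BYTES = 64 * 1024        # assumed stripe chunk size (64 KiB)
MEMBER_BYTES = 300 * 10**9     # each volume: 300GB (decimal)
MEMBERS = 4                    # four volumes on the ioDrive2 Duo


class Location(NamedTuple):
    member: int   # which member volume (0..3)
    offset: int   # byte offset within that member


def array_capacity() -> int:
    """RAID 0 capacity: whole chunks per member times member count."""
    usable_per_member = (MEMBER_BYTES // CHUNK_BYTES) * CHUNK_BYTES
    return usable_per_member * MEMBERS


def raid0_map(logical_offset: int) -> Location:
    """Map an offset on the striped volume to (member, offset)."""
    if not 0 <= logical_offset < array_capacity():
        raise ValueError("offset outside array")
    chunk_index, within_chunk = divmod(logical_offset, CHUNK_BYTES)
    member = chunk_index % MEMBERS          # chunks rotate round-robin
    member_chunk = chunk_index // MEMBERS   # each member holds every 4th chunk
    return Location(member, member_chunk * CHUNK_BYTES + within_chunk)


print(raid0_map(0))                      # first chunk lands on member 0
print(raid0_map(CHUNK_BYTES))            # next chunk lands on member 1
print(raid0_map(4 * CHUNK_BYTES + 10))   # wraps back to member 0, second chunk
```

Striping spreads consecutive chunks across all four volumes, so large transfers can keep both controllers and both "pipes" on each controller busy at once.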

Fusion-io ships the ioDrive2 Duo in both MLC and SLC configurations. The MLC comes in a 2.4TB capacity, the SLC 1.2TB. The drives carry a five-year warranty.

Fusion ioDrive2 Duo Specifications

- ioDrive2 Duo Capacity: 1.2TB SLC

- Read Bandwidth (1MB): 3.0 GB/s

- Write Bandwidth (1MB): 2.5 GB/s

- Random Read IOPS (512B): 700,000

- Random Write IOPS (512B): 1,100,000

- Random Read IOPS (4K): 580,000

- Random Write IOPS (4K): 535,000

- Read Access Latency: 47µs

- Write Access Latency: 15µs

- NAND Flash Memory: 2xnm Single-Level Cell (SLC)

- Bus Interface: PCI-Express 2.0 x8 electrical, x8 physical

- Weight: Less than 11 ounces

- Form Factor: Full-height, half-length (FHHL)

- Warranty: 5 years or maximum endurance used

- Endurance: 190PBW (95PBW per controller)

- Supported Operating Systems:

  - Microsoft Windows: 64-bit Windows Server 2012, Windows Server 2008 R2, Windows Server 2008, Windows Server 2003

  - Linux: RHEL 5/6; SLES 10/11; OEL 5/6; CentOS 5/6; Debian Squeeze; Fedora 16/17; openSUSE 12; Ubuntu 10/11/12

  - UNIX: Solaris 10/11 x64; OpenSolaris 2009.06 x64; OSX 10.6/10.7/10.8

  - Hypervisors: VMware ESX 4.0/4.1/ESXi 4.1/5.0/5.1, Windows 2008 R2 with Hyper-V, Hyper-V Server 2008 R2
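The spec sheet figures can be sanity-checked with a little arithmetic. This Python sketch assumes decimal units (1TB = 10^12 bytes, 1PB = 10^15 bytes) and 4K = 4,096 bytes; it converts the 190PBW endurance rating into full drive writes per day over the five-year warranty, and the 4K random read IOPS figure into throughput.

```python
# Back-of-the-envelope checks on the spec sheet above.
# Assumes decimal units and 4K = 4096 bytes.

CAPACITY_BYTES = 1.2e12      # 1.2TB at stock formatting
ENDURANCE_BYTES = 190e15     # 190PBW total (95PBW per controller)
WARRANTY_YEARS = 5


def drive_writes_per_day(capacity: float, endurance: float, years: float) -> float:
    """Full-capacity writes per day that would exhaust endurance at warranty end."""
    return endurance / (capacity * 365 * years)


def iops_to_bytes_per_sec(iops: float, block_bytes: int) -> float:
    """Throughput implied by an IOPS figure at a fixed transfer size."""
    return iops * block_bytes


dwpd = drive_writes_per_day(CAPACITY_BYTES, ENDURANCE_BYTES, WARRANTY_YEARS)
read_4k = iops_to_bytes_per_sec(580_000, 4096)

print(f"~{dwpd:.0f} drive writes per day over {WARRANTY_YEARS} years")
print(f"4K random read: ~{read_4k / 1e9:.2f} GB/s")
```

At roughly 2.4 GB/s, 4K random reads sit below the 3.0 GB/s sequential read rating, which is consistent with small-block reads being limited by IOPS rather than by raw bandwidth.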

Design and Build

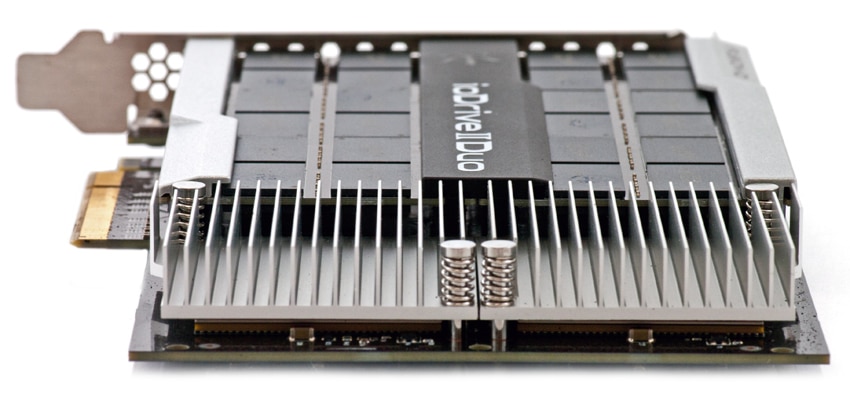

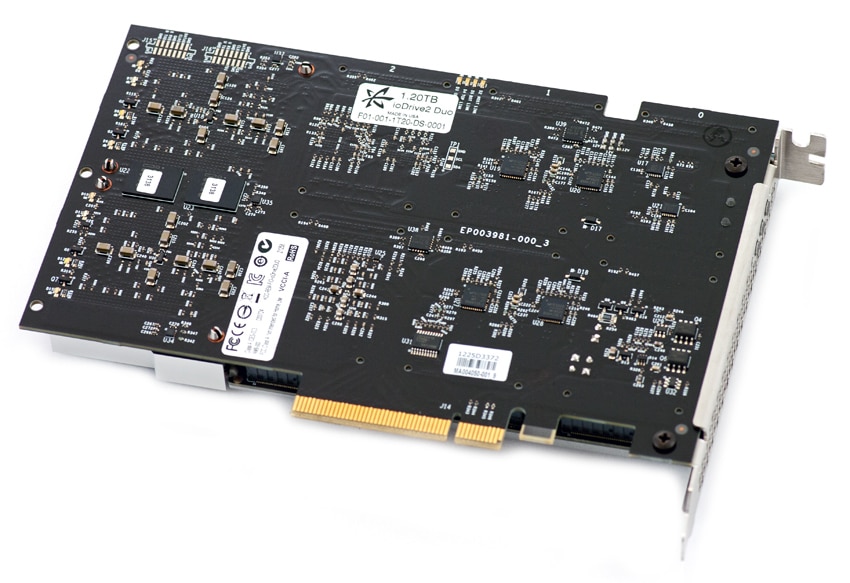

The Fusion ioDrive2 Duo 1.2TB SLC is a Full-Height Half-Length (FHHL) x8 PCI-Express 2.0 card, with two controllers and a PCIe switch attached to the main circuit board. The NAND is attached through two daughter boards, giving Fusion a manufacturing advantage when switching to new NAND configurations. Instead of redesigning the card each time a lithography change rolls around (NAND die shrink), they can install a new daughter board and flash new firmware onto the FPGA. Our SLC ioDrive2 Duo is formed with two 600GB ioMemory devices, each using 4 lanes of the PCIe connection. The PCB layout is very efficient, with large passive heatsinks covering the two controllers on the right side of the card.

Each controller represents one ioDrive2, with its own 40nm Xilinx Virtex-6 FPGA and a 768GB pool of SLC NAND. The ioDrive2 Duo we reviewed uses Micron NAND, but Fusion-io is NAND-manufacturer agnostic. The NAND is split across 24 32GB chips per device, with 600GB usable under stock formatting. That ratio puts the stock over-provisioning level at roughly 22%, on par with most enterprise flash devices.
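The 22% figure follows from expressing the spare area as a share of raw NAND. A short Python sketch of that arithmetic, which treats "GB" uniformly and ignores binary/decimal differences and any NAND reserved for metadata or FlashBack, also shows the other common convention (spare area relative to exposed capacity), which gives a larger number for the same hardware:

```python
# Over-provisioning arithmetic for one ioMemory device on the Duo:
# 24 x 32GB NAND packages, 600GB exposed at stock formatting.
# Simplification: GB treated uniformly; controller-reserved NAND ignored.

def raw_capacity_gb(chips: int, chip_gb: int) -> int:
    return chips * chip_gb


def op_share_of_raw(raw_gb: float, usable_gb: float) -> float:
    """Spare area as a fraction of raw NAND (the convention used in the text)."""
    return (raw_gb - usable_gb) / raw_gb


def op_share_of_usable(raw_gb: float, usable_gb: float) -> float:
    """Spare area relative to exposed capacity (another common convention)."""
    return (raw_gb - usable_gb) / usable_gb


raw = raw_capacity_gb(24, 32)
print(raw)                                           # 768
print(round(op_share_of_raw(raw, 600) * 100, 1))     # 21.9
print(round(op_share_of_usable(raw, 600) * 100, 1))  # 28.0
```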

Fusion ioMemory interfaces with NAND flash the way a processor interacts with system memory. It uses a combination of Fusion-io's NAND controller (FPGA), which communicates directly over PCIe, and Fusion-io's driver, the Virtual Storage Layer (VSL) software installed on the host system, to translate the device into a traditional block device. This block-device emulation provides compatibility, although Fusion-io also offers an SDK that lets software vendors communicate natively with the NAND, bypassing the emulation overhead. ioMemory is also non-traditional in that the VSL driver consumes system resources to function, using the host CPU and occupying a footprint in system memory. In terms of product support, because Fusion-io uses an FPGA rather than an ASIC as the NAND controller, it can deploy very low-level software updates that fix bugs and improve performance. This contrasts with standard SSD controllers, where fundamental changes can only be made by fabricating a new controller, though both designs allow higher-level tuning via firmware updates.
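To illustrate what translating flash into a block device on the host involves, here is a deliberately tiny Python sketch of a host-resident, log-structured translation layer. It is a hypothetical model, not the VSL: the class name is invented, garbage collection and wear leveling are omitted, and a Python list stands in for the NAND. It does show why such a design consumes host RAM (the mapping table) and host CPU (every lookup and remap).

```python
# Hypothetical, heavily simplified host-side translation layer (NOT the VSL).
# The LBA -> NAND page map lives in host memory and is maintained by host
# code; the "card" here is just a list of pages.
from typing import Dict, List, Optional


class HostTranslationLayer:
    def __init__(self, total_pages: int) -> None:
        self.lba_to_page: Dict[int, int] = {}            # mapping table in host RAM
        self.page_valid: List[bool] = [False] * total_pages
        self.next_free = 0                                # append-only write pointer
        self.nand: List[Optional[bytes]] = [None] * total_pages  # stand-in for flash

    def write(self, lba: int, data: bytes) -> int:
        """Out-of-place write: program the next free page, then remap the LBA."""
        if self.next_free >= len(self.nand):
            raise RuntimeError("out of free pages (garbage collection not modeled)")
        page = self.next_free
        self.next_free += 1
        self.nand[page] = data
        self.page_valid[page] = True
        old = self.lba_to_page.get(lba)
        if old is not None:
            self.page_valid[old] = False                  # stale copy awaits reclaim
        self.lba_to_page[lba] = page
        return page

    def read(self, lba: int) -> Optional[bytes]:
        """Look up the LBA in the host-resident table and fetch that page."""
        page = self.lba_to_page.get(lba)
        return None if page is None else self.nand[page]


layer = HostTranslationLayer(total_pages=4)
layer.write(7, b"v1")
layer.write(7, b"v2")     # rewrite goes to a fresh page
print(layer.read(7))      # b'v2'
```

Rewriting LBA 7 lands on a fresh page and marks the old one stale; reclaiming stale pages is the job of the garbage collector this sketch leaves out.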

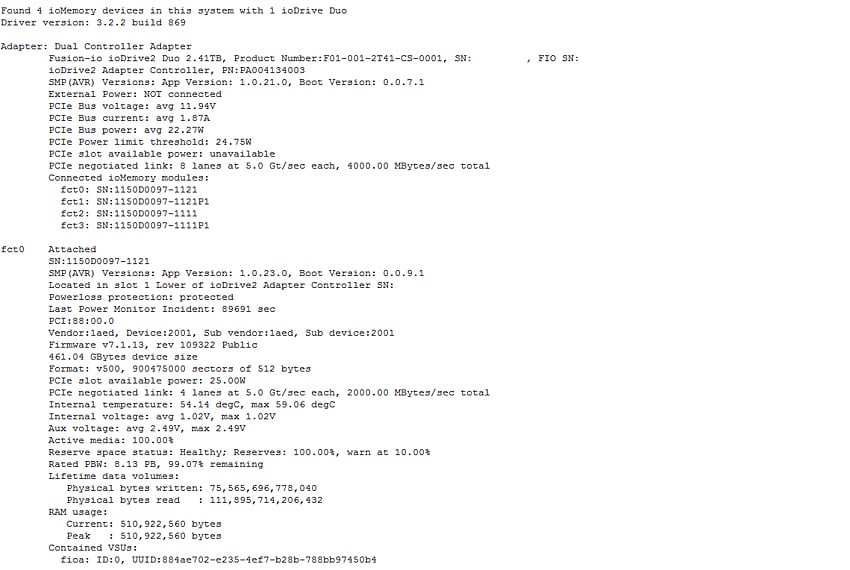

One enhancement that came with the release of VSL 3.2.2 for ioDrive2 devices is a new controller feature. Previously, each ioMemory device appeared to the host system as a single device. In the current versions of Fusion-io's VSL, the controller is split into two devices and operates in a "dual-pipe" mode. So instead of one LUN for an ioDrive2 and two LUNs for an ioDrive2 Duo, there are two or four LUNs, respectively. Working through the old and new layouts, we noted a strong improvement in small I/O performance, although all of our formal benchmarks were run with VSL 3.2.2 only.

Power is another topic that comes up frequently when Fusion ioMemory devices are compared against the rest of the PCIe landscape, since they are among the few that use external power connections in certain configurations. This applies to the Duo family, which places two ioMemory devices on a single PCIe card. To operate at full power, these cards consume more than 25W, the power an x8 PCIe slot is rated to supply. Fusion-io offers two ways of addressing this requirement: external power cables, or a power override that allows the card to draw more than 25W through an x8 PCIe slot. In this review, which evaluated the ioDrive2 Duo SLC inside a Lenovo ThinkServer RD630, we performed all benchmarks with the power override enabled, giving us full performance without external power. In its hardware installation guide, Fusion-io states that if the host server is rated for a 55W power draw, the software override can safely be enabled.

Management Software

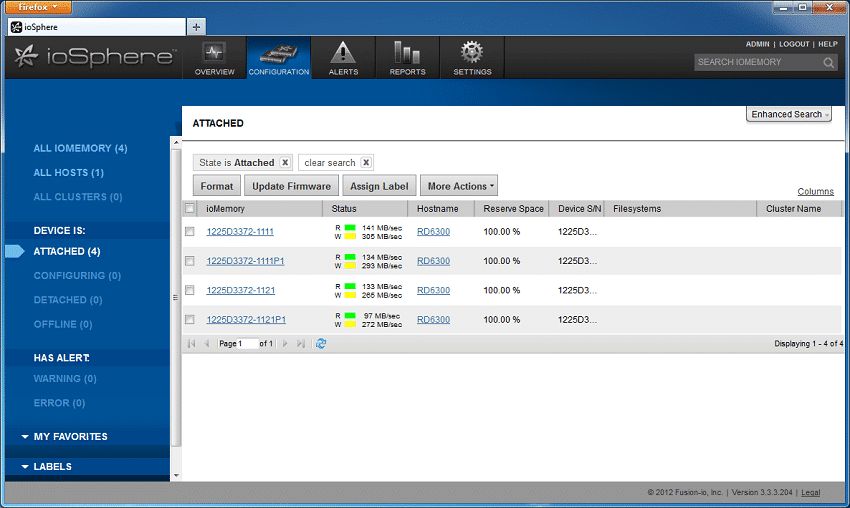

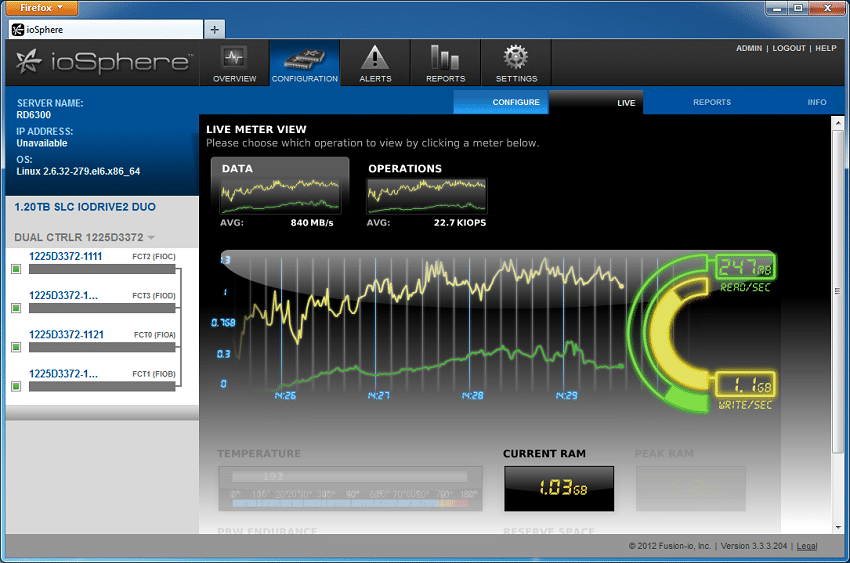

Fusion-io has continuously set the bar for other manufacturers with its ioSphere ioMemory Data Center Management suite. As we have seen while working extensively with competing application accelerators, even a basic GUI in Windows is hard to come by, with many manufacturers offering only limited CLI support. This plays a big role in the long-term management of a given flash device, since warranty and expected lifetime depend on the usage profile in a given environment.

Fusion's ioSphere addresses many key areas for IT administrators, all through a web interface, including real-time and historical performance, health monitoring, and warranty forecasting. ioSphere supports monitoring both locally installed ioMemory devices and ioMemory devices installed across a large network, and it can be configured for remote access so administrators can monitor devices from outside the datacenter. This extensive feature set is unrivaled.

One of the most interesting features is real-time performance streaming. ioSphere lets users connect to a specific ioMemory device and watch activity hitting the device as it happens. We used this capability extensively in testing, as shown in the stream above, captured during an internal run of our MarkLogic NoSQL Database Benchmark. Because ioSphere constantly records data from all connected ioMemory devices, it can also build reports of past performance, so you can better estimate how long a particular ioMemory device will last in a given production environment.
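ioSphere's forecasting model isn't described here, so the following Python function is only a hypothetical, linear stand-in: it extrapolates the average write rate observed so far to estimate when a rated endurance figure (for example, the 95PBW per controller from the spec sheet) would be used up. The function name and the linear assumption are ours, not Fusion-io's.

```python
# Hypothetical linear wear-out projection; not ioSphere's actual model.
from datetime import date, timedelta


def project_wear_out(rated_bytes: float, written_bytes: float,
                     first_seen: date, today: date) -> date:
    """Date on which rated endurance is consumed at the average rate so far."""
    if written_bytes >= rated_bytes:
        return today                                  # already at or past rating
    days = (today - first_seen).days
    if days <= 0 or written_bytes <= 0:
        raise ValueError("need a positive observation window and some writes")
    bytes_per_day = written_bytes / days              # average observed write rate
    remaining_days = (rated_bytes - written_bytes) / bytes_per_day
    return today + timedelta(days=round(remaining_days))


# Example: 19PB written to one 95PBW controller during its first year.
print(project_wear_out(95e15, 19e15, date(2013, 1, 1), date(2014, 1, 1)))
```

A linear projection is the simplest choice; a bursty or seasonal write pattern would call for weighting recent history more heavily.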

For users interested in more advanced information, ioSphere also tracks power usage, card temperature, total data read and written, and a multitude of other details useful for debugging. This data can be accessed both through ioSphere and through the Fusion-io CLI, which is installed by default with the device drivers. These advanced features also come into play when over- or under-provisioning the drive, which trades capacity against performance. In our evaluation we tested the ioDrive2 Duo SLC in both stock and high-performance modes. High-performance mode adds 20% more over-provisioning, although Fusion-io lets advanced users select the exact level of over- or under-provisioning. When under-provisioned, the ioDrive2 offers more than its advertised capacity (at the expense of performance and endurance).

Testing Background and Comparables

All Application Accelerators compared in this review are tested on our second-generation enterprise testing platform consisting of an Intel Romley-based Lenovo ThinkServer RD630. This new platform is configured with both Windows Server 2008 R2 SP1 as well as Linux CentOS 6.3 to allow us to effectively test the performance of different AAs in the various environments their drivers support. Each operating system is optimized for highest performance, including having the Windows power profile set to high-performance as well as cpuspeed disabled in CentOS 6.3 to lock the processor at its highest clock speed. For synthetic benchmarks, we utilize FIO version 2.0.10 for Linux and version 2.0.12.2 for Windows, with the same test parameters used in each OS where permitted.

StorageReview Lenovo ThinkServer RD630 Configuration:

- 2 x Intel Xeon E5-2620 (2.0GHz, 15MB Cache, 6-cores)

- Intel C602 Chipset

- Memory: 16GB (2 x 8GB) 1333MHz DDR3 Registered RDIMMs

- Windows Server 2008 R2 SP1 64-bit, Windows Server 2012 Standard, CentOS 6.3 64-Bit

- 100GB Micron RealSSD P400e Boot SSD

- LSI 9211-4i SAS/SATA 6.0Gb/s HBA (For boot SSDs)

- LSI 9207-8i SAS/SATA 6.0Gb/s HBA (For benchmarking SSDs or HDDs)

When choosing comparables for this review, we selected the newest top-performing SLC and MLC application accelerators, based on each product's performance characteristics as well as its price range. We include both stock and high-performance benchmark results for the ioDrive2 Duo SLC and compare it against the Micron RealSSD P320h as well as the Virident FlashMAX II over-provisioned in high-performance mode.

1.2TB Fusion ioDrive2 Duo SLC

- Released: 2H2011

- NAND Type: SLC

- Controller: 2 x FPGA with proprietary firmware

- Device Visibility: 4 JBOD Devices

- Fusion-io VSL Windows Version: 3.2.2

- Fusion-io VSL Linux Version: 3.2.2

- Preconditioning Time: 12 hours

Micron RealSSD P320h

- Released: 2H2011

- NAND Type: SLC

- Controller: 1 x Proprietary ASIC

- Device Visibility: Single Device

- Micron Windows: 8.01.4471.00

- Micron Linux: 2.4.2-1

- Preconditioning Time: 6 hours

Virident FlashMAX II

- Released: 2H2012

- NAND Type: MLC

- Controller: 2 x FPGA with proprietary firmware

- Device Visibility: Single or Dual Device depending on formatting

- Virident Windows: Version 3.0

- Virident Linux: Version 3.0

- Preconditioning Time: 12 hours

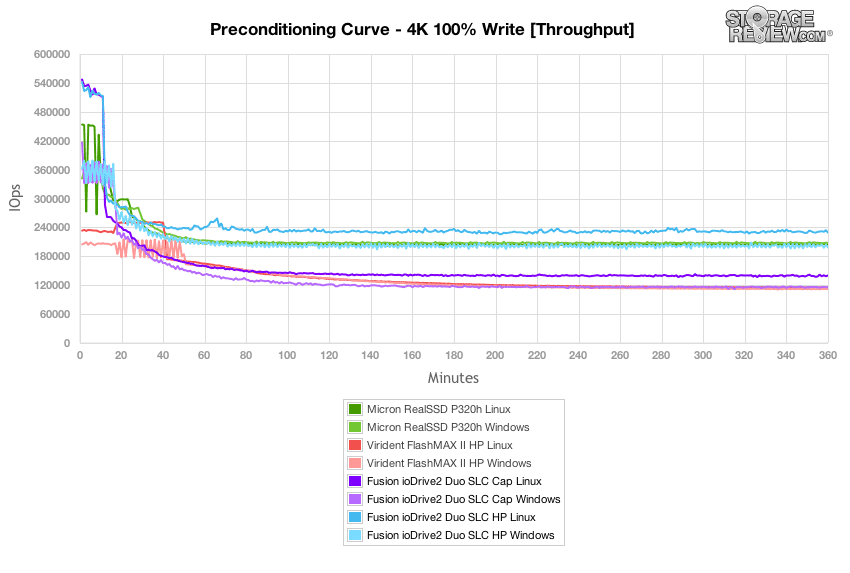

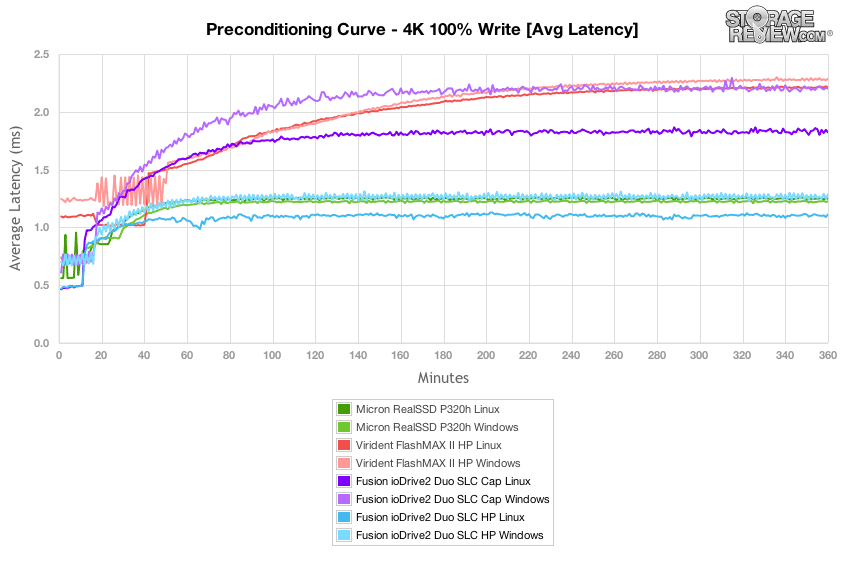

Enterprise Synthetic Workload Analysis

The way we look at PCIe storage solutions dives deeper than just looking at traditional burst or steady-state performance. When looking at averaged performance over a long period of time, you lose sight of the details behind how the device performs over that entire period. Since flash performance varies greatly as time goes on, our benchmarking process analyzes the performance in areas including total throughput, average latency, peak latency, and standard deviation over the entire preconditioning phase of each device. With high-end enterprise products, latency is often more important than throughput. For this reason we go to great lengths to show the full performance characteristics of each device we put through our Enterprise Test Lab.

We also include performance comparisons showing how each device performs under a different driver set across both Windows and Linux. For Windows, we use the latest drivers available at the time of the original review, and each device is tested in a 64-bit Windows Server 2008 R2 environment. For Linux, we use 64-bit CentOS 6.3, which every Enterprise PCIe Application Accelerator supports. Our main goal with this testing is to show how performance differs between operating systems, since having an operating system listed as compatible on a product sheet doesn't always mean performance is equal across them.

Flash performance varies throughout the preconditioning phase of each storage device. Given different designs and capacities, our preconditioning process lasts either 6 or 12 hours, depending on how long the device needs to reach steady-state behavior. Our main goal is to ensure that each drive is fully in steady state by the time we begin our primary tests. In total, each comparable device is secure erased using the vendor's tools, preconditioned into steady state with the same workload it will be tested with under a heavy load of 16 threads with an outstanding queue of 16 per thread, and then tested at set intervals in multiple thread/queue depth profiles to show performance under both light and heavy usage.
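How steady state is judged isn't spelled out above. One widely used approach, similar to the criteria in SNIA's Solid State Storage Performance Test Specification, checks that the last few measurement rounds neither spread too far around their mean nor trend up or down. The Python sketch below assumes a five-round window, a 20% data-excursion limit, and a 10% slope-excursion limit; the function name and thresholds are illustrative and not necessarily the procedure used in this review.

```python
# Illustrative steady-state check in the style of SNIA PTS criteria.
# Window size and thresholds are assumptions, not this review's procedure.
from statistics import fmean
from typing import Sequence


def is_steady_state(samples: Sequence[float], window: int = 5,
                    max_excursion: float = 0.20,
                    max_slope_excursion: float = 0.10) -> bool:
    if len(samples) < window:
        return False
    y = list(samples[-window:])
    avg = fmean(y)
    if avg <= 0:
        return False
    # Data excursion: spread of the raw samples relative to their mean.
    if max(y) - min(y) > max_excursion * avg:
        return False
    # Least-squares slope over x = 0..window-1.
    x_mean = (window - 1) / 2
    num = sum((x - x_mean) * (v - avg) for x, v in enumerate(y))
    den = sum((x - x_mean) ** 2 for x in range(window))
    slope = num / den
    # Slope excursion: how far the fitted line moves across the window.
    return abs(slope) * (window - 1) <= max_slope_excursion * avg


print(is_steady_state([550_000, 400_000, 232_000, 231_000, 231_500, 230_800, 231_200]))
```

Here the early burst rounds fall outside the five-round window, so only the flat tail is judged.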

Attributes Monitored In Preconditioning and Primary Steady-State Tests:

- Throughput (Read+Write IOPS Aggregate)

- Average Latency (Read+Write Latency Averaged Together)

- Max Latency (Peak Read or Write Latency)

- Latency Standard Deviation (Read+Write Standard Deviation Averaged Together)
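As a rough illustration of how the four attributes above could be derived from one measurement interval, here is a Python sketch. The `IntervalStats` container and the unweighted averaging of read and write figures are assumptions based on the list's "averaged together" wording; FIO itself reports read and write statistics separately.

```python
# Hypothetical per-interval summary of the four monitored attributes.
from dataclasses import dataclass, field
from statistics import fmean, pstdev
from typing import Dict, List


@dataclass
class IntervalStats:
    """Hypothetical container for one measurement interval."""
    read_iops: float
    write_iops: float
    read_lat_ms: List[float] = field(default_factory=list)
    write_lat_ms: List[float] = field(default_factory=list)


def summarize(s: IntervalStats) -> Dict[str, float]:
    # Only average over directions that saw I/O, so 100% read or
    # 100% write intervals are handled too.
    sides = [lat for lat in (s.read_lat_ms, s.write_lat_ms) if lat]
    if not sides:
        raise ValueError("interval has no latency samples")
    return {
        "throughput_iops": s.read_iops + s.write_iops,           # read+write aggregate
        "avg_latency_ms": fmean(fmean(lat) for lat in sides),     # read/write means averaged
        "max_latency_ms": max(max(lat) for lat in sides),         # peak of either direction
        "latency_stdev_ms": fmean(pstdev(lat) for lat in sides),  # read/write stdevs averaged
    }


print(summarize(IntervalStats(1000, 500, [1.0, 3.0], [2.0, 2.0])))
```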

Our Enterprise Synthetic Workload Analysis includes four profiles based on real-world tasks. These profiles have been developed to make it easier to compare to our past benchmarks as well as widely-published values such as max 4K read and write speed and 8K 70/30, which is commonly used for enterprise drives. We also included two legacy mixed workloads, the traditional File Server and Webserver, each offering a wide mix of transfer sizes.

- 4K
  - 100% Read or 100% Write
  - 100% 4K

- 8K 70/30
  - 70% Read, 30% Write
  - 100% 8K

- File Server
  - 80% Read, 20% Write
  - 10% 512b, 5% 1k, 5% 2k, 60% 4k, 2% 8k, 4% 16k, 4% 32k, 10% 64k

- Webserver
  - 100% Read
  - 22% 512b, 15% 1k, 8% 2k, 23% 4k, 15% 8k, 2% 16k, 6% 32k, 7% 64k, 1% 128k, 1% 512k
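The mixes above map naturally onto FIO's `bssplit` option, which takes `blocksize/percentage` pairs separated by colons. The following Python sketch generates job sections for these profiles. The section names, the `/dev/fioa` target device, and the `libaio` engine (a Linux engine; Windows would need `windowsaio`) are assumptions, "512b" is read as 512 bytes, and the 16-thread, 16-queue defaults mirror the preconditioning load described earlier.

```python
# Hypothetical FIO job generator for the four profiles listed above.
from typing import Dict, List, Tuple

Mix = List[Tuple[str, int]]  # (FIO block size, percent of I/Os)

# read percentage, size mix; the 4K profile is shown as its write variant
PROFILES: Dict[str, Tuple[int, Mix]] = {
    "4k_random_write": (0, [("4k", 100)]),
    "8k_70_30": (70, [("8k", 100)]),
    "file_server": (80, [("512", 10), ("1k", 5), ("2k", 5), ("4k", 60),
                         ("8k", 2), ("16k", 4), ("32k", 4), ("64k", 10)]),
    "webserver": (100, [("512", 22), ("1k", 15), ("2k", 8), ("4k", 23), ("8k", 15),
                        ("16k", 2), ("32k", 6), ("64k", 7), ("128k", 1), ("512k", 1)]),
}


def bssplit(mix: Mix) -> str:
    """Render a size mix as an FIO bssplit value, e.g. '4k/60:64k/40'."""
    total = sum(pct for _, pct in mix)
    if total != 100:
        raise ValueError(f"mix sums to {total}%, expected 100%")
    return ":".join(f"{bs}/{pct}" for bs, pct in mix)


def fio_job(name: str, read_pct: int, mix: Mix, threads: int = 16,
            iodepth: int = 16, device: str = "/dev/fioa") -> str:
    """Build one FIO job section; device path and engine are assumptions."""
    if read_pct == 100:
        rw = "randread"
    elif read_pct == 0:
        rw = "randwrite"
    else:
        rw = "randrw"
    lines = [f"[{name}]", f"filename={device}", "ioengine=libaio", "direct=1",
             f"rw={rw}"]
    if rw == "randrw":
        lines.append(f"rwmixread={read_pct}")
    lines += [f"bssplit={bssplit(mix)}", f"numjobs={threads}",
              f"iodepth={iodepth}", "group_reporting"]
    return "\n".join(lines)


print("\n\n".join(fio_job(n, r, m) for n, (r, m) in PROFILES.items()))
```

Validating that each mix sums to 100% catches transcription slips before a multi-hour preconditioning run starts.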

In our first workload we look at a fully random 4K write preconditioning profile with an outstanding workload of 16T/16Q. In this test, the Fusion ioDrive2 Duo SLC offered the highest burst performance in the group, measuring nearly 550,000 IOPS with its Linux driver. In Windows, burst speeds came in lower at 'just' 360,000-420,000 IOPS. As it neared steady state, the ioDrive2 Duo in HP mode leveled off at around 230,000 IOPS in Linux and 200,000 IOPS in Windows. In stock capacity mode, performance measured roughly 140,000 IOPS in Linux and 115,000 IOPS in Windows.

With a heavy load of 16T/16Q, average latency on the Fusion ioDrive2 Duo measured 1.85ms and 2.20ms in stock capacity mode for Linux and Windows, respectively. In high-performance configurations, latency dropped to about 1.10ms and 1.25ms.
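These latency and throughput figures hang together through Little's Law: with N I/Os outstanding and an average latency of L, a device sustains roughly N / L operations per second. A quick Python check, treating 16T/16Q as 256 outstanding I/Os and using the Linux latencies quoted above:

```python
# Little's Law: throughput ~= outstanding I/Os / average latency.

def littles_law_iops(outstanding_ios: int, avg_latency_ms: float) -> float:
    return outstanding_ios / (avg_latency_ms / 1000.0)


qd = 16 * 16  # 16 threads x 16 outstanding I/Os each
for label, lat_ms in [("HP, Linux", 1.10), ("stock, Linux", 1.85)]:
    print(f"{label}: ~{littles_law_iops(qd, lat_ms):,.0f} IOPS")
```

The predictions (about 232,700 and 138,400 IOPS) land within about 1% of the steady-state Linux results of 231,456 and 139,421 IOPS reported later in this section.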

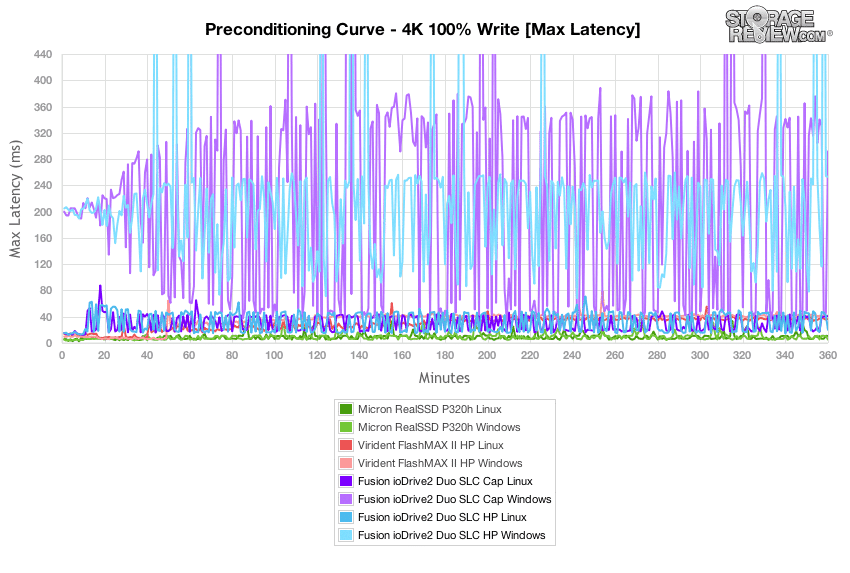

Comparing max latency on the ioDrive2 Duo in Windows and Linux with our 4K random write profile, it was easy to see that the drive favored Linux when it came to peak response times. Over the course of the preconditioning period, Windows max latency ranged from 40-360ms in stock capacity and 100-250ms in high-performance mode. This contrasted with Linux, where peak response times stayed between 20-50ms in both stock and high-performance configurations.

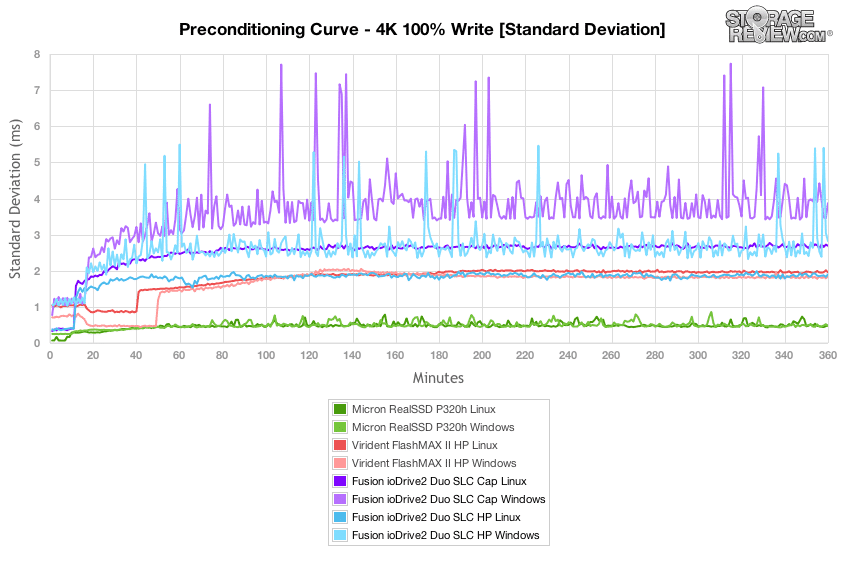

Drilling down into latency standard deviation, the Fusion ioDrive2 Duo SLC had less consistent latency in Windows than in Linux, in both stock and high-performance modes. By comparison, the Micron P320h and the Virident FlashMAX II in HP mode maintained roughly the same consistency across both operating systems.

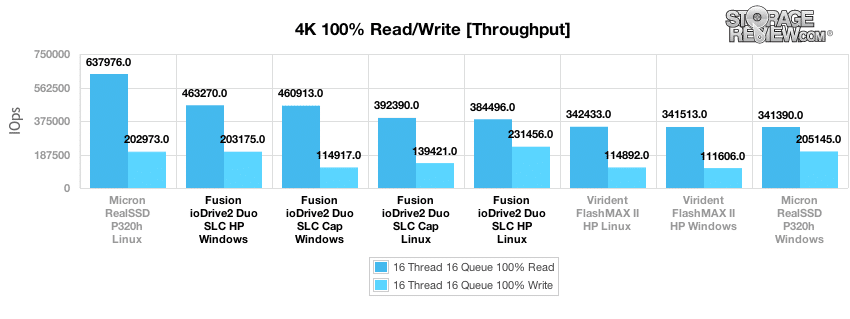

After our 12-hour preconditioning period ended, the ioDrive2 Duo SLC delivered steady-state random 4K write performance peaking at 231,456 IOPS in Linux when provisioned in high-performance mode. This gave it the highest random 4K write performance in the group, although its Windows performance was roughly in line with the write speed of the Micron P320h. In stock mode, random 4K write speeds dropped to 114,917 IOPS in Windows and 139,421 IOPS in Linux, roughly on par with the FlashMAX II in its high-performance mode. Looking at the group's 4K random read speed, the Micron P320h led the pack with 637K IOPS in Linux, with the ioDrive2 Duo SLC measuring 460-463K IOPS in Linux and 384-392K IOPS in Windows.

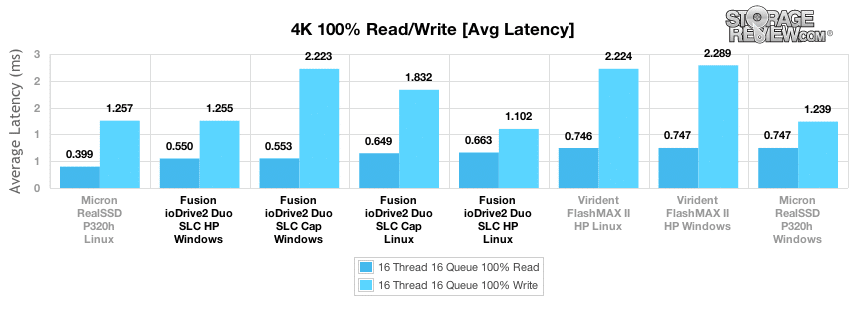

Comparing average latency under a heavy 16T/16Q workload with 100% 4K random read activity, the ioDrive2 Duo SLC measured 0.550-0.552ms in Windows and 0.649-0.663ms in Linux. Switching to write performance, it measured 1.102-1.255ms in high-performance mode and 1.832-2.223ms in stock capacity, with its stronger write results in Linux.

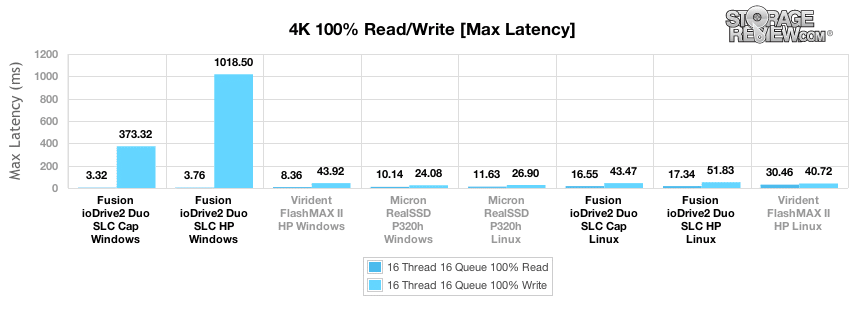

When comparing max latency, the Fusion ioDrive2 Duo stuck out in Windows with the highest peak response times in the group, measuring 373-1018ms with 4k random write activity. Its performance in Linux was much better, measuring 43-51ms at its highest. When it came to peak read latency, the ioDrive2 Duo in Windows led the pack with max latency measuring 3.32-3.76ms.

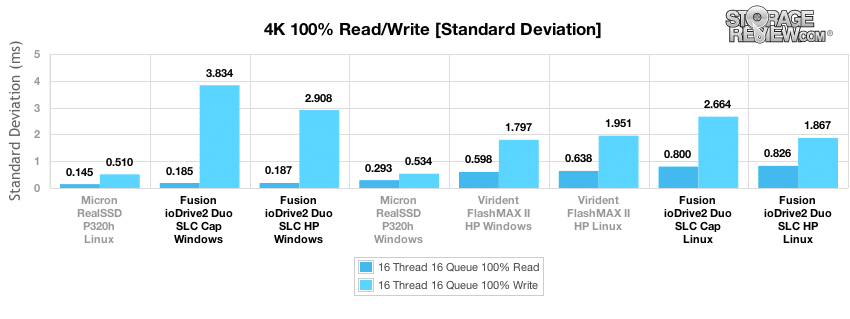

Comparing latency standard deviation between each PCIe AA in our random 4K profile, the ioDrive2 Duo SLC offered excellent read latency consistency in Windows, but with write activity it had some of the least consistent latency in the group. Linux improved on this, although its write figures there still stepped higher than others in the group. At the top of the group is the Micron RealSSD P320h, offering balanced latency standard deviation with both read and write activity.

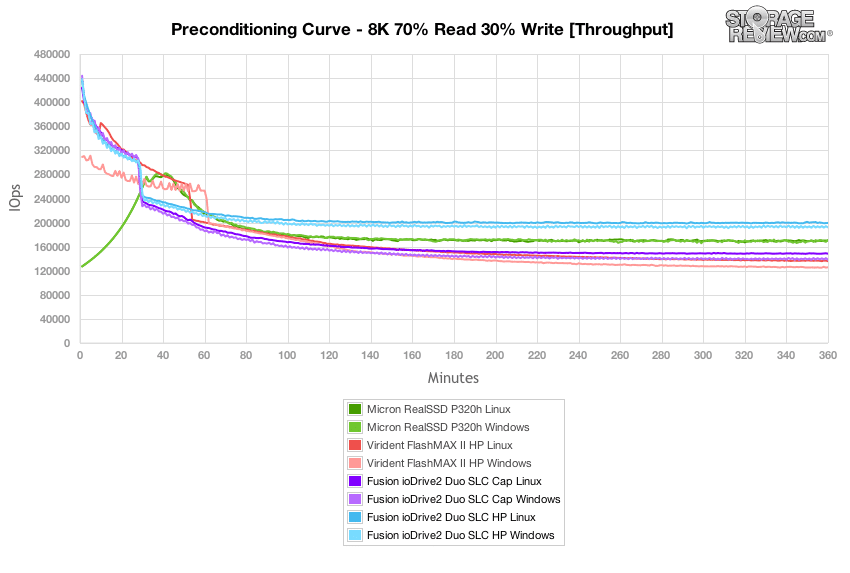

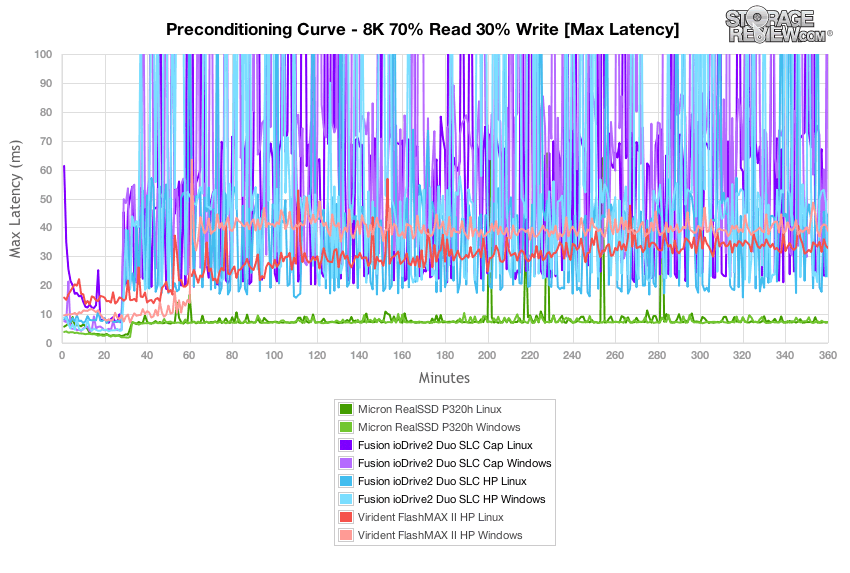

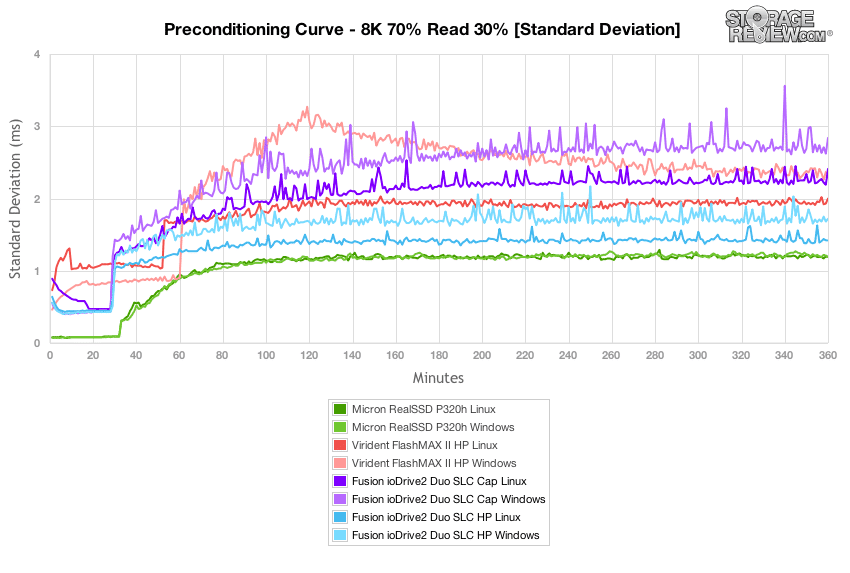

Our next test switches to an 8K 70/30 mixed workload, where the ioDrive2 Duo SLC led the pack with the highest burst speeds, measuring between 424,000 and 443,000 IOPS, with higher throughput in Windows. As performance neared steady state, the ioDrive2 Duo SLC in stock capacity measured 140-148K IOPS, which increased to 195-200K IOPS in high-performance mode.

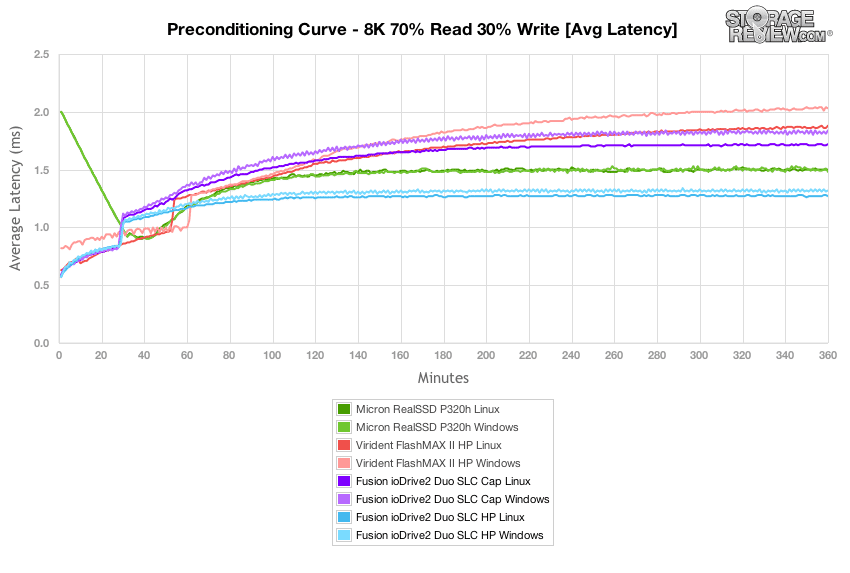

Comparing average latency in our 8k 70/30 workload, the Fusion ioDrive2 Duo SLC measured 0.57-0.59ms in burst (small lead in Windows) and increased to about 1.70-1.80ms in stock capacity and 1.28-1.33ms in high-performance mode.

As the ioDrive2 Duo SLC transitioned from burst to sustained, steady-state performance, peak latency in both Windows and Linux, in both stock and high-performance modes, fluctuated between 50ms and 250ms. This compares to 10-30ms for the Micron P320h and 30-50ms for the Virident FlashMAX II.

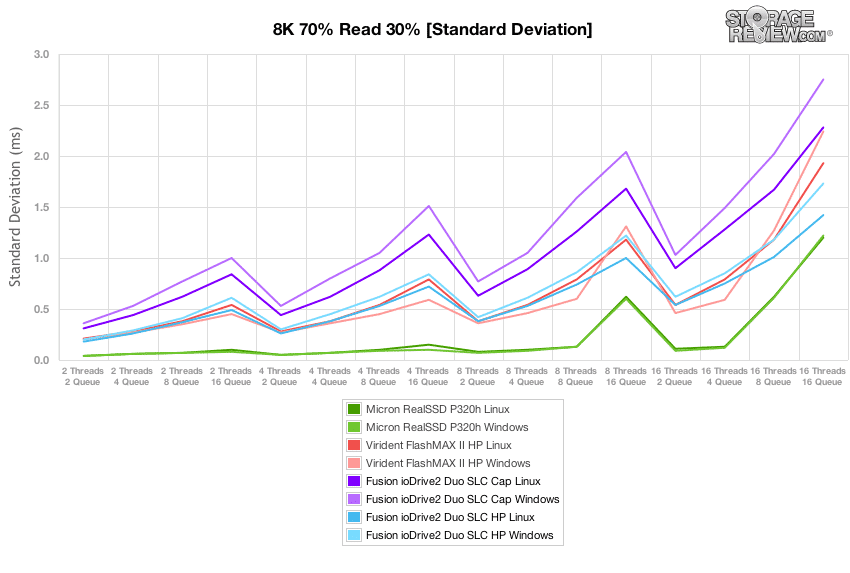

While the ioDrive2 Duo's max latency ran high in our 8K 70/30 preconditioning test, its latency consistency in high-performance mode slotted in right behind the Micron P320h. In stock capacity configuration, its standard deviation measured slightly higher than the Virident FlashMAX II's.

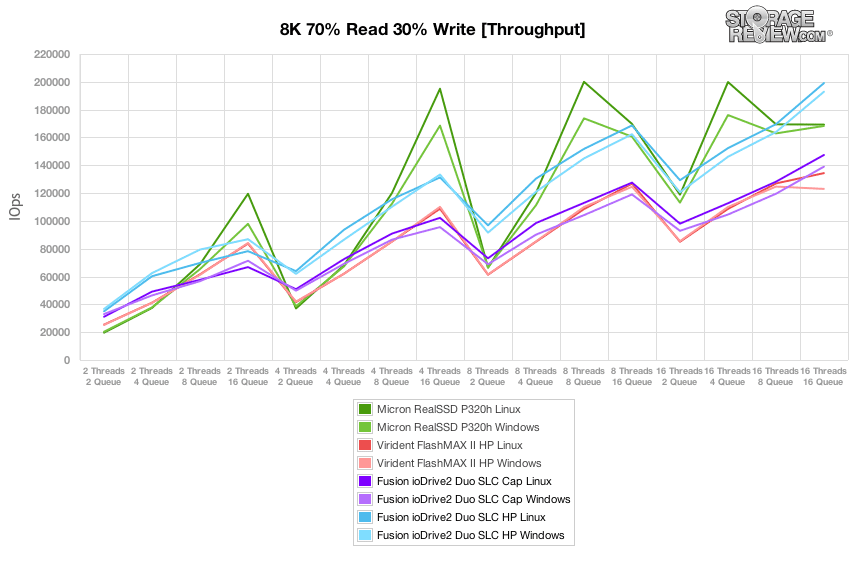

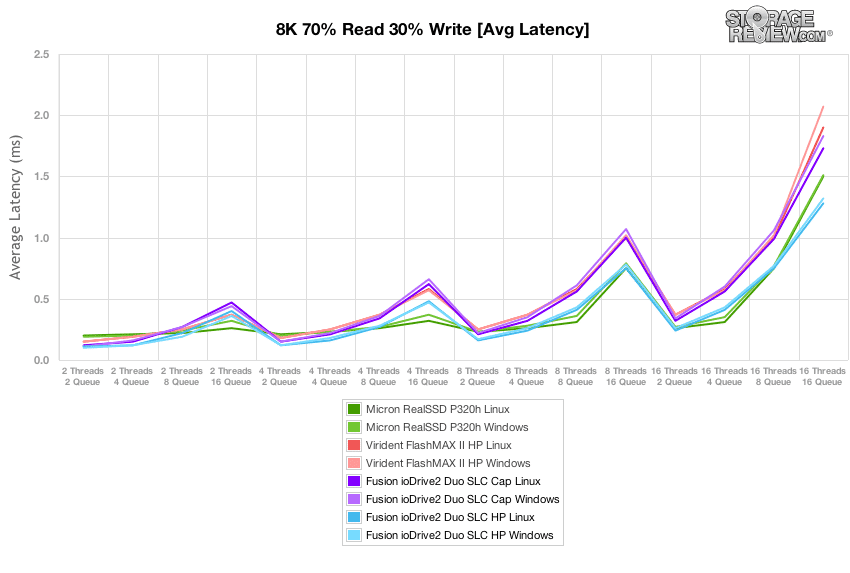

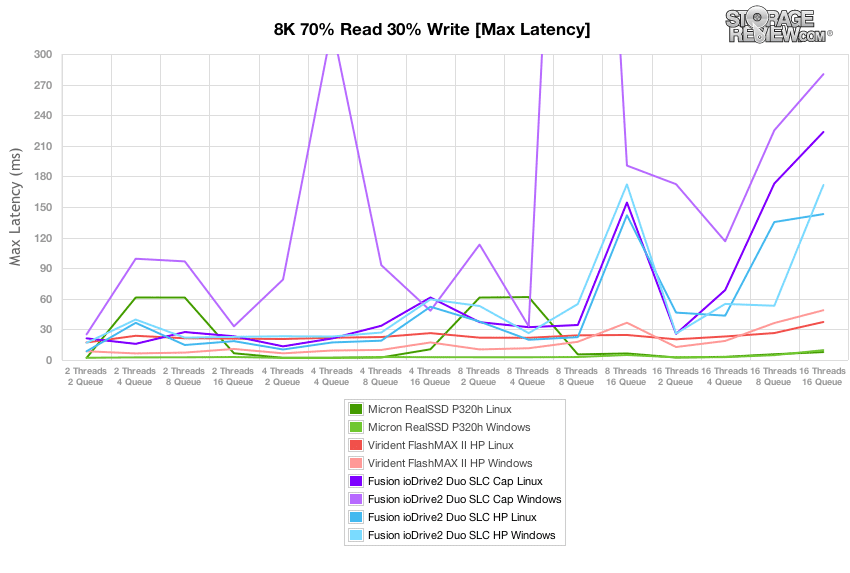

Compared to the fixed 16-thread, 16-queue max workload we used in the 100% 4K write test, our mixed workload profiles scale performance across a wide range of thread/queue combinations. In these tests we span workload intensity from 2 threads with a queue depth of 2 up to 16 threads with a queue depth of 16. In our expanded 8K 70/30 test, the Fusion ioDrive2 Duo SLC had the highest peak performance in the group in its high-performance configuration. Compared directly against the Micron P320h, it offered much higher low-queue-depth performance at 2, 4, 8, and 16 threads, but lagged behind as the queue depth increased. With stock provisioning, its performance was remarkably close to that of the Virident FlashMAX II in high-performance mode.
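Throughout these scaled tests, "effective queue depth" is simply threads multiplied by per-thread queue depth. Assuming the full grid of 2, 4, 8, and 16 threads against queue depths of 2, 4, 8, and 16 (the intermediate steps are our assumption), a short Python sketch shows which combinations land on each effective depth:

```python
# Group assumed thread/queue combinations by effective queue depth (T x Q).
from collections import defaultdict
from typing import Dict, List, Sequence

THREADS = (2, 4, 8, 16)        # assumed thread counts
QUEUE_DEPTHS = (2, 4, 8, 16)   # assumed per-thread queue depths


def effective_qd_groups(threads: Sequence[int],
                        depths: Sequence[int]) -> Dict[int, List[str]]:
    """Map each effective queue depth to the T/Q combinations that produce it."""
    groups: Dict[int, List[str]] = defaultdict(list)
    for t in threads:
        for q in depths:
            groups[t * q].append(f"{t}T/{q}Q")
    return dict(sorted(groups.items()))


for qd, combos in effective_qd_groups(THREADS, QUEUE_DEPTHS).items():
    print(f"QD{qd}: {', '.join(combos)}")
```

A single effective depth such as QD64 can be reached by several different thread/queue splits, while QD4 and QD256 each correspond to exactly one.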

In the scaled average latency segment of our 8k 70/30 test, we found that the Fusion ioDrive2 Duo SLC offered the lowest average latency in the group, thanks in part to its strong performance at low queue depths. As the queue depth increased, the Micron P320h took the lead, up until 16T/16Q where the ioDrive2 Duo SLC came out in front again.

As we compared max latency in our scaled 8K 70/30 test, we recorded some larger spikes from the ioDrive2 Duo SLC, which edged higher as the effective queue depth increased. This was most notable with the stock-capacity ioDrive2 in Windows. In Linux, as well as in Windows in high-performance mode, peak latency stayed below 70ms at effective loads up to QD64, while at QD128 and QD256 it increased to as high as 160ms and 220ms, respectively.

In our scaled 8K 70/30 test, the Micron P320h led the pack with the most consistent latency across all workloads, with the Virident FlashMAX II coming second and the ioDrive2 Duo SLC in high-performance mode trailing very close behind.

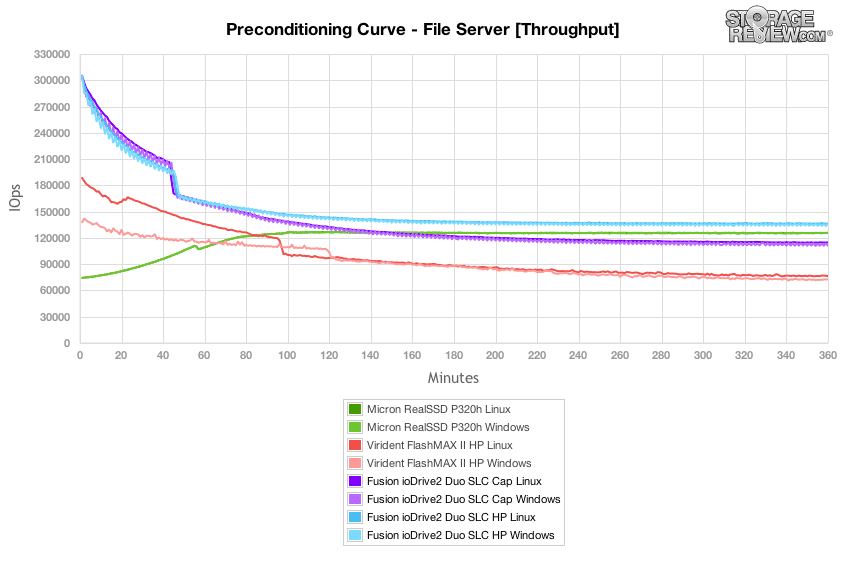

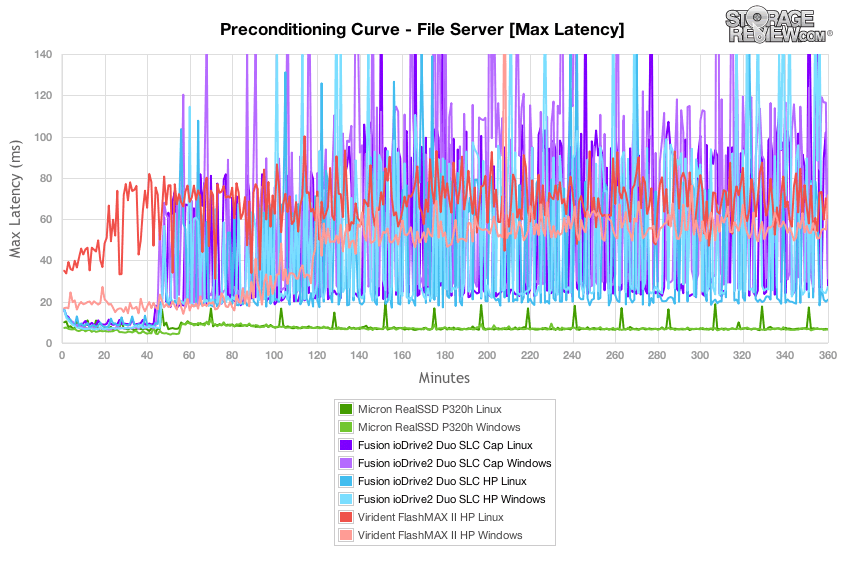

The File Server workload represents a larger spectrum of transfer sizes hitting each device, so instead of settling into a static 4K or 8K workload, the drive must cope with requests ranging from 512b to 64K. Faced with this wider spread of transfer sizes, the Fusion ioDrive2 Duo SLC flexed some muscle and led the pack in high-performance mode. In burst, the ioDrive2 Duo SLC measured about 305,000 IOPS, nearly double the throughput of the Micron P320h and Virident FlashMAX II. As it neared steady state in high-performance mode, it leveled off around 136,000 IOPS in both Windows and Linux, compared to the P320h's 125,000 IOPS. In stock-capacity formatting, throughput tapered off to around 110,000 IOPS, compared to roughly 70,000 IOPS for the Virident FlashMAX II in high-performance mode.
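Because the File Server mix blends many transfer sizes, its IOPS figures translate into bandwidth through the weighted mean transfer size. This Python sketch assumes "512b" means 512 bytes and that "k" sizes are KiB, then applies the mean to the roughly 136,000 IOPS steady-state result in high-performance mode:

```python
# Weighted mean transfer size of the File Server mix and implied bandwidth.
# Assumes 512b = 0.5 KiB and k = KiB (1024 bytes).
from typing import Dict

FILE_SERVER_MIX: Dict[float, int] = {  # transfer size in KiB -> percent of I/Os
    0.5: 10, 1: 5, 2: 5, 4: 60, 8: 2, 16: 4, 32: 4, 64: 10,
}


def mean_transfer_kib(mix: Dict[float, int]) -> float:
    """Percentage-weighted average transfer size."""
    return sum(size * pct for size, pct in mix.items()) / sum(mix.values())


mean_kib = mean_transfer_kib(FILE_SERVER_MIX)
bandwidth = 136_000 * mean_kib * 1024  # bytes per second
print(f"{mean_kib:.2f} KiB average, ~{bandwidth / 1e9:.2f} GB/s at 136,000 IOPS")
```

At roughly 1.5 GB/s, this workload stays well below the card's 3.0 GB/s read rating, which suggests that the mix is bounded by small-block IOPS and latency rather than by raw bandwidth.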

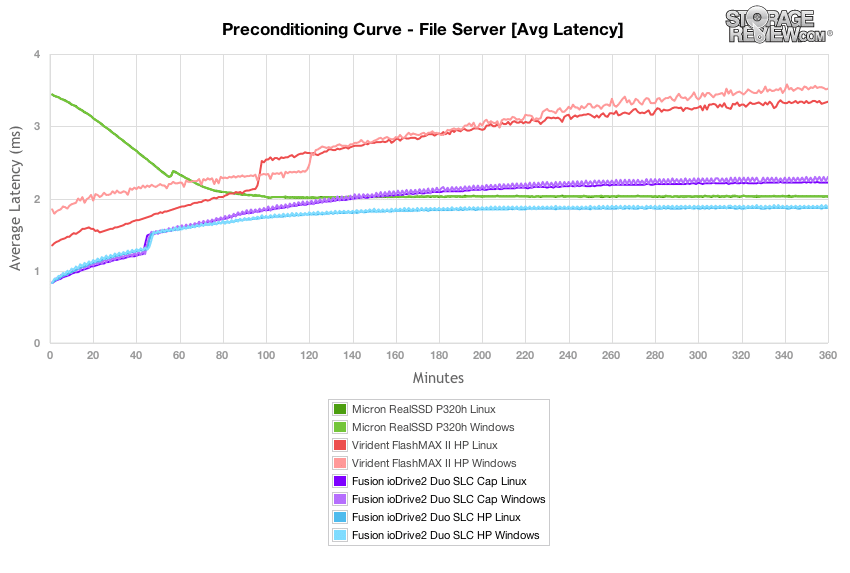

With its strong burst performance, average latency at the beginning of our preconditioning curve measured about 0.83ms from the ioDrive2 Duo SLC, before leveling off to 1.87ms in high-performance mode or about 2.25ms at stock-capacity.

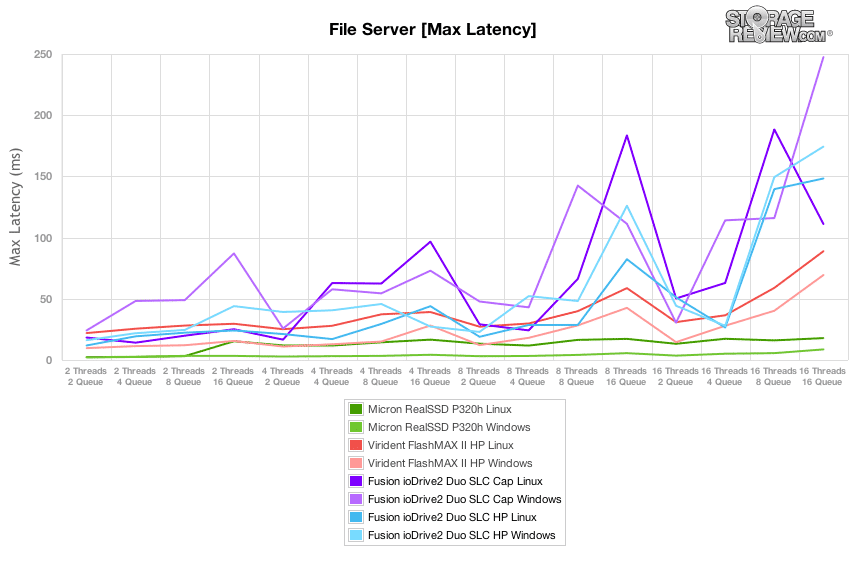

As the ioDrive2 Duo SLC transitioned from burst to sustained and steady-state operation, peak latency started to pick up as it did in our 8K 70/30 test, although not as high. Across the board we measured max latency ranging from 20ms to 200ms from the ioDrive2 Duo in all modes, with the best results in Linux HP mode. We also noted one spike slightly over 1,000ms from the ioDrive2 Duo in Windows in high-performance mode. In contrast, the Micron P320h kept peak latency under 20ms in both Windows and Linux.

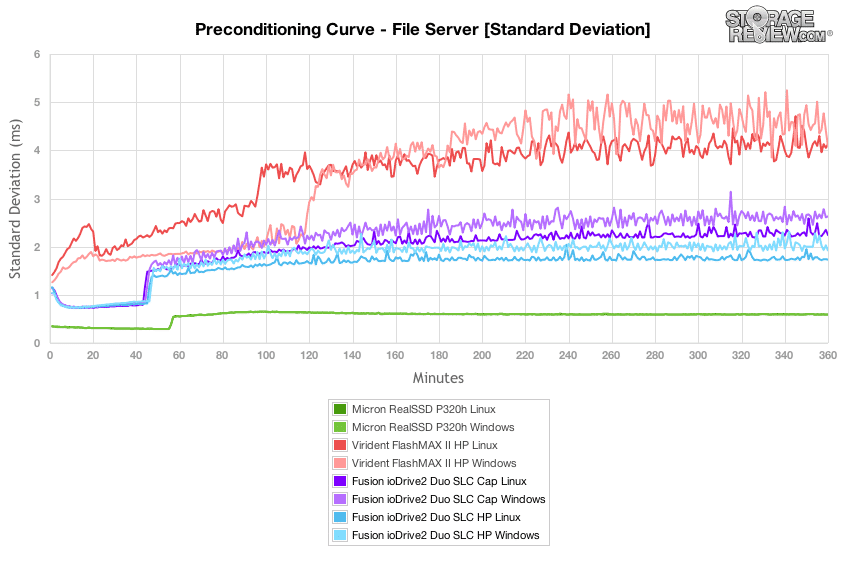

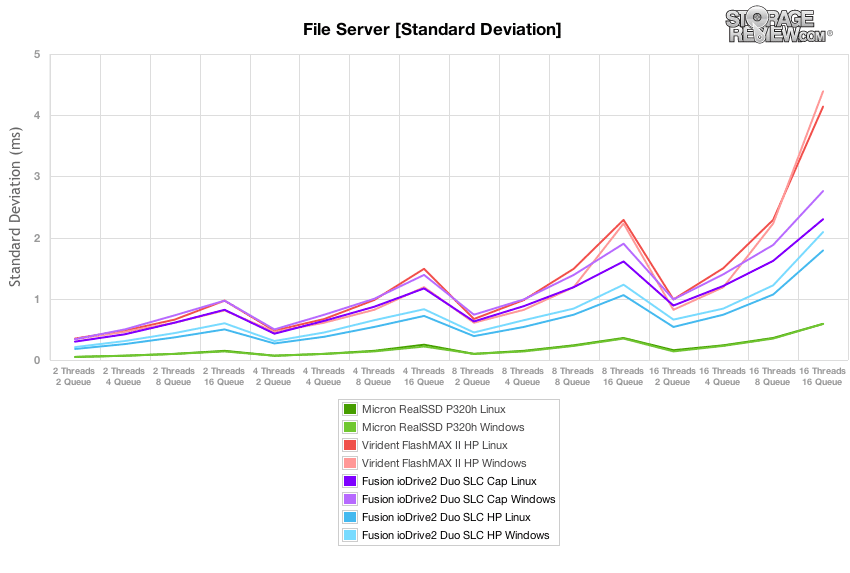

While we noticed more peak latency flutter from the ioDrive2 Duo in the previous test, its latency standard deviation ran middle of the pack, between the Micron P320h and Virident FlashMAX II. Overall, Linux offered better consistency in both stock and high-performance configurations, with high-performance mode being the more stable of the two.

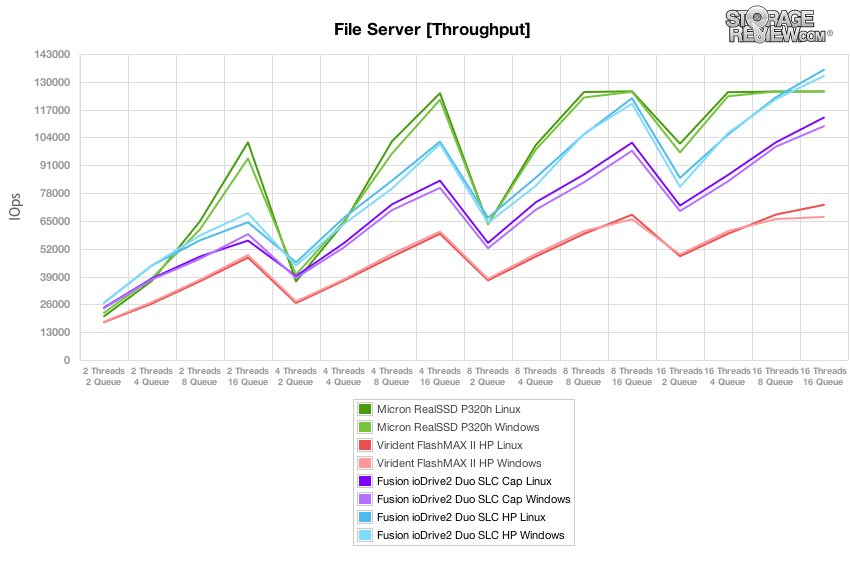

After the File Server preconditioning process completed with a constant 16T/16Q load, we moved to our main tests, which measure performance at set levels between 2T/2Q and 16T/16Q. In our main File Server workload, the ioDrive2 Duo SLC offered the highest peak performance, measuring 132,000-135,000 IOPS at 16T/16Q versus 125,500 IOPS for the P320h. The ioDrive2 Duo also showed its low-queue-depth strength over the Micron P320h at 2, 4, and 8 thread workloads, with a slight edge in high-performance mode. As queue depths increased, the Micron P320h offered the highest performance in each stage, topping out much higher.

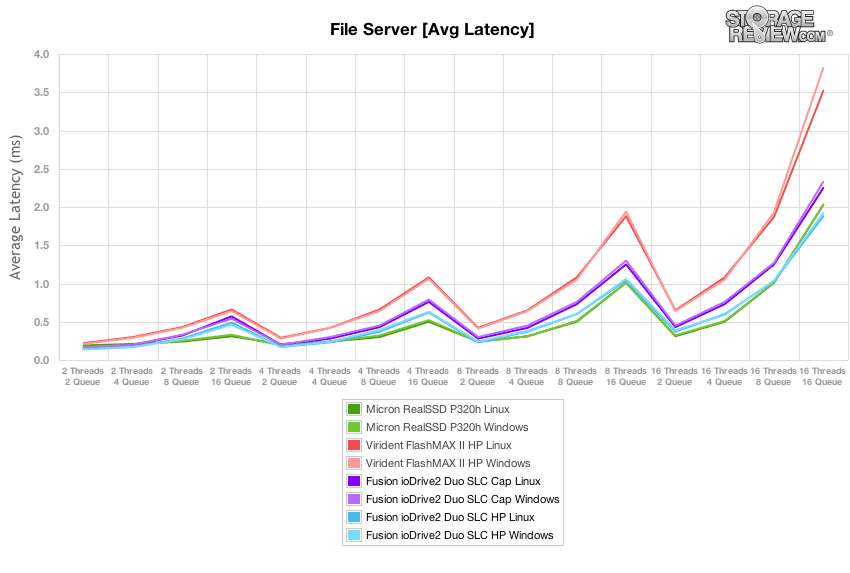

Comparing average latency between each top-in-class PCIe Application Accelerator in our File Server workload, the ioDrive2 Duo SLC had the lowest latency in the group, measuring 0.14ms for both Linux and Windows at 2T/2Q in high-performance mode. As the workload increased, the Micron P320h maintained a strong lead, up until 16T/16Q where the ioDrive2 Duo SLC had the lowest average latency at peak load.

Looking at peak response times in our main File Server test, the Fusion ioDrive2 Duo SLC had higher max latency, which started to pick up at effective queue depths of 128 and above. Below QD128, the ioDrive2 Duo's max latency measured 11-100ms, with better results in high-performance mode.

Switching our view from peak response times to latency consistency in our File Server test, the ioDrive2 Duo SLC trailed the Micron P320h in high-performance mode, and offered a slight edge in latency standard deviation versus the Virident FlashMAX II in stock configuration.

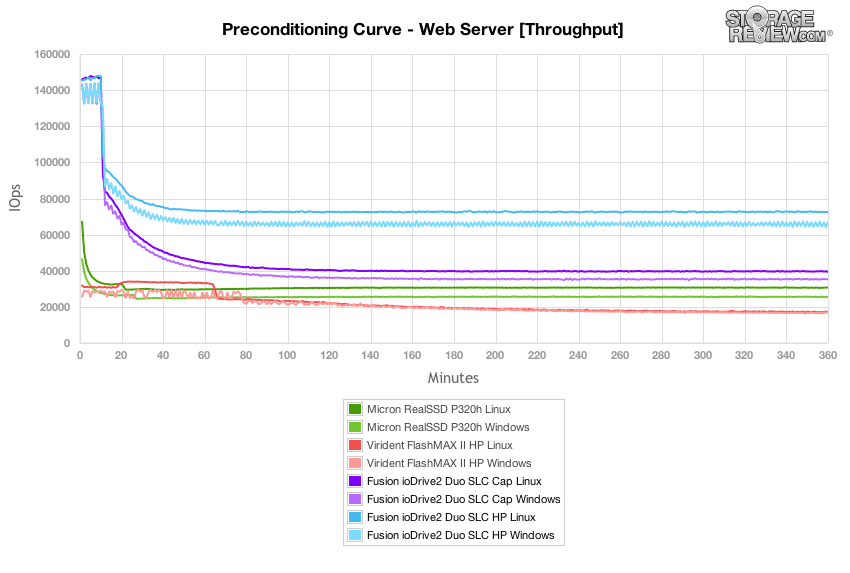

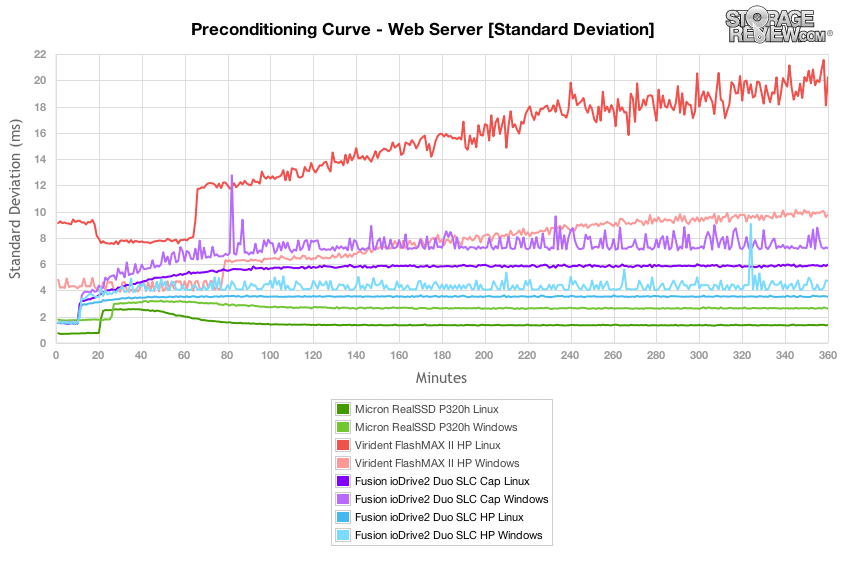

In our last synthetic workload covering a Web Server profile, which is traditionally a 100% read test, we apply 100% write activity to fully precondition each drive before our main tests. Under this stressful preconditioning test the ioDrive2 Duo SLC commanded an impressive lead over the Micron P320h and Virident FlashMAX II in both stock and high-performance configurations. In this test burst speeds topped 145,000 IOPS, whereas the Micron P320h peaked at 67,000 IOPS and the Virident FlashMAX II started at 32,000 IOPS in high-performance mode.

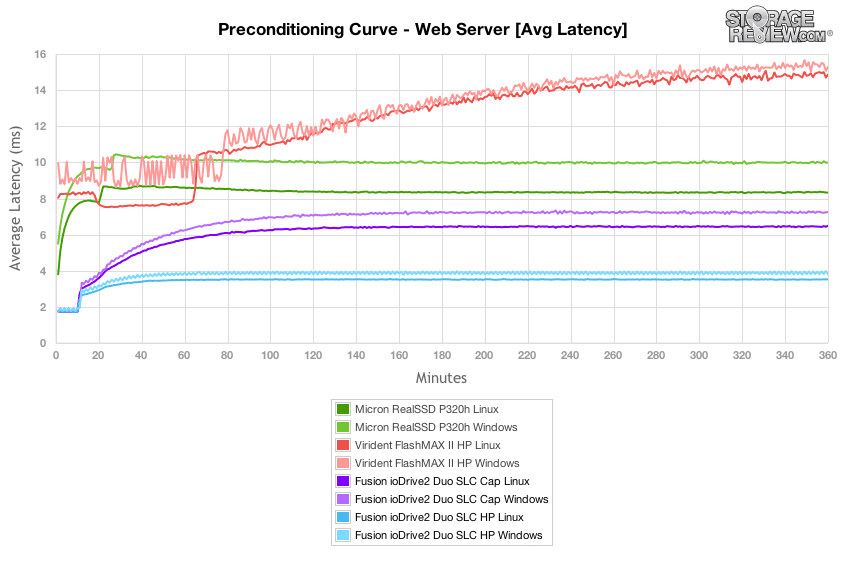

With a heavy 100% write workload of 16T/16Q in our Web Server preconditioning test, the ioDrive2 maintained an average response time of about 3.5-3.8ms in high-performance configuration and 6.4-7.2ms in stock configuration.

Comparing max latency in our Web Server preconditioning test, the ioDrive2 Duo SLC measured between 25-70ms in Linux in both stock and high-performance mode, with Windows peak latency spiking higher from 25-380ms with a couple of spikes above 1,000ms.

Comparing latency consistency in our stressful Web Server preconditioning run, the Micron P320h came in at the top of the pack, with the ioDrive2 Duo SLC coming in at the middle. When configured in high-performance mode it had stronger performance in both Windows and Linux than it did in stock-configuration.

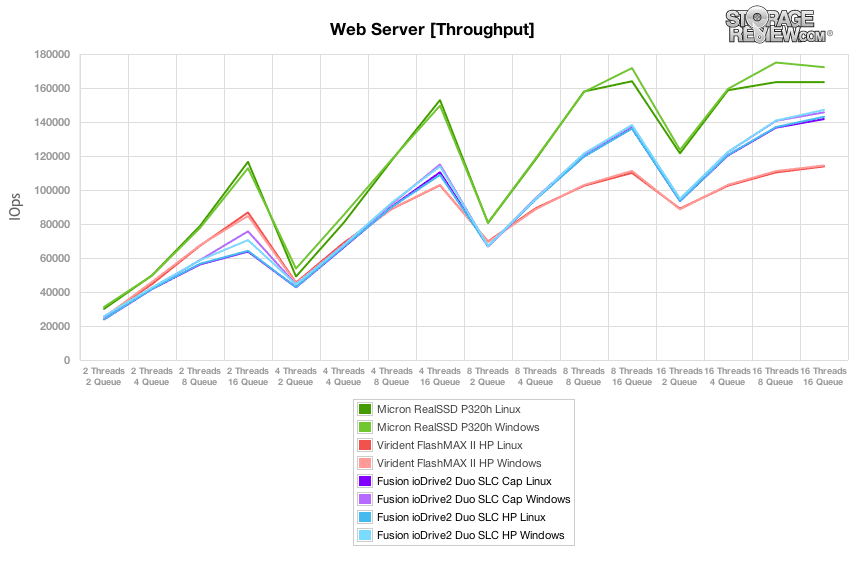

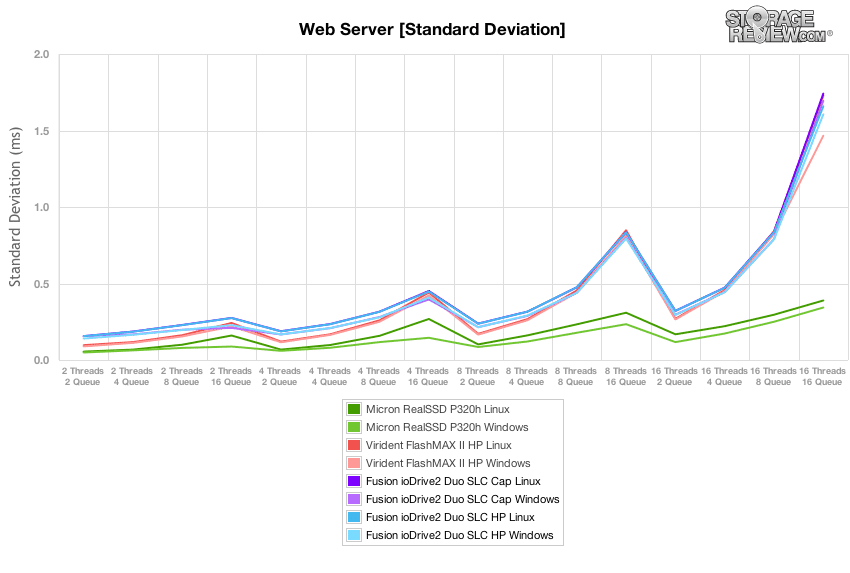

Switching to the main segment of our Web Server test with a 100% read profile, the ioDrive2 Duo SLC had performance scaling from 23.9-25.5k IOPS at 2T/2Q which increased to a peak of 141-147k IOPS at 16T/16Q. Compared to the Micron P320h, the ioDrive2 Duo couldn't match its performance at low or high queue depths, and it trailed the Virident FlashMAX II at effective queue depths below QD32, although above that level it quickly outpaced it.

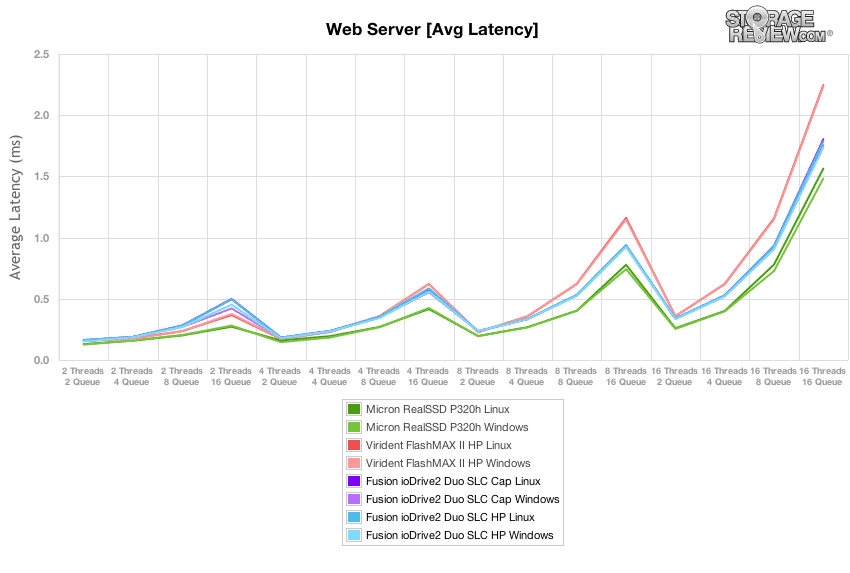

Average latency from the Fusion ioDrive2 Duo SLC ranged from 0.153-0.163ms at 2T/2Q and increased to a peak of 1.737-1.803ms at 16T/16Q. Across the board the Micron P320h had the lowest latency, while the Virident FlashMAX II had the edge at QD32 and below, which the ioDrive2 Duo SLC was able to surpass at higher effective queue depths.

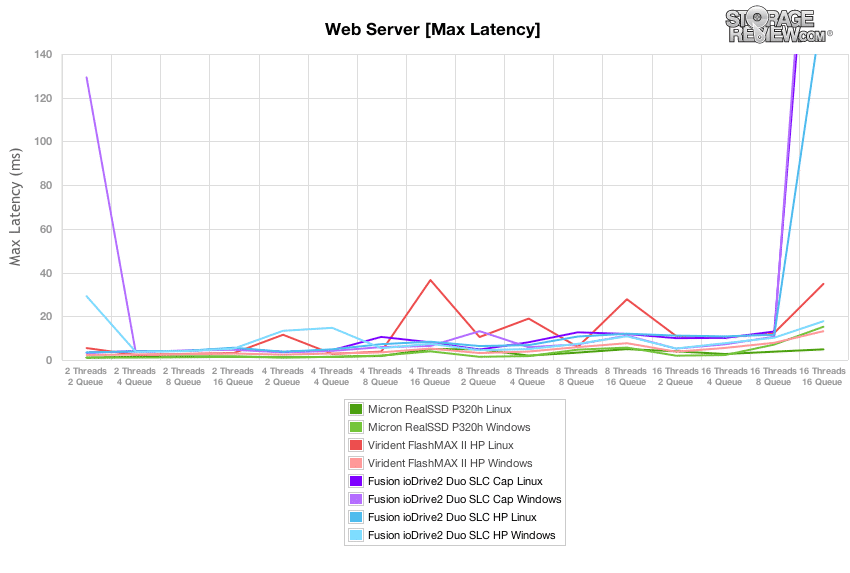

In our 100% read Web Server profile, we noted latency spikes from the ioDrive2 Duo SLC as high as 130ms, although most came in below 20ms at effective queue depths below 256. Under our highest load of 16T/16Q, the ioDrive2 Duo SLC spiked to 164-320ms, with the highest measured in Windows in stock configuration.

Comparing latency standard deviation in our 100% read Web Server test, the Micron P320h had a strong lead in the group, with the ioDrive2 Duo SLC trailing or being on-par with the Virident FlashMAX II from low to high effective queue depths.

Conclusion

Fusion-io has shown quite an ability to deliver more than incremental change with the ioDrive2 Duo SLC application accelerator. From inner workings like Adaptive FlashBack NAND failure protection to the enhanced board design that moves the NAND onto its own daughter boards, Fusion-io has made several hardware changes that make the ioDrive2 better than its predecessor. The development team has worked aggressively to mitigate latency in the drive; we saw those benefits in the 100% 4K and 8K 70/30 tests, where the ioDrive2 SLC posted the best latency and throughput scores under peak load. It also had the strongest performance at low queue depths, with a significant lead over the Micron P320h and FlashMAX II in our 8K 70/30 test at QD2 for 2, 4, and 8 thread workloads. This also gave it the lowest average latency, since it needed fewer outstanding I/Os to drive significantly higher performance. On the management side, the ioDrive2 comes with ioSphere, the most comprehensive drive management software available. Compared against any bundled software paired with application accelerators currently on the market, it wins hands down in terms of features and interface design.

We've seen that most application accelerators generally perform better in one OS than another. That's why we test drives in both Windows and Linux, so we can suss out each drive's strengths and weaknesses. As with prior Fusion-io drives, the ioDrive2 Duo does exceedingly well in Linux environments, though it gets a little more erratic in Windows. While this is more evident in our charts, Windows performance overall doesn't suffer much, but there is certainly room for improvement. Under peak loads of 16T/16Q we noticed peak latency blips from the ioDrive2 Duo, although they didn't greatly impact its overall latency consistency. While some of the latency numbers aren't where Fusion-io would like them to be, the FPGA-based design allows very low-level updates that should be able to smooth out some of these issues over time. While on the compatibility topic, it is also worth noting that Fusion-io supports one of the more robust lists of operating systems, making the drive easy to deploy in a wide range of use cases.

The Fusion-io card design often comes under fire from the competition because it uses the host CPU and RAM to do most of the NAND management work. As we've seen, though, this is an efficient design, also employed by Virident, that delivers impressive performance and latency by leveraging increasingly powerful CPUs. Micron's P320h is a shining example of the alternative approach, leveraging a very good on-board controller, but that card tops out at 700GB of SLC storage, illustrating the compromises storage vendors must make. A newer line of attack against Fusion-io, this one around form factor, is somewhat interesting. The ioDrive2 Duo uses an FHHL design, whereas most others use HHHL, which is the more universal design from a server-fit perspective. All of the servers in our lab from HP, Dell, Lenovo, and SuperMicro support both FHHL and HHHL formats, but it's worth noting that the Fusion-io design is somewhat of an outlier in this regard. From a design perspective, using more space to allow for modular NAND segments seems acceptable. It limits design costs over the long life of the product line as NAND changes, but competitors are quick to note its limitations in special use cases.

Pros

- Offers the highest peak performance in our 100% 4K, 8k 70/30 and File Server workloads

- ioSphere management suite offers best in class feature set

- Highest throughput and lowest latency in 8k 70/30 and File Server workloads at low queue depths

- Proven architecture with features like Adaptive FlashBack to enhance reliability

Cons

- Issues with max latency in both Windows and Linux

- Loses ground to the Micron P320h between the lowest and highest effective queue depths

Bottom Line

The Fusion-io ioDrive2 Duo SLC application accelerator provides 1.2TB of the fastest available storage in several workloads, while offering many design and feature upgrades over the prior-generation product. One such upgrade is Adaptive FlashBack, which preserves data and keeps the drive operational even after multiple NAND die failures. With the industry's best management software and the ability to continually improve the drive without a new controller, the ioDrive2 Duo SLC will certainly find long-term duty serving the most critical applications that can take advantage of excellent storage performance.
