Fusion-io’s ION Data Accelerator software platform leverages ioMemory flash storage and open server hardware to accelerate applications and SAN performance through sharing or clustering high-speed PCIe flash. ION Data Accelerator is designed for use with Tier 1 server hardware from a number of major vendors, where it functions as an application accelerator appliance that supports block storage protocols including 8/16Gb Fibre Channel, QDR/FDR InfiniBand, and 10Gbit iSCSI.

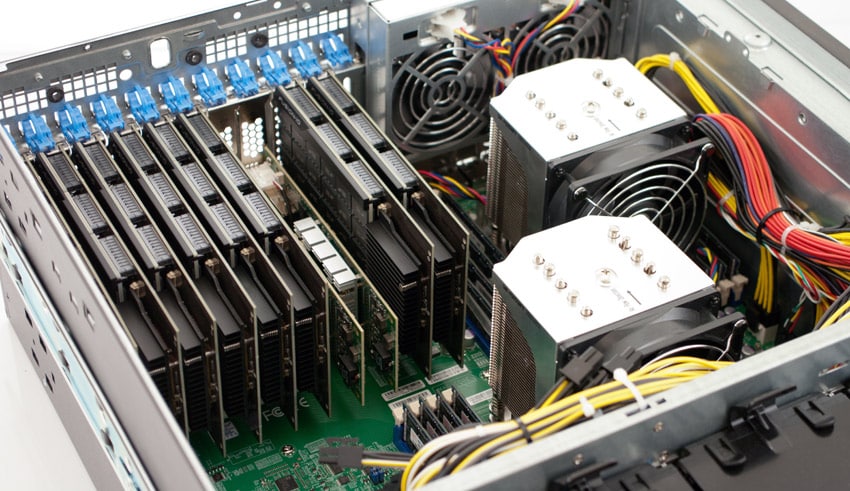

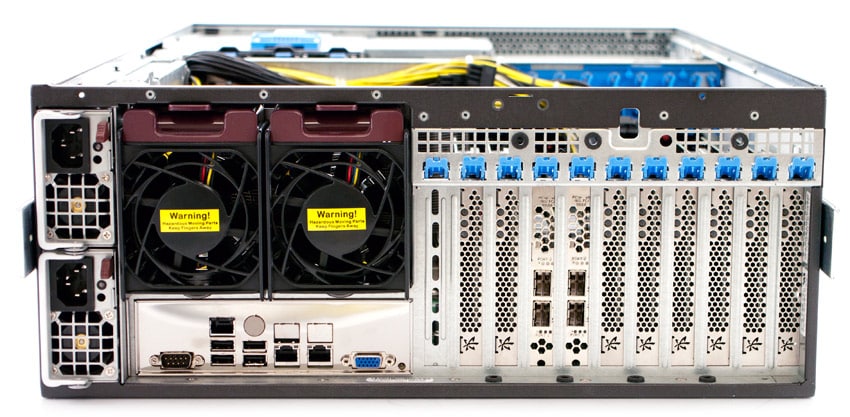

ION Data Accelerator’s capabilities as an application accelerator appliance can be fully brought to bear when used in conjunction with a server platform like the Supermicro X9DRX+-F that is built for maximum performance across all of its available PCIe slots. This review will focus on the performance of ION Data Accelerator when used with the X9DRX+-F motherboard in a SuperChassis 747 along with Fusion-io’s 3.2TB ioScale PCIe cards. In order to make sure that network performance is not a bottleneck, we will use two dual-port 16Gb QLogic 2600-series HBAs for SAN connectivity.

ioScale began as an application acceleration solution available only in large quantities for enterprise applications. More recently, Fusion-io has made ioScale cards available in smaller quantities, within reach of a much broader range of customers. ION Data Accelerator solutions with ioScale represent a contrast to SAN and application acceleration via SSD and tiered storage. In theory, a PCIe solution can scale performance with less new equipment and infrastructure investment than competing SSD-based architectures require. In other words, if Fusion-io’s application accelerator approach can provide more targeted acceleration than SSD storage, the company should continue to find new clients for this technology even if ioScale carries a price premium in per-gigabyte terms.

ION Data Accelerator improves storage network performance not only by managing the most demanding I/O requests and keeping copies of hot data in order to avoid the latency of hard drives, but also by freeing storage arrays from needing to manage high-speed caches or tiers. Those lighter throughput requirements can improve the performance of the underlying mass storage arrays. ION Data Accelerator integrates with Fusion-io’s ioTurbine caching solution, which provides both read and write acceleration.

ION Data Accelerator System Requirements

- Supported Servers

- Dell: PowerEdge R720, PowerEdge R420

- HP: ProLiant DL370 G6, ProLiant DL380 G7, ProLiant DL380p Gen8, ProLiant DL580 G7

- IBM: x3650 M4

- Supermicro: Superserver 1026GT-TRF, Superserver 1027GR-TRF, Superserver 6037-TRXF

- Cisco: UCS C240 M3 (support is for single-node)

- Basic Hardware Requirements

- Storage Controllers

- Fibre Channel requires QLogic 2500 Series Host Bus Adapters (HBAs).

- InfiniBand requires Mellanox ConnectX-2 or ConnectX-3 InfiniBand Host Channel Adapters (HCAs).

- iSCSI requires 10Gbit iSCSI NICs from Intel, Emulex, Mellanox, or Broadcom.

- ioMemory

- ION Data Accelerator only supports ioMemory, including ioDrive, ioDrive Duo, ioDrive2, ioDrive2 Duo, and ioScale.

- All ioMemory products within each ION Data Accelerator system must be identical in type and capacity.

- Minimum configuration supported: one ioDrive (no RAID capability unless you have more than one ioDrive).

- RAM: ION Data Accelerator requires a base of 8GB plus 5GB per TB of ioMemory. For example, if you have 4.8TB of ioMemory, your system should have 8GB + 4.8 * 5GB, or 32GB of RAM.

- Hard Drives: Fusion-io recommends servers have mirrored hard drives for boot and applications.

- Management NIC: the basic included NIC LOM on the supported hardware platform is sufficient.

- Additional HA System Requirements

- A PCIe slot with a 40Gbit Mellanox ConnectX-3 interconnect available for use.

- Both interconnect ports must be connected.

- Each ION Data Accelerator system must be identically configured with ioDrives of identical type and capacities.

- NTP (Network Time Protocol) must be implemented.
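The RAM sizing rule listed under Basic Hardware Requirements (a base of 8GB plus 5GB per TB of ioMemory) is simple enough to check in a few lines. The following is a minimal sketch; the function name `required_ram_gb` is our own and is not part of any Fusion-io tool.

```python
def required_ram_gb(iomemory_tb: float) -> float:
    """RAM in GB suggested by the stated rule: 8GB base + 5GB per TB of ioMemory."""
    if iomemory_tb <= 0:
        raise ValueError("ioMemory capacity must be positive")
    return 8.0 + 5.0 * iomemory_tb


if __name__ == "__main__":
    # The 4.8TB example from the requirements list.
    print(f"4.8TB of ioMemory: {required_ram_gb(4.8):.0f}GB RAM")
    # The eight 3.2TB ioScale cards used in this review (25.6TB raw).
    print(f"8 x 3.2TB ioScale: {required_ram_gb(8 * 3.2):.0f}GB RAM")
```

By this rule, the eight-card configuration tested in this review would call for 136GB of RAM.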

Management

Enterprises can use Fusion ioSphere management with ION Data Accelerator for centralized control from both a graphical user interface and the command line. ioSphere can also provide monitoring and management for all ioMemory devices in the data center, and it streamlines the tracking of ioMemory health and life expectancy information throughout a deployment.

ION Data Accelerator can also be used in a high availability configuration, with functionality built on integrated RAID to protect against component failures as well as asymmetric active/active failover clustering to protect against system failures. ION Data Accelerator HA can be administered through the ioSphere GUI or a command line interface. It includes replication features based on Linbit’s DRBD, which synchronously replicates each write operation across a pair of clustered ION Data Accelerator nodes, and it leverages the Corosync and Pacemaker tools for cluster resource management and messaging during failures.

From a usability standpoint, Fusion-io got a lot of things right by making the setup process easy enough for small IT departments to manage without expertise in all things storage or networking. The configuration we tested leveraged a Supermicro X9DRX+-F motherboard with 10 PCIe 3.0 slots, allowing us to fill the server with eight 3.2TB ioScale PCIe SSDs as well as two dual-port 16Gb FC HBAs. Using the standard 2.1.11 release ISO, we were able to turn a bare server into an ION Accelerator in under 15 minutes.

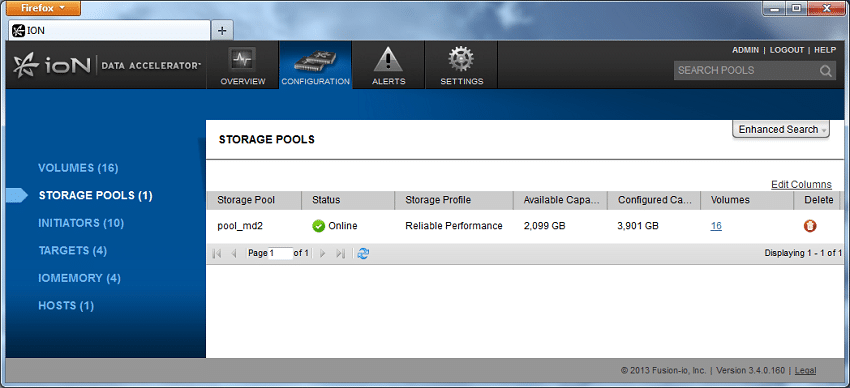

Once the system is up and running, the first step is creating a storage pool from which to carve out LUNs. Users get the choice of three storage modes: RAID0, RAID10, and direct access. We chose RAID10, or "Reliable Performance," for our main testing environment, which still offers plenty of performance but can handle an ioMemory failure without losing data. With that storage pool in place, the next step is provisioning LUNs for your SAN environment.
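To make the capacity trade-off between the pool modes concrete, here is a rough sketch. It assumes conventional RAID semantics (RAID0 stripes across all cards, RAID10 stores every block on two cards) and ignores formatting and metadata overhead. The mode labels and the function name are illustrative and are not ION Data Accelerator identifiers.

```python
def usable_capacity_tb(card_count: int, card_tb: float, mode: str) -> float:
    """Approximate pool capacity in TB under the assumed RAID semantics."""
    if card_count < 1 or card_tb <= 0:
        raise ValueError("need at least one card with positive capacity")
    raw = card_count * card_tb
    if mode in ("raid0", "direct"):
        # Striping or direct access exposes all raw capacity.
        return raw
    if mode == "raid10":
        # Assumption: mirroring pairs cards, so an even count is required.
        if card_count % 2:
            raise ValueError("RAID10 is assumed to need an even number of cards")
        return raw / 2
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    for mode in ("raid0", "raid10", "direct"):
        print(f"8 x 3.2TB in {mode}: {usable_capacity_tb(8, 3.2, mode):.1f}TB")
```

Under these assumptions, eight 3.2TB cards give about 25.6TB in RAID0 and about 12.8TB in RAID10, which is the price paid for surviving a card failure.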

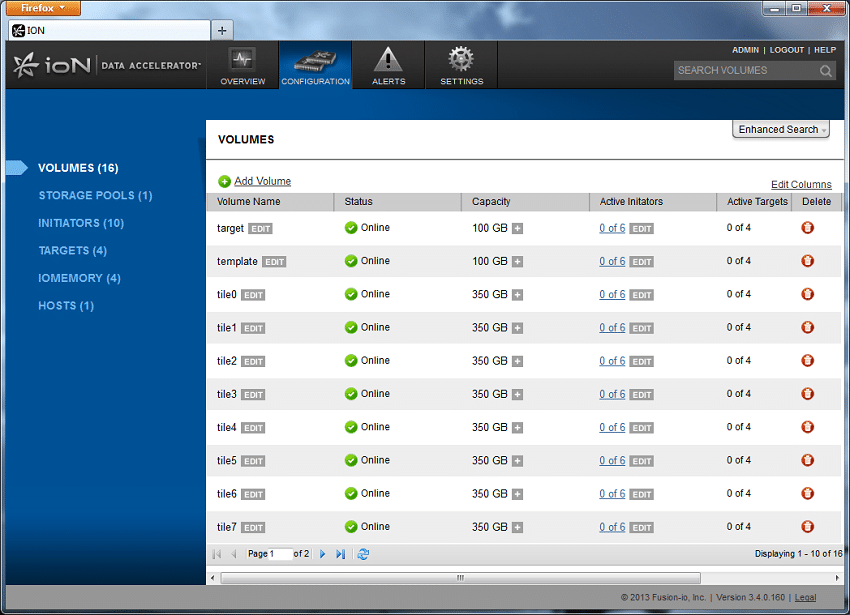

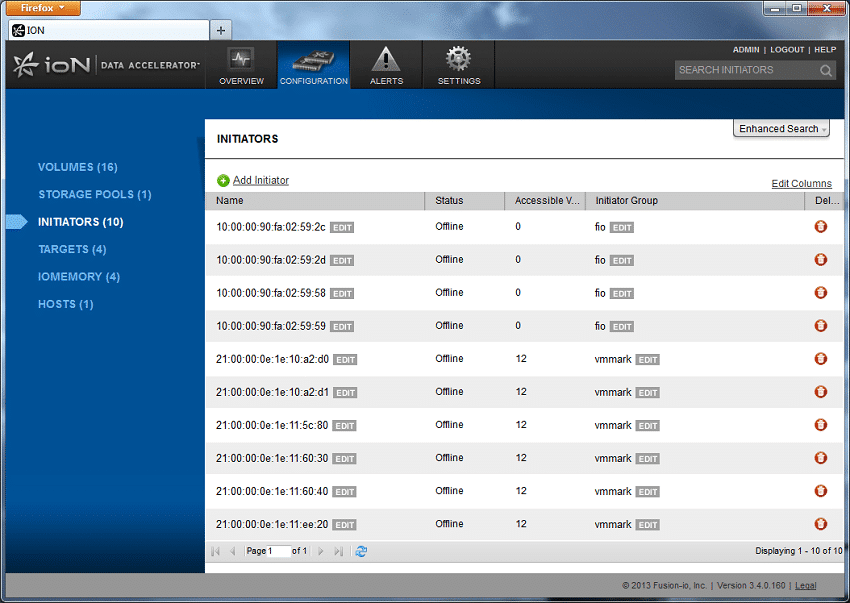

In our testing environment, we provisioned 12 LUNs for VMmark as well as additional LUNs for synthetic benchmarks. The process was very easy, with the user simply entering the capacity required, the initiator group being granted access, and the sector size (which can be set to 512-byte or 4K). The interface allows LUNs to be created one at a time or many at once to quickly roll out an environment. The next step is creating initiator groups so that multiple FC interfaces can access a given LUN in a multi-pathed or virtualized environment. As can be seen below, we had our VMware initiators in one group, and our Windows initiators for FIO testing in another.

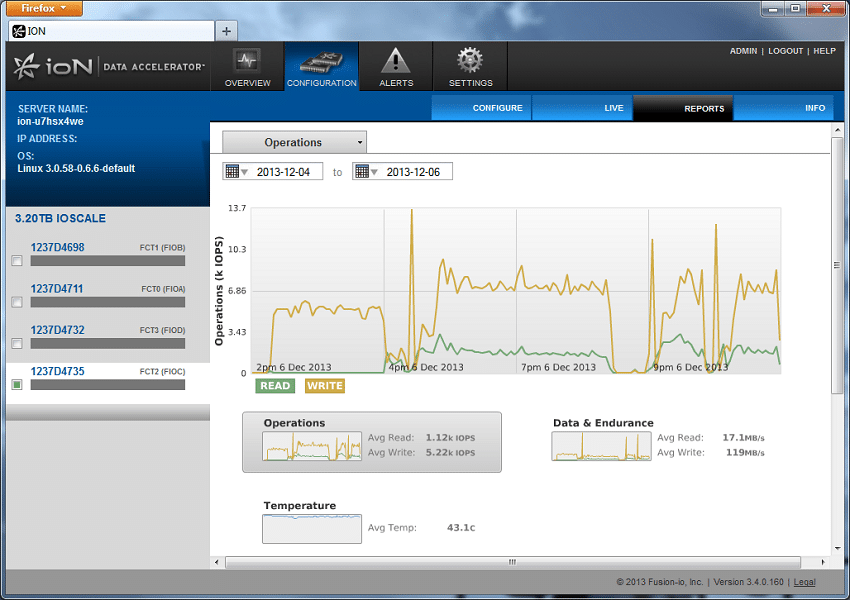

Once the environment is set up and active, the ION Accelerator continues to provide users with a wealth of management information. All performance monitoring is stored for historical purposes, which allows admins to track network and device performance down to the IOP, as well as track system vitals such as flash endurance or temperatures. In a sample report from VMmark testing, the throughput over the selected time period, average transfer speeds, and thermal monitoring are all close at hand. This data can also be narrowed down to specific ioMemory devices.

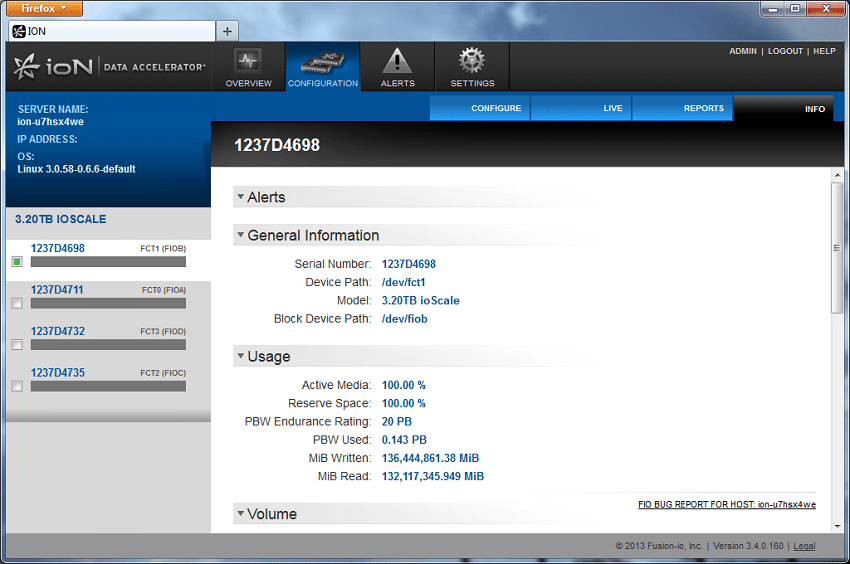

For more detailed ioMemory information, users can drill into specific cards to look at mount points, total endurance used and other detailed information.

Application Performance Analysis

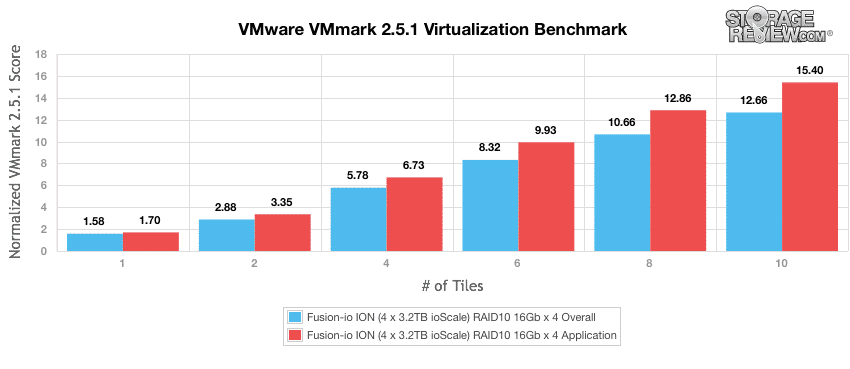

The StorageReview Enterprise Lab employs a VMmark-based virtualization benchmark in order to evaluate compute and storage devices that are commonly used in virtualized environments. ION Data Accelerator’s advanced PCIe utilization and management functionality is intended to improve the performance of such environments, making it a clear candidate for the VMware VMmark benchmark. Our VMmark protocol utilizes an array of sub-tests based on common virtualization workloads and administrative tasks with results measured using a tile-based unit corresponding to the ability of the system to perform a variety of virtual workloads such as cloning and deploying of VMs, automatic VM load balancing across a datacenter, VM live migration (vMotion) and dynamic datastore relocation (storage vMotion).

Measuring the ION Data Accelerator’s performance with four 3.2TB ioScale PCIe cards, the highest normalized VMmark 2.5.1 application score was 15.40 and the highest overall score was 12.66, both at 10 tiles. At one tile, the normalized application score was 1.7 while the overall score reached 1.58.

Enterprise Synthetic Benchmarks

Our enterprise storage synthetic benchmark process preconditions each device into steady state using the same workload the device will be tested with, under a heavy load of 16 threads with an outstanding queue of 16 per thread. The device is then tested at set intervals across multiple thread/queue depth profiles to show performance under light and heavy usage.

Preconditioning and Primary Steady-State Tests:

- Throughput (Read+Write IOPS Aggregate)

- Average Latency (Read+Write Latency Averaged Together)

- Max Latency (Peak Read or Write Latency)

- Latency Standard Deviation (Read+Write Standard Deviation Averaged Together)

Our analysis of ION Data Accelerator includes four profiles comparable to our past enterprise storage benchmarks and widely-published values such as max 4k read and write speed and 8k 70/30, which is commonly published in manufacturer specifications and benchmarks.

- 4k

- 100% Read and 100% Write

- 8k

- 100% Read and 100% Write

- 70% Read/30% Write

- 128k

- 100% Read and 100% Write

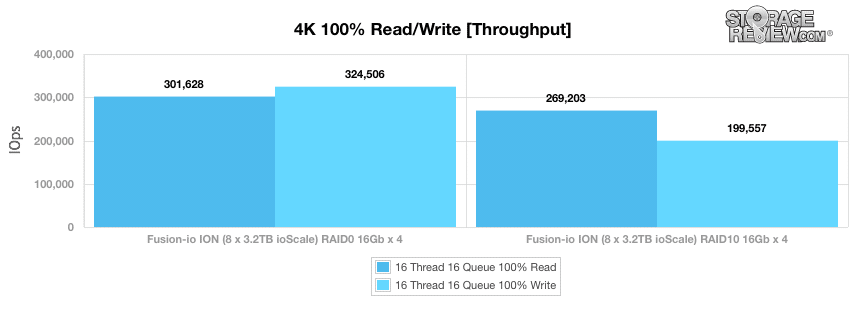

Measuring the throughput of the ION Data Accelerator system in our 4k tests reveals read IOPS of 301,638 and write IOPS of 324,506 when configured in a RAID0 array for maximum performance. Reconfigured as a RAID10 array, the system maintained a read performance of 269,203 IOPS while write performance came in at 199,557 IOPS.

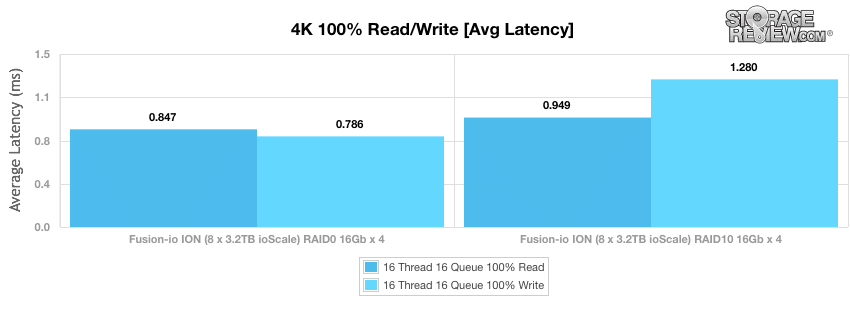

Plotting average 4k read and write latencies indicates similar delays for read and write operations for the RAID0 array at 0.847ms and 0.786ms respectively. In our benchmark of the RAID10 array, the average read latency increases slightly to 0.949ms while the write latency reaches 1.280ms.
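The 4k throughput and average latency figures can be cross-checked against each other with Little's Law, which ties throughput to the number of requests in flight divided by the average time each request takes. The sketch below assumes the 4k results were recorded at the heavy 16-thread, 16-queue load described in our methodology (256 outstanding I/Os); the function and variable names are our own.

```python
THREADS = 16
QUEUE_DEPTH = 16
OUTSTANDING_IOS = THREADS * QUEUE_DEPTH  # 256 requests in flight (assumed load)

# (average latency in ms, measured IOPS) as reported in this review
RESULTS_4K = {
    "RAID0 read": (0.847, 301_638),
    "RAID0 write": (0.786, 324_506),
    "RAID10 read": (0.949, 269_203),
    "RAID10 write": (1.280, 199_557),
}


def predicted_iops(avg_latency_ms: float, outstanding: int = OUTSTANDING_IOS) -> float:
    """Little's Law: throughput = requests in flight / average time per request."""
    return outstanding / (avg_latency_ms / 1000.0)


def relative_error(predicted: float, measured: float) -> float:
    """Absolute difference between prediction and measurement, as a fraction."""
    return abs(predicted - measured) / measured


if __name__ == "__main__":
    for name, (latency_ms, measured) in RESULTS_4K.items():
        estimate = predicted_iops(latency_ms)
        print(f"{name}: predicted {estimate:,.0f} IOPS, measured {measured:,} "
              f"({relative_error(estimate, measured):.2%} apart)")
```

Under that assumption, each predicted figure lands within 1% of the measured one, so the higher average latencies in RAID10 account for essentially all of its throughput deficit relative to RAID0.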

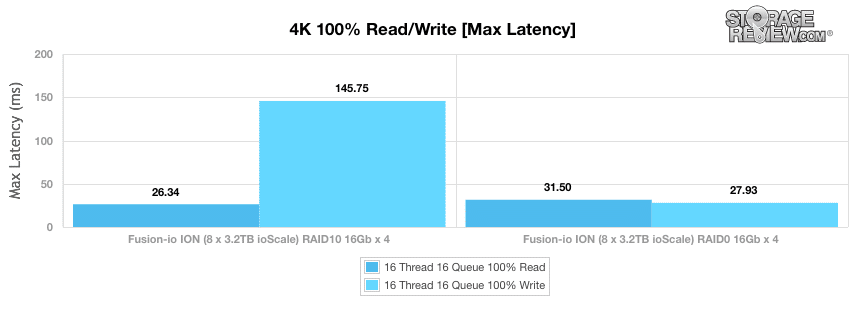

Maximum latency results reveal a notable high point in 4k write latency in the RAID10 configuration, which experienced a delay of 145.75ms at one point during the evaluation.

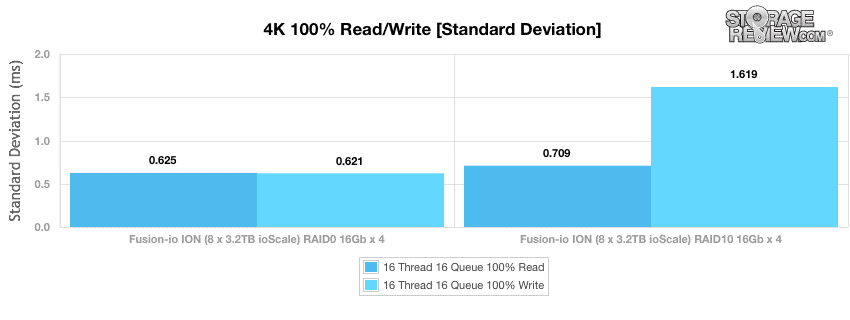

The 4k latency standard deviation also reflects the greater variability in write latencies that the RAID10 array imposes. When in RAID0, the Fusion ION Data Accelerator configuration kept both standard deviations near 0.62ms, which increased to 0.709ms for RAID10 reads and 1.619ms for write operations.

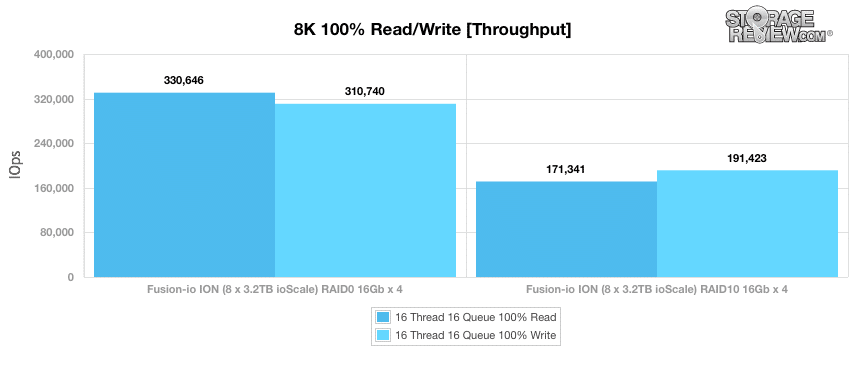

After preconditioning the ioScale cards for 8k workloads, we measured the throughput of the two array types with 8k transfers and a heavy load of 16 threads and a queue depth of 16, for both 100% read and 100% write operations. The ION system reached 330,646 read IOPS and 310,740 write IOPS configured as a RAID0 array, which scaled down to 171,341 IOPS for read and 191,423 for write in RAID10.
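To put the 8k IOPS figures in bandwidth terms, the short sketch below converts IOPS to MB/s and compares the result with the aggregate capacity of the four 16Gb FC ports. Two assumptions are ours and not from the test data: that an "8k" transfer is 8,192 bytes, and that each 16Gb FC port carries a nominal 1,600MB/s in one direction.

```python
FC_PORTS = 4         # two dual-port 16Gb HBAs in the test system
FC_PORT_MB_S = 1600  # assumed nominal 16Gb FC rate per port, one direction
BLOCK_8K = 8192      # assumed size of an "8k" transfer in bytes


def iops_to_mb_s(iops: int, block_bytes: int) -> float:
    """Convert an IOPS figure at a fixed transfer size to MB/s (decimal megabytes)."""
    return iops * block_bytes / 1_000_000


def link_utilization(mb_s: float) -> float:
    """Fraction of the assumed aggregate FC bandwidth consumed by a workload."""
    return mb_s / (FC_PORTS * FC_PORT_MB_S)


if __name__ == "__main__":
    results_8k = {
        "RAID0 read": 330_646,
        "RAID0 write": 310_740,
        "RAID10 read": 171_341,
        "RAID10 write": 191_423,
    }
    for name, iops in results_8k.items():
        mb_s = iops_to_mb_s(iops, BLOCK_8K)
        print(f"{name}: {mb_s:,.0f}MB/s, {link_utilization(mb_s):.0%} of FC capacity")
```

On these assumptions the RAID0 8k read result works out to roughly 2.7GB/s, well under half of the nominal capacity of the four FC ports, so the small-block results are unlikely to be limited by the fabric.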

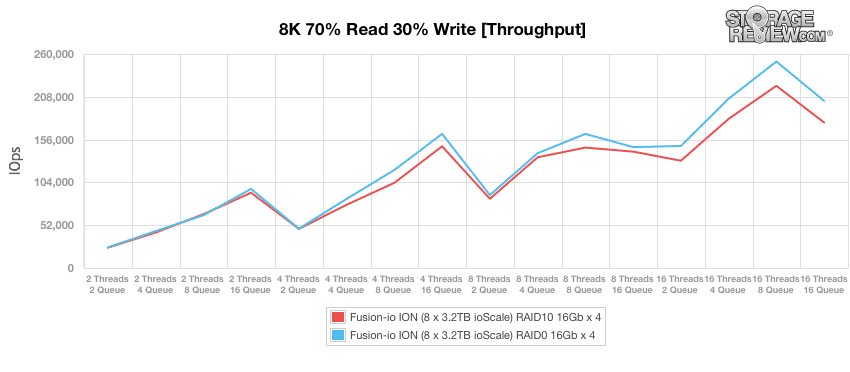

To get a more nuanced picture of performance with 8k transfers, we next used a test composed of 70% read operations and 30% write operations across a range of thread and queue counts. Throughput remained more competitive between the two RAID types in this benchmark, with RAID0 offering its greatest performance advantages under heavier workloads in general, and particularly with deep queues.

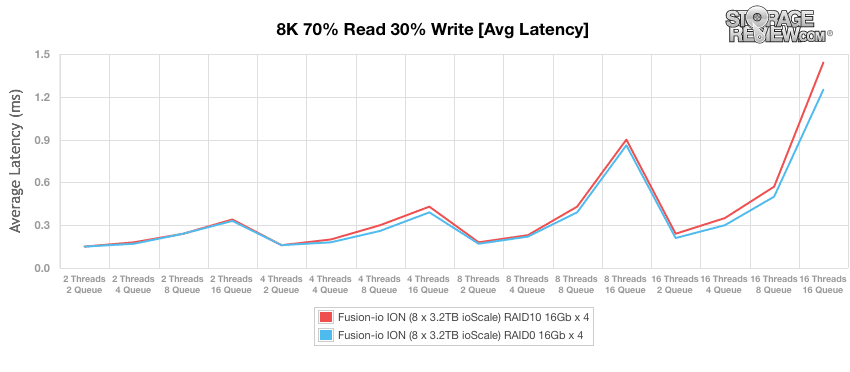

Our chart of average latencies from the 8k 70/30 benchmark reveals very similar performance from both RAID configurations, with RAID0 edging out RAID10 as the thread and queue counts increase.

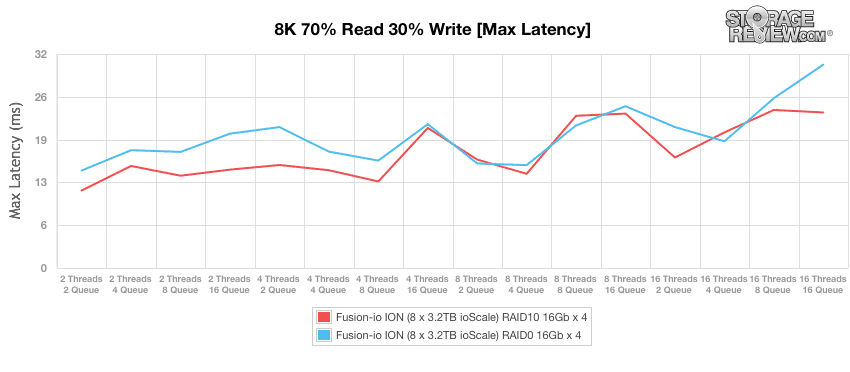

Maximum latency results during this test present a less consistent pattern, where RAID10 maintains a slight advantage on the low and high ends of the workload spectrum.

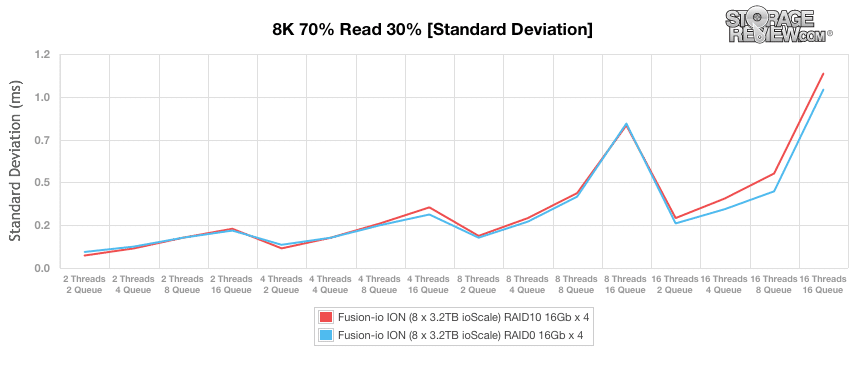

Both RAID configurations managed very consistent latency performances during the 8k 70/30 benchmark. At high queue depths and when the thread count exceeds 8, RAID0 edges out RAID10.

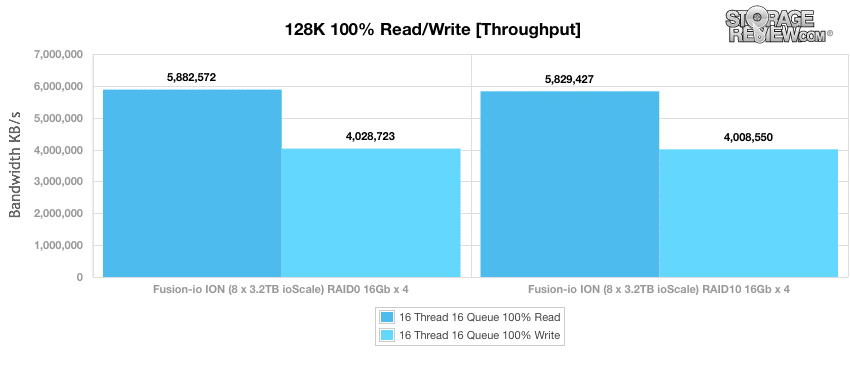

Our final synthetic benchmark uses a much larger 128k transfer size with tests for both 100% read operations and 100% write operations. The larger transfer size nearly eliminates the performance advantage of a RAID0 array for the ION system, with performance results for both read and write operations within 1% variation across array type.

Conclusion

Fusion-io offers some of the most sophisticated application acceleration devices available, and with its ioScale PCIe cards now available to a much broader segment of the enterprise market we expect to see more frequent and diverse utilization of this technology. Fusion’s ION Data Accelerator reveals one direction that PCIe storage is moving: towards coordinated and automated pooling of high-speed storage resources across multiple host servers. Between ION’s improved performance and its unified management for ioScale PCIe cards across multiple servers, the ION Data Accelerator has demonstrated that PCIe flash has a future and use cases distinct from SSD-based approaches to acceleration.

When it comes down to usability and performance, Fusion-io’s ION Accelerator has plenty to offer. Rolling the software out on our own server took less than 15 minutes start to finish. Provisioning storage after it was online took mere minutes, with intuitive menus and uncomplicated settings to get storage dished out to waiting servers. From a performance perspective, we were more than impressed with our 4-port 16Gb FC connectivity in testing, which provided upwards of 5.8GB/s sequential read and 4GB/s sequential write, with peak random I/O topping 301k IOPS read and 324k IOPS write. Application performance as tested in VMmark 2.5.1 was also fantastic, handling 10 tiles with ease even as we scaled our configuration down to four ioScale PCIe SSDs in RAID10. As a “roll your own flash SAN” on commodity hardware, Fusion-io set the bar high with ION Accelerator.

Pros

- Allows users to build the exact flash SAN they need down to storage, networking interface, and server

- Easy to set up and manage

- Excellent performance

- Includes support for HA configurations

Cons

- Limited server support list

Bottom Line

The Fusion-io ION Data Accelerator gives IT admins a fresh way to deploy flash in a shared storage environment without many of the restrictions found in the mainstream SAN market. Fusion-io provides a simple GUI, support for most mainstream server brands and interface cards, and HA configuration options, while still driving tremendous scalable I/O out of a single box.
