In recent years, storage performance has kept getting faster, and the old storage protocols have struggled to keep up, becoming a bottleneck for data centers. Even with fabric speeds of 100GbE and high-performance interconnects such as InfiniBand, legacy network storage protocols restrain flash devices' performance, keeping it isolated inside the device or the box. In this article, we take a quick look at Non-Volatile Memory Express (NVMe). We then give an overview of NVMe over Fabrics (NVMe-oF) and NVMe over RDMA over Converged Ethernet (NVMe over RoCE), a protocol specification developed to remove the bottlenecks of modern storage networks.

Driven by the constant demand for low latency and high throughput in clouds and data centers, NVMe over Fabrics is widely discussed. The NVMe specification has existed for less than a decade, and because NVMe-oF is even newer, there are still many misunderstandings about how it works in practice and how it benefits businesses. The technology keeps evolving and is being widely adopted in the IT industry, and several network vendors now deliver NVMe-oF related products to the enterprise market. To keep up with advanced data center technologies, it is therefore essential to understand what NVMe-oF is, its capabilities and performance characteristics, how it can be deployed, and how it can be integrated into new and existing solutions.

NVMe and NVMe-oF

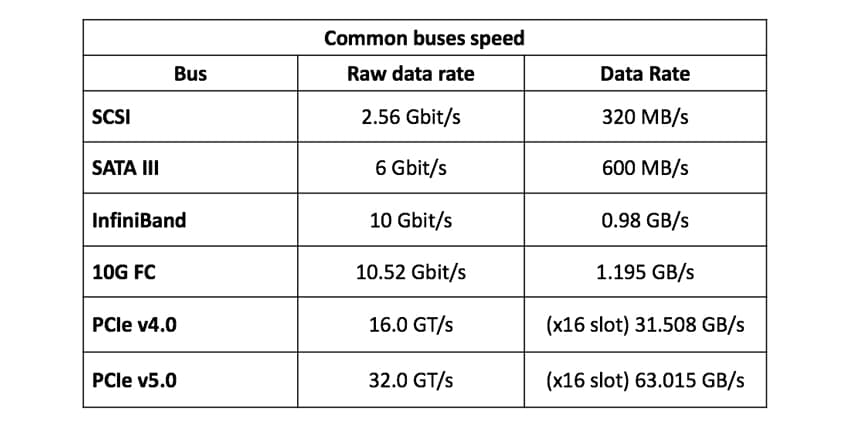

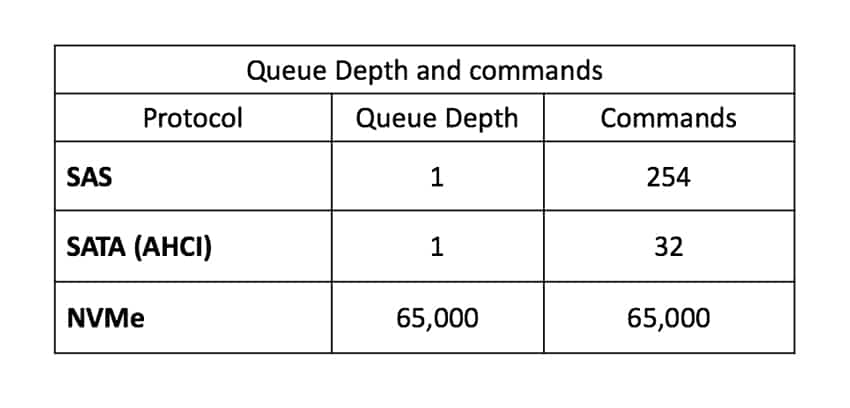

All-flash arrays (AFAs) emerged in data centers to answer the call for high performance. They are far faster than legacy storage and can effortlessly reach the 1M IOPS that the market has long promised. However, many of these arrays kept using a near-legacy storage technology, the SATA SSD. SATA SSDs rely on the AHCI (Advanced Host Controller Interface) command protocol, and SATA controllers can typically also run in a legacy IDE compatibility mode. AHCI was designed for spinning disks, not for modern flash-based drives. This legacy interface creates a bottleneck for today's SSDs and storage array controllers, because the SATA III bus with AHCI limits data transfer to about 600MB/s.

To unlock the full capability of SSDs, a new technology was needed to expose the higher speed of flash. NVMe is a specification that lets flash storage (SSDs) take full advantage of flash performance. The first NVMe specification was published in 2011 to improve application response time, bringing new and better capabilities. NVMe SSDs come in several form factors, the best known being AIC (add-in card), U.2, U.3, and M.2. NVMe SSDs leverage the high-speed Peripheral Component Interconnect Express (PCIe) bus in the computer or server by connecting directly to it. NVMe reduces CPU overhead and streamlines operations, which lowers latency and increases Input/Output Operations per Second (IOPS) and throughput. For instance, NVMe SSDs provide write speeds higher than 3,000MB/s, which is 5x faster than SATA SSDs and roughly 30x faster than spinning disks.
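The 600MB/s SATA ceiling and the "5x" and "30x" figures can be reproduced with a little arithmetic. The sketch below assumes SATA III's 6Gb/s line rate with 8b/10b encoding (8 data bits per 10 transmitted bits) and, as a loud assumption not stated in the text, a spinning disk sustaining about 100MB/s.

```python
# Back-of-the-envelope bandwidth comparison behind the "5x" and "30x" claims.
# Assumption: a spinning disk sustains roughly 100 MB/s sequential throughput.

SATA3_LINE_RATE_GBPS = 6.0      # SATA III signalling rate in Gb/s
ENCODING_EFFICIENCY = 8 / 10    # 8b/10b encoding: 8 data bits per 10 line bits

NVME_WRITE_MB_S = 3000.0        # NVMe write speed quoted in the text
HDD_MB_S = 100.0                # assumed typical hard disk throughput


def sata3_usable_mb_per_s() -> float:
    """Usable SATA III bandwidth in MB/s after 8b/10b encoding overhead."""
    data_bits_per_s = SATA3_LINE_RATE_GBPS * 1e9 * ENCODING_EFFICIENCY
    return data_bits_per_s / 8 / 1e6  # bits -> bytes -> megabytes


def speedup(fast_mb_s: float, slow_mb_s: float) -> float:
    """How many times faster one device is than another."""
    return fast_mb_s / slow_mb_s


if __name__ == "__main__":
    sata = sata3_usable_mb_per_s()
    print(f"SATA III usable bandwidth: {sata:.0f} MB/s")
    print(f"NVMe vs SATA SSD: {speedup(NVME_WRITE_MB_S, sata):.0f}x")
    print(f"NVMe vs HDD: {speedup(NVME_WRITE_MB_S, HDD_MB_S):.0f}x")
```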

With NVMe, I/O operations are processed in parallel, so many requests can be serviced at the same time. Large tasks can be divided into several smaller tasks that are processed independently, similar to a multi-core CPU running multiple threads, where each core works independently of the others on its own tasks.
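The idea of splitting one large request into smaller, independently processed commands spread across per-core queues can be sketched as follows. This is a conceptual model only: the 128KiB maximum transfer size and the helper names are hypothetical, and Python threads stand in for independent hardware queues (they illustrate independence, not true hardware parallelism).

```python
from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

Command = Tuple[int, int]  # (byte offset, length) of one I/O command


def split_into_commands(offset: int, length: int, max_transfer: int) -> List[Command]:
    """Split one large request into commands no larger than max_transfer bytes."""
    commands = []
    end = offset + length
    while offset < end:
        size = min(max_transfer, end - offset)
        commands.append((offset, size))
        offset += size
    return commands


def distribute(commands: List[Command], num_queues: int) -> Dict[int, List[Command]]:
    """Assign commands round-robin to per-core queues (one queue per core)."""
    queues: Dict[int, List[Command]] = defaultdict(list)
    for i, cmd in enumerate(commands):
        queues[i % num_queues].append(cmd)
    return dict(queues)


def process_queue(commands: List[Command]) -> int:
    """Hypothetical stand-in for a queue's work: returns bytes 'completed'."""
    return sum(size for _, size in commands)


def run_parallel(queues: Dict[int, List[Command]]) -> Dict[int, int]:
    """Process every queue independently, with no shared queue or lock between them."""
    with ThreadPoolExecutor(max_workers=max(1, len(queues))) as pool:
        futures = {qid: pool.submit(process_queue, cmds) for qid, cmds in queues.items()}
        return {qid: f.result() for qid, f in futures.items()}


if __name__ == "__main__":
    cmds = split_into_commands(0, 1_048_576, 131_072)  # 1 MiB in 128 KiB pieces
    per_queue = distribute(cmds, num_queues=4)
    print(run_parallel(per_queue))
```

Giving each core its own queue is what removes contention: no core has to wait for a lock on a shared queue before submitting work.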

Queue depth (QD) is another advantage of NVMe over AHCI. AHCI and SATA support a single queue with 32 commands (a queue depth of 32), whereas NVMe supports up to 64K (65,535) I/O queues, each holding up to 64K (65,536) commands. Along with lower latency, this accelerates performance for busy servers processing many simultaneous requests.
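To put the difference in scale, the snippet below multiplies queues by entries per queue. These are specification ceilings on queue entries, not what real controllers expose (most offer far fewer queues), and because a full circular queue leaves one entry empty, the usable number of outstanding commands is slightly lower.

```python
# Theoretical queue-entry ceilings for AHCI vs NVMe (spec maximums, not real devices).
AHCI_QUEUES = 1
AHCI_SLOTS_PER_QUEUE = 32

NVME_IO_QUEUES = 65_535            # maximum I/O queues (plus one admin queue)
NVME_ENTRIES_PER_QUEUE = 65_536    # maximum entries per queue


def total_slots(queues: int, per_queue: int) -> int:
    """Total queue entries available across all queues."""
    return queues * per_queue


ahci = total_slots(AHCI_QUEUES, AHCI_SLOTS_PER_QUEUE)
nvme = total_slots(NVME_IO_QUEUES, NVME_ENTRIES_PER_QUEUE)
print(f"AHCI: {ahci:,} slots")   # AHCI: 32 slots
print(f"NVMe: {nvme:,} slots")   # NVMe: 4,294,901,760 slots
```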

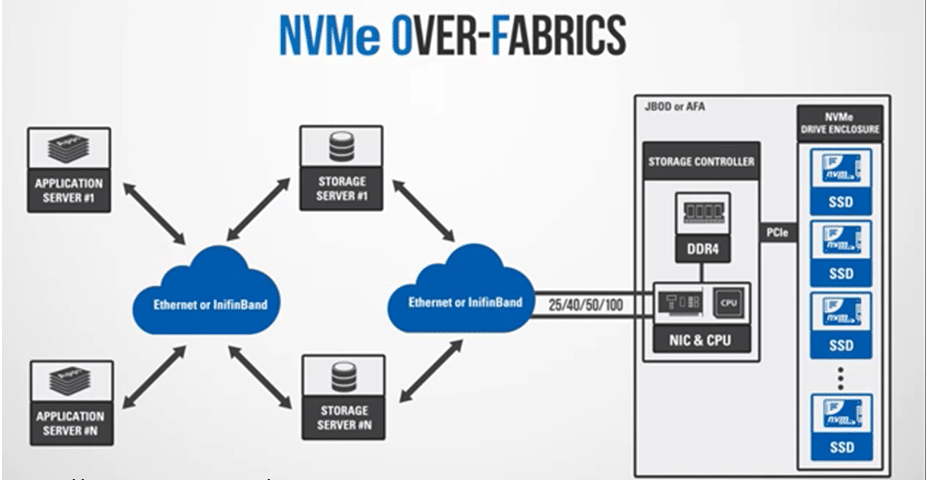

However, the problem for data centers remains in the storage network protocols. Despite the rise of NVMe, its gains are limited to each individual appliance. Flash storage and other enterprise-grade (expensive) appliances, such as AFAs, are not meant to keep their remarkable performance isolated inside the chassis. Instead, they are meant to be used in massively parallel computer clusters, connected to many additional appliances such as other servers and storage. This interconnection of devices, the storage network including switches, routers, protocol bridges, gateway devices, and cables, is what we call the fabric.

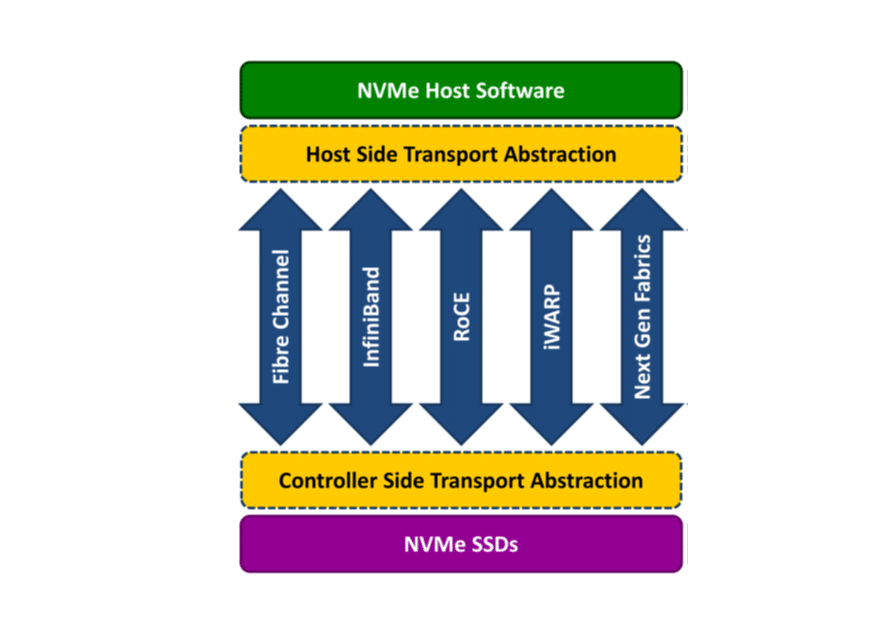

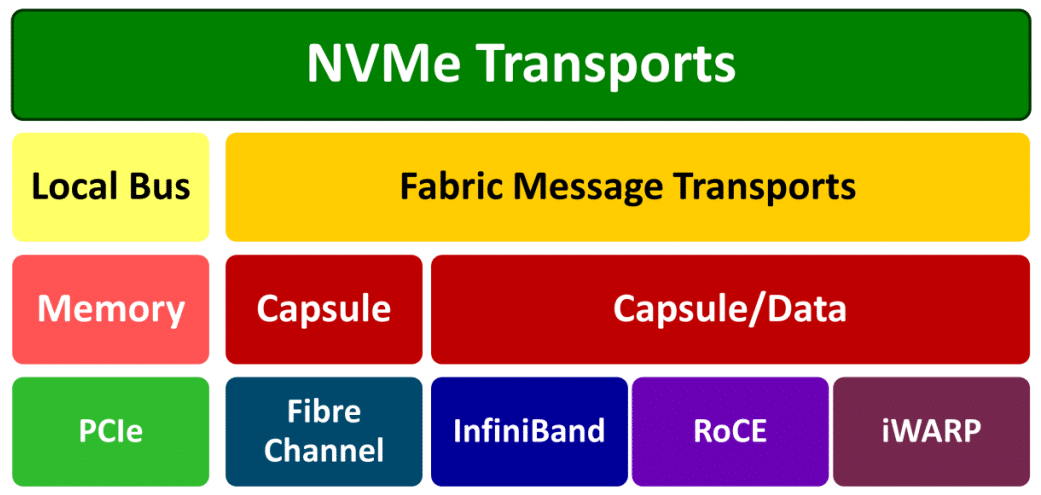

The NVMe-oF industry standard was launched in 2016. The specification extends the performance of NVMe beyond the storage array controllers to the fabric, using Ethernet, Fibre Channel, RoCE, or InfiniBand. Instead of the PCIe bus used by local NVMe, NVMe-oF uses an alternate data transport protocol (over fabrics) as its transport mapping. Fabrics are built on the concept of sending and receiving messages without shared memory between endpoints. NVMe-oF message-based transports encapsulate NVMe commands and responses into messages, which the specification calls capsules.
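A rough picture of what gets encapsulated: an NVMe command is a 64-byte submission queue entry (SQE), and an NVMe-oF command capsule carries that SQE, optionally followed by in-capsule data. The sketch below builds a simplified Read SQE; the data pointer is left zeroed (a real fabric transport would fill it with an SGL descriptor), and the helper names are hypothetical, not from any library.

```python
import struct

SQE_SIZE = 64  # NVMe submission queue entry size in bytes
CQE_SIZE = 16  # NVMe completion queue entry size in bytes (carried in response capsules)


def build_read_sqe(cid: int, nsid: int, slba: int, nlb: int) -> bytes:
    """Build a simplified 64-byte NVMe Read SQE (little-endian fields)."""
    if not 1 <= nlb <= 65_536:
        raise ValueError("nlb must be between 1 and 65536 blocks")
    opcode = 0x02                                   # NVM command set: Read
    flags = 0
    cdw0 = struct.pack("<BBH", opcode, flags, cid)  # opcode, flags, command ID
    nsid_field = struct.pack("<I", nsid)            # namespace ID
    cdw2_3 = bytes(8)                               # reserved here
    mptr = bytes(8)                                 # metadata pointer (unused here)
    dptr = bytes(16)                                # data pointer (SGL over fabrics)
    cdw10_11 = struct.pack("<Q", slba)              # starting LBA
    cdw12 = struct.pack("<I", (nlb - 1) & 0xFFFF)   # number of blocks, 0-based
    cdw13_15 = bytes(12)
    sqe = cdw0 + nsid_field + cdw2_3 + mptr + dptr + cdw10_11 + cdw12 + cdw13_15
    assert len(sqe) == SQE_SIZE
    return sqe


def build_command_capsule(sqe: bytes, in_capsule_data: bytes = b"") -> bytes:
    """An NVMe-oF command capsule: the SQE followed by optional in-capsule data."""
    if len(sqe) != SQE_SIZE:
        raise ValueError("SQE must be 64 bytes")
    return sqe + in_capsule_data


if __name__ == "__main__":
    capsule = build_command_capsule(build_read_sqe(cid=1, nsid=1, slba=0, nlb=8))
    print(len(capsule), hex(capsule[0]))
```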

NVMe Fabrics Transports

NVMe-oF defines three types of fabric transports: NVMe-oF using RDMA, NVMe-oF using Fibre Channel, and NVMe-oF using TCP.

NVMe over RDMA

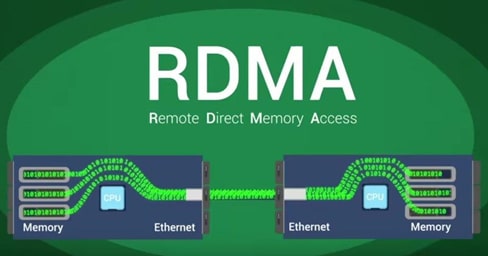

This transport uses Remote Direct Memory Access (RDMA), which lets data be transferred directly between the memory of computers and storage devices across the network. RDMA exchanges information between the main memory of two networked computers without involving the processor, cache, or OS of either computer. Because RDMA bypasses the OS, it is usually the fastest and lowest-overhead mechanism for transmitting data across a network.

The underlying network depends on the RDMA implementation: InfiniBand uses its own fabric, RoCE runs over Ethernet, and iWARP runs over TCP/IP networks. Typical RDMA implementations include the Virtual Interface Architecture, RDMA over Converged Ethernet (RoCE), InfiniBand, Omni-Path, and iWARP. RoCE, InfiniBand, and iWARP are currently the most widely used.

NVMe over Fibre Channel

NVMe over Fibre Channel (FC) is often referred to as FC-NVMe, NVMe over FC, or NVMe/FC. Fibre Channel is a robust protocol for transferring data between storage arrays and servers, and most enterprise SAN systems use it. In FC-NVMe, NVMe commands are encapsulated inside FC frames. It follows standard FC rules and works alongside the standard FC Protocol, supporting access to shared NVMe flash. In traditional FC SANs, SCSI commands are encapsulated in FC frames instead, and a performance penalty is imposed when they must be interpreted and translated into NVMe commands; FC-NVMe avoids that translation.

NVMe over TCP/IP

This transport is one of the latest developments within NVMe-oF. NVMe over TCP (Transmission Control Protocol) uses NVMe-oF with the TCP transport protocol to transfer data across IP (Ethernet) networks, carrying NVMe inside TCP with Ethernet as the physical transport. Compared with RDMA and Fibre Channel, TCP offers a potentially cheaper and more flexible alternative. Additionally, compared with RoCE, which also uses Ethernet, NVMe/TCP behaves more like FC-NVMe, because both use messaging semantics for I/O.
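Because TCP is a byte stream rather than a message service, NVMe/TCP frames each capsule in a PDU whose 8-byte common header (PDU type, flags, header length, data offset, total length) lets the receiver find message boundaries. The sketch below wraps a 64-byte SQE in a command-capsule PDU and splits a received stream back into PDUs. It is a simplified sketch: header and data digests are disabled, no padding is used, and the helper names are hypothetical.

```python
import struct
from typing import List

PDU_TYPE_CAPSULE_CMD = 0x04   # NVMe/TCP command capsule PDU
COMMON_HEADER_LEN = 8         # type, flags, hlen, pdo (1 byte each) + plen (4 bytes)
SQE_LEN = 64


def capsule_cmd_pdu(sqe: bytes, data: bytes = b"") -> bytes:
    """Wrap an SQE (and optional in-capsule data) in a simplified CapsuleCmd PDU."""
    if len(sqe) != SQE_LEN:
        raise ValueError("SQE must be 64 bytes")
    hlen = COMMON_HEADER_LEN + SQE_LEN   # 72-byte PDU header
    pdo = hlen if data else 0            # data follows the header (no digest/padding)
    plen = hlen + len(data)              # total PDU length in bytes
    flags = 0                            # header/data digests disabled
    common = struct.pack("<BBBBI", PDU_TYPE_CAPSULE_CMD, flags, hlen, pdo, plen)
    return common + sqe + data


def split_pdus(stream: bytes) -> List[bytes]:
    """Split a TCP byte stream into complete PDUs using each PDU's length field."""
    pdus = []
    pos = 0
    while pos + COMMON_HEADER_LEN <= len(stream):
        (plen,) = struct.unpack_from("<I", stream, pos + 4)
        if plen < COMMON_HEADER_LEN:
            raise ValueError("corrupt PDU length")
        if pos + plen > len(stream):
            break  # incomplete PDU: wait for more bytes from the socket
        pdus.append(stream[pos:pos + plen])
        pos += plen
    return pdus


if __name__ == "__main__":
    a = capsule_cmd_pdu(bytes(SQE_LEN))
    b = capsule_cmd_pdu(bytes(SQE_LEN), b"abcd")
    print([len(p) for p in split_pdus(a + b)])
```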

Using NVMe-oF with RDMA, Fibre Channel, or TCP creates a complete end-to-end NVMe storage solution. These solutions deliver notably high performance while maintaining the very low latency that NVMe provides.

NVMe over RDMA over Converged Ethernet (RoCE)

Among RDMA protocols, RoCE stands out. RoCE brings RDMA to Ethernet networks and is designed to run on Converged Ethernet (CE), an enhanced version of Ethernet also known as Data Center Bridging (DCB) or Data Center Ethernet. RoCE encapsulates the InfiniBand transport packet over Ethernet. Converged Ethernet provides link-level flow control mechanisms, such as Priority Flow Control (PFC), to avoid packet loss even when the network is saturated. The RoCE protocol generally achieves lower latency than the earlier iWARP protocol.

There are two RoCE versions, RoCE v1 and RoCE v2. RoCE v1 is an Ethernet layer 2 (link) protocol that allows communication between two hosts in the same Ethernet broadcast domain, so it cannot route between subnets. The newer option, RoCE v2, runs on top of either UDP/IPv4 or UDP/IPv6. Because RoCE v2 operates at layer 3 (network), its packets can be routed. Software support for RoCE v2 is still emerging; Mellanox OFED 2.3 or later and Linux kernel 4.5 or later include RoCE v2 support.
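The routability difference shows up directly in the packet headers: RoCE v1 frames carry their own EtherType (0x8915) with no IP header, while RoCE v2 packets are ordinary UDP datagrams sent to destination port 4791, which routers can forward. The sketch below classifies a raw frame on that basis. It assumes untagged Ethernet frames (no VLAN tag) and IPv6 packets without extension headers, and the function name is hypothetical.

```python
import struct
from typing import Optional

ETHERTYPE_ROCE_V1 = 0x8915
ETHERTYPE_IPV4 = 0x0800
ETHERTYPE_IPV6 = 0x86DD
IP_PROTO_UDP = 17
ROCE_V2_UDP_PORT = 4791
ETH_HEADER_LEN = 14


def classify_roce(frame: bytes) -> Optional[str]:
    """Return 'RoCEv1', 'RoCEv2', or None for an untagged Ethernet frame."""
    if len(frame) < ETH_HEADER_LEN:
        return None
    (ethertype,) = struct.unpack_from("!H", frame, 12)
    if ethertype == ETHERTYPE_ROCE_V1:
        return "RoCEv1"                       # layer 2 only: no IP header, not routable
    if ethertype == ETHERTYPE_IPV4:
        if len(frame) < ETH_HEADER_LEN + 20:
            return None
        ihl = (frame[ETH_HEADER_LEN] & 0x0F) * 4  # IPv4 header length in bytes
        proto = frame[ETH_HEADER_LEN + 9]
        udp_start = ETH_HEADER_LEN + ihl
    elif ethertype == ETHERTYPE_IPV6:
        if len(frame) < ETH_HEADER_LEN + 40:
            return None
        proto = frame[ETH_HEADER_LEN + 6]       # next header field
        udp_start = ETH_HEADER_LEN + 40         # assumes no extension headers
    else:
        return None
    if proto != IP_PROTO_UDP or len(frame) < udp_start + 8:
        return None
    (dst_port,) = struct.unpack_from("!H", frame, udp_start + 2)
    return "RoCEv2" if dst_port == ROCE_V2_UDP_PORT else None
```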

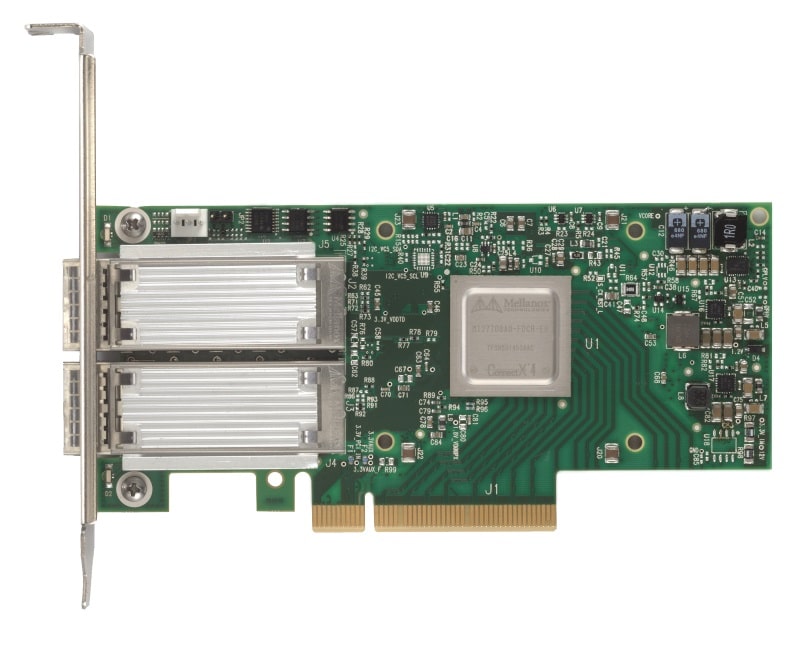

NVMe over RoCE is a new type of SAN. It provides the same enterprise data services, performance, and flexibility as traditional SAN hardware and software. Although the RoCE protocol benefits from the characteristics of a Converged Ethernet network, it can also be used on a traditional, non-converged Ethernet network. To configure an NVMe over RoCE storage fabric, the NIC, switch, and AFA must support Converged Ethernet, and the NIC (called an R-NIC) and the all-flash array must also support RoCE. Servers with R-NICs and AFAs with NVMe over RoCE interfaces then plug and play with installed CE switches.

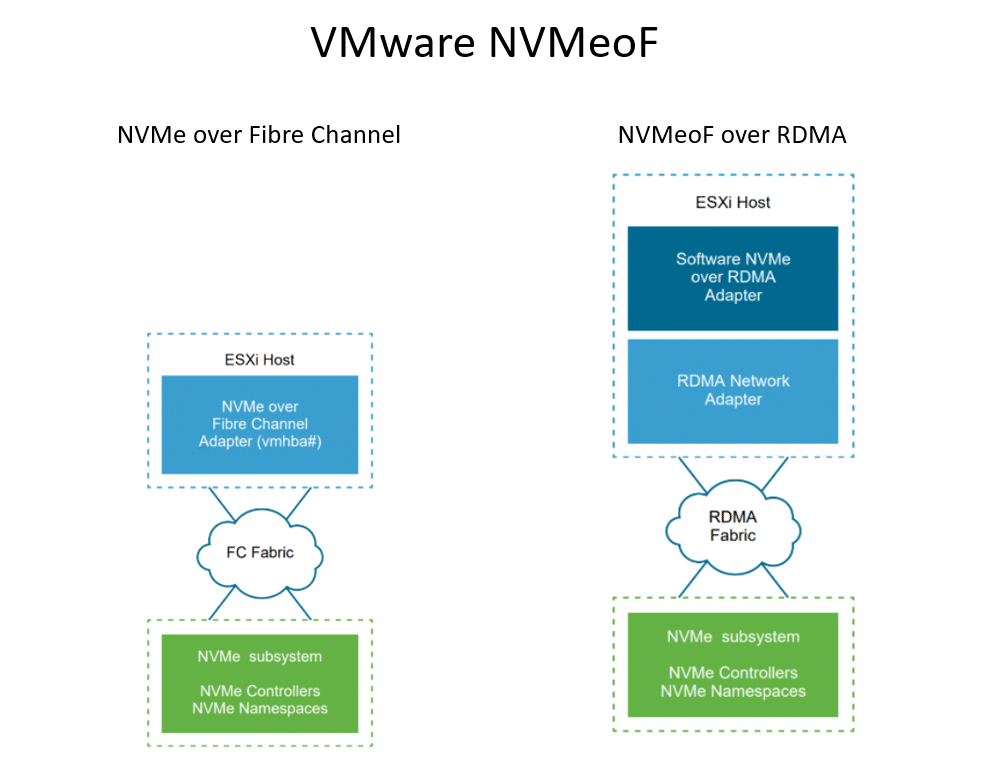

NVMe over RoCE support for VMware

VMware added support for shared NVMe storage using NVMe-oF. For external connectivity, vSphere 7.0 supports NVMe over Fibre Channel and NVMe over RDMA, and ESXi hosts can use RDMA over Converged Ethernet v2 (RoCE v2). To enable and access NVMe storage over RDMA, the ESXi host uses an R-NIC adapter and a software NVMe over RDMA storage adapter. Both adapters must be configured before the host can use them to discover NVMe storage.

With NVMe-oF, targets are presented to a host as namespaces (the NVMe equivalent of SCSI LUNs) in active/active or asymmetric access modes (ANA, the NVMe counterpart of SCSI ALUA). This lets ESXi hosts discover and use the presented namespaces. Internally, ESXi emulates NVMe-oF targets as SCSI targets and presents them as active/active SCSI targets or implicit SCSI ALUA targets.

NVMe over RDMA requirements:

- NVMe array supporting RDMA (RoCE v2) transport

- Compatible ESXi host

- Ethernet switches supporting a lossless network

- Network adapter supporting RoCE v2

- Software NVMe over RDMA adapter

- NVMe controller

- A suitably configured network: RoCE can run over lossy fabrics that support ZTR (Zero Touch RoCE); otherwise, it requires a network configured for lossless traffic at layer 2 alone or at both layer 2 and layer 3 (using PFC)

When setting up NVMe-oF on an ESXi host, there are a few practices that should be followed.

- Do not mix transport types to access the same namespace.

- Ensure all active paths are presented to the host.

- NMP (Native Multipathing Plugin) is not used or supported; instead, HPP (High-Performance Plugin) is used for NVMe targets.

- Use dedicated links, VMkernel adapters, and RDMA adapters for your NVMe targets.

- Use dedicated layer 3 VLAN or layer 2 connectivity.

- Limits: 32 namespaces; 128 paths (maximum of 4 paths per namespace on a host)
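The limits and the "do not mix transport types" rule above lend themselves to a quick pre-deployment sanity check. The sketch below is a hypothetical planning helper, not a VMware tool or API: it takes a plan mapping each namespace to its paths and reports violations of the 32-namespace, 128-path, and 4-paths-per-namespace limits and of mixed transports. The adapter names such as "vmhba64" are illustrative only.

```python
from dataclasses import dataclass
from typing import Dict, List

MAX_NAMESPACES = 32
MAX_PATHS = 128
MAX_PATHS_PER_NAMESPACE = 4


@dataclass(frozen=True)
class Path:
    transport: str  # e.g. "RDMA" or "FC"
    adapter: str    # hypothetical adapter name, e.g. "vmhba64"


def check_host_plan(plan: Dict[str, List[Path]]) -> List[str]:
    """Return human-readable problems with a host's planned namespaces and paths."""
    problems = []
    if len(plan) > MAX_NAMESPACES:
        problems.append(f"{len(plan)} namespaces exceeds limit of {MAX_NAMESPACES}")
    total_paths = sum(len(paths) for paths in plan.values())
    if total_paths > MAX_PATHS:
        problems.append(f"{total_paths} paths exceeds limit of {MAX_PATHS}")
    for ns, paths in plan.items():
        if len(paths) > MAX_PATHS_PER_NAMESPACE:
            problems.append(f"{ns}: {len(paths)} paths exceeds {MAX_PATHS_PER_NAMESPACE} per namespace")
        transports = {p.transport for p in paths}
        if len(transports) > 1:
            problems.append(f"{ns}: mixes transports {sorted(transports)}")
    return problems


if __name__ == "__main__":
    plan = {"ns1": [Path("RDMA", "vmhba64"), Path("FC", "vmhba2")]}
    for problem in check_host_plan(plan):
        print(problem)
```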

Conclusion

With more and more people depending on data in cloud services, there is a growing demand for faster back-end storage in data centers. NVMe is a newer way to interface with flash devices, and together with NVMe-oF, it has been advancing external connectivity options. NVMe-oF and its different transports are starting to be recognized as the future of data storage. Storage devices and appliances are considered the heart of data centers, and every microsecond counts within the interconnected fabric. NVMe reduces the number of memory-mapped I/O (MMIO) register accesses needed per command and offers a streamlined command set that operating system drivers can handle efficiently, resulting in higher performance and lower latency.

NVMe has become increasingly popular because of its parallel processing at low latency and high throughput. While NVMe is also used in personal computers to improve video editing, gaming, and other workloads, its real benefit is seen in the enterprise through NVMe-oF. Industries such as IT, artificial intelligence, and machine learning continue to advance, and the demand for higher performance keeps growing. It is now common to see software and network vendors, such as VMware and Mellanox, delivering NVMe-oF related products and solutions to the enterprise market. With modern, massively parallel computer clusters, the faster we can process and access our data, the more valuable it is to our business.
