Lenovo has long been a leader in delivering solutions for the High-Performance Computing (HPC) market. Lenovo’s mainstream offerings are well known by end-users and data center administrators alike, but its HPC prowess is perhaps its best-kept secret. That is, as much of a secret as it can be for the largest provider of supercomputers globally (32% of them, according to data maintained by TOP500).

These massive HPC wins are fundamentally driven by Lenovo’s intimate understanding of the HPC space and the willingness to take chances to meet customer needs. How exactly does that risk-taking translate? Well, about a decade ago, Lenovo delivered a liquid-cooled supercomputer to the Leibniz Supercomputing Centre in Munich, Germany. This event helped change the economics of supercomputing, especially in places like Europe, where rack space, cooling, and power come at a premium.

Lenovo ThinkSystem SR670 V2

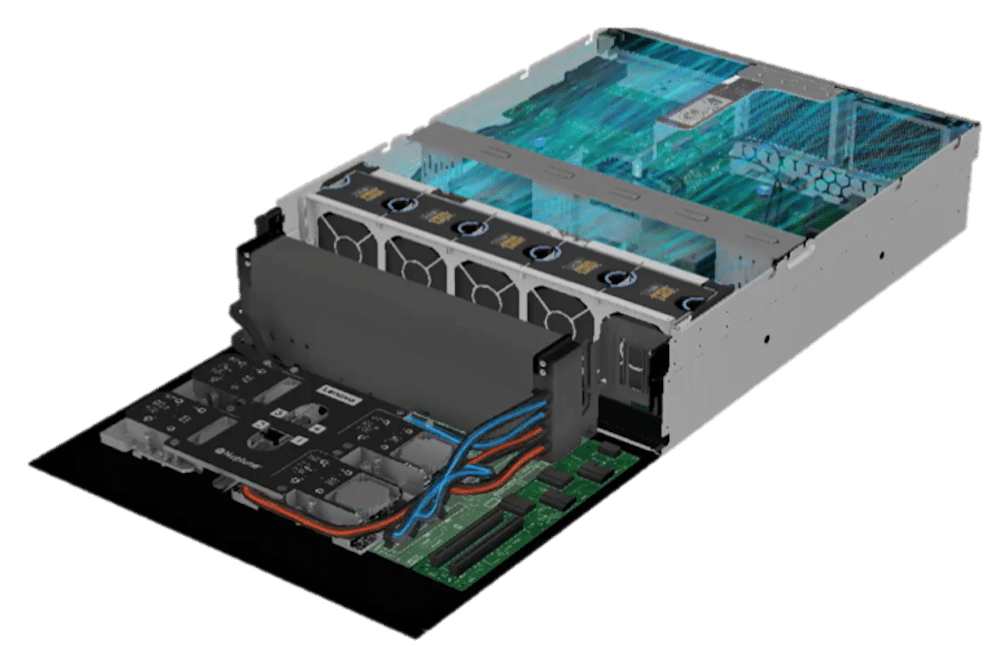

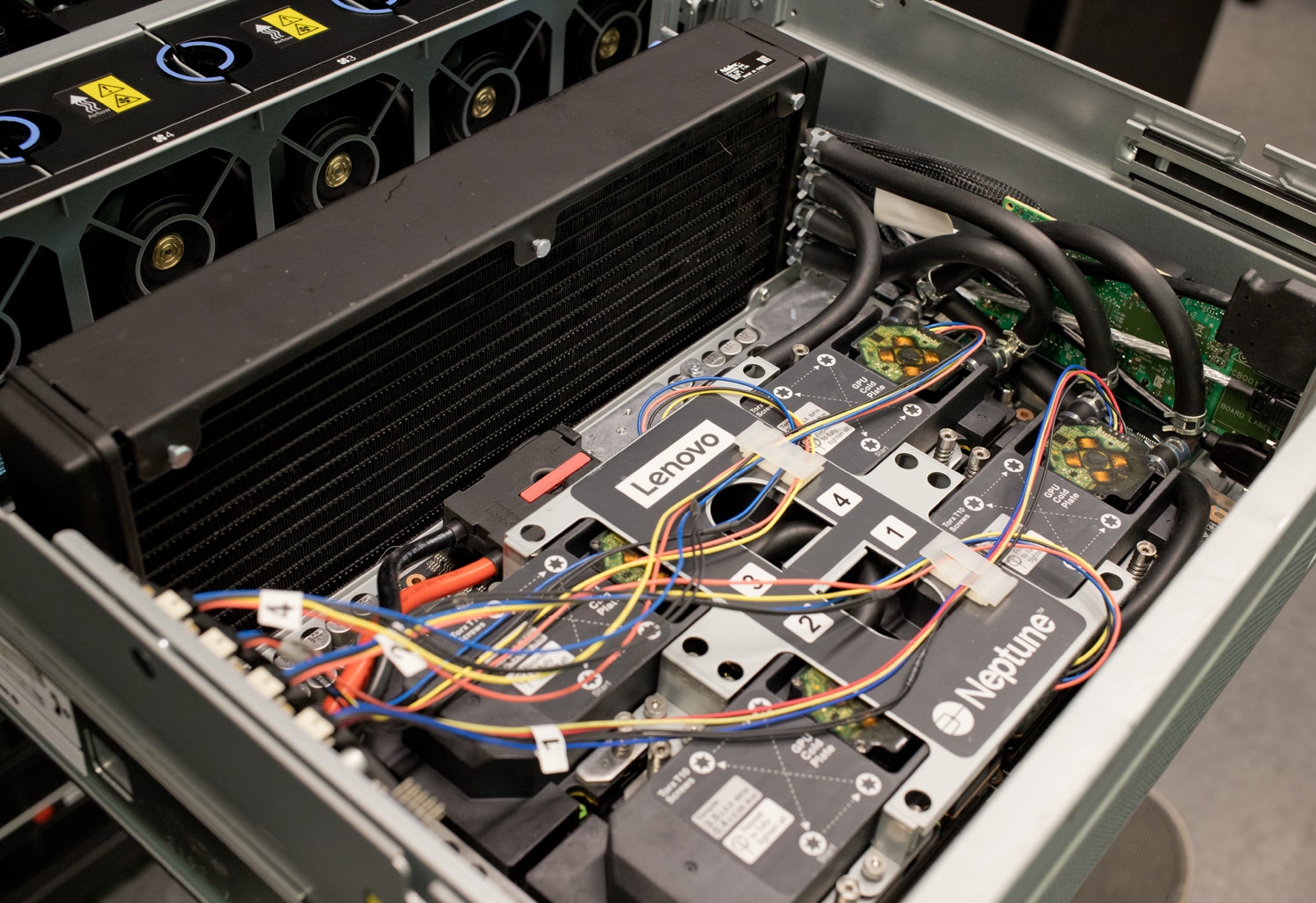

While much has changed in supercomputing since then, Lenovo continues to innovate. In the summer of 2018, Lenovo officially launched Neptune, showing off its vision for more efficient data centers thanks to liquid cooling. By bringing the ThinkSystem SD650 to market, Lenovo demonstrated to HPC customers how easy it could be to get liquid cooling to components in a 1U tray holding two Neptune Direct Water Cooling (DWC) nodes. Up to six trays are supported in the 6U NeXtScale n1200 enclosure. Two years later, Lenovo launched the SD650-N V2, which extends liquid cooling to Ice Lake CPUs, socketed GPUs, DRAM, storage, and I/O modules. The Liquid to Air (L2A) heat exchanger in the ThinkSystem SR670 V2 is another example of Lenovo’s forward-looking engineering.

Lenovo ThinkSystem SD650 V2 with Neptune™ liquid cooling technology

Who Needs HPC Systems Anyway?

Given the sheer improvements in processing power, storage, and memory in mainstream servers, who needs all this power anyway?

Enterprises of all sizes are looking for more efficient ways to collect and analyze data to extract intelligence from several different resources in the network. This is especially true of enterprises focused on compute-intensive work such as molecular biology, finance, global climate change tracking, rapid gene analysis, and seismic imaging. HPC is also gaining attention from a wider field of organizations, such as companies looking for an edge in the market and willing to invest in technology that will impact productivity and growth. HPC and AI, the foundation for the applications mentioned above, are becoming more closely aligned, providing new paths for organizations to leverage that data.

The need for immediate access to aggregated data continues to drive the demand for these HPC systems. Keeping one step ahead of the competition is imperative to an organization’s success and longevity. HPC is critical in solving complex problems for business, science, and engineering. It has become the underlying foundation for innovations in science, research, retail, AV, and more, and it drives advancements in technologies that affect society.

The explosive growth of collected data from technologies such as AI and ML, IoT, research, and live streaming services requires real-time processing, which is more than a typical server can handle.

Another driving force behind the growth in demand for HPC is that the systems can be deployed at the edge, in the cloud, or on-premises. The key is processing the data where that data is created and not having to transfer it to another remote location for processing.

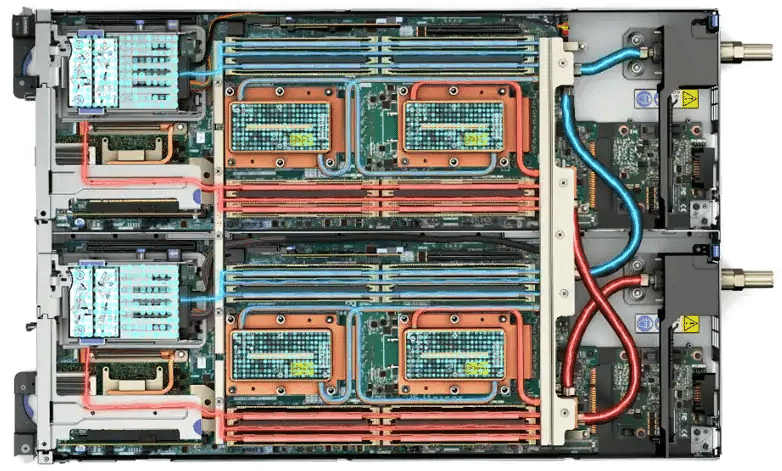

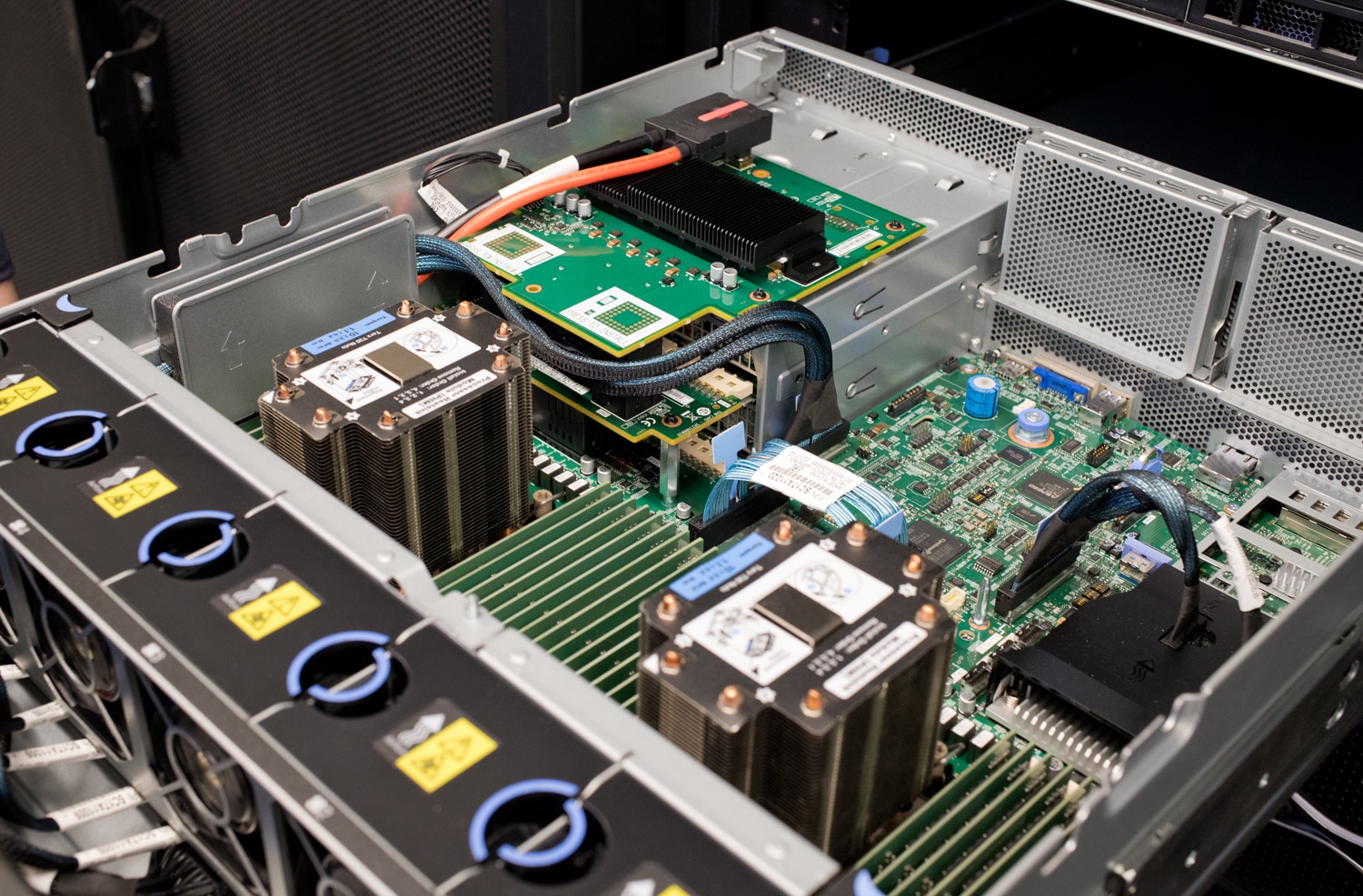

Lenovo ThinkSystem SR670 V2 with L2A Heat Exchanger

A critical consideration when selecting an HPC platform is the ability to scale out. When it comes to massive computational resources, more is better, and the ability to build large HPC clusters can be the difference between success and failure. High-speed, low-latency interconnects and newer storage technology like NVMe shorten the time to a computational result. Clusters can be built in a data center, cloud, or hybrid model, delivering a flexible and scalable deployment. The Lenovo ThinkSystem SR670 V2 is such a system.

A GPU-Rich Server that Meets the HPC Requirements

The Lenovo ThinkSystem SR670 V2 is a GPU-rich 3U rack server that supports eight double-wide GPUs, including the NVIDIA A100 and A40 Tensor Core GPUs, and a model with NVIDIA HGX A100 4-GPU offered with NVLink and Lenovo Neptune hybrid liquid-to-air cooling. The server is based on the new third-generation Intel Xeon Scalable processor family (formerly “Ice Lake”) and the latest Intel Optane Persistent Memory 200 Series.

The SR670 V2 delivers optimal performance for Artificial Intelligence (AI), High-Performance Computing (HPC), and graphical workloads across various industries. Retail, manufacturing, financial services, and healthcare industries can leverage the processing power of the GPUs in the SR670 V2 to extract more significant insights and drive innovation utilizing machine learning (ML) and deep learning (DL).

Traditional air-cooling methods are reaching critical limits. Increases in component power, especially for CPUs and GPUs, have resulted in higher energy and infrastructure costs, noisy systems, and high carbon footprints. The SR670 V2 model with Lenovo Neptune liquid-to-air (L2A) hybrid cooling technology combats these challenges and dissipates heat quickly. The heat of the NVIDIA HGX A100 GPUs is removed through a unique closed-loop, liquid-to-air heat exchanger that delivers the benefits of liquid cooling, such as higher density, lower power consumption, quiet operation, and higher performance, without adding plumbing.

Industries Are Leveraging GPU Technology

The SR670 V2 is built on two 3rd-generation Intel Xeon Scalable processors designed to support the latest GPUs in the NVIDIA Ampere data center portfolio. The SR670 V2 delivers workload-optimized performance, whether utilizing visualization, rendering or computationally intensive HPC and AI.

Organizations across retail, manufacturing, financial services, and healthcare use GPU-accelerated computing in a number of ways, including:

- Remote visualization for work-from-home teams

- Ray-traced rendering for photo-realistic graphics

- Powerful video encoding and decoding

- In-silico trials and immunology in Life Sciences

- Natural language processing (NLP) for call centers

- Automatic optical inspection (AOI) for quality control

- Computer vision for retail customer experience

As more workloads utilize the capabilities of accelerators, the demand for GPUs increases. The ThinkSystem SR670 V2 delivers an optimized enterprise-grade solution for deploying accelerated HPC and AI workloads in production, maximizing system performance.

Flexible Configuration Options

The modular design delivers ultimate flexibility in the SR670 V2. Configuration options include:

- Up to eight double-width GPUs with NVLink Bridge

- NVIDIA HGX™ A100 4-GPU with NVLink and Lenovo Neptune™ hybrid liquid cooling

- Choice of front or rear high-speed networking

- Choice of local high speed 2.5″, 3.5″, and NVMe storage

The ThinkSystem SR670 V2’s performance can be optimized for your workload, whether that is visualization, rendering, or computationally intensive HPC and AI.

The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration—at every scale—to power the world’s highest-performing elastic data centers for AI, data analytics, and HPC applications. The A100 can efficiently scale up or be partitioned into seven isolated GPU instances. Multi-Instance GPU (MIG) provides a unified platform that enables elastic data centers to dynamically adjust to shifting workload demands. A rack of 13 ThinkSystem SR670 V2s can generate up to two PFLOPS of compute power.
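The rack-level figure above can be sanity-checked with simple arithmetic. The sketch below is only a back-of-the-envelope estimate: it assumes the 8x double-wide GPU configuration and a per-GPU peak of 19.5 TFLOPS (the A100 figure NVIDIA publishes for FP64 Tensor Core and FP32 throughput), since the article does not say which precision the two-PFLOPS claim refers to. The function names are illustrative.

```python
# Back-of-the-envelope check of the rack-level numbers quoted in the article.
SERVERS_PER_RACK = 13        # from the article
GPUS_PER_SERVER = 8          # 8x double-wide GPU configuration
MIG_INSTANCES_PER_GPU = 7    # an A100 can be split into seven MIG instances

# Assumption: 19.5 TFLOPS peak per A100 (NVIDIA's published FP64 Tensor Core
# and FP32 figure). The article does not state the precision it has in mind.
A100_PEAK_TFLOPS = 19.5


def rack_peak_pflops(servers: int, gpus_per_server: int, tflops_per_gpu: float) -> float:
    """Theoretical peak GPU throughput of a rack, in PFLOPS."""
    return servers * gpus_per_server * tflops_per_gpu / 1000.0


def rack_mig_instances(servers: int, gpus_per_server: int, instances_per_gpu: int) -> int:
    """Maximum number of isolated MIG instances a fully partitioned rack exposes."""
    return servers * gpus_per_server * instances_per_gpu


rack_pflops = rack_peak_pflops(SERVERS_PER_RACK, GPUS_PER_SERVER, A100_PEAK_TFLOPS)
rack_mig = rack_mig_instances(SERVERS_PER_RACK, GPUS_PER_SERVER, MIG_INSTANCES_PER_GPU)

print(f"Peak GPU throughput per rack: {rack_pflops:.3f} PFLOPS")
print(f"Maximum MIG instances per rack: {rack_mig}")
```

Under these assumptions the 104 GPUs in a rack land just above two PFLOPS of theoretical peak, which is consistent with the claim. Sustained application performance will be lower.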

Built on the latest Intel® Xeon® Scalable family CPUs and designed to support high-end GPUs, including the NVIDIA A100 and A40, the ThinkSystem SR670 V2 delivers optimized accelerated performance for AI and HPC workloads.

Solutions That Scale

Whether just starting with AI or moving into production, solutions must scale with the organization’s needs. The ThinkSystem SR670 V2 can be used in a cluster environment using high-speed fabric to scale out as your workload demands increase.

When enabled with Lenovo intelligent Computing Orchestration (LiCO), the cluster adds support for multiple users and scales within a single cluster environment. LiCO is a powerful platform that manages cluster resources for HPC and AI applications.

LiCO provides both AI and HPC workflows and supports multiple AI frameworks, including TensorFlow, Caffe, Neon, and MXNet, leveraging a single cluster for diverse workload requirements.

The progression of innovation throughout the HPC portfolio has marched along just as quickly. For organizations not quite ready to take the plunge to full-on liquid cooling, the ThinkSystem SR670 V2 provides impressive flexibility.

Lenovo ThinkSystem SR670 V2 Configurability & Specifications

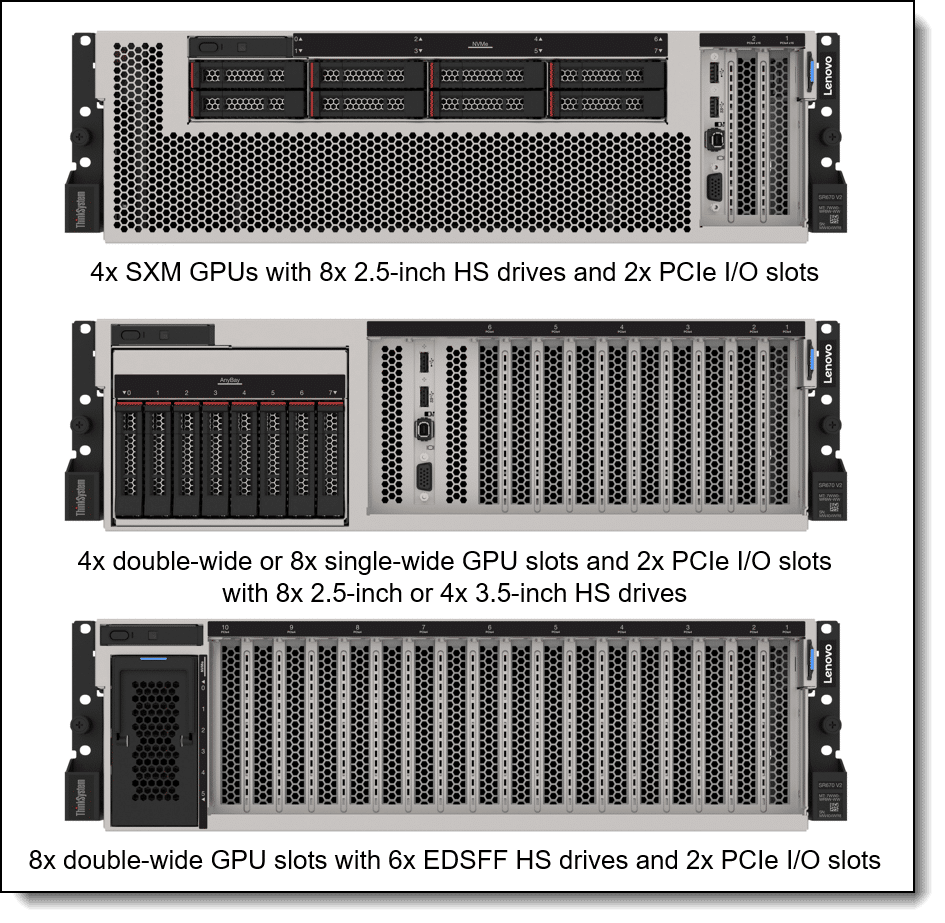

Configurability is the core of the ThinkSystem SR670 V2’s appeal. Its flexibility focuses on GPU-dense computing, and most of its physical volume is dedicated to modular GPUs, whether single or double-wide or NVIDIA SXM. The three base configurations are as follows.

| | Config 1 | Config 2 | Config 3 |
| --- | --- | --- | --- |
| # of GPUs | 4x SXM | 4x double-wide or 8x single-wide | 8x double-wide |
| Drive Support | 8x 2.5-inch | 8x 2.5-inch or 4x 3.5-inch | 6x E1.S |

The configurations illustrated:

The table below shows the full SR670 V2 specifications.

| Components | Specification |
| --- | --- |
| Machine types | 7Z22 (1-year warranty); 7Z23 (3-year warranty) |
| Form factor | 3U rack |
| Processor | Two third-generation Intel Xeon Scalable processors (formerly codenamed “Ice Lake”). Supports processors up to 40 cores, core speeds of up to 3.6 GHz, and TDP ratings of up to 270W. |
| Chipset | Intel C621A “Lewisburg” chipset, part of the platform codenamed “Whitley.” |
| Memory | 32 DIMM slots with two processors (16 DIMM slots per processor). Each processor has 8 memory channels, with 2 DIMMs per channel (DPC). Lenovo TruDDR4 RDIMMs and 3DS RDIMMs are supported. DIMM slots are shared between standard system memory and persistent memory. DIMMs operate at up to 3200 MHz at 2 DPC. |
| Persistent memory | Supports up to 16x Intel Optane Persistent Memory 200 Series modules (8 per processor) installed in the DIMM slots. Persistent memory (Pmem) is installed in combination with system memory DIMMs. |
| Memory maximum | With RDIMMs: Up to 4TB by using 32x 128GB 3DS RDIMMs. With Persistent Memory: Up to 4TB by using 16x 128GB 3DS RDIMMs and 16x 128GB Pmem modules (1.5TB per processor). |
| Memory protection | ECC, SDDC (for x4-based memory DIMMs), ADDDC (for x4-based memory DIMMs, requires Platinum or Gold processors), and memory mirroring. |
| Disk drive bays | Either 2.5-inch, 3.5-inch, or EDSFF drives, depending on the configuration. The server also supports an internal M.2 adapter supporting up to two M.2 drives. |
| Maximum internal storage | |
| Storage controller | |
| Optical drive bays | No internal optical drive. |
| Tape drive bays | No internal backup drive. |
| Network interfaces | OCP 3.0 SFF slot with flexible PCIe 4.0 x8 or x16 host interface, available depending on the server configuration. The OCP slot supports a variety of 2-port and 4-port adapters with 1GbE, 10GbE, and 25GbE network connectivity. One port can optionally be shared with the XClarity Controller (XCC) management processor for Wake-on-LAN and NC-SI support. |
| PCI Expansion slots | Up to 4x PCIe 4.0 slots, depending on the GPU and drive bay configuration selected. |
| GPU support | Supports up to 8x double-wide PCIe GPUs or 4x SXM GPUs, depending on the configuration. Note: Configurations with single-wide GPUs such as the NVIDIA A10 may be possible via a Special Bid request. |
| Ports | |
| Cooling | 5x dual-rotor simple-swap 80 mm fans, configuration dependent. Fans are N+1 rotor redundant, tolerating a single-rotor failure. One fan is integrated into each power supply. |
| Power supply | Up to four hot-swap redundant AC power supplies with 80 PLUS Platinum certification. 1800 W or 2400 W AC options, supporting 220 V AC. In China only, power supplies also support 240 V DC. |
| Video | G200 graphics with 16 MB memory with 2D hardware accelerator, integrated into the XClarity Controller. Maximum resolution is 1920×1200 32bpp at 60Hz. |
| Hot-swap parts | Drives and power supplies. |
| Systems management | Operator panel with status LEDs. On SXM and 4-DW GPU models, External Diagnostics Handset with LCD display (not available in 8-DW GPU models). XClarity Controller (XCC) embedded management, XClarity Administrator centralized infrastructure delivery, XClarity Integrator plugins, and XClarity Energy Manager centralized server power management. Optional XClarity Controller Advanced and Enterprise to enable remote control functions. |
| Security features | Chassis intrusion switch, power-on password, administrator’s password, Trusted Platform Module (TPM), supporting TPM 2.0. In China only, optional Nationz TPM 2.0. |
| Operating systems supported | Microsoft Windows Server, Red Hat Enterprise Linux, SUSE Linux Enterprise Server, VMware ESXi. |
| Limited warranty | Three-year or one-year (model dependent) customer-replaceable unit and onsite limited warranty with 9×5 next business day (NBD). |
| Service and support | Optional service upgrades are available through Lenovo Services: 4-hour or 2-hour response time, 6-hour fix time, 1-year or 2-year warranty extension, software support for Lenovo hardware, and some third-party applications. |
| Dimensions | Width: 448 mm (17.6 in.), height: 131 mm (5.2 in.), depth: 892 mm (35.1 in.). |
| Weight | Approximate weight depends on the configuration selected. |

GPUs Offer Significant Configuration Performance Options

GPU support is the most significant variable between configurations. Single-wide GPUs use PCIe x8 lanes and scale to the NVIDIA A10, while double-wide GPUs use PCIe x16 and scale to the NVIDIA A100. The flagship SXM configuration is built on the NVIDIA HGX A100, which connects its four onboard GPUs over NVIDIA NVLink for direct GPU-to-GPU communication. Double-wide GPU configurations also support NVLink, and the SR670 V2 supports the double-wide AMD Instinct MI210 as well.
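To put the x8 versus x16 difference in perspective, here is a minimal sketch of the theoretical per-direction link bandwidth. It assumes the GPUs negotiate a PCIe 4.0 link (the server's slots are listed as PCIe 4.0), uses the standard PCIe 4.0 parameters of 16 GT/s per lane and 128b/130b encoding, and ignores protocol overhead, so real-world throughput will be somewhat lower. The function name is illustrative.

```python
# Theoretical per-direction bandwidth of a PCIe 4.0 link, by lane count.
GT_PER_SEC_PER_LANE = 16e9       # PCIe 4.0 signalling rate: 16 GT/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def pcie4_bandwidth_gbs(lanes: int) -> float:
    """Return raw link bandwidth in GB/s (one direction), ignoring protocol overhead."""
    bits_per_sec = lanes * GT_PER_SEC_PER_LANE * ENCODING_EFFICIENCY
    return bits_per_sec / 8 / 1e9


x8_gbs = pcie4_bandwidth_gbs(8)    # single-wide GPU link width
x16_gbs = pcie4_bandwidth_gbs(16)  # double-wide GPU link width

print(f"PCIe 4.0 x8:  {x8_gbs:.2f} GB/s per direction")
print(f"PCIe 4.0 x16: {x16_gbs:.2f} GB/s per direction")
```

A double-wide card on x16 therefore has roughly twice the host bandwidth of a single-wide card on x8, which matters most for workloads that stream data between system memory and the GPU.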

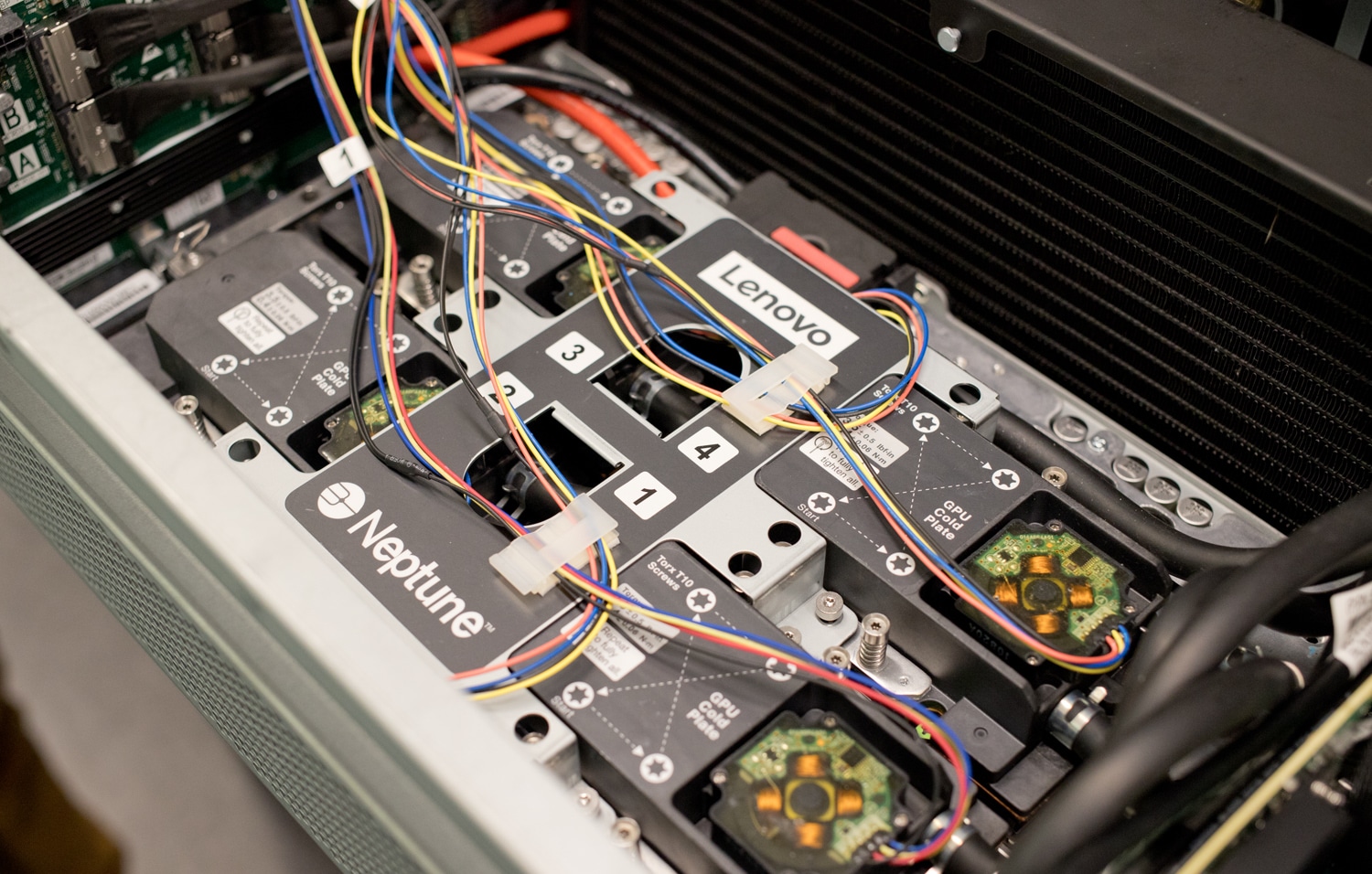

The HGX A100 platform is the “Redstone” variant without NVSwitch, with four SXM A100 GPUs on a single board. Both the 40GB (400-watt) and 80GB (500-watt) variants are available. Notably, the SR670 V2 employs Lenovo’s Neptune liquid-to-air (L2A) hybrid cooling with this platform for quieter, more efficient cooling and lower power consumption. A cold plate is mounted on each GPU, through which four redundant low-pressure pumps circulate liquid. A large single radiator dissipates the heat. Other GPU configurations are air-cooled only.

The individual coolant pumps above each GPU are visible on the cold plate as part of the Neptune-branded section. These all flow back through the single radiator to keep temperatures in check even under peak loads.

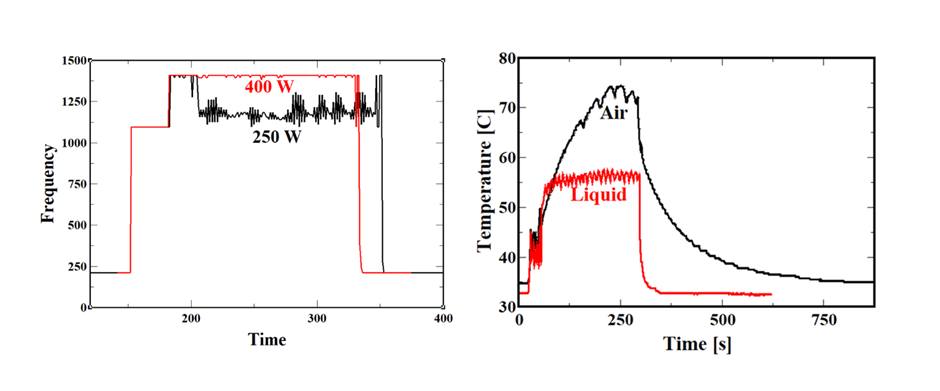

While liquid cooling has obvious benefits towards keeping temperatures lower, many don’t realize how much of a performance impact that can make with GPU clock speed. When GPUs are under high load with air cooling, they can reach peak thermal design points where they then have to throttle performance and lower clock speed to keep temperatures in check. Liquid cooling doesn’t have this issue, allowing the GPUs to run harder and faster while maintaining a consistent thermal profile over the course of the workload.

The chart below shows the difference between an air-cooled and liquid-cooled GPU under full load. When the air-cooled model starts to hit peak temperatures, the GPU frequency lowers, while the liquid-cooled GPU stays at peak clock speed over the duration.

For slots, the base SR670 V2 configurations have 2x front PCIe 4.0 x16 I/O slots, though the rest of the front is configurable for the drive options mentioned above. All of the drive bays support hot-swapping.

- SXM model – choice of:

  - 4x 2.5-inch hot-swap NVMe drive bays

  - 8x 2.5-inch hot-swap NVMe drive bays

- 4-DW GPU model – choice of:

  - 8x 2.5-inch hot-swap AnyBay drive bays supporting SAS, SATA, or NVMe drives

  - 4x 3.5-inch hot-swap drive bays supporting SATA HDDs or SSDs (support for NVMe only via Special Bid)

- 8-DW GPU model:

  - 6x EDSFF E1.S hot-swap NVMe drive bays

The SR670 V2 also supports one or two M.2-format SATA or NVMe boot or storage drives. RAID support is offered via an onboard hardware controller.

Meanwhile, the backplane is fixed, with four PCIe 4.0 x16 slots and one OCP 3.0. The SR670 V2’s four redundant hot-swap power supplies are also visible from the rear. They come in 1800W or 2400W options and carry 80 Plus Platinum ratings.

A different power supply link is included on SR670 V2 models equipped with the SXM configuration, which supplies the front GPU section with a dedicated power link. These models are in stark contrast to the slot-load GPU models, which don’t include this substantial power link from the rear of the chassis.

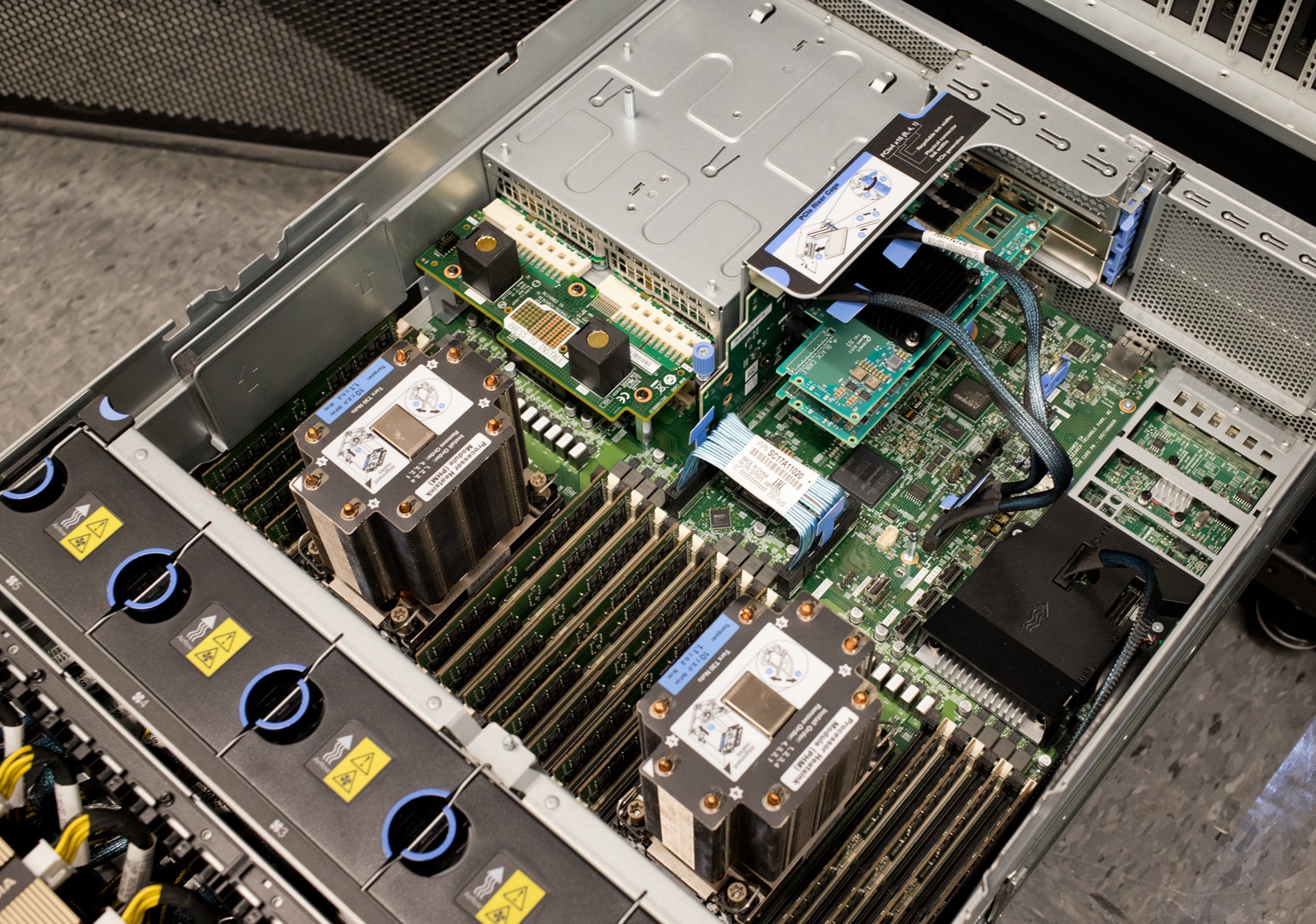

The rest of the SR670 V2’s hardware is equally impressive and continues its flexibility theme. It supports up to two 40-core/80-thread Intel “Ice Lake” third-generation Xeon Scalable processors, with up to a 270-watt TDP. Each CPU has 16 DDR4-3200 RDIMM slots; with 128GB RDIMMs, the memory ceiling is 4TB. Depending on the CPU, the SR670 V2 also supports up to 16 Intel Optane Persistent Memory 200 Series modules, installed alongside regular system memory. With all of the hardware the ThinkSystem SR670 V2 has to offer, Lenovo put its best foot forward on the cooling layout to squeeze the most performance out of the system. Not all systems allow every component to operate at 100% utilization without throttling, whereas the SR670 V2 is designed to enable just that.
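The memory figures can be checked the same way. The sketch below reproduces the 4TB ceiling from the slot count and DIMM size, then estimates theoretical peak memory bandwidth from the eight DDR4-3200 channels per processor listed in the specification table. The 8-byte transfer width is an assumption based on the standard 64-bit DDR4 data bus per channel. Measured bandwidth will be lower than this theoretical peak.

```python
# Memory capacity and theoretical peak bandwidth for a two-socket SR670 V2.
CPUS = 2
DIMM_SLOTS_PER_CPU = 16
DIMM_SIZE_GB = 128
CHANNELS_PER_CPU = 8          # from the specification table
TRANSFERS_PER_SEC = 3200e6    # DDR4-3200: 3200 mega-transfers per second
BYTES_PER_TRANSFER = 8        # assumption: standard 64-bit DDR4 channel

# Capacity: every slot populated with the largest supported RDIMM.
capacity_tb = CPUS * DIMM_SLOTS_PER_CPU * DIMM_SIZE_GB / 1024

# Bandwidth: channels x transfer rate x bytes per transfer.
bandwidth_per_cpu_gbs = CHANNELS_PER_CPU * TRANSFERS_PER_SEC * BYTES_PER_TRANSFER / 1e9
bandwidth_system_gbs = CPUS * bandwidth_per_cpu_gbs

print(f"Maximum memory capacity: {capacity_tb:.0f} TB")
print(f"Peak memory bandwidth per CPU: {bandwidth_per_cpu_gbs:.1f} GB/s")
print(f"Peak memory bandwidth per system: {bandwidth_system_gbs:.1f} GB/s")
```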

Final Thoughts

Lenovo is committed to liquid cooling and has leveraged that know-how to develop things like the L2A heat exchanger. As the power density continues to increase inside servers, vendors need to come up with creative methods to remove thermal load from components and pass it out of the system. Not all customers need or want full liquid cooling solutions. Lenovo, however, can deliver solutions to meet customer cooling demands with air-cooled, partially water-cooled, and fully water-cooled servers in its portfolio.

The first generation of Neptune™ delivered liquid cooling to only CPUs and memory. Since then, Lenovo’s Neptune liquid cooling system has expanded to include voltage regulation, storage, PCIe, and now GPUs. Lenovo has even released a liquid-cooled power supply that eliminates fans. Looking to the future, Lenovo sees liquid cooling as the key to handling heat generated by future generations of CPUs and GPUs and the way to maintain the density and footprint to which enterprise customers have become accustomed.

This report is sponsored by Lenovo. All views and opinions expressed in this report are based on our unbiased view of the product(s) under consideration.
