The Mellanox SX6036 is the second Mellanox managed switch to join the rack in the StorageReview Enterprise Lab. The SX6036 is designed for top-of-rack leaf connectivity, building clusters, and carrying converged LAN and SAN traffic. Its integrated InfiniBand Subnet Manager can support an InfiniBand fabric of up to 648 nodes. While we’ve found 1GbE, 10GbE and 40GbE to be compelling interconnects in the lab, there are times when you need more headroom to ensure that storage, not the network fabric, is the throughput and latency bottleneck. To that end, we are using the InfiniBand switch and related gear with high-end storage arrays and in situations where speed is not just desirable but a requirement for doing business.

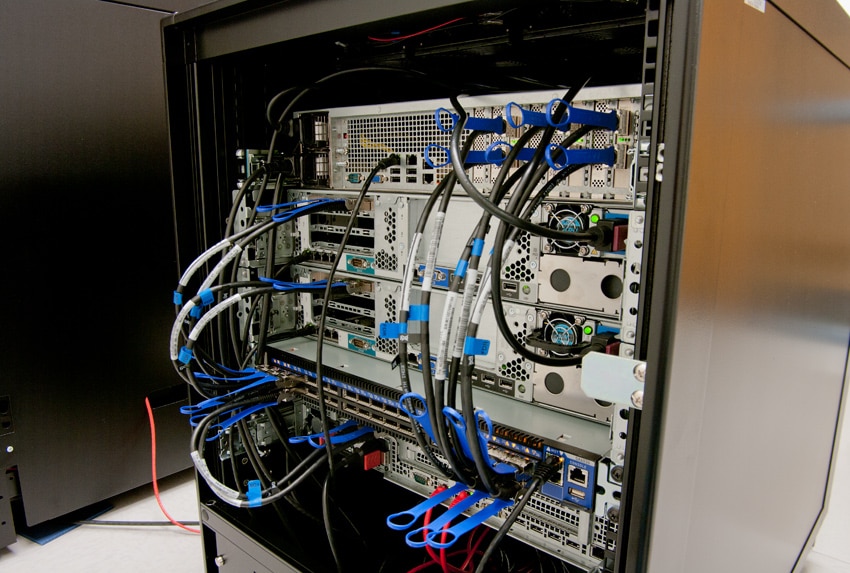

StorageReview’s Cluster-in-a-Box featuring three Romley servers, the Mellanox SX6036 switch, and the EchoStreams FlacheSAN2

Mellanox’s SX6036 switch system is part of our lab’s end-to-end Mellanox interconnect solution, which also includes our Mellanox SX1036 10/40GbE Ethernet switch. The SX6036 shares much of its design and layout with the SX1036; both are 1U, 36-port switches. The SX6036 delivers up to 56Gb/s of full bidirectional bandwidth per port, for a cumulative 4.032Tb/s of non-blocking bandwidth, with 170ns port-to-port latency.
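The 4.032Tb/s figure follows directly from the port count: in a non-blocking switch every port can transmit and receive at full rate at the same time, so aggregate capacity is ports x per-port rate x 2 directions. A quick sketch of that arithmetic (the function name is ours, purely illustrative):

```python
# Aggregate capacity of a non-blocking switch: every port can send and
# receive at full rate simultaneously, so count both directions.
PORTS = 36
PORT_RATE_GBPS = 56  # FDR 4X per-port rate quoted for the SX6036


def aggregate_tbps(ports: int, rate_gbps: float) -> float:
    """Return full-duplex, non-blocking switching capacity in Tb/s."""
    return ports * rate_gbps * 2 / 1000


print(f"{aggregate_tbps(PORTS, PORT_RATE_GBPS):.3f} Tb/s")  # 4.032 Tb/s
```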

Mellanox’s FDR InfiniBand technology uses 64b/66b encoding and raises the per-lane signaling rate to 14Gb/s when used with approved Mellanox cables. When used with designated ConnectX-3 adapters, the SX6036 also supports FDR10, a non-standard InfiniBand data rate. With FDR10, each lane of a 4X port runs at a bit rate of 10.3125Gb/s with 64b/66b encoding, resulting in an effective bandwidth of 40Gb/s. Because QDR signals at 10Gb/s per lane with 8b/10b encoding (32Gb/s effective for a 4X port), FDR10 delivers 25% more bandwidth than QDR.
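The encoding math is easy to check: effective bandwidth is lanes x per-lane signaling rate x encoding efficiency. The sketch below assumes the standard per-lane rates of 10Gb/s for QDR and 14.0625Gb/s for FDR (the "14Gb/s" above is the rounded figure); exact fractions avoid floating-point noise in the comparison.

```python
from fractions import Fraction

LANES = 4  # a 4X InfiniBand port

# name -> (per-lane signaling rate in Gb/s, (data bits, line bits))
RATES = {
    "QDR": (Fraction(10), (8, 10)),            # 8b/10b encoding
    "FDR10": (Fraction("10.3125"), (64, 66)),  # 64b/66b encoding
    "FDR": (Fraction("14.0625"), (64, 66)),    # 64b/66b encoding
}


def effective_gbps(name: str) -> Fraction:
    """Usable data rate of a 4X port after line-encoding overhead."""
    lane_rate, (data_bits, line_bits) = RATES[name]
    return LANES * lane_rate * Fraction(data_bits, line_bits)


for name in RATES:
    print(f"{name:6s} {float(effective_gbps(name)):.2f} Gb/s")
# QDR    32.00 Gb/s
# FDR10  40.00 Gb/s
# FDR    54.55 Gb/s

gain = effective_gbps("FDR10") / effective_gbps("QDR") - 1
print(f"FDR10 over QDR: {float(gain):.0%}")  # FDR10 over QDR: 25%
```

This also shows why FDR10 is attractive: its 10.3125Gb/s lanes carry more usable data than QDR's 10Gb/s lanes because 64b/66b wastes about 3% of the line rate, versus 20% for 8b/10b.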

SX6036 Key Features

- 36 FDR (56Gb/s) ports in a 1U switch

- 4Tb/s aggregate switching capacity

- Compliant with IBTA 1.2.1 and 1.3

- FDR/FDR10 support for Forward Error Correction (FEC)

- 9 virtual lanes: 8 data + 1 management

- 256 to 4Kbyte MTU

- 4 x 48K entry linear forwarding database
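A linear forwarding table (LFT) is conceptually just an array indexed by destination LID whose entry is the egress port. Unicast LIDs run from 0x0001 to 0xBFFF, so a 48K-entry (49,152-slot) table covers the entire unicast range. The class below is an illustrative sketch of a single such table, not Mellanox's implementation; the class name and the use of 255 as a "no route" marker are our assumptions.

```python
from typing import Optional

UNICAST_LID_MAX = 0xBFFF  # highest unicast LID in InfiniBand
LFT_SIZE = 48 * 1024      # 49,152 entries: indices 0..0xBFFF
NUM_PORTS = 36            # external ports; port 0 is the switch's own port
UNROUTED = 255            # assumption: marker meaning "no route / drop"


class LinearForwardingTable:
    """Hypothetical sketch: destination LID -> egress port, one byte each."""

    def __init__(self, size: int = LFT_SIZE) -> None:
        self.ports = bytearray([UNROUTED]) * size

    def set_route(self, dlid: int, port: int) -> None:
        if not 1 <= dlid <= UNICAST_LID_MAX:
            raise ValueError(f"LID {dlid:#x} is not a unicast LID")
        if not 0 <= port <= NUM_PORTS:
            raise ValueError(f"port {port} is out of range")
        self.ports[dlid] = port

    def lookup(self, dlid: int) -> Optional[int]:
        """Return the egress port for dlid, or None if no route is set."""
        if not 1 <= dlid <= UNICAST_LID_MAX:
            return None
        port = self.ports[dlid]
        return None if port == UNROUTED else port


lft = LinearForwardingTable()
lft.set_route(0x0012, 7)
print(lft.lookup(0x0012))  # 7
print(lft.lookup(0x0013))  # None
```

A flat array makes forwarding a single indexed read per packet, which is why hardware favors linear tables over hashed or tree lookups when the address space is this small.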

Upcoming Features

Mellanox has also announced a suite of functionality which will be enabled via future updates. These updates will focus on:

- InfiniBand to InfiniBand Routing

- Multiple switch partitions (up to 8)

- Adaptive routing

- Congestion control

- Port mirroring

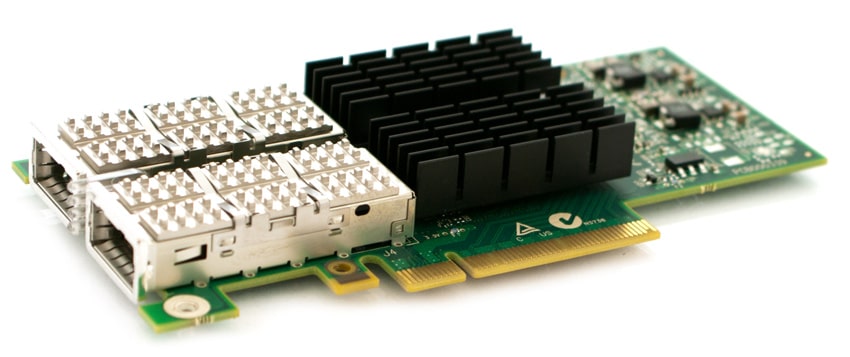

ConnectX-3 VPI Adapter

We have matched the SX6036 with Mellanox’s ConnectX-3 VPI adapter cards. These ConnectX-3 cards support InfiniBand, Ethernet, and Data Center Bridging (DCB) fabric connectivity, providing a flexible interconnect solution with auto-sensing capability. ConnectX-3’s FlexBoot enables servers to boot from remote storage targets via InfiniBand or LAN.

ConnectX-3 Virtual Protocol Interconnect adapters support OpenFabrics-based RDMA protocols and use IBTA RoCE (RDMA over Converged Ethernet) to provide RDMA services over Layer 2 Ethernet.

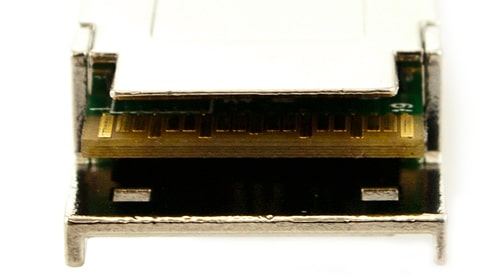

To complete our end-to-end Mellanox networking configuration, we use Mellanox QSFP cabling for our InfiniBand needs. To connect 56Gb/s InfiniBand adapters to our SX6036 switch, we keep 0.5m, 1m, and 3m passive QSFP cables on hand, choosing a length based on how closely the equipment is grouped.
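Choosing among the stocked lengths amounts to taking the shortest cable that still covers the run, which keeps slack and clutter in the rack to a minimum. A trivial sketch, assuming you have measured the port-to-port run in meters; `pick_cable` is a hypothetical helper, not a Mellanox tool.

```python
# Passive QSFP lengths we keep on hand, in meters.
LAB_CABLE_LENGTHS_M = (0.5, 1.0, 3.0)


def pick_cable(run_m: float, stock=LAB_CABLE_LENGTHS_M) -> float:
    """Return the shortest stocked cable that covers a measured run."""
    for length in sorted(stock):
        if length >= run_m:
            return length
    raise ValueError(f"no stocked cable covers a {run_m} m run")


print(pick_cable(0.4))  # 0.5
print(pick_cable(0.8))  # 1.0
print(pick_cable(2.0))  # 3.0
```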

Shown above is the QSFP (Quad Small Form-factor Pluggable) connector, which we use to connect 40GbE or 56Gb/s InfiniBand equipment.

ConnectX-3 VPI Adapter Key Features

- InfiniBand IBTA Specification 1.2.1 compliant

- 16 million I/O channels

- 256 to 4Kbyte MTU, 1Gbyte messages

- 1μs MPI ping latency

- Up to 56Gb/s InfiniBand or 40 Gigabit Ethernet per port

- Precision Clock Synchronization

- Hardware-based QoS and congestion control

- Fibre Channel encapsulation (FCoIB or FCoE)

- Ethernet encapsulation (EoIB)

- RoHS-R6

- Hardware-based I/O Virtualization

- Single Root IOV

- Multiple queues per virtual machine

- Enhanced QoS for vNICs

- VMware NetQueue support
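The MTU and message-size entries above interact: the adapter's transport hardware segments each message into MTU-sized packets, so a larger MTU means far fewer packets (and far less per-packet overhead) for the same transfer. The sketch below counts packets for a maximum-size message, assuming "1 Gbyte" means 2^30 bytes; the function name is illustrative.

```python
# Valid InfiniBand path MTUs in bytes (256 bytes up to 4KB).
IB_MTUS = (256, 512, 1024, 2048, 4096)


def packets_needed(message_bytes: int, mtu: int) -> int:
    """Number of MTU-sized packets needed to carry one message."""
    if mtu not in IB_MTUS:
        raise ValueError(f"{mtu} is not a valid InfiniBand MTU")
    if message_bytes <= 0:
        raise ValueError("this sketch only handles non-empty messages")
    return -(-message_bytes // mtu)  # ceiling division without floats


ONE_GBYTE = 1 << 30  # assumption: "1 Gbyte" read as 2**30 bytes
for mtu in (256, 4096):
    print(f"MTU {mtu:>4}: {packets_needed(ONE_GBYTE, mtu):,} packets")
# MTU  256: 4,194,304 packets
# MTU 4096: 262,144 packets
```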

CPU Offloading and Acceleration

ConnectX-3 offloads protocol processing and data movement overhead, such as RDMA and send/receive semantics, from the CPU. CORE-Direct offloads application overhead such as data broadcasting and gathering, as well as global synchronization communication routines. GPU communication acceleration eliminates unnecessary internal data copies to reduce run time. Applications using TCP/UDP/IP transport benefit from hardware-based stateless offload engines that reduce the CPU overhead of IP transport.

ConnectX-3 VPI Adapters:

- MCX353A-QCBT Single QDR 40Gb/s or 10GbE

- MCX354A-QCBT Dual QDR 40Gb/s or 10GbE

- MCX353A-FCBT Single FDR 56Gb/s or 40GbE

- MCX354A-FCBT Dual FDR 56Gb/s or 40GbE

Design and Build

This switch can be installed in any standard 19-inch rack with a depth of 40cm to 80cm. The power side of the switch includes a hot-swap power supply module, a blank cover in place of the redundant PSU when one is not installed, and the hot-swap fan tray.

The connector side of the switch features 36 QSFP ports, system LEDs, and management connection ports.

There are five management-related interfaces:

- 2 x 100M/1Gb Ethernet connectors labeled “MGT”

- 1 USB port to update software or firmware

- 1 connector labeled “CONSOLE” to connect to the host PC

- 1 I2C banana connector on the power supply side for diagnostic and repair use

The MSX6036 is available in a standard-depth design (MSX6036F-1SFR and MSX6036T-1SFR) or a short-depth design (MSX6036F-1BRR and MSX6036T-1BRR), with forward and reverse airflow options. Mellanox offers long and short rail kits for the SX6036. Both the standard and short switches can be mounted with the long rail kit, while the short kit only works with the short switch.
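The rail-kit rule is easy to get backwards when ordering, so here it is as a small lookup, using only the part numbers and compatibility listed above. The dictionaries and the `rail_kit_fits` helper are hypothetical conveniences, not part of any Mellanox ordering tool.

```python
# Chassis depth by part number, as listed above.
SX6036_DEPTH = {
    "MSX6036F-1SFR": "standard",
    "MSX6036T-1SFR": "standard",
    "MSX6036F-1BRR": "short",
    "MSX6036T-1BRR": "short",
}

# Which chassis depths each rail kit can mount.
RAIL_KIT_FITS = {
    "long": {"standard", "short"},
    "short": {"short"},
}


def rail_kit_fits(part_number: str, kit: str) -> bool:
    """True if the given rail kit can mount the given SX6036 model."""
    return SX6036_DEPTH[part_number] in RAIL_KIT_FITS[kit]


print(rail_kit_fits("MSX6036F-1SFR", "long"))   # True
print(rail_kit_fits("MSX6036F-1SFR", "short"))  # False
print(rail_kit_fits("MSX6036T-1BRR", "short"))  # True
```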

Power

The switch’s power supplies auto-sense input voltages from 100 to 240VAC. Like all of Mellanox’s SwitchX QSFP switches, the SX6036 is designed to support active cables, with a maximum power per module of 2W. Typical FDR power consumption is 231W with active cables or 126W with passive cables.

The SX6036 ships with one factory-installed PSU; a second, redundant PSU must be added to support hot-swap replacement. The primary power supply unit (PS1) is on the right side of the power-side panel, with PS2 on the left. With two power supplies installed, either PSU can be extracted while the switch is operating. To extract a PSU, disconnect its power cord, then push the latch release while pulling outward on the PSU handle. To install a PSU, slide the unit into the opening until you feel slight resistance, then continue pressing until it is fully seated. When the PSU is properly installed, the latch snaps into place and you can attach the power cord.

Cooling

The SX6036 has one redundant, hot-swappable fan unit and is available in configurations for two airflow directions. To extract the fan module, push both latches toward each other while pulling the module up and out of the switch. If you remove the fan while the switch is powered, the fan status indicator for that module turns off once the fan is unseated.

To install a fan module, slide it into the opening until you feel slight resistance, then continue pressing until it is fully seated. With the switch powered on, the corresponding fan status indicator turns green.

Management

As with the SX1036, the first time you configure the SX6036 you must connect the host PC’s DB9 serial port to the switch’s RJ-45 console port using the supplied harness, then use terminal emulation software on the host PC to enable remote and web management functions. Mellanox offers two solutions for managing the SX6036. MLNX-OS provides chassis management for firmware, power supplies, fans, ports, and other interfaces, as well as integrated subnet management. The SX6036 can also be paired with Unified Fabric Manager (UFM) to manage scale-out InfiniBand computing environments.

MLNX-OS includes a CLI, WebUI, SNMP, chassis management software, and InfiniBand management software (OpenSM). The SX6036’s InfiniBand Subnet Manager supports up to 648 nodes; Mellanox recommends its Unified Fabric Manager for fabrics with more than 648 nodes. The Subnet Manager discovers the fabric and applies traffic-related configuration such as QoS, routing, and partitioning to the fabric devices. Each subnet needs one subnet manager running either on the switch itself (switch-based) or on one of the nodes connected to the fabric (host-based).
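To make the Subnet Manager’s job more concrete, here is a toy model of one sweep: discover the fabric breadth-first from the SM, assign each node a LID, then compute a min-hop forwarding table for every switch. This is a heavily simplified sketch, not OpenSM’s code: the topology is a hypothetical hand-written dictionary rather than being probed with management packets, each node gets a single LID (real SMs assign LIDs per port), QoS and partitioning are ignored, and min-hop is only one of several routing policies OpenSM offers.

```python
from collections import deque

MAX_NODES = 648  # subnet size the SX6036's built-in SM is rated for

# Hypothetical toy fabric: node -> {local port: neighbor node}
FABRIC = {
    "sx6036": {1: "host-a", 2: "host-b", 3: "leaf-2"},
    "leaf-2": {1: "sx6036", 2: "host-c"},
    "host-a": {1: "sx6036"},
    "host-b": {1: "sx6036"},
    "host-c": {1: "leaf-2"},
}
SWITCHES = {"sx6036", "leaf-2"}


def discover(fabric, switches, sm_node):
    """Breadth-first sweep from the SM, assigning LIDs in discovery order.

    Only switches are expanded, since host adapters do not forward traffic.
    """
    lids = {sm_node: 1}
    queue = deque([sm_node])
    while queue:
        node = queue.popleft()
        for neighbor in fabric[node].values():
            if neighbor in lids:
                continue
            if len(lids) >= MAX_NODES:
                raise RuntimeError("fabric exceeds the SM's node limit")
            lids[neighbor] = len(lids) + 1
            if neighbor in switches:
                queue.append(neighbor)
    return lids


def min_hop_tables(fabric, switches, lids):
    """For each switch, map destination LID -> egress port on a shortest path."""
    tables = {}
    for sw in switches:
        # BFS outward from this switch, remembering which local port
        # each destination was first reached through.
        first_port = {sw: None}
        queue = deque([sw])
        while queue:
            node = queue.popleft()
            for port, neighbor in fabric[node].items():
                if neighbor in first_port:
                    continue
                first_port[neighbor] = port if node == sw else first_port[node]
                if neighbor in switches:
                    queue.append(neighbor)
        # Traffic addressed to the switch itself goes to its port 0.
        tables[sw] = {
            lids[dest]: (0 if port is None else port)
            for dest, port in first_port.items()
        }
    return tables


lids = discover(FABRIC, SWITCHES, "sx6036")
tables = min_hop_tables(FABRIC, SWITCHES, lids)
print(lids)
print(tables["sx6036"])
```

For this toy fabric the SX6036 sends traffic for both the leaf switch (LID 4) and host-c behind it (LID 5) out of port 3, which is exactly the "shortest path, first hop" behavior a min-hop policy encodes in each switch’s forwarding table.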

Conclusion

The Mellanox SX6036 56Gb InfiniBand Switch is a core component of the StorageReview Enterprise Lab, pairing with Mellanox interface cards and cabling to give us a complete high-speed InfiniBand fabric. With new flash storage arrays integrating 56Gb IB interconnects, the Mellanox networking gear gives us the backbone we need to properly review and stress the fastest storage arrays. As interconnects get faster, storage becomes the new performance bottleneck. Stay tuned as we try to balance the equation with an expanding set of arrays and servers capable of stressing the InfiniBand fabric.

Mellanox SX6036 InfiniBand Switch Product Page

ConnectX-3 VPI Adapter Product Page