The QSAN XCubeSAN XS1200 Series is a dual-controller SAN designed to meet the needs of small and medium businesses (SMBs) and remote/branch offices (ROBO). With support for both Fibre Channel and iSCSI, the XS1200 can handle the workloads typical of those environments. Through SANOS 4.0, QSAN offers a wide range of array features, highlighted by thin provisioning, SSD read/write cache, tiering, snapshots, local volume clones, and remote replication. Internally, each controller is powered by an Intel D1500 dual-core CPU and 4GB of DDR4 memory (expandable to 32GB). For those who need to scale up, QSAN offers the XD5300 expansion unit, which lets the XS1226 reach up to 286 drives in total.
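The 286-drive ceiling, like the other per-model maximums in the spec sheet below, is consistent with simple arithmetic: the head unit's bays plus ten 26-bay XD5326 expansion enclosures. The sketch assumes that configuration is how the maximum is reached; QSAN lists the totals without spelling out the derivation.

```python
# Sketch: reproduce the "Max. Drives Supported" figures from the spec sheet.
# Assumption: the maximum is reached with ten XD5326 (26-bay) expansion units;
# the spec sheet lists the totals but not how they are derived.
HEAD_UNIT_BAYS = {"XS1224": 24, "XS1216": 16, "XS1212": 12, "XS1226": 26}
MAX_EXPANSION_UNITS = 10  # "Up to 10 expansion units" per the spec sheet
XD5326_BAYS = 26          # the largest XD5300 enclosure by bay count


def max_drives(model: str) -> int:
    """Head-unit bays plus ten fully populated XD5326 shelves."""
    return HEAD_UNIT_BAYS[model] + MAX_EXPANSION_UNITS * XD5326_BAYS


for model in HEAD_UNIT_BAYS:
    print(model, max_drives(model))
```

The printed totals (284, 276, 272, and 286) match the maximums QSAN publishes for each model.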

Within the XS1200 family, QSAN offers a range of form factors, each with either one (S) or two (D) controllers. The XS1224S/D is a 4U system with 24 3.5″ bays, the XS1216S/D is a 3U system with 16 3.5″ bays, and the XS1212S/D is a 2U system with 12 3.5″ bays. QSAN also offers a model optimized for flash, the XS1226, which is the system under review here in its dual-controller configuration. The XS1226D offers 26 2.5″ bays across the front, two more than most arrays or servers typically provide. How those extra bays are used depends on the RAID configuration. Our testing was done in RAID10, so the extra bays served as hot spares; other RAID configurations could use them for extra capacity.

With this much flash available, controller connectivity is important. Each controller offers two host card slots that accept 1GbE, 10GbE, or Fibre Channel cards, in any combination. Each controller also has twin 10GbE ports on board, so with quad-port 10GbE cards in both slots it can offer up to ten 10GbE ports. For Fibre Channel, each slot accepts a dual- or quad-port 16Gb card, although Slot 2 is limited to 20Gb of bandwidth.
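The ten-port figure comes from adding the onboard ports to the largest 10GbE card listed for each slot in the spec sheet. The sketch below counts ports only; it does not model the 20Gb bandwidth cap on Slot 2, which means a quad-port card there cannot run all four ports at line rate simultaneously.

```python
# Sketch: maximum 10GbE port count per controller, using the spec-sheet options.
ONBOARD_10GBE = 2  # 2 x 10GBASE-T iSCSI ports on each controller

# 10GbE card options per host card slot: 4 x 10GbE (SFP+) or 2 x 10GbE (RJ45).
SLOT_10GBE_OPTIONS = {
    "slot1": [4, 2],
    "slot2": [4, 2],  # note: Slot 2 bandwidth is capped at 20Gb
}


def max_10gbe_ports() -> int:
    """Onboard ports plus the largest 10GbE card in each slot."""
    return ONBOARD_10GBE + sum(max(opts) for opts in SLOT_10GBE_OPTIONS.values())


print(max_10gbe_ports())  # 10
```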

Data integrity and reliability are important in a system like this. QSAN claims five nines (99.999%) of reliability, on par with most enterprise systems. For those who want an extra layer of data-path protection, QSAN offers an optional Cache-to-Flash module, which pairs an M.2 SSD with either a Battery Backup Module (BBM) or a Super Capacitor Module (SCM) to protect in-flight data in the event of unexpected power loss.
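Five nines is usually expressed as availability. Read that way, 99.999% leaves roughly five minutes of downtime per year. The sketch below assumes a 365.25-day year and only illustrates the arithmetic; it says nothing about how QSAN measures its claim.

```python
# Sketch: convert an availability fraction into expected downtime per year.
MINUTES_PER_YEAR = 365.25 * 24 * 60  # 525,960 minutes, assuming a 365.25-day year


def downtime_minutes_per_year(availability: float) -> float:
    """Minutes per year the system is expected to be unavailable."""
    return (1.0 - availability) * MINUTES_PER_YEAR


print(round(downtime_minutes_per_year(0.99999), 2))  # 5.26
```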

As configured, and without drives, the XS1226D we reviewed cost $9,396 (base XS1226D, plus rails and two 4-port 16Gb FC cards).

QSAN XCubeSAN XS1200 Series Specifications

- RAID Controller

- Dual-active controller (Model: XS1224D / XS1216D / XS1212D / XS1226D)

- Single-upgradable controller (Model: XS1224S / XS1216S / XS1212S / XS1226S)

- Processor: Intel D1500 dual core processor

- Memory (per Controller): DDR4 ECC 4GB, up to 32GB (two DIMM slots; populating both boosts performance)

- Host Connectivity (per Controller):

- Host Card Slot 1 (optional)

- 4 x 16Gb FC (SFP+) ports

- 2 x 16Gb FC (SFP+) ports

- 4 x 10GbE iSCSI (SFP+) ports

- 2 x 10GbE iSCSI (RJ45) ports

- 4 x 1GbE iSCSI (RJ45) ports

- Host Card Slot 2 (optional)

- 4 x 16Gb FC (SFP+) ports (Slot 2 provides 20Gb bandwidth)

- 2 x 16Gb FC (SFP+) ports (Slot 2 provides 20Gb bandwidth)

- 4 x 10GbE iSCSI (SFP+) ports (Slot 2 provides 20Gb bandwidth)

- 2 x 10GbE iSCSI (RJ45) ports

- 4 x 1GbE iSCSI (RJ45) ports

- Onboard

- 2 x 10GBASE-T iSCSI ports

- 1 x 1GbE management port

- Expansion Connectivity (per Controller): Built-in 2 x 12Gb/s SAS wide ports (SFF-8644)

- Drive Type:

- XS1224 / XS1216 / XS1212

- Mix & match 3.5″/2.5″ SAS, NL-SAS, SED HDD

- 2.5″ SAS, SATA SSD (6Gb MUX board needed for 2.5″ SATA drives in dual controller system)

- XS1226

- 2.5″ SAS, NL-SAS, SED HDD

- 2.5″ SAS, SATA SSD (6Gb MUX board needed for 2.5″ SATA drives in dual controller system)

- Expansion Capabilities:

- Up to 10 expansion units using XD5300 series 12Gb SAS expansion enclosure

- XD5324 (4U 24-bay, LFF)

- XD5316 (3U 16-bay, LFF)

- XD5312 (2U 12-bay, LFF)

- XD5326 (2U 26-bay, SFF)

- Max. Drives Supported:

- XS1224 (4U 24-bay, LFF): 284

- XS1216 (3U 16-bay, LFF): 276

- XS1212 (2U 12-bay, LFF): 272

- XS1226 (2U 26-bay, SFF): 286

- Dimensions (H x W x D):

- 19” Rackmount

- XS1224 (4U 24-bay, LFF): 170.3 x 438 x 515 mm

- XS1216 (3U 16-bay, LFF): 130.4 x 438 x 515 mm

- XS1212 (2U 12-bay, LFF): 88 x 438 x 515 mm

- XS1226 (2U 26-bay, SFF): 88 x 438 x 491 mm

- Memory Protection:

- Cache-to-Flash module (optional)

- Battery backup module + Flash module (to protect all memory capacity)

- Super capacitor module + Flash module (to protect up to 16GB memory per controller)

- LCM (LCD Module): USB LCM (optional)

- Power Supply

- 80 PLUS Platinum, two redundant 770W (1+1)

- AC Input:

- 100~127V, 10A, 50-60Hz

- 200~240V, 5A, 50-60Hz

- DC Output:

- +12V, 63.4A

- +5VSB, 2.0A

- Fan Module: 2 x hot pluggable/redundant fan modules

- Software

- Storage Management:

- RAID levels 0, 1, 0+1, 3, 5, 6, 10, 30, 50, 60, and N-way mirror

- Flexible storage pool ownership

- Thin Provisioning (QThin) with space reclamation

- SSD Cache (QCache2)

- Auto Tiering (QTiering2)

- Global, local, and dedicated hot spares

- Write-through and write-back cache policy

- Online disk roaming

- Spreading RAID disk drives across enclosures

- Background I/O priority setting

- Instant RAID volume availability

- Fast RAID rebuild

- Online storage pool expansion

- Online volume extension

- Online volume migration

- Auto volume rebuilding

- Instant volume restoration

- Online RAID level migration

- SED drive support

- Video editing mode for enhanced performance

- Disk drive health check and S.M.A.R.T. attributes

- Storage pool parity check and media scan for disk scrubbing

- SSD wear lifetime indicator

- Disk drive firmware batch update

- iSCSI Host Connectivity:

- Proven QSOE 2.0 optimization engine

- CHAP & Mutual CHAP authentication

- SCSI-3 PR (Persistent Reservation for I/O fencing) support

- iSNS support

- VLAN (Virtual LAN) support

- Jumbo frame (9,000 bytes) support

- Up to 256 iSCSI targets

- Up to 512 hosts per controller

- Up to 1,024 sessions per controller

- Fibre Channel Host Connectivity:

- Proven QSOE 2.0 optimization engine

- FCP-2 & FCP-3 support

- Auto detect link speed and topology

- Topology supports point-to-point and loop

- Up to 256 hosts per controller

- High Availability:

- Dual-Active (Active/Active) SAN controllers

- Cache mirroring through NTB bus

- ALUA support

- Management port seamless failover

- Fault-tolerant and redundant modular components for SAN controller, PSU, FAN module, and dual port disk drive interface

- Dual-ported HDD tray connector

- Multipath I/O and load balancing support (MPIO, MC/S, Trunking, and LACP)

- Firmware update with zero system downtime

- Security:

- Secured Web (HTTPS), SSH (Secure Shell)

- iSCSI Force Field to protect from mutant network attack

- iSCSI CHAP & Mutual CHAP authentication

- SED drive support

- Storage Efficiency:

- Thin Provisioning (QThin) with space reclamation

- Auto Tiering (QTiering2) with 3 levels of storage tiers

- Networking:

- DHCP, Static IP, NTP, Trunking, LACP, VLAN, Jumbo frame (up to 9,000 bytes)

- Advanced Data Protection:

- Snapshot (QSnap), block-level, differential backup

- Writeable snapshot support

- Manual or scheduled tasks

- Up to 64 snapshots per volume

- Up to 64 volumes for snapshot

- Up to 4,096 snapshots per system

- Remote Replication (QReplica)

- Asynchronous, block-level, differential backup based on snapshot technology

- Traffic shaping for dynamic bandwidth control

- Manual or scheduled tasks

- Auto rollback to previous version if current replication fails

- Up to 32 scheduled tasks per controller

- Volume clone for local replication

- Configurable N-way mirroring

- Integration with Windows VSS (Volume Shadow Copy Service)

- Instant volume restoration

- Cache-to-Flash memory protection

- M.2 flash module

- Power module: BBM or SCM (Super Capacitor Module)

- USB and network UPS support with SNMP management

- Virtualization Certification:

- Server Virtualization & Clustering

- Latest VMware vSphere certification

- VMware VAAI for iSCSI & FC

- Windows Server 2016, 2012 R2 Hyper-V certification

- Microsoft ODX

- Latest Citrix XenServer certification

- Easy Management:

- USB LCM, serial console support, online firmware update

- Intuitive Web management UI, secured web (HTTPS), SSH (Secure Shell), LED indicators

- S.E.S. support, S.M.A.R.T. support, Wake-on-LAN, and Wake-on-SAS

- Green & Energy Efficiency:

- 80 PLUS Platinum power supply

- Wake-on-LAN to turn on or wake up the system only when necessary

- Auto disk spin-down

- Host Operating Systems Support:

- Windows Server 2008, 2008 R2, 2012, 2012 R2, 2016

- SLES (SUSE Linux Enterprise Server) 10, 11, 12

- RHEL (Red Hat Enterprise Linux) 5, 6, 7

- CentOS (Community Enterprise Operating System) 6, 7

- Solaris 10, 11

- FreeBSD 9, 10

- Mac OS X 10.11 or later

- Warranty

- System: 3 years

- Battery backup module: 1 year

- Super capacitor module: 1 year
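The DC output ratings in the power supply specs are consistent with the 770W rating. Summing volts times amps across the two rails gives about 770.8W, nearly all of it on the +12V rail. The sketch below is only a cross-check of the published numbers; actual draw depends on drive count and load.

```python
# Sketch: total DC output of one PSU from the spec-sheet rail ratings.
# Each entry is (volts, amps) as listed under "DC Output".
RAILS = {"+12V": (12.0, 63.4), "+5VSB": (5.0, 2.0)}


def total_dc_watts(rails: dict[str, tuple[float, float]]) -> float:
    """Sum of volts x amps over all output rails."""
    return sum(volts * amps for volts, amps in rails.values())


print(f"{total_dc_watts(RAILS):.1f} W")  # 770.8 W
```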

Design and Build

The XS1226D is a dual-controller, active/active storage array in a 2U chassis with 26 2.5″ bays for SAS HDDs or SSDs. The 26-bay format is somewhat unusual in this space, since most systems fit only 24 bays up front, which gives QSAN a bit of an edge over the competition. On the right side of the front panel are the system power button, the UID (Unique Identifier) button, the system access and system status LEDs, and a USB port for the optional USB LCM (LCD module).

The rear of the chassis houses the dual redundant power supplies and the dual controllers. Each controller has twin onboard 10GBASE-T ports in addition to an out-of-band management port. For additional connectivity, each controller has two host card slots, which can be loaded with dual- or quad-port 8/16Gb Fibre Channel cards or dual- or quad-port 1GbE/10GbE Ethernet cards. This gives users a wide range of options for attaching the storage to a diverse datacenter environment. Expansion is supported through two 12Gb/s SAS ports per controller, which connect SAS 3.0 expansion shelves.

Management and Usability

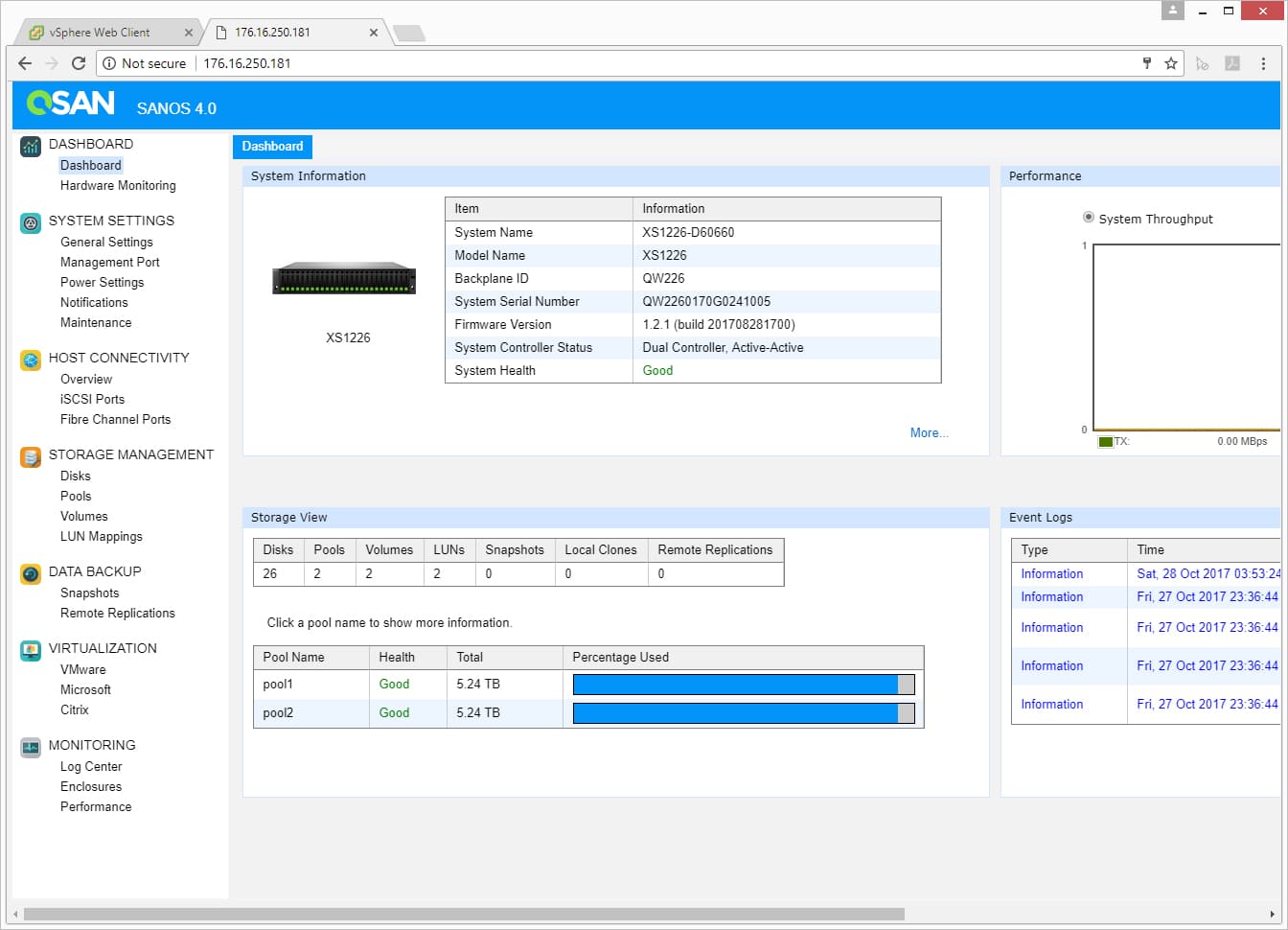

The QSAN XS1200 series runs the company’s SANOS operating system, currently in its 4.0 release. The OS has a simple, intuitive layout. Along the left-hand side of the screen are the main menus and sub-menus for functions such as Dashboard, System Settings, Host Connectivity, Storage Management, Data Backup, Virtualization, and Monitoring. Each main menu has sub-menus that let users drill down into specifics. In short, SANOS 4.0 gives users easy access to all the functions they need to manage a SAN.

The first screen we look at is the Dashboard, which gives users an overview of system information, performance, storage, and event logs.

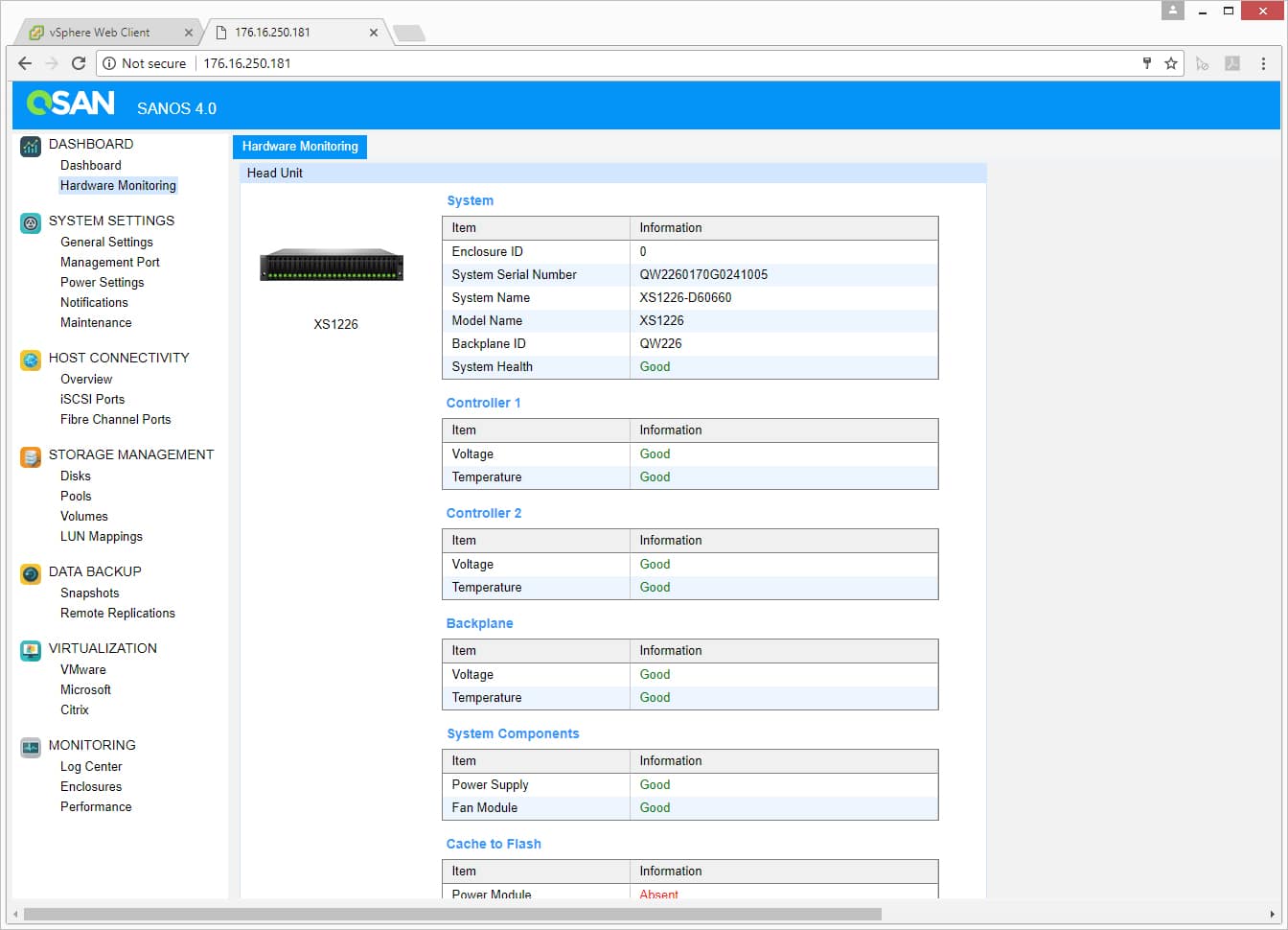

The only sub-menu under Dashboard is Hardware Monitoring. As the name implies, it lets users drill down into the hardware in the system and check whether each component is installed and functioning properly. At the bottom of the screen, for example, the power module for Cache-to-Flash shows up as absent because we did not install it.

Under System Settings, users can access general settings, management port, power settings, notifications, and maintenance. The maintenance menu provides system information (for the overall system and each controller), system updates, firmware synchronization, system identification, reset to defaults, configuration backup, volume restoration, and options to reboot or shut down the system.

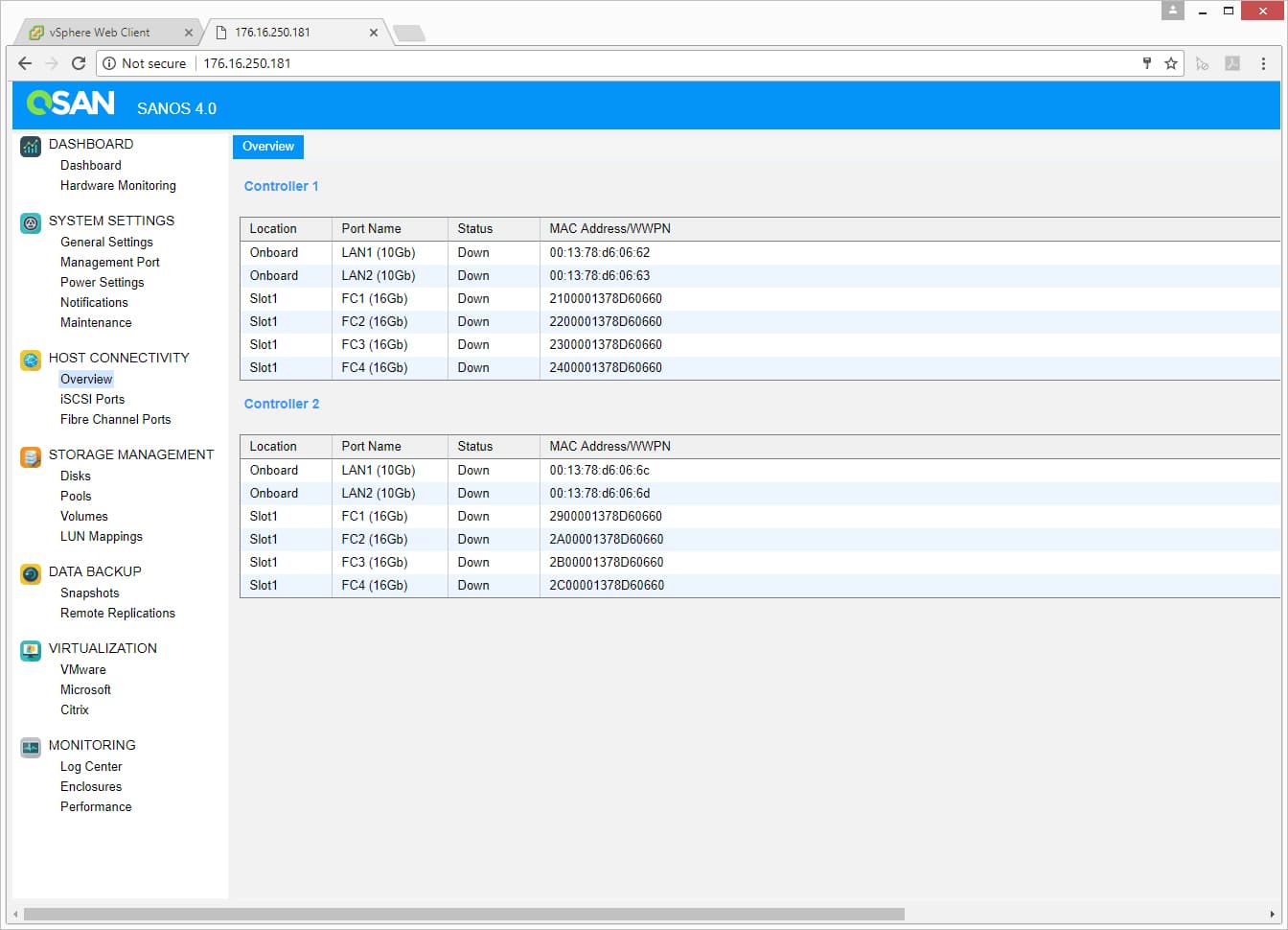

Host Connectivity gives users an overview of each controller, including port location, port name, status, and MAC address/WWPN. Users can also drill down further into either the iSCSI ports or the Fibre Channel ports.

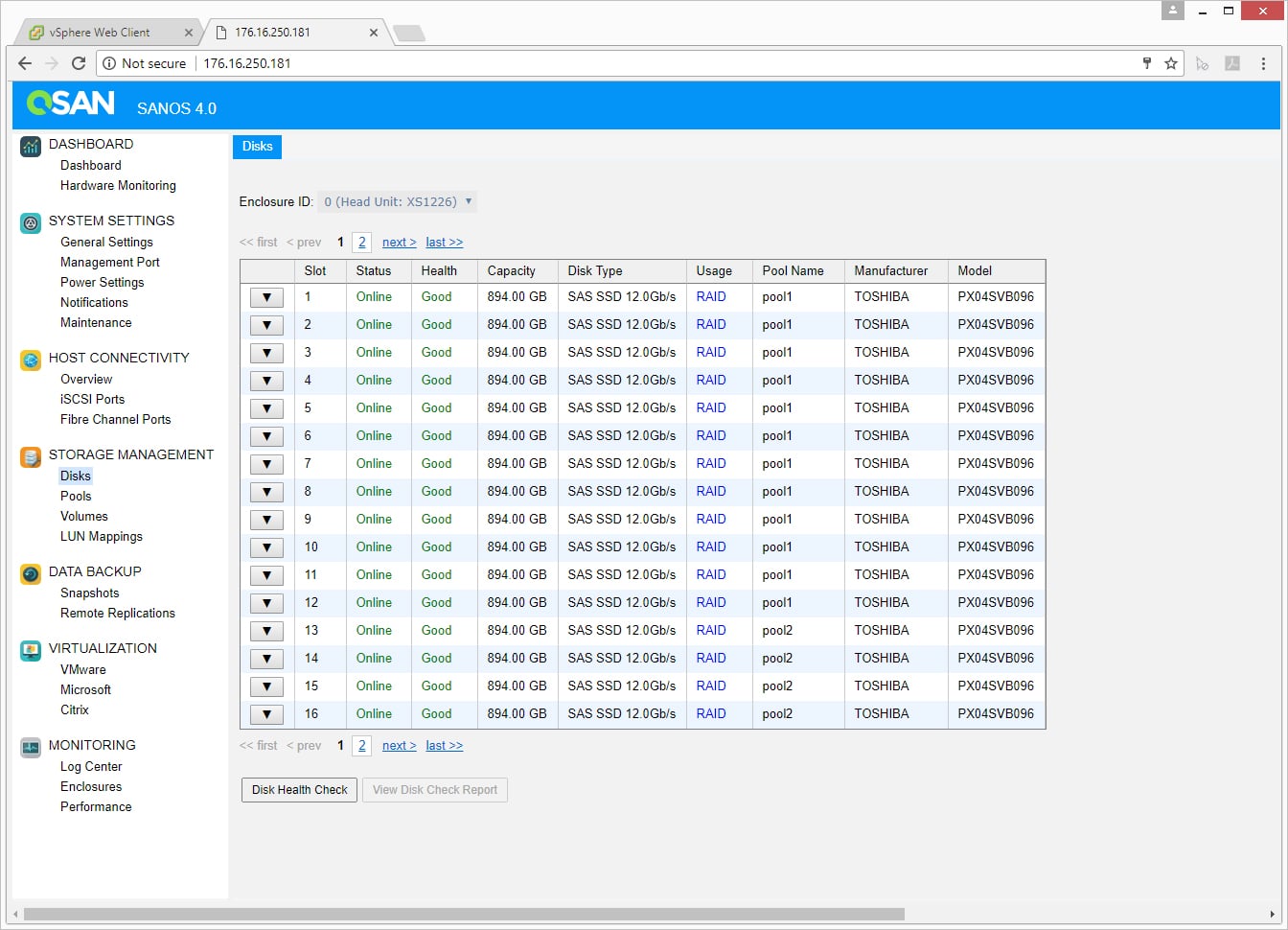

The last main menu we look at in this review is, of course, Storage Management. This menu has four sub-menus. The first covers Disks. Here one can easily see the slot each disk is in, along with its status, health, capacity, type (interface and whether it is an SSD or HDD), usage, pool name, manufacturer, and model.

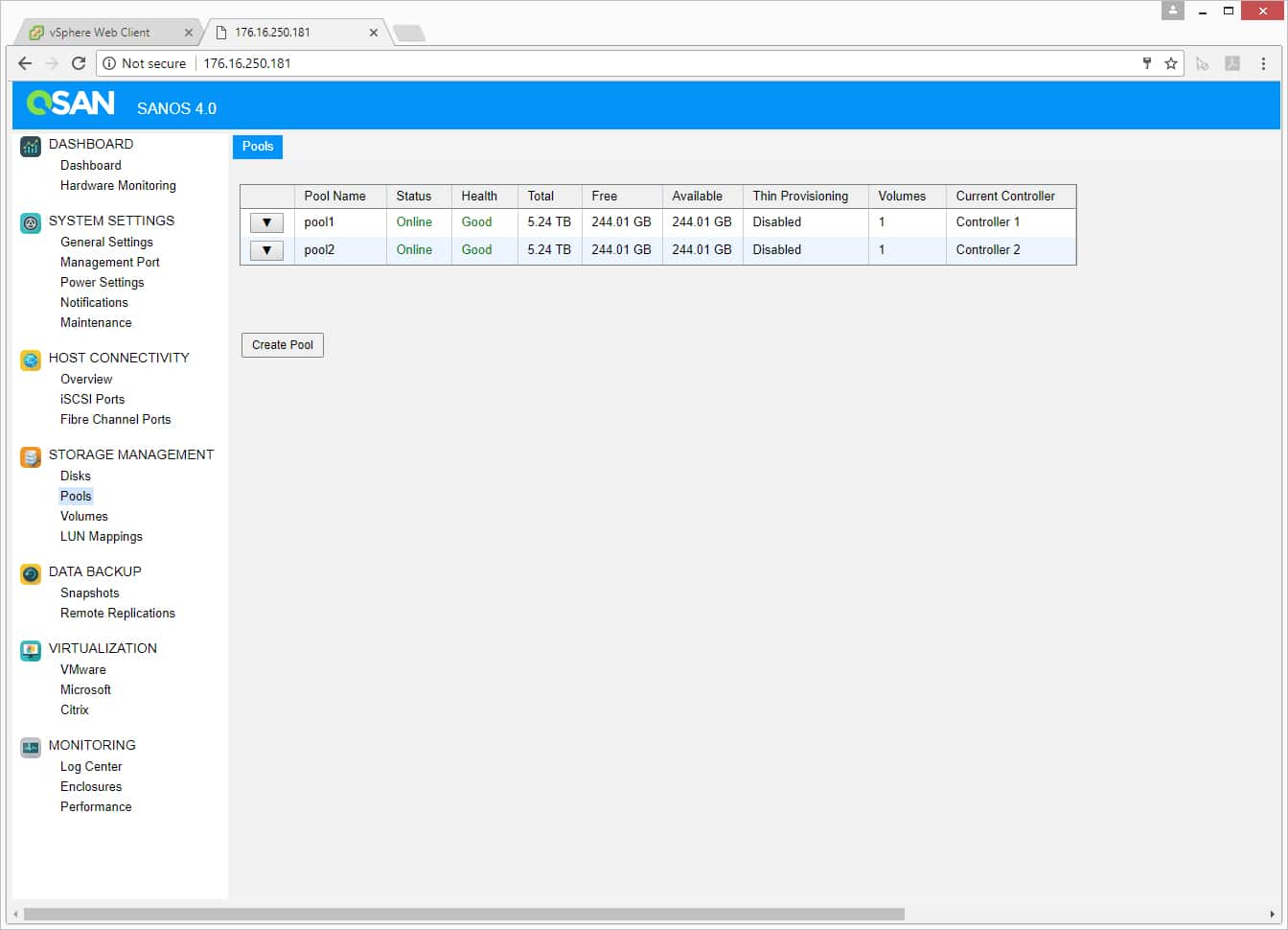

The next sub-menu covers pools. Here one can see the pool name, status, health, total capacity, free capacity, available capacity, whether thin provisioning is enabled, the volumes in the pool, and the current controller.

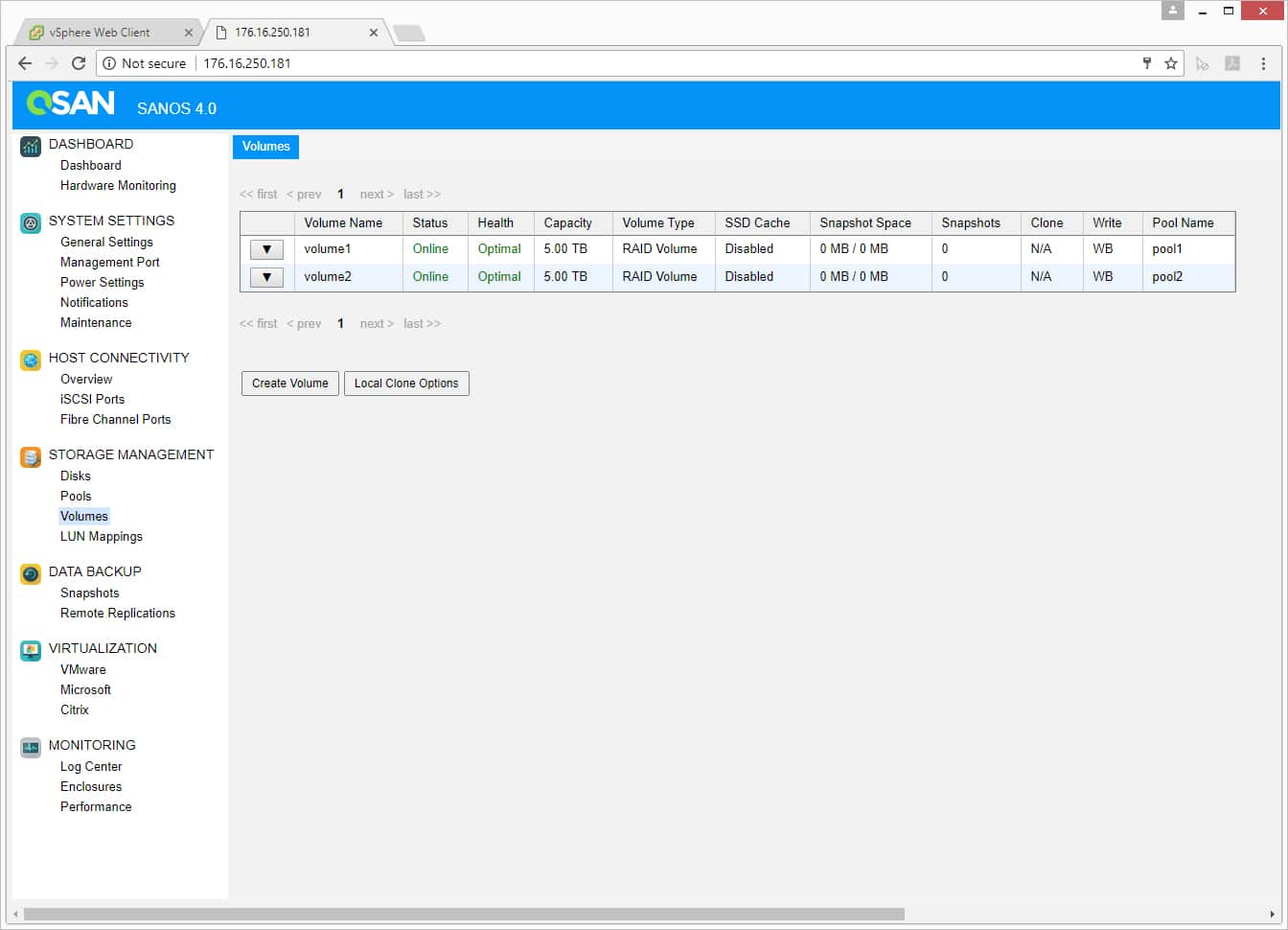

The Volumes sub-menu is similar to the others in this category, with the ability to create volumes and see information such as the volume name, status, health, capacity, type, whether SSD cache is enabled, snapshot space, the number of snapshots, clone, write, and pool name.

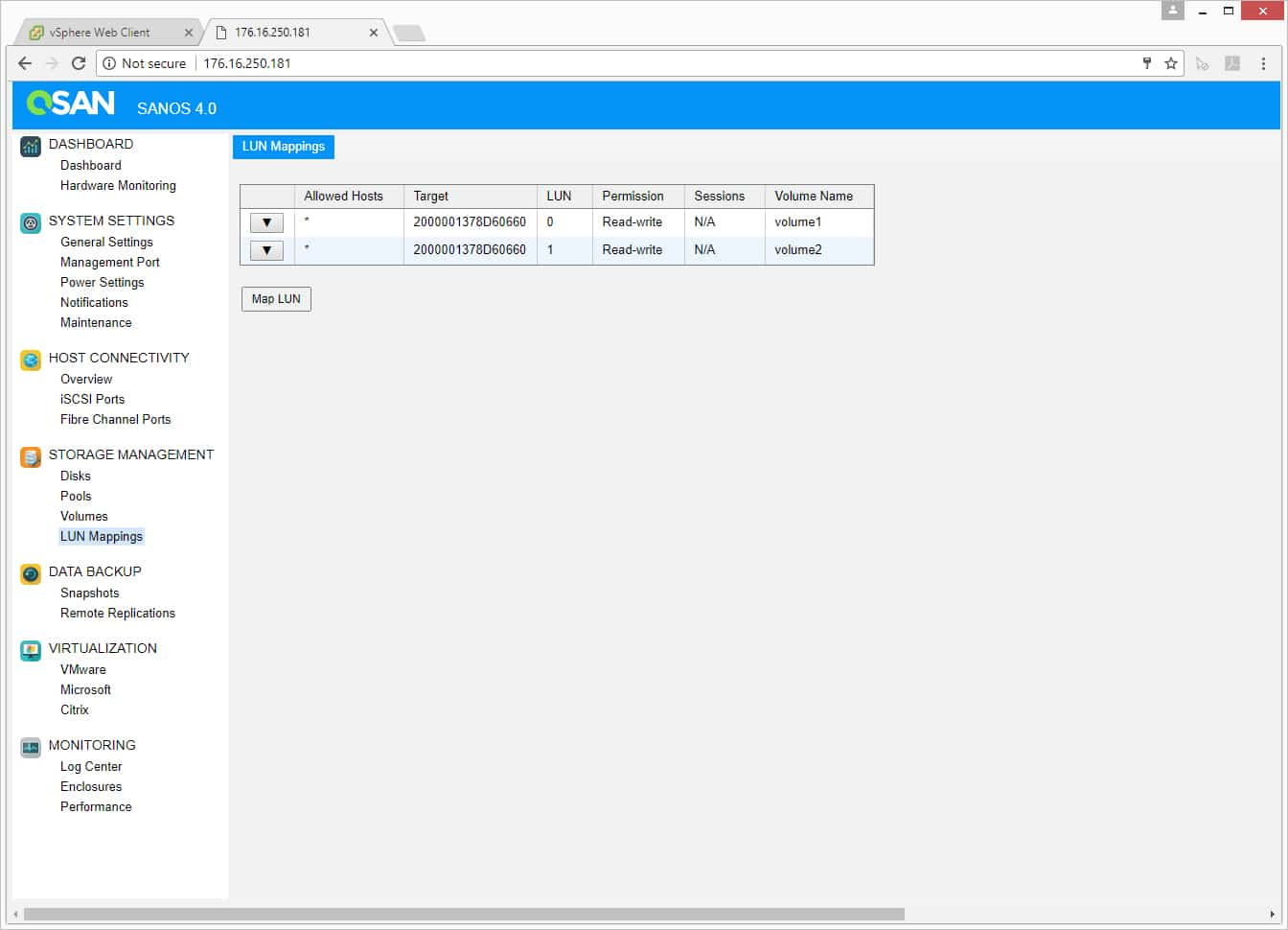

The final sub-menu is LUN Mappings. Through this screen users can map LUNs and see information such as Allowed Hosts, target, LUN, permission, sessions, and volume name.

Application Workload Analysis

The application workload benchmarks for the QSAN XCubeSAN XS1200 consist of MySQL OLTP performance via SysBench and Microsoft SQL Server OLTP performance with a simulated TPC-C workload. In each scenario, the array was populated with 26 Toshiba PX04SV SAS 3.0 SSDs, configured as two 12-drive RAID10 disk groups, one pinned to each controller, which left 2 SSDs as spares. We then created two 5TB volumes, one per disk group. In our testing environment, this created a balanced load for our SQL Server and Sysbench workloads.
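Each 12-drive RAID10 group mirrors pairs of drives, so half of its raw capacity is usable. With the 960GB PX04SV drives used in this review, that is 5,760GB (about 5.24TiB) per group, enough for a 5TB volume whether "TB" is read as decimal or binary. The sketch ignores any metadata overhead SANOS may reserve, which is an assumption; the review does not report usable-capacity figures.

```python
# Sketch: usable capacity of one RAID10 disk group, ignoring array metadata overhead.
DRIVE_GB = 960          # Toshiba PX04SV capacity used in this review (decimal GB)
DRIVES_PER_GROUP = 12   # drives in each RAID10 disk group
VOLUME_TB = 5           # one 5TB volume was created per disk group


def raid10_usable_gb(drives: int, drive_gb: float) -> float:
    """RAID10 stripes across mirrored pairs, so half of the raw capacity is usable."""
    if drives < 4 or drives % 2:
        raise ValueError("RAID10 needs an even number of drives, at least 4")
    return drives / 2 * drive_gb


usable_gb = raid10_usable_gb(DRIVES_PER_GROUP, DRIVE_GB)
usable_tib = usable_gb * 1e9 / 2**40
print(f"{usable_gb:.0f} GB ({usable_tib:.2f} TiB) usable per disk group")
print("5TB volume fits:", usable_gb >= VOLUME_TB * 1000)
```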

SQL Server Performance

Each SQL Server VM is configured with two vDisks: a 100GB volume for boot and a 500GB volume for the database and log files. From a system-resource perspective, we configured each VM with 16 vCPUs and 64GB of DRAM, and used the LSI Logic SAS SCSI controller. While the Sysbench workloads we tested previously saturated the platform in both storage I/O and capacity, the SQL test focuses on latency performance.

This test uses SQL Server 2014 running on Windows Server 2012 R2 guest VMs, and is stressed by Quest’s Benchmark Factory for Databases. While our traditional usage of this benchmark has been to test large 3,000-scale databases on local or shared storage, in this iteration we focus on spreading out four 1,500-scale databases evenly across the QSAN XS1200 (two VMs per controller).

SQL Server Testing Configuration (per VM)

- Windows Server 2012 R2

- Storage Footprint: 600GB allocated, 500GB used

- SQL Server 2014

- Database Size: 1,500 scale

- Virtual Client Load: 15,000

- RAM Buffer: 48GB

- Test Length: 3 hours

- 2.5 hours preconditioning

- 30 minutes sample period

SQL Server OLTP Benchmark Factory LoadGen Equipment

- Dell EMC PowerEdge R740xd Virtualized SQL 4-node Cluster

- 8 Intel Xeon Gold 6130 CPUs for 269GHz in cluster (two per node, 2.1GHz, 16 cores, 22MB cache)

- 1TB RAM (256GB per node, 16GB x 16 DDR4, 128GB per CPU)

- 4 x Emulex 16Gb dual-port FC HBA

- 4 x Mellanox ConnectX-4 rNDC 25GbE dual-port NIC

- VMware ESXi vSphere 6.5 / Enterprise Plus 8-CPU
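The cluster totals above follow from the per-node configuration: "269GHz" is the 2.1GHz base clock summed across every core (8 CPUs × 16 cores × 2.1GHz = 268.8GHz, rounded), and 1TB is four nodes of 16 × 16GB DIMMs. The sketch uses only those listed inputs and ignores turbo clocks.

```python
# Sketch: derive the quoted cluster totals from the per-node hardware list.
NODES = 4
CPUS_PER_NODE = 2
CORES_PER_CPU = 16
BASE_GHZ = 2.1                 # Xeon Gold 6130 base clock
DIMMS_PER_NODE, DIMM_GB = 16, 16

total_cpus = NODES * CPUS_PER_NODE                       # 8 CPUs
aggregate_ghz = total_cpus * CORES_PER_CPU * BASE_GHZ     # 268.8, quoted as 269GHz
ram_per_node_gb = DIMMS_PER_NODE * DIMM_GB                # 256GB per node
ram_per_cpu_gb = ram_per_node_gb // CPUS_PER_NODE         # 128GB per CPU
cluster_ram_gb = NODES * ram_per_node_gb                  # 1,024GB = 1TB

print(total_cpus, round(aggregate_ghz), ram_per_node_gb, ram_per_cpu_gb, cluster_ram_gb)
```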

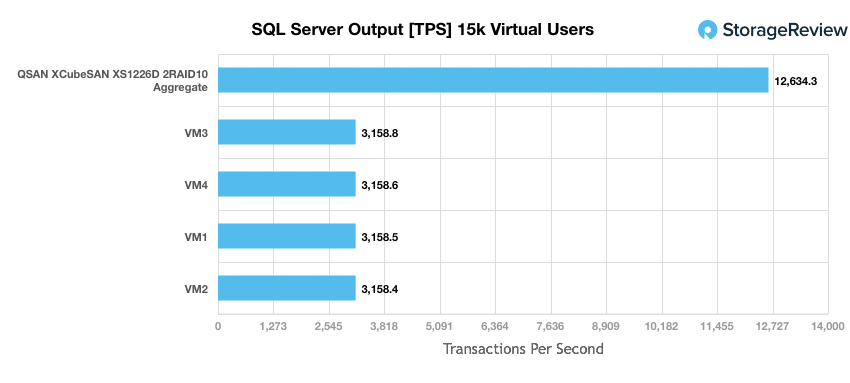

We measured the performance of an SQL Server configuration that used 24 SSDs in RAID10. Individual VM performance was virtually identical, ranging from 3,158.4 to 3,158.8 TPS, for an aggregate of 12,634.305 TPS.

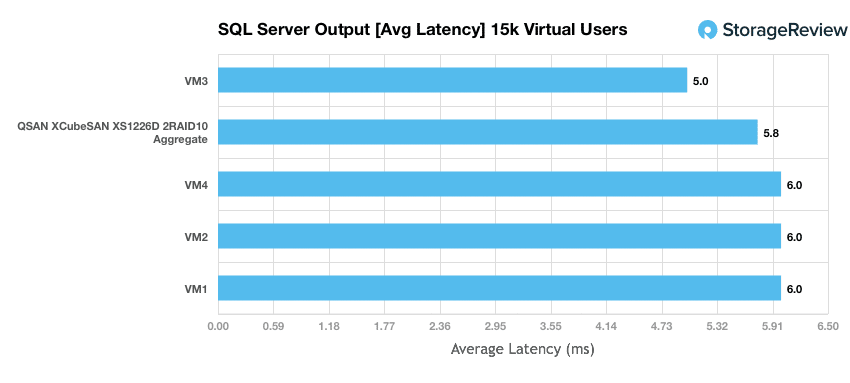

For average latency, the XCubeSAN XS1200 recorded between 5ms and 6ms on the individual VMs, with an aggregate of 5.8ms.
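Because the four VMs ran identical workloads, the aggregate is effectively four times the per-VM rate. The quick check below divides the reported aggregate by four and confirms that the mean falls inside the reported per-VM range; it uses only numbers quoted above.

```python
# Sketch: sanity-check the aggregate SQL Server TPS against the per-VM range.
AGGREGATE_TPS = 12_634.305
VMS = 4
REPORTED_RANGE = (3_158.4, 3_158.8)  # lowest and highest per-VM TPS

per_vm_mean = AGGREGATE_TPS / VMS
print(round(per_vm_mean, 2))  # 3158.58
assert REPORTED_RANGE[0] <= per_vm_mean <= REPORTED_RANGE[1]
```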

Sysbench Performance

Each Sysbench VM is configured with three vDisks, one for boot (~92GB), one with the pre-built database (~447GB), and the third for the database under test (270GB). From a system-resource perspective, we configured each VM with 16 vCPUs, 60GB of DRAM and leveraged the LSI Logic SAS SCSI controller. Load gen systems are Dell R740xd servers.

Dell PowerEdge R740xd Virtualized MySQL 4 node Cluster

- 8 Intel Xeon Gold 6130 CPUs for 269GHz in cluster (two per node, 2.1GHz, 16 cores, 22MB cache)

- 1TB RAM (256GB per node, 16GB x 16 DDR4, 128GB per CPU)

- 4 x Emulex 16Gb dual-port FC HBA

- 4 x Mellanox ConnectX-4 rNDC 25GbE dual-port NIC

- VMware ESXi vSphere 6.5 / Enterprise Plus 8-CPU

Sysbench Testing Configuration (per VM)

- CentOS 6.3 64-bit

- Storage Footprint: 1TB, 800GB used

- Percona XtraDB 5.5.30-rel30.1

- Database Tables: 100

- Database Size: 10,000,000

- Database Threads: 32

- RAM Buffer: 24GB

- Test Length: 3 hours

- 2 hours preconditioning 32 threads

- 1 hour 32 threads

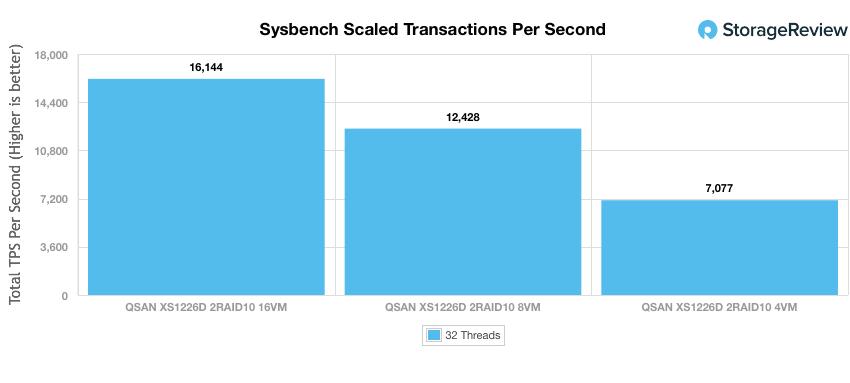

In our Sysbench benchmark, we tested configurations of 4, 8, and 16 VMs. Unlike with SQL Server, here we only looked at raw performance. In transactional performance, the XS1200 posted solid results, starting at 7,076.82 TPS with 4VM and rising to 16,143.94 TPS at 16VM.

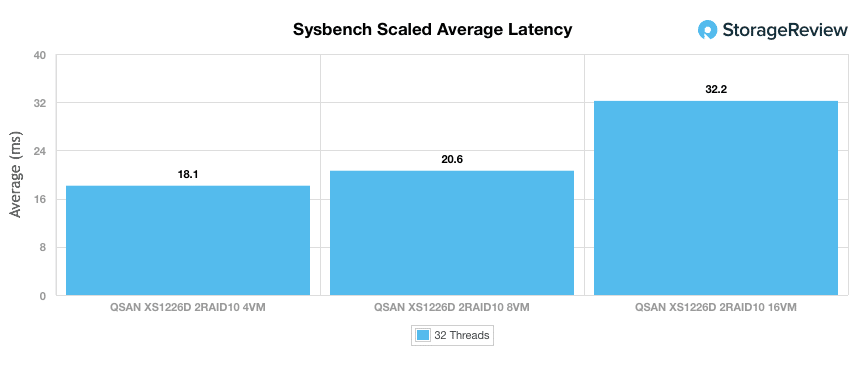

For average latency, the XS1200 recorded 18.14ms at 4VM and rose to just 20.63ms when the VM count doubled to 8. Doubling the VMs again raised latency to only 32.22ms.
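Because each Sysbench VM keeps 32 threads busy, Little's Law (requests in flight = throughput × latency) predicts average latency from TPS alone. Treating each thread as one outstanding transaction, which is an assumption that fits a closed-loop benchmark with no think time, gives about 18.1ms at 4VM and 31.7ms at 16VM, close to the measured 18.14ms and 32.22ms. In other words, latency rises because throughput grew about 2.3x while the thread count grew 4x. This is a consistency check on the published numbers, not a model of the array.

```python
# Sketch: predict Sysbench average latency from TPS using Little's Law.
# Assumption: each Sysbench thread has exactly one transaction outstanding.
THREADS_PER_VM = 32  # "Database Threads: 32" from the test configuration

# (VM count, measured TPS, measured average latency in ms) from this review
RESULTS = [(4, 7_076.82, 18.14), (16, 16_143.94, 32.22)]


def littles_law_latency_ms(vms: int, tps: float, threads: int = THREADS_PER_VM) -> float:
    """Latency = concurrency / throughput, converted from seconds to milliseconds."""
    in_flight = vms * threads
    return in_flight / tps * 1000.0


for vms, tps, measured in RESULTS:
    predicted = littles_law_latency_ms(vms, tps)
    print(f"{vms} VMs: predicted {predicted:.2f}ms, measured {measured}ms")
```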

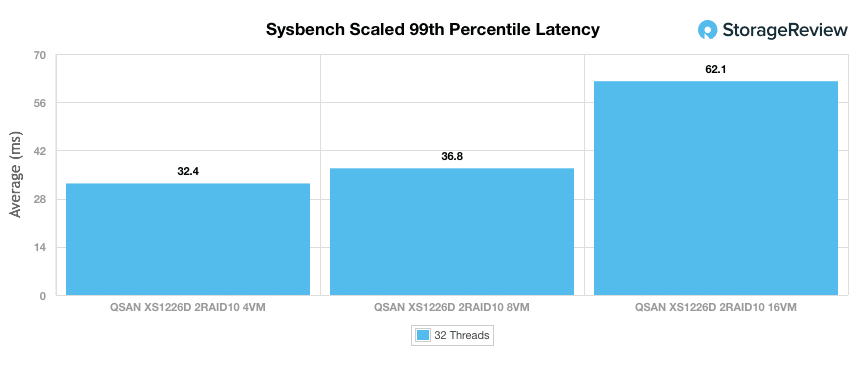

In our worst-case scenario latency benchmark, the XS1200 again showed very consistent results with a 99th percentile latency of 32.40ms at 4VM and topping out at 62.1ms latency when testing with 16VMs.

VDBench Workload Analysis

When it comes to benchmarking storage arrays, application testing is best, and synthetic testing comes in second place. While not a perfect representation of actual workloads, synthetic tests help baseline storage devices with a repeatability factor that makes it easy to do apples-to-apples comparisons between competing solutions. These workloads offer a range of testing profiles, from “four corners” tests and common database transfer size tests to trace captures from different VDI environments. All of these tests leverage the common vdBench workload generator, with a scripting engine to automate and capture results over a large compute testing cluster. This allows us to repeat the same workloads across a wide range of storage devices, including flash arrays and individual storage devices. To drive the array, we use our cluster of Dell PowerEdge R740xd servers:

Profiles:

- 4K Random Read: 100% Read, 128 threads, 0-120% iorate

- 4K Random Write: 100% Write, 64 threads, 0-120% iorate

- 64K Sequential Read: 100% Read, 16 threads, 0-120% iorate

- 64K Sequential Write: 100% Write, 8 threads, 0-120% iorate

- Synthetic Database: SQL and Oracle

- VDI Full Clone and Linked Clone Traces

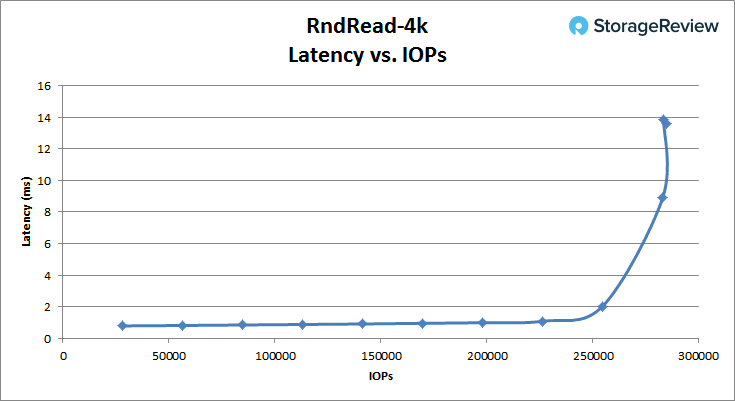

The XS1200 performed very well in our first synthetic profile, which looks at 4K random read performance. The unit maintained sub-1ms latency until roughly 198,000 IOPS and offered a peak throughput of 284,000 IOPS with an average latency of 13.82ms.

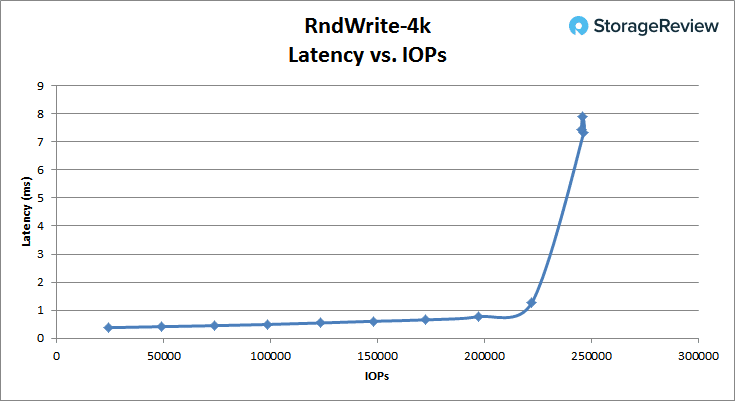

Looking at 4K random write performance, the XS1200 showed impressively low latency, starting at 0.38ms and staying under 1ms until roughly 222,000 IOPS. It peaked at over 246,000 IOPS with a latency of 7.9ms.

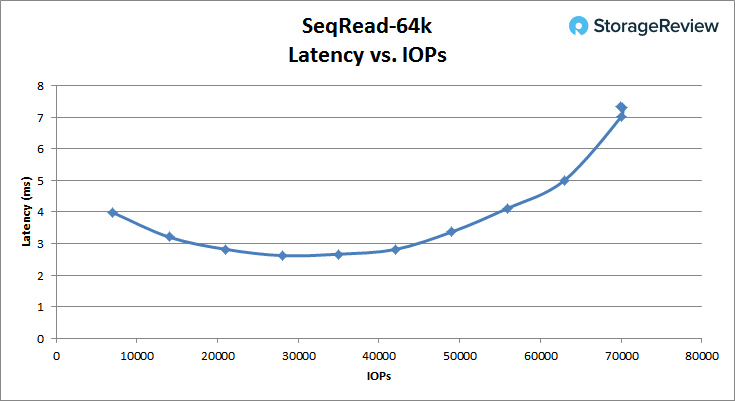

Switching to 64K sequential read, the XS1200 began the test at 3.98ms and dipped as low as 2.62ms at roughly 28,000 IOPS. It peaked at 70,000 IOPS with a latency of 7.29ms and a bandwidth of 4.37GB/s.

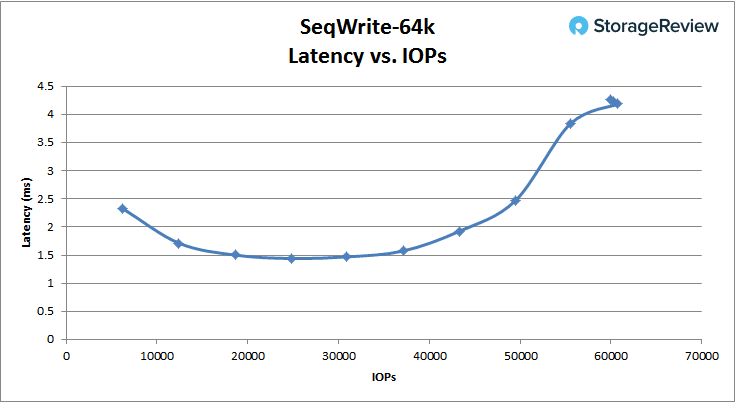

For 64K sequential write, the XS1200 started at 2.32ms latency, reaching its lowest latency of 1.44ms at 24,800 IOPS. The array peaked at 60,800 IOPS at 4.2ms latency and 3.80GB/s bandwidth.
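For a fixed transfer size, bandwidth is simply IOPS × transfer size. The published 64K figures line up exactly if bandwidth is computed in binary megabytes (1 MiB = 1,048,576 bytes) and 1,000 MiB/s is written as 1GB/s: 60,800 IOPS × 64KiB is 3,800 MiB/s, and 4,370 MiB/s corresponds to 69,920 IOPS, the "70,000 IOPS" read peak. That unit convention is inferred from the numbers, not stated in the review.

```python
# Sketch: convert between IOPS and bandwidth for the 64K sequential profiles.
# Assumption: reported "GB/s" means thousands of MiB/s (inferred from the figures).
TRANSFER_KIB = 64  # transfer size of the 64K sequential profiles


def mib_per_s(iops: float, transfer_kib: int = TRANSFER_KIB) -> float:
    """Bandwidth in MiB/s for a fixed transfer size."""
    return iops * transfer_kib / 1024


def iops_for_mib_per_s(bandwidth_mib: float, transfer_kib: int = TRANSFER_KIB) -> float:
    """Inverse of mib_per_s: the IOPS implied by a bandwidth figure."""
    return bandwidth_mib * 1024 / transfer_kib


print(mib_per_s(60_800))          # 3800.0 -> reported as 3.80GB/s
print(iops_for_mib_per_s(4_370))  # 69920.0 -> reported as ~70,000 IOPS
```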

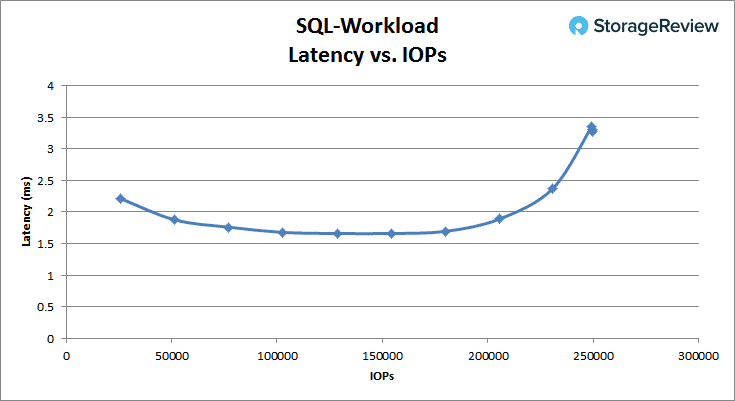

In our SQL workload, the XS1200 started at 2.21ms with its lowest latency reaching 1.66ms at just over 154,000 IOPS. It peaked at 249,000 IOPS at 3.35ms latency.

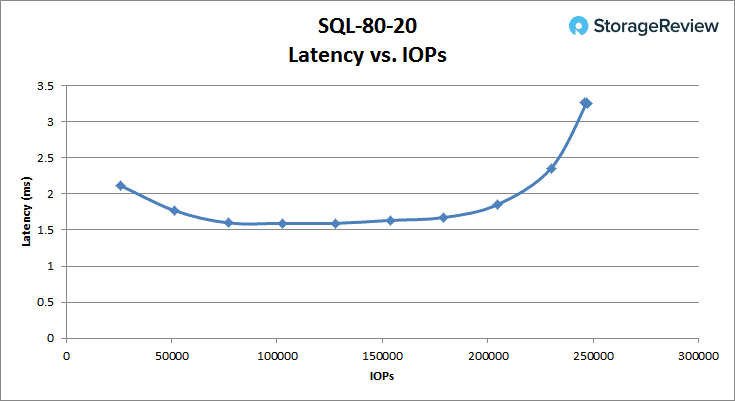

The SQL 80-20 benchmark started at 2.12ms and recorded its best latency of 1.593ms between 100,000 and 128,000 IOPS. It peaked at 247,000 IOPS at 3.26ms latency.

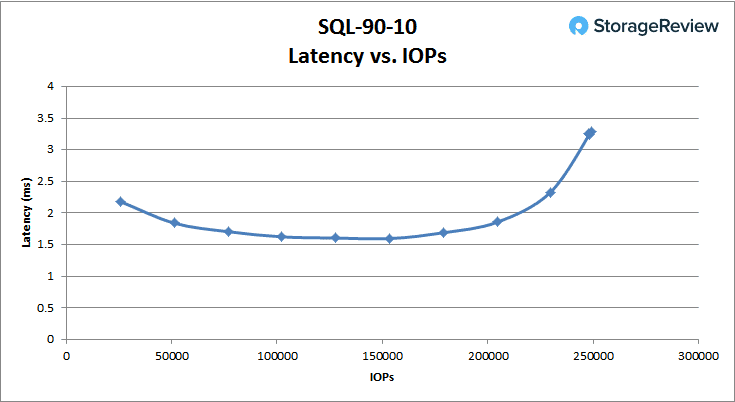

In the SQL 90-10 benchmark, the XS1200 started at 2.18ms and recorded its lowest latency at 1.6ms around the 154,000 IOPS mark. It peaked at 249,000 IOPS at 3.29ms latency.

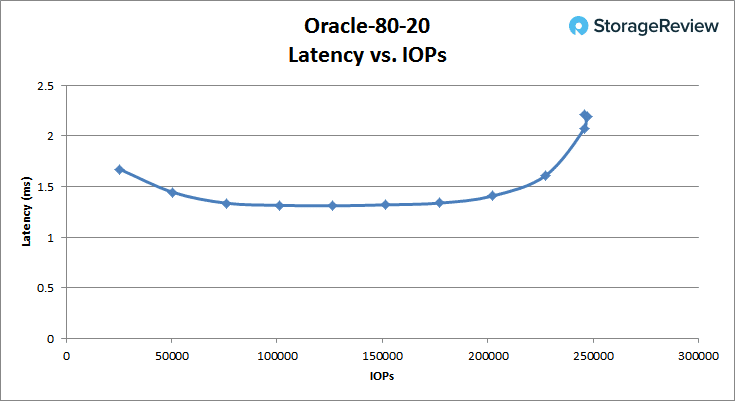

With the Oracle Workload, the XS1200 started at 1.67ms while its lowest latency was recorded at 126,000 IOPS with 1.31ms. It peaked at 246,186 IOPS with a latency of 2.21ms.

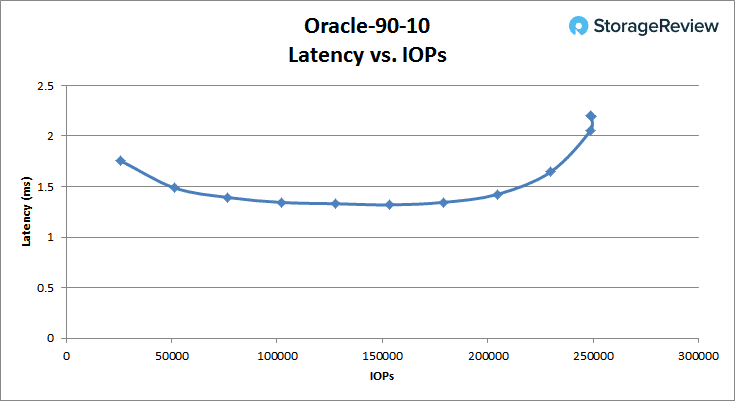

With the Oracle 90-10, the XS1200 started at 1.76ms while recording its lowest latency at 1.32ms during the 153,427 IOPS mark. It peaked at 248,759 IOPS at 2.2ms latency.

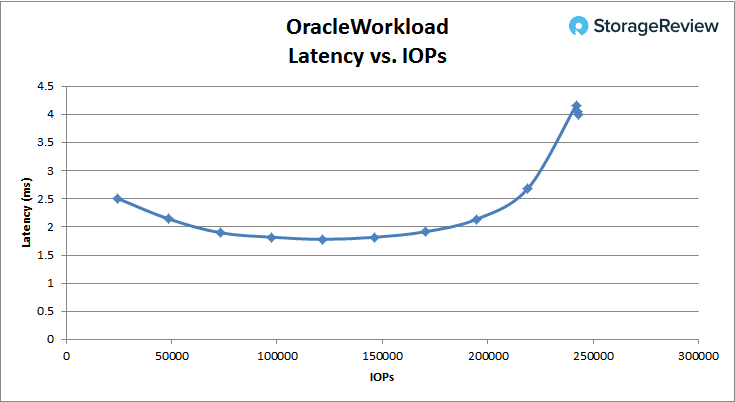

With the Oracle 80-20, the XS1200 started at 2.5ms and managed to dip to 1.78ms at 121,600 IOPS. The array peaked at 242,000 IOPS with a latency of 4.16ms.

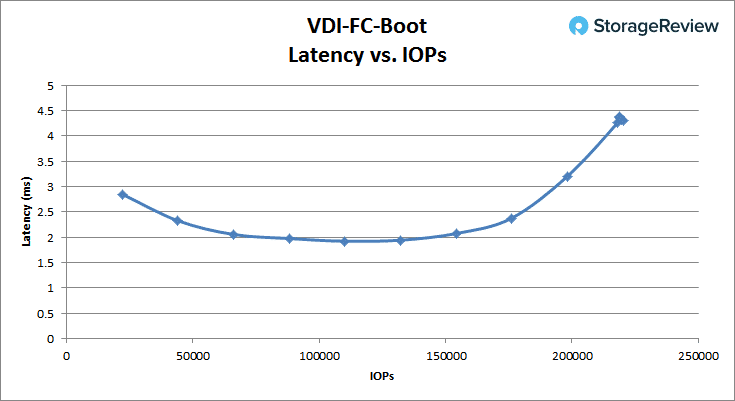

Switching over to VDI Full Clone, the boot test showed the XS1200 starting at a latency of 2.85ms and reaching a low of 1.92ms at around 110,190 IOPS. It peaked at 218,000 IOPS with a latency of 4.26ms.

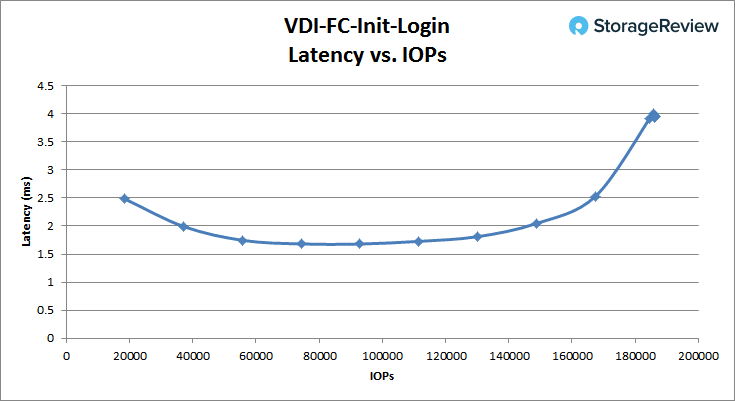

The VDI Full Clone initial login started at 2.48ms and dipped to a low of 1.68ms at 74,370 IOPS. It peaked at 185,787 IOPS at 3.91ms latency.

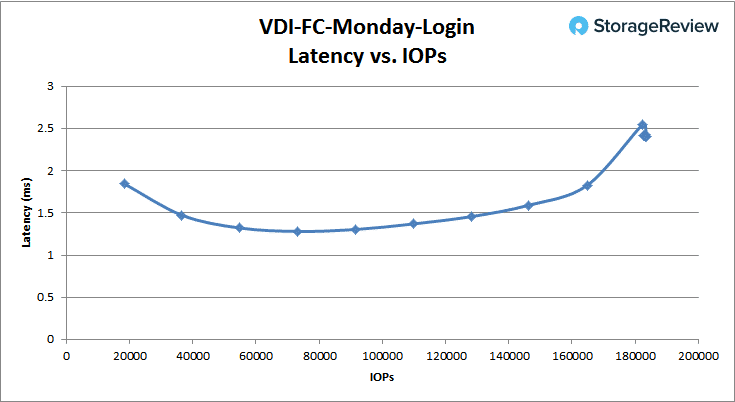

The VDI Full Clone Monday Login started off at 1.85ms and went as low as 1.28ms at around 73,000 IOPS. It peaked at 182,376 IOPS at 2.55ms latency.

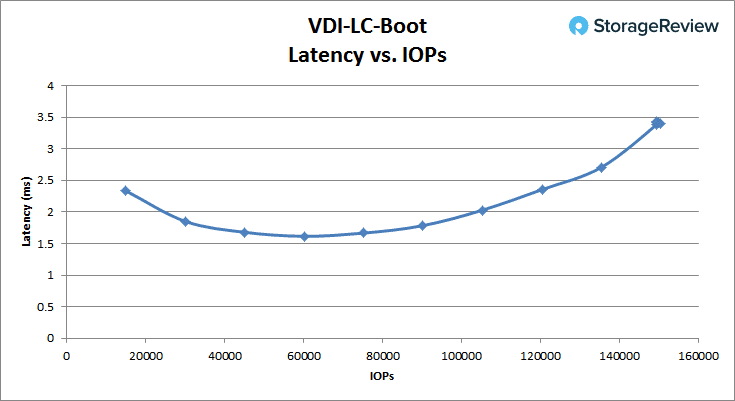

Switching over to VDI Linked Clone, the boot test showed the XS1200 starting at a latency of 2.33ms, and its lowest latency of 1.62ms at 60,200 IOPS. It peaked at 149,488 IOPS with a latency of 3.39ms.

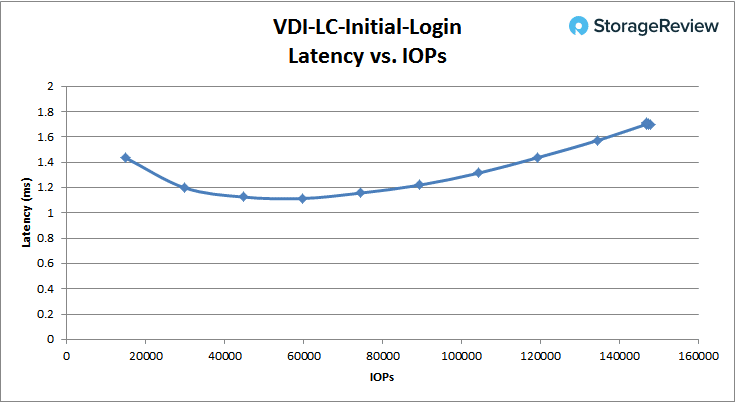

The VDI Linked Clone initial login started at 1.143ms and reached its lowest latency of 1.11ms at 59,689 IOPS. It peaked at 147,423 IOPS at 1.71ms latency.

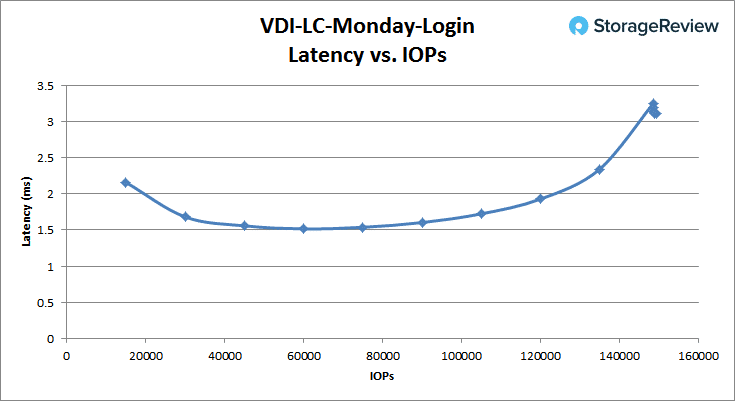

The VDI Linked Clone Monday Login started at 2.16ms and reached its lowest latency of 1.52ms at 60,000 IOPS. It peaked at 248,514 IOPS at 3.24ms latency.

Conclusion

The QSAN XCubeSAN XS1200 Series is a family of dual-controller SANs aimed at smaller businesses and remote/branch offices. The series offers a wide variety of form factors depending on the total capacity needed. Each controller is powered by an Intel D1500 dual-core CPU and 4GB of DDR4 memory, and the arrays support both iSCSI and Fibre Channel connectivity. For this review, we looked at the XS1226D dual-controller SAN with 26 Toshiba PX04SV 960GB SAS 3.0 SSDs.

In our SQL Server transactional benchmark, the XCubeSAN XS1200 posted an impressive aggregate score of 12,634.305 TPS with an aggregate average latency of only 5.8ms. With these numbers, it is certainly one of the fastest SQL Server storage arrays we have seen so far. Sysbench results were solid as well, with 7,076.82 TPS at 4VM and 16,143.94 TPS at 16VM. The XS1200 continued its strong showing in average latency, posting 18.14ms at 4VM and just 20.63ms at 8VM, and rising to only 32.22ms when the VM count doubled again. The trend held in our worst-case scenario results, with a 99th percentile latency of 32.40ms at 4VM, topping out at 62.1ms with 16VMs.

The results of our VDBench tests told a similar story, although average latency climbed higher than on other flash arrays we’ve tested. In 4K random read, the XS1200 recorded sub-1ms latency up to 198,000 IOPS and a peak throughput of 284,000 IOPS at 13.82ms average latency. In 64K sequential read, the XS1200 started at 3.98ms and went as low as 2.62ms at the 28,000 IOPS mark, with throughput peaking at around 70,000 IOPS, 7.29ms latency, and 4.37GB/s bandwidth. We also put the XS1200 through three SQL workloads: 100% read, 90% read/10% write, and 80% read/20% write. Here, the XS1200 peaked at 249,000 IOPS, 249,000 IOPS, and 247,000 IOPS, respectively, all with latency just over 3ms. The same three tests run with an Oracle workload peaked at 246,186 IOPS, 248,759 IOPS, and 242,000 IOPS, at latencies of 2.21ms, 2.2ms, and 4.16ms, respectively. Lastly, we ran VDI Full Clone and Linked Clone benchmarks for Boot, Initial Login, and Monday Login. The XS1200 peaked at 218,000 IOPS, 185,787 IOPS, and 182,376 IOPS in Full Clone, and 149,488 IOPS, 147,423 IOPS, and 248,514 IOPS in Linked Clone.

Overall, the QSAN XCubeSAN XS1200 has a lot of capabilities that should help it make a name for itself in the market. At entry-level midmarket pricing, it outpaced many of the systems we’ve tested in much higher price brackets. That said, there are areas where those more expensive models show their strengths. The UI is a big one: the QSAN system is functional but lacks the fit and finish many other systems provide. Feature set is another, as other systems maintain similar performance levels with full inline data services such as compression and dedupe activated. At the end of the day, though, customers looking for a great performance/budget ratio who don’t mind some compromises in other areas will be enticed by the XCubeSAN XS1200.

Bottom Line

The QSAN XCubeSAN XS1226D offers a compelling blend of feature set, performance and pricing, making it a very good storage solution for SMB/ROBO situations that want it all, while remaining as cost effective as possible.
