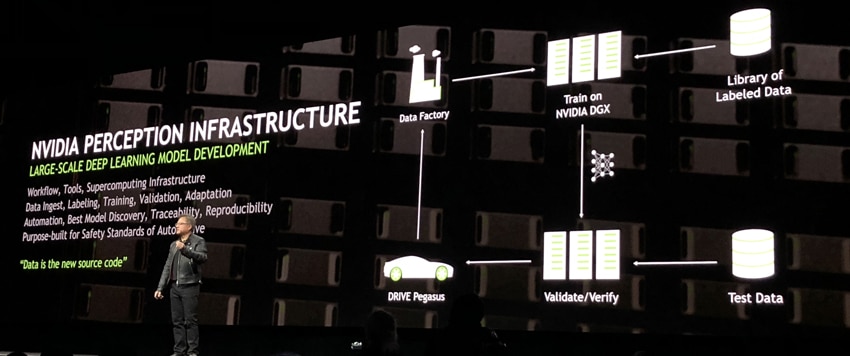

Today at the GPU Technology Conference 2018 in Silicon Valley, NVIDIA made several announcements around deep learning. These include a partnership with Arm to bring deep learning inferencing to billions of IoT devices. NVIDIA also announced deep learning performance advancements that it says deliver 10x the performance of six months ago.

The partnership integrates the open-source NVIDIA Deep Learning Accelerator (NVDLA) architecture into Arm’s Project Trillium platform for machine learning. According to the companies, this will allow IoT chipmakers to integrate AI into their designs, putting AI into billions of IoT devices. The partnership will also bring higher levels of performance to deep learning developers as they take advantage of Arm’s flexibility and scalability.

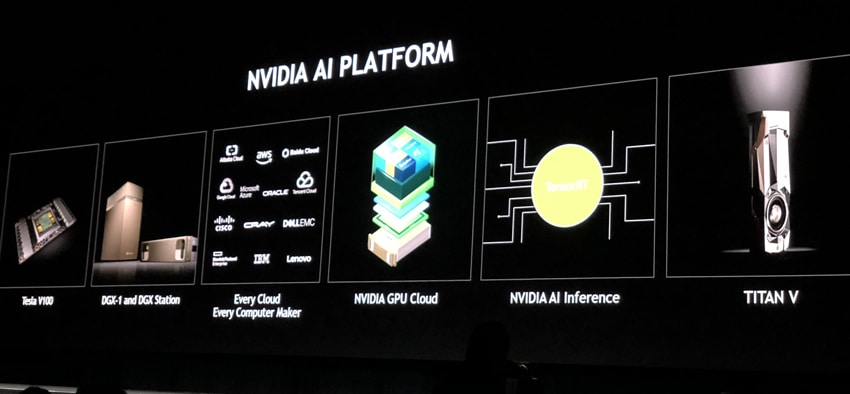

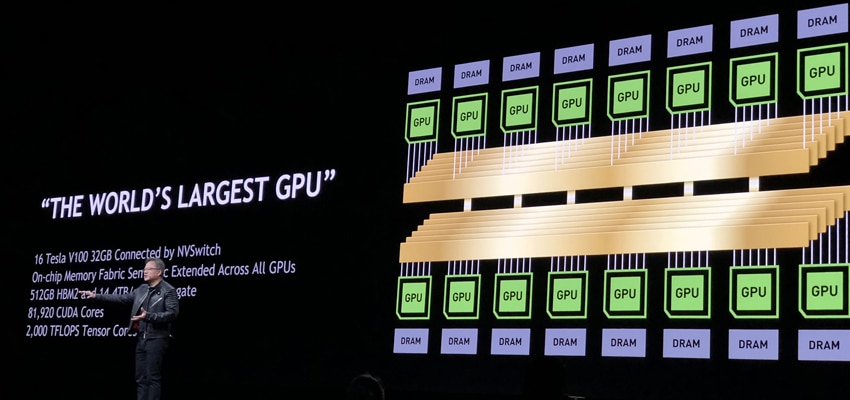

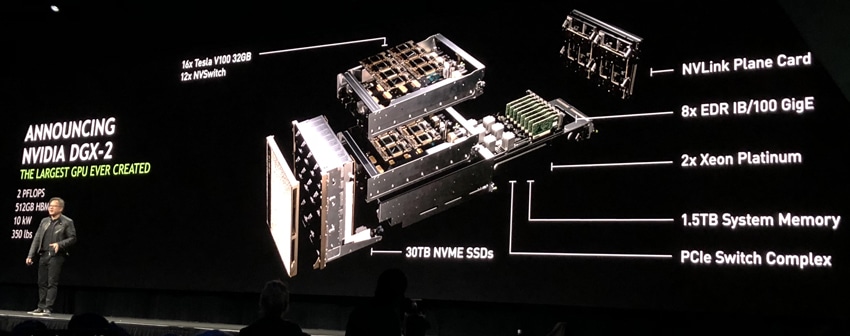

NVIDIA also announced performance enhancements for cloud service providers and server makers. These include a 2x memory boost for the NVIDIA Tesla V100 (now up to 32GB of memory per GPU) and a new GPU interconnect fabric, NVSwitch, which enables up to 16 Tesla GPUs to communicate at a speed of 2.4TB/s. The company also released a single server, the NVIDIA DGX-2, which can deliver two petaflops of computational power, the equivalent of 300 servers occupying 15 racks of space.

As stated above, the Tesla V100 now has 32GB of memory instead of 16GB, along with all the benefits that come with more memory. Beyond that, several computer manufacturers will be offering the upgraded GPU, and Oracle Cloud Infrastructure will offer the new Tesla V100s in the cloud as well.

The new NVSwitch interconnect fabric is designed to overcome the limitations posed by larger datasets. NVIDIA states that it offers 5x higher bandwidth than the best PCIe switch. NVSwitch builds on the innovations of NVIDIA NVLink and extends them further, enabling even more advanced and complex systems.

As noted above, the DGX-2 can deliver two petaflops, which NVIDIA describes as a world’s first. The system uses NVSwitch to link all 16 GPUs in a unified memory space to reach this milestone. The DGX-2 ships with a suite of NVIDIA deep learning software, making it well suited to data scientists working at the cutting edge of deep learning research. The company states that the DGX-2 can train FAIRSeq (a state-of-the-art neural machine translation model) in under two days, a 10x improvement over the DGX-1.

Sign up for the StorageReview newsletter