NVIDIA’s integrated solutions reduce complexity, enhance operational efficiency, and enable the rapid deployment of next-generation AI systems.

At GTC Paris, NVIDIA delivered a sweeping set of announcements that reinforce its leadership in AI-driven infrastructure, digital twins, robotics, and autonomous vehicles. The event’s central theme—Physical AI—underscored NVIDIA’s commitment to bridging the digital and physical worlds, empowering partners and customers to build smarter, safer, and more sustainable systems. For technical sales professionals, these innovations represent a robust portfolio of solutions that address the most pressing challenges in urban development, manufacturing, and mobility.

Omniverse Blueprint for Smart City AI: Enabling Sustainable Urban Futures

NVIDIA introduced the Omniverse Blueprint for smart city AI, a reference framework that integrates Omniverse, Cosmos, NeMo, and Metropolis platforms. This blueprint is designed to help cities and their partners create simulation-ready, photorealistic digital twins, enabling the development, testing, and deployment of AI agents that optimize city operations and infrastructure.

With urban populations projected to double by 2050, cities face mounting pressure to deliver sustainable services and efficient public planning. The Omniverse Blueprint addresses the complexity of building and managing city-scale digital twins by providing a unified software stack in which each platform has a distinct role: Omniverse powers physically accurate simulations, Cosmos generates synthetic data for AI model training, NeMo curates and refines large language and vision-language models, and Metropolis deploys video analytics AI agents that provide real-time insights.

The workflow is streamlined: developers create SimReady digital twins using aerial or map data, train and fine-tune AI models with TAO and NeMo Curator, and deploy real-time AI agents for monitoring and operational intelligence. Early adopters—including SNCF Gares&Connexions, K2K, Milestone Systems, and Bentley—are already leveraging the blueprint to achieve measurable improvements in maintenance, energy efficiency, and public safety.

For example, SNCF Gares&Connexions has implemented digital twins and AI agents across its network of 3,000 train stations, achieving a 100% on-time preventive maintenance rate, halving downtime and response times, and reducing energy consumption by 20%. In Sicily, K2K’s AI agents, built with NVIDIA’s AI Blueprint and Nebius cloud, provide real-time public safety alerts. Milestone Systems’ Project Hafnia is creating an anonymized, regulatory-compliant video data platform for AI model development, while Linker Vision and AVES Reality are bringing 3D city geometry into SimReady Omniverse twins.

DiffusionRenderer and Cosmos Predict

NVIDIA Research continues to push the boundaries of AI-powered rendering with DiffusionRenderer, a neural rendering technique that merges inverse and forward rendering into a single, high-performance engine. This technology enables content creators and physical AI developers to edit lighting in 2D videos, generate synthetic data for AV and robotics training, and create photorealistic scenes.

DiffusionRenderer can de-light and relight scenes using only 2D video data, estimating complex properties like normals and material roughness without requiring 3D geometry. This allows for the creation of new shadows, reflections, and lighting conditions, enhancing the diversity and quality of training datasets for autonomous systems.
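To make the de-light and relight idea concrete, here is a minimal sketch of why per-pixel surface properties are useful. It is not DiffusionRenderer's method, which is a neural technique. It uses classical Lambertian (diffuse) shading and assumes that an inverse-rendering step has already produced a per-pixel base color (albedo) and surface normal. The function names and the albedo input are illustrative assumptions, and roughness, shadows, and reflections are ignored.

```python
import math

def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    if length == 0:
        raise ValueError("zero-length vector")
    return tuple(c / length for c in v)

def relight_pixel(albedo, normal, light_dir, light_color=(1.0, 1.0, 1.0)):
    """Shade one pixel under a new directional light (Lambertian model).

    albedo    -- assumed de-lit base color (r, g, b), each in [0, 1]
    normal    -- assumed estimated surface normal (x, y, z)
    light_dir -- direction from the surface toward the new light
    """
    n = normalize(normal)
    l = normalize(light_dir)
    # Surfaces facing away from the light receive no direct light.
    n_dot_l = max(0.0, sum(a * b for a, b in zip(n, l)))
    return tuple(a * c * n_dot_l for a, c in zip(albedo, light_color))

def relight_image(albedo_map, normal_map, light_dir):
    """Apply relight_pixel to every pixel of two same-sized 2D maps."""
    return [
        [relight_pixel(a, n, light_dir) for a, n in zip(albedo_row, normal_row)]
        for albedo_row, normal_row in zip(albedo_map, normal_map)
    ]
```

Calling `relight_image` repeatedly with different `light_dir` values yields several lighting variants of the same frame, which is the kind of dataset diversity the paragraph above describes.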

By integrating DiffusionRenderer with Cosmos Predict-1, NVIDIA has further improved the quality and consistency of synthetic data generation. Cosmos Predict, part of the broader Cosmos platform, provides world foundation models and accelerated data pipelines for physical AI development. These advancements are critical for industries that rely on high-fidelity simulation and data augmentation, such as automotive, robotics, and creative media.

FoundationStereo, Scene Flow, and Difix3D+

NVIDIA’s research teams continue to set the pace in computer vision and AI, with several papers recognized at CVPR. FoundationStereo reconstructs 3D information from 2D images, outperforming current methods and generalizing across domains. The Zero-Shot Monocular Scene Flow Estimation model, developed with Brown University, predicts 3D motion fields from single images. Difix3D+ eliminates artifacts in 3D scene reconstructions, improving the quality of digital twins and simulation environments.
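For readers unfamiliar with stereo reconstruction, the sketch below shows the classical pinhole relation that turns a per-pixel disparity (the horizontal shift of a point between the left and right images) into metric depth. It is background geometry, not FoundationStereo's internals. The function name and the sample camera values are hypothetical, and a rectified stereo pair is assumed.

```python
def disparity_to_depth(disparity_px, focal_length_px, baseline_m):
    """Convert stereo disparity to depth using Z = f * B / d.

    disparity_px    -- pixel shift of a point between left and right images
    focal_length_px -- camera focal length, in pixels
    baseline_m      -- distance between the two camera centers, in meters
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px

# Hypothetical rig: 1000 px focal length, 10 cm baseline.
depth_m = disparity_to_depth(50.0, 1000.0, 0.1)
```

With these sample values the point lies about 2 m from the camera. Because depth is inversely proportional to disparity, small disparity errors on distant objects translate into large depth errors, which is one reason accurate disparity estimation matters.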

NVIDIA also won the Autonomous Grand Challenge for the third consecutive year, underscoring the company’s leadership in end-to-end autonomous system development.

Accelerating Industrial Automation

As European manufacturers face labor shortages and sustainability demands, NVIDIA is enabling the next generation of safe, AI-driven robots. At GTC Paris, leading robotics companies—including Agile Robots, Extend Robotics, Universal Robots, and more—showcased breakthroughs powered by NVIDIA’s platforms.

NVIDIA Isaac GR00T N1.5, an open foundation model for humanoid robot reasoning, is now available for download, offering improved adaptability and instruction-following for material handling and manufacturing. The latest Isaac Sim and Isaac Lab frameworks, optimized for RTX PRO 6000, are available for developer preview, supporting advanced simulation and learning.

NVIDIA Halos, a comprehensive safety system, now extends to robotics, integrating hardware, AI models, and software to ensure functional safety throughout the development lifecycle. The Halos AI Systems Inspection Lab, accredited by the ANSI National Accreditation Board (ANAB), provides compliance and safety validation for robotics and automotive applications. New safety extension packages for the NVIDIA IGX platform and the Holoscan Sensor Bridge further simplify the integration of safety functions and sensor-to-compute architectures.

AV End-to-End AI, Safety, and Synthetic Data

NVIDIA DRIVE continues to power the global shift toward autonomous and highly automated vehicles. The full-stack AV software platform, already in production with leading automakers, enables safe, scalable, and efficient development of autonomous vehicles. NVIDIA’s modular approach allows customers to adopt the entire stack or specific components, supporting everything from ADAS to fully autonomous driving.

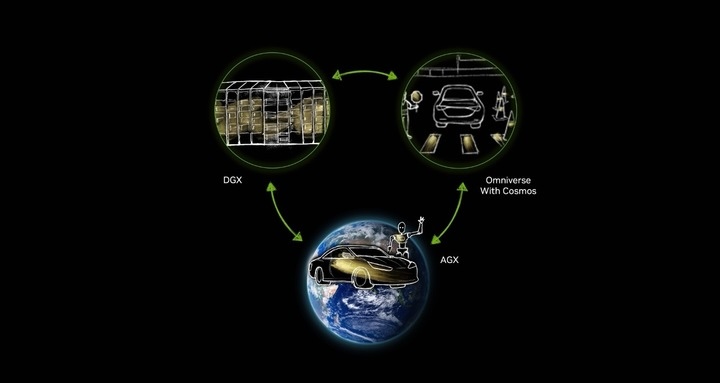

The three-computer solution—DGX systems for AI training, Omniverse and Cosmos for simulation and synthetic data, and DRIVE AGX for in-vehicle compute—provides a comprehensive pipeline for AV development. This architecture enables automakers to convert real-world driving data into billions of simulated miles, accelerating validation and continuous improvement.

NVIDIA’s end-to-end AV software leverages deep learning and foundation models to process sensor data and control vehicle actions, moving beyond traditional modular pipelines. The Omniverse Blueprint for AV simulation and Cosmos Predict-2 world foundation model further enhance the development of robust, human-like driving systems.

Cosmos Predict-2, with enhanced context understanding and video generation, accelerates the creation of synthetic data for AV training. Post-training on real-world driving data enables the generation of multi-view videos, which augment datasets and improve model performance in challenging conditions. Industry leaders such as Gatik, Oxa, Plus, and Uber are already leveraging Cosmos Predict to scale their AV development.

CARLA, the leading open-source AV simulator, now integrates Cosmos Transfer and NuRec APIs, enabling developers to generate high-fidelity synthetic scenes and simulate diverse driving scenarios. The NVIDIA Physical AI Dataset, with 40,000 Cosmos-generated clips, provides a rich resource for training and validation.

End-to-End Safety with NVIDIA Halos

Safety remains paramount in AV development. NVIDIA Halos, launched earlier this year, delivers a comprehensive safety platform that integrates hardware, software, and AI models to ensure safe development and deployment across the cloud-to-car spectrum. The DriveOS safety-certified operating system meets stringent automotive standards, while Halos provides guardrails throughout simulation, training, and deployment.

Bosch, Easyrain, Nuro, and other industry leaders have joined the Halos AI Systems Inspection Lab to validate the safe integration of their products with NVIDIA technologies. This collaborative approach ensures that AV systems meet the highest functional safety benchmarks, supporting the industry’s transition to AI-defined vehicles.

NVIDIA’s Blueprint for the Future of Physical AI

NVIDIA’s announcements at GTC Paris mark a pivotal moment for the convergence of AI, simulation, and real-world systems. By delivering unified platforms and reference blueprints for smart cities, robotics, and autonomous vehicles, NVIDIA is empowering partners and customers to accelerate innovation, enhance safety, and drive sustainable growth.
