Dell and CoreWeave deliver the first NVIDIA GB300 NVL72 system, setting a new benchmark in AI performance and scalability for enterprise workloads.

Dell Technologies, in close collaboration with CoreWeave, has announced the shipment of the industry’s first NVIDIA GB300 NVL72 system. This milestone follows Dell’s earlier achievement as the first vendor to ship the NVIDIA GB200, further cementing its leadership in AI infrastructure. CoreWeave, a leading AI Cloud Service Provider (CSP), becomes the first hyperscaler to deploy NVIDIA’s GB300 NVL72, a liquid-cooled, rack-scale AI system designed for the most demanding enterprise workloads.

This achievement is the result of a strong partnership between Dell, CoreWeave, and NVIDIA, building on the momentum of the Dell AI Factory with NVIDIA. The Dell AI Factory delivers comprehensive, secure AI solutions tailored to the evolving needs of modern enterprises, enabling organizations to accelerate their AI initiatives with confidence.

Unlocking Enterprise Potential with CoreWeave

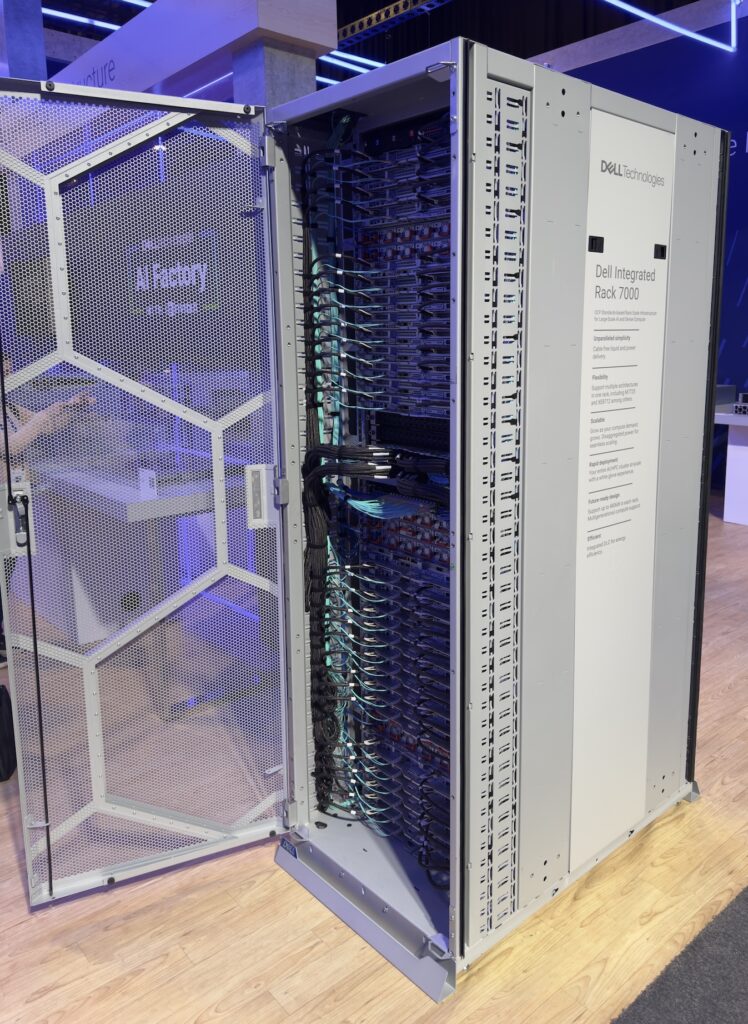

CoreWeave’s deployment of Dell Integrated Racks featuring the NVIDIA GB300 NVL72 marks a significant leap in the scalability and performance of cloud-based AI services. The solution leverages liquid-cooled Dell Integrated Rack Scalable Systems powered by PowerEdge XE9712 servers. Each rack is equipped with 72 NVIDIA Blackwell Ultra GPUs and 36 Arm-based NVIDIA Grace CPUs, providing a robust foundation for next-generation AI workloads.

The NVIDIA Blackwell Ultra GPUs at the heart of this system deliver 1.5 times more AI compute (FLOPS) than the previous Blackwell generation, enabling faster processing of complex models. The system also expands HBM3e capacity to up to 21TB of GPU memory per rack, 1.5 times more than the previous GB200 NVL72 generation. The added memory allows larger batch sizes and larger models, which is essential for maximizing throughput in AI reasoning and inference.
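To put the rack-level memory figure in per-GPU terms, the short Python sketch below divides the quoted 21TB across the 72 GPUs and backs out the capacity implied for a GB200 NVL72 rack from the "1.5 times" claim. It uses only the numbers in this article and assumes decimal units (1TB = 1,000GB); because "up to 21TB" is a rounded figure, the results are approximations, not official specifications.

```python
# Back-of-envelope arithmetic using only figures quoted in the article.
# Assumption: decimal units (1 TB = 1,000 GB); the 21TB rack total is rounded.
GPUS_PER_RACK = 72          # Blackwell Ultra GPUs per GB300 NVL72 rack
RACK_HBM_TB = 21.0          # "up to 21TB" of HBM3e per rack
GENERATIONAL_GAIN = 1.5     # "1.5 times more than" GB200 NVL72


def hbm_per_gpu_gb(rack_tb: float, gpus: int) -> float:
    """Approximate HBM3e capacity per GPU, in GB."""
    return rack_tb * 1000 / gpus


def implied_previous_rack_tb(rack_tb: float, gain: float) -> float:
    """Rack HBM capacity implied for the previous generation, in TB."""
    return rack_tb / gain


per_gpu = hbm_per_gpu_gb(RACK_HBM_TB, GPUS_PER_RACK)
prev = implied_previous_rack_tb(RACK_HBM_TB, GENERATIONAL_GAIN)

print(f"~{per_gpu:.0f} GB of HBM3e per GPU")
print(f"~{prev:.0f} TB implied for a GB200 NVL72 rack")
```

Running it prints roughly 292GB per GPU and about 14TB for the prior-generation rack, which is in line with the generational comparison above.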

Fifth-generation NVIDIA NVLink technology provides 130TB/s of aggregate bandwidth, linking all 72 GPUs in the rack so that every GPU can communicate directly with every other GPU at high speed. This level of interconnect is critical for large models whose weights and computation must be split across many GPUs.
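Dividing the aggregate NVLink figure by the number of GPUs gives the bandwidth available to each GPU. The sketch below does that arithmetic with the article's numbers only; the result, about 1.8TB/s, matches the per-GPU rate NVIDIA publishes for fifth-generation NVLink (a bidirectional figure), which suggests the 130TB/s total is a rounded 72 × 1.8TB/s.

```python
# Per-GPU NVLink bandwidth derived from the rack-level aggregate in the article.
# Assumption: the 130TB/s aggregate is split evenly across all 72 GPUs.
GPUS_PER_RACK = 72
NVLINK_AGGREGATE_TB_S = 130.0   # aggregate NVLink bandwidth quoted for the rack


def per_gpu_bandwidth_tb_s(aggregate_tb_s: float, gpus: int) -> float:
    """Even share of the aggregate NVLink bandwidth per GPU, in TB/s."""
    return aggregate_tb_s / gpus


per_gpu = per_gpu_bandwidth_tb_s(NVLINK_AGGREGATE_TB_S, GPUS_PER_RACK)
print(f"~{per_gpu:.1f} TB/s of NVLink bandwidth per GPU")
```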

Networking is further enhanced by next-generation InfiniBand, with NVIDIA Quantum-X800 InfiniBand switches and NVIDIA ConnectX-8 SuperNICs delivering 800 gigabits per second (Gb/s) of dedicated connectivity to each GPU. This architecture ensures best-in-class remote direct-memory access (RDMA), maximizing efficiency for large-scale AI workloads.
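Because the InfiniBand figure is quoted per GPU in gigabits, it is easy to misjudge the total. The sketch below converts 800Gb/s to gigabytes per second and multiplies it across the rack, assuming every one of the 72 GPUs has its own dedicated 800Gb/s link as described. Note that link speeds like this are normally stated per direction, while NVLink rates are usually quoted bidirectionally, so the two totals should not be compared directly.

```python
# Scale-out (InfiniBand) bandwidth per rack, from the per-GPU figure in the article.
# Assumption: one dedicated 800Gb/s link per GPU, all 72 GPUs connected.
GPUS_PER_RACK = 72
IB_PER_GPU_GBIT_S = 800   # ConnectX-8 SuperNIC / Quantum-X800 rate per GPU


def gbit_to_gbyte(gbit: float) -> float:
    """Convert gigabits to gigabytes (8 bits per byte)."""
    return gbit / 8


rack_ib_tbit_s = IB_PER_GPU_GBIT_S * GPUS_PER_RACK / 1000   # terabits per second
ib_per_gpu_gb_s = gbit_to_gbyte(IB_PER_GPU_GBIT_S)          # gigabytes per second
rack_ib_gb_s = ib_per_gpu_gb_s * GPUS_PER_RACK              # gigabytes per second

print(f"{ib_per_gpu_gb_s:.0f} GB/s per GPU")
print(f"{rack_ib_tbit_s:.1f} Tb/s ({rack_ib_gb_s:.0f} GB/s) per rack")
```

This works out to 100GB/s per GPU and 57.6Tb/s (7,200GB/s) of dedicated scale-out connectivity per rack.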

Security and multi-tenancy are addressed through the NVIDIA DOCA software framework, running on NVIDIA BlueField-3 DPUs. This combination accelerates AI workloads by providing line-speed tenant networking of up to 200Gb/s and high-performance GPU data access, supporting secure, multi-tenant cloud environments.

Designed for Frontier-Scale AI

The Dell Integrated Rack solution is designed for rapid deployment and seamless integration into production environments. According to Dell, the system can improve user responsiveness by up to 10 times and deliver five times the throughput per watt of the previous NVIDIA Hopper generation.

CoreWeave emphasizes that the system is purpose-built for frontier-scale AI, enabling users to train, optimize, and deploy multi-trillion-parameter models. It is specifically designed to handle the heavy computational demands of test-time scaling inference, a critical requirement for deploying advanced AI models in production.

This deployment significantly enhances CoreWeave’s AI cloud platform, simplifying training, reasoning, and real-time inference for large language models. The system’s architecture supports fast GPU-to-GPU communication via NVIDIA NVLink switch trays, delivers over one exaflop of dense AI performance, and provides up to 40TB of fast memory in a single rack, combining the GPUs' HBM3e with the Grace CPUs' LPDDR5X memory.
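The 40TB figure is larger than the 21TB of GPU memory quoted earlier because it also counts CPU-attached memory. The sketch below reconciles the two numbers, assuming the 40TB total is simply the 21TB of HBM3e plus memory attached to the 36 Grace CPUs. Since both inputs are rounded "up to" figures, the per-CPU result is only an approximate upper bound, not a published specification.

```python
# Reconcile the rack's 40TB "fast memory" figure with its 21TB of GPU HBM3e.
# Assumption: 40TB = GPU HBM3e + Grace CPU memory; both inputs are rounded.
TOTAL_FAST_MEMORY_TB = 40.0   # "up to 40TB of high-speed memory" per rack
GPU_HBM_TB = 21.0             # "up to 21TB" of HBM3e per rack
GRACE_CPUS_PER_RACK = 36


def cpu_memory_share(total_tb: float, gpu_tb: float, cpus: int) -> tuple[float, float]:
    """Return (remaining rack memory in TB, approximate GB per CPU)."""
    remainder_tb = total_tb - gpu_tb
    return remainder_tb, remainder_tb * 1000 / cpus


remainder_tb, per_cpu_gb = cpu_memory_share(
    TOTAL_FAST_MEMORY_TB, GPU_HBM_TB, GRACE_CPUS_PER_RACK
)
print(f"~{remainder_tb:.0f} TB of non-GPU memory, ~{per_cpu_gb:.0f} GB per Grace CPU")
```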

Commitment to Performance and Scalability

CoreWeave plans to roll out NVIDIA GB300-accelerated Dell servers throughout the year, underscoring its commitment to efficiency, scalability, and industry-leading performance. This initiative is fully supported by Dell’s AI Professional Services, which brings deep expertise in data center design and integration, ensuring customers achieve optimal performance and a smooth transition to next-generation AI infrastructure.

Engage with StorageReview
