NVIDIA tackles robotic mobility and manipulation challenges and announces new robot learning tools at the Conference on Robot Learning.

NVIDIA is using the stage at the Conference on Robot Learning (CoRL) in Korea to showcase its most ambitious advances in robot learning to date. The company is highlighting a suite of open models, new computing platforms, and simulation engines that aim to close the gap between robotics research and real-world deployment.

At the center of NVIDIA’s announcements are mobility and manipulation challenges, two of the most complex hurdles in robotics. By fusing AI-driven reasoning, advanced physics simulation, and domain-specific compute platforms, NVIDIA aims to accelerate the entire lifecycle of robotic development, from early R&D and simulation to training and, ultimately, deployment in physical environments.

Open Models for Robot Learning: DreamGen and Nerd

NVIDIA introduced DreamGen and Nerd, two open foundation models designed specifically for robotic learning.

- DreamGen is focused on generating diverse training environments for robotics simulation, producing scenarios that expose robots to edge cases and variability they would encounter in the real world.

- Nerd, a Neural Robotics Development model, provides advanced perception and reasoning capabilities, enabling robots to understand natural environments, predict outcomes, and adapt their behavior dynamically.

By releasing these models openly, NVIDIA is reinforcing its commitment to collaborative robotics research, lowering the barrier to entry for labs and startups, and supporting reproducibility in both academia and industry. The models plug directly into NVIDIA's Isaac robotics frameworks, streamlining experimentation and deployment.

New Computing Platforms: Simulation, Training, and Deployment

NVIDIA also highlighted three computing systems, each optimized for a different stage of robotics development:

Omniverse with Cosmos on RTX Pro (Simulation)

- Provides a physics-accurate environment for simulating robotics tasks.

- Integrates with the newly upgraded Isaac Lab for reinforcement learning, control testing, and environment creation.

- The platform builds on NVIDIA’s Omniverse RTX technology, with ray-traced, photorealistic rendering and domain randomization, features that are critical for sim-to-real transfer in robotics.

- Researchers can create realistic simulations at scale without relying on expensive and risky physical trials.

NVIDIA DGX (Training)

- Positioned as the compute backbone for training large robotic learning models.

- Uses NVIDIA Blackwell-architecture GPUs to train the models that robots later run for low-latency, real-time reasoning.

- Optimized for multi-modal workloads, combining vision, language, motion, and environmental feedback into unified models.

- Shortens the cycle from training to usable robot behaviors and scales from lab experimentation to industrial R&D.

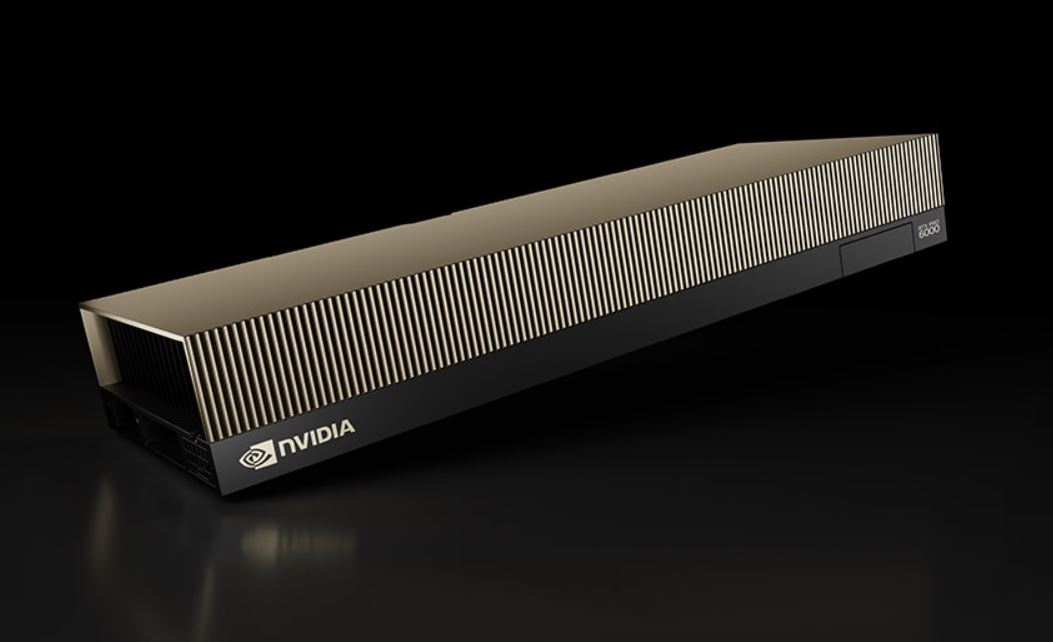

NVIDIA Jetson AGX Thor (Deployment)

- Designed for on-device inference and autonomy in real-world conditions.

- Packs Transformer Engine acceleration for LLM and diffusion workloads directly into embedded robotics systems.

- Its energy-efficient profile makes it viable for commercial mobile robots, drones, and automated manipulators.

- Brings real-time, human-like reasoning out of the data center and directly into the physical world.

Newton Physics Engine in Isaac Lab

Newton Physics Engine, first announced at GTC 2025, was developed in collaboration with Disney Research and Google DeepMind.

- Newton integrates rigid-body physics, fluid dynamics, and soft-body dynamics into simulations.

- Within Isaac Lab, researchers now have precise modeling of contact, friction, deformation, and multi-material interactions.

- This fidelity lets roboticists train manipulation and locomotion tasks, such as grasping fragile objects or navigating uneven terrain, that transfer more reliably to real-world applications.

Isaac GR00T Models for Robot Reasoning

NVIDIA also showcased the continued evolution of its Isaac GR00T model family for robot reasoning, advancing from GR00T N1.5 (announced in May) to GR00T N1.6. These models are tuned for human-like planning, reasoning, and task decomposition, enabling robots to break complex tasks into executable steps, much as a human operator would.

Blackwell: Driving Real-Time Robotic Reasoning

NVIDIA highlighted how its new Blackwell GPU architecture is enabling real-time reasoning in robotics.

- With transformer-scale compute packed into energy-efficient form factors, robots can execute real-time decision-making, path planning, and multi-modal inference.

- This means robots are no longer constrained to batch processing in cloud servers; they can think and act on the fly.

- Key application domains include logistics automation, healthcare robotics, industrial manipulation, and autonomous mobility.

Significance

NVIDIA’s robotics push is significant not only for the breadth of tools being introduced but also for how tightly integrated the ecosystem has become. The advancements include:

- Open Models: Level the playing field and encourage rapid innovation across academia and enterprise.

- Simulation Engines: Reduce the cost and risk of hardware iteration, speeding the move from lab to production.

- Scalable Compute (DGX + Jetson): Provide continuity, since the same models can be trained on DGX clusters and then deployed efficiently on Jetson AGX Thor.

- Robotic Reasoning Advances: Address a key gap in current systems. Most robots are reactive rather than proactive, and NVIDIA is equipping them with the ability to plan and reason more like humans.

As mobility and manipulation remain challenging, NVIDIA’s unified approach, combining open research models, physics-accurate simulation, and deployment-ready compute, positions it as a central force in accelerating robotic autonomy.

Engage with StorageReview

Newsletter | YouTube | Podcast iTunes/Spotify | Instagram | Twitter | TikTok | RSS Feed