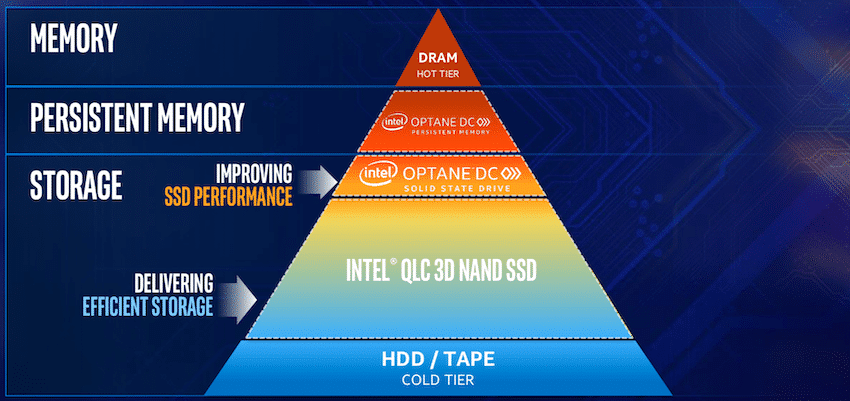

Intel has talked about Optane DC Persistent Memory Modules (PMMs) publicly for over a year now, espousing the benefits of a new tier of data-centric architecture that sits between DRAM and Optane DC SSDs, with progressively slower SSD and HDD media cascading down the pyramid to tape at the archive level. The goal with persistent memory has always been to move more data closer to the CPU, offering DRAM-like latency with storage-like persistence and capacities. After a year of hardware and software partners talking about the benefits of persistent memory in the lab, the release of the second-generation Intel Xeon Scalable processors makes Optane DC persistent memory available across a wide variety of server solutions.

Intel Optane DC Persistent Memory Module

Intel Optane DC Persistent Memory Hardware Overview

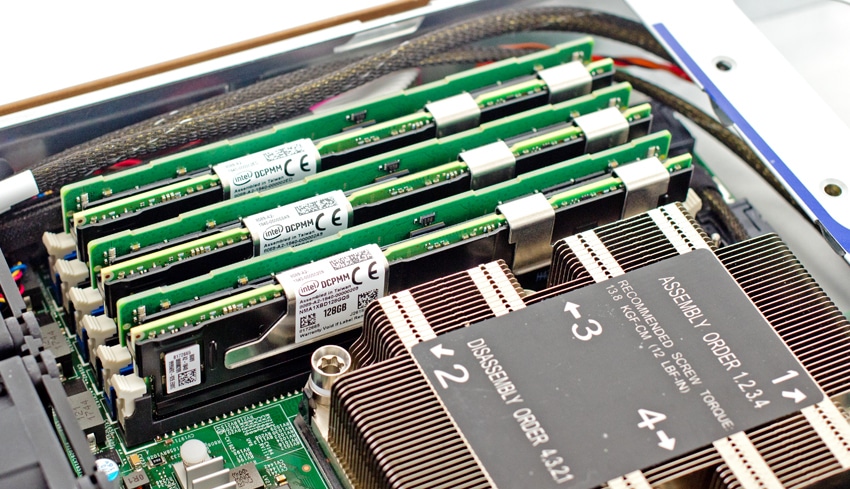

Intel Optane DC PMMs come in 128GB, 256GB and 512GB capacities, vastly larger than DRAM sticks, which typically range from 4GB to 32GB, though larger capacities exist. PMMs share memory channels with DRAM and should be populated in the slot closest to the CPU on each channel. A popular configuration Intel is recommending is a 4:1 ratio of persistent memory to DRAM, pairing 128GB of persistent memory with 32GB of DRAM, which you can see below.

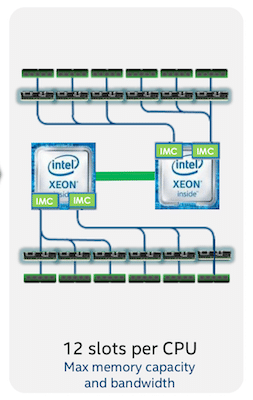

Each CPU can support up to 6 persistent memory modules. In a typical server that supports two Intel Xeon Scalable processors, that means 12 persistent memory modules per system, or up to 6TB in total persistent memory capacity (3TB per socket). Servers that are persistent memory capable also show awareness of the modules within their system BIOS, where persistent memory modes can be set, namespaces created, and pools configured, amongst other settings. The same level of visibility and configuration is also available through the OS.
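The capacity figures above follow from simple multiplication. The sketch below works through it for each module size named in the article; the function and constant names are illustrative, not part of any Intel tool.

```python
# Capacity arithmetic for a fully populated server, using figures from the text.
MODULE_SIZES_GB = (128, 256, 512)  # module capacities named in the article
MODULES_PER_CPU = 6                # up to 6 persistent memory modules per CPU


def pmem_capacity_gb(module_gb, sockets=2):
    """Total persistent memory in GB when every supported slot is populated."""
    if module_gb not in MODULE_SIZES_GB:
        raise ValueError(f"unsupported module size: {module_gb}GB")
    return module_gb * MODULES_PER_CPU * sockets


for size in MODULE_SIZES_GB:
    total = pmem_capacity_gb(size)
    print(f"{size}GB modules: {total}GB total ({total / 1024:.2f}TB)")
```

With 512GB modules this gives 3,072GB per socket and 6,144GB for a two-socket system, matching the 3TB and 6TB figures.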

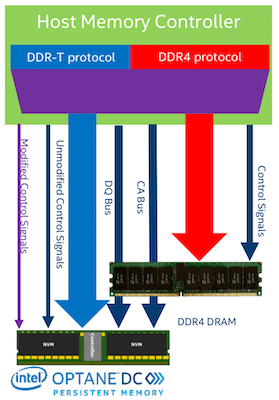

As for how it communicates, Intel Optane DC persistent memory uses the DDR-T protocol, which allows for asynchronous command/data timing. The module controller uses a request/grant scheme to communicate with the host controller, while data bus direction and timing are controlled by the host. A command packet per request is sent from the host to the persistent memory controller, and transactions can be re-ordered in the Intel Optane DC persistent memory controller if need be. The modules use a 64B cache line access granularity, similar to DDR4.

From a hardware perspective, Optane DC persistent memory is a complete system on a module with several key components:

- The power management integrated circuit (PMIC) generates all the rails for media and controller

- SPI Flash stores the module’s firmware

- The Intel Optane media makes up the storage space itself, which comprises 11 parallel devices for data, ECC, and spare

- DQ buffers for high bit rate signal integrity

- AIT DRAM holds the address indirection table

- Energy store capacitors ensure that all of the module's queues are flushed in the event of a power failure

- At the heart of every persistent memory module is the Intel Optane DC persistent memory controller, which handles data transfers as well as management of the subcomponents on the board.

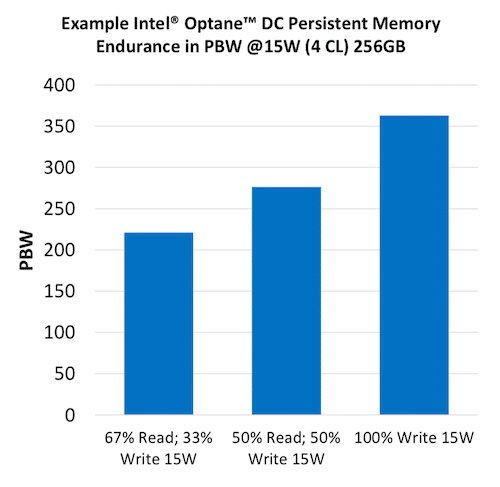

Of course, when considering the modules themselves, endurance is perhaps the largest concern after cost and performance. Like other storage media, Intel Optane DC persistent memory endurance is measured in petabytes written (PBW). PBW is estimated from bandwidth and media endurance considerations over a 5-year lifetime, assuming maximum bandwidth at the target power usage, 24 hours a day, 365 days a year. In the case of a 100% write workload at 15W, the persistent memory modules support over 350PBW, as seen in the chart below.
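To put the endurance rating in perspective, the sketch below converts a PBW figure into the average write bandwidth a module would have to sustain around the clock to reach it in five years. It assumes decimal units (1PB = 10^15 bytes); the function name is illustrative.

```python
# Convert an endurance rating into the sustained write rate it implies.
SECONDS_PER_YEAR = 365 * 24 * 3600


def sustained_write_gb_per_s(pbw, years=5.0):
    """Average write bandwidth (decimal GB/s) needed to write `pbw` petabytes in `years`."""
    total_bytes = pbw * 1e15  # assumption: decimal petabytes
    return total_bytes / (years * SECONDS_PER_YEAR) / 1e9


print(f"{sustained_write_gb_per_s(350):.2f} GB/s")  # 2.22 GB/s
```

In other words, a module would need to absorb roughly 2.2GB of writes every second, continuously, for five years to reach 350PBW.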

One further note on settings: Optane DC modules are programmable for different power limits, allowing for a wide swath of optimization. The persistent memory modules support a power envelope of 12W to 18W and can be tuned in 0.25W increments. The higher power settings give the best performance, albeit at the cost of higher overall server power draw. In some cases that may not be an issue, and organizations can choose to max out the power envelope based on server support.
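A 12W to 18W envelope in 0.25W steps works out to 25 distinct power limits. The following sketch enumerates them and checks whether a proposed value lands on a valid step; the helper names are hypothetical and do not correspond to any Intel utility.

```python
from decimal import Decimal

# Power envelope described in the text; Decimal avoids float rounding on 0.25W steps.
MIN_W = Decimal("12")
MAX_W = Decimal("18")
STEP_W = Decimal("0.25")


def valid_power_limits():
    """All settable power limits from MIN_W to MAX_W inclusive."""
    count = int((MAX_W - MIN_W) / STEP_W) + 1
    return [MIN_W + STEP_W * i for i in range(count)]


def is_valid_limit(watts):
    """True if `watts` (given as a string) is in range and on a 0.25W step."""
    value = Decimal(watts)
    return MIN_W <= value <= MAX_W and (value - MIN_W) % STEP_W == 0


print(len(valid_power_limits()))  # 25
print(is_valid_limit("15.25"), is_valid_limit("15.1"))  # True False
```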

Intel Optane DC Persistent Memory Operating Modes

Once deployed in a server, the PMMs can be further configured in a variety of operating modes that include Memory Mode and App Direct Mode, along with a sliding scale of allocations in between.

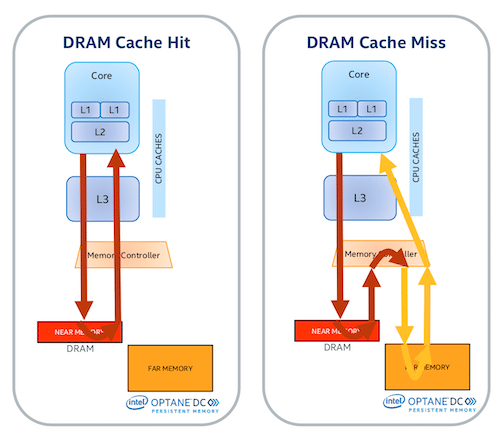

Optane DC Persistent Memory – Memory Mode

In Memory Mode, PMMs are used much like DRAM. There is no need for specific software or changes to applications; persistent memory mimics DRAM by keeping the data “volatile,” since the volatile key is cleared every power cycle. In Memory Mode the persistent memory is used as an extension of DRAM and is managed by the host memory controller. There is no set ratio of persistent memory to DRAM, so the mix can depend on application needs. In terms of latency profile, anything hitting the DRAM cache (near memory) will of course deliver latency under 100 nanoseconds. Any cache misses flow to the persistent memory (far memory), which delivers latency in the sub-microsecond range.
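How much the far-memory latency matters depends on how often requests hit the DRAM cache. The sketch below is a deliberately simplified weighted-average model; the 80ns and 350ns defaults are placeholder assumptions chosen only to fall inside the ranges quoted above, not measured figures.

```python
# Simplified model: average latency as a blend of near-memory hits and far-memory misses.
def effective_latency_ns(hit_rate, near_ns=80.0, far_ns=350.0):
    """Weighted average latency; near_ns and far_ns are assumed placeholder values."""
    if not 0.0 <= hit_rate <= 1.0:
        raise ValueError("hit_rate must be between 0 and 1")
    return hit_rate * near_ns + (1.0 - hit_rate) * far_ns


for rate in (0.99, 0.90, 0.50):
    print(f"hit rate {rate:.0%}: {effective_latency_ns(rate):.1f} ns")
```

The model ignores any extra cost of checking the DRAM cache before going to persistent memory, so treat it as a way to reason about working-set fit rather than a performance prediction.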

Optane DC Persistent Memory – App Direct Mode

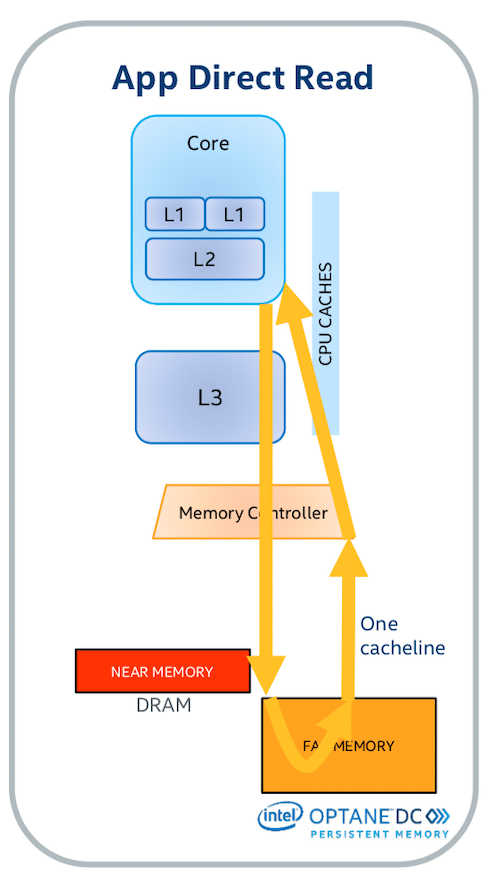

Optane DC persistent memory also has an App Direct Mode, which requires persistent memory-aware software and applications. In this mode the data is persistent in place, yet remains byte addressable in the same way memory is. In App Direct Mode persistent memory remains cache coherent and offers the ability to do DMA and RDMA.
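To illustrate what byte-addressable, in-place persistence looks like from an application's point of view, here is a minimal sketch using a memory-mapped file. It runs against an ordinary temporary file so it works anywhere; on a real system the file would presumably live on a filesystem backed by persistent memory, and production software would more likely use a dedicated library such as PMDK. The function names and region size are illustrative.

```python
import mmap
import os
import tempfile

REGION_SIZE = 4096  # bytes mapped for this sketch


def write_bytes(path, offset, payload):
    """Store payload into a memory-mapped file at a byte offset, then flush."""
    if offset < 0 or offset + len(payload) > REGION_SIZE:
        raise ValueError("write falls outside the mapped region")
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o600)
    try:
        os.ftruncate(fd, REGION_SIZE)  # size the file so it backs the whole mapping
        with mmap.mmap(fd, REGION_SIZE, access=mmap.ACCESS_WRITE) as region:
            region[offset:offset + len(payload)] = payload  # in-place store, no read/write syscalls
            region.flush()  # ask the OS to write the mapping back to the media
    finally:
        os.close(fd)


def read_bytes(path, offset, length):
    """Read bytes back through a fresh read-only mapping."""
    with open(path, "rb") as f:
        with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as region:
            return bytes(region[offset:offset + length])


# Demo against a temporary file (a stand-in for a file on persistent memory).
with tempfile.TemporaryDirectory() as tmp:
    demo_path = os.path.join(tmp, "pmem_demo.bin")
    write_bytes(demo_path, 64, b"hello")
    print(read_bytes(demo_path, 64, 5))
```

The point of the sketch is the access pattern: the application updates five bytes at an arbitrary offset directly, rather than rewriting a whole block through a storage stack.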

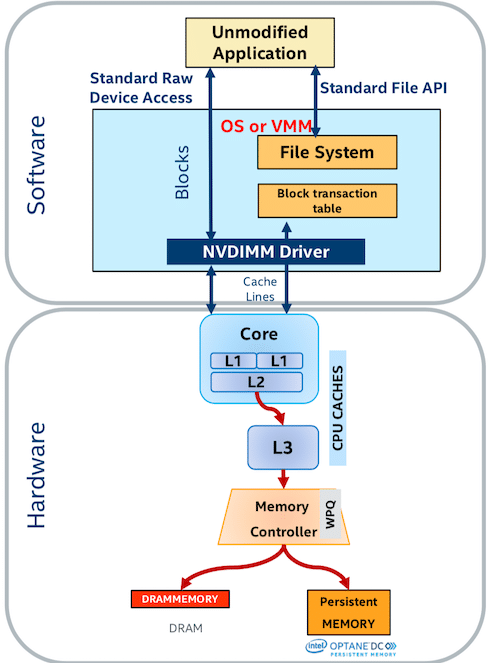

There is also the ability to configure persistent memory as storage over App Direct. Here, persistent memory is accessed in blocks the way SSDs are, with traditional read/write instructions. This works with existing file systems, offers atomicity at the block level, and supports a configurable block size (4K or 512B). To use storage over App Direct, users simply need an NVDIMM driver. This mode allows capacity scaling and offers better performance, lower latency, and higher endurance than traditional enterprise-class SSDs.

Benefits of Intel Optane DC Persistent Memory

Intel Optane DC persistent memory modules offer a wide variety of benefits for end users. First off, the modules offer a much more cost-effective way to scale a server's memory footprint. Because persistent memory can be meshed with the DRAM layer, the effective usable memory footprint scales more quickly with persistent memory, improving the overall TCO of an organization's server investment. Further, with servers able to process more data more quickly, it may be possible for some to take advantage of new opportunities to consolidate workloads. There's also a second argument that can be made when it comes to value. For workloads that may not need as much of the nanosecond latency DRAM offers, organizations could opt to build their servers with less DRAM and more Optane DC persistent memory, keeping a reasonable or even larger memory footprint while relying on the more cost-effective persistent memory modules rather than DRAM.

The persistent memory modules, as the name overtly states, are persistent. This means the PMMs do not need to be reloaded with data after a restart, which leads to faster server reboots. This is critically important when it comes to memory-resident databases, where restoring all of the in-memory data after a server reboot can take a very long time. Independent software vendors (ISVs) that focus on high-performance databases have seen tremendous gains from persistent memory in these scenarios, where getting operational quickly is critical. In fact, Intel has shown data to this effect. Intel found that fully reloading a 1.3TB columnar store dataset into DRAM took 20 minutes on a DRAM-only server. An entire system restart on that server took 32 minutes: 12 minutes for the OS and 20 minutes for the data. The same server with Optane DC persistent memory took 13.5 minutes. While on the surface that looks impressive, it's even more impressive when considering the data component was only a minute and a half, which amounts to a roughly 13X gain.
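The restart figures can be checked with a few lines of arithmetic. The sketch below uses only the numbers quoted in the text; the variable names are illustrative.

```python
# Restart-time arithmetic from the figures Intel presented (all values in minutes).
OS_BOOT_MIN = 12.0
DRAM_DATA_RELOAD_MIN = 20.0
PMEM_TOTAL_RESTART_MIN = 13.5

dram_total_restart_min = OS_BOOT_MIN + DRAM_DATA_RELOAD_MIN   # 32.0
pmem_data_min = PMEM_TOTAL_RESTART_MIN - OS_BOOT_MIN          # 1.5
data_speedup = DRAM_DATA_RELOAD_MIN / pmem_data_min           # about 13.3
total_speedup = dram_total_restart_min / PMEM_TOTAL_RESTART_MIN  # about 2.37

print(f"data reload speedup: {data_speedup:.1f}x")
print(f"whole restart speedup: {total_speedup:.2f}x")
```

The 13X figure applies to the data-load portion only; because the 12-minute OS boot is unchanged, the end-to-end restart improves by a factor of about 2.4.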

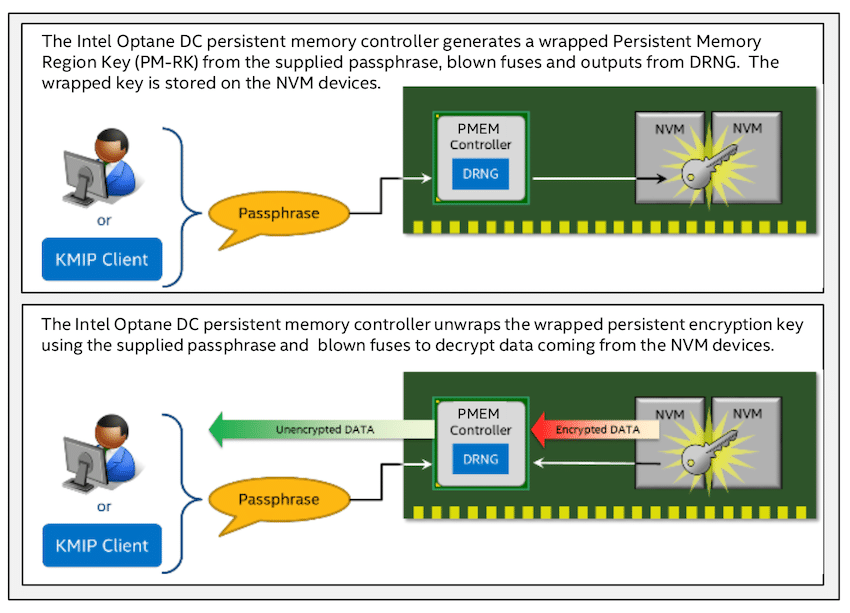

Intel Optane DC persistent memory modules also offer on-module encryption, making this the first ever hardware-encrypted memory. The modules provide data-at-rest protection using a 256-bit AES-XTS encryption engine. In Memory Mode, the encryption key is not retained: it is lost at power-down and regenerated at each boot, so data on the module, like the contents of the DRAM cache, does not survive a power cycle. In App Direct Mode, persistent media is encrypted using a key stored in a security metadata region on the module that is only accessible by the Intel Optane DC controller. The persistent memory is locked at a power loss event and needs a passphrase to unlock. The modules also support secure cryptographic erase and DIMM overwrite for secure repurposing or disposal at end of life. Lastly, only signed versions of the firmware are allowed, and revision control options are available.

Intel Optane DC Persistent Memory Software

While the emphasis is clearly on the benefits of the persistent memory hardware, Intel has a set of software tools that are important as well. The following tools are the primary way to manage persistent memory through the operating system, rather than power cycling the server and making changes in the system BIOS. Making changes on the fly this way saves time and reduces downtime.

IPMCTL – Utility for managing Intel Optane DC persistent memory modules

Supports functionality to:

- Discover persistent memory modules in the platform.

- Provision the platform memory configuration.

- View and update the firmware on PMMs.

- Configure data-at-rest security on PMMs.

- Monitor PMM health.

- Track performance of PMMs.

- Debug and troubleshoot PMMs.
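As a rough illustration of how a few of these tasks map onto the command line, the sketch below wraps a handful of `ipmctl` invocations in a small Python helper. The subcommands shown are taken from the ipmctl documentation as best understood here and should be verified against the installed version; the dictionary keys and function name are hypothetical. The helper defaults to a dry run that only returns the command string, since provisioning goals change the platform configuration and take effect after a reboot.

```python
import shutil
import subprocess

# Assumed ipmctl invocations; confirm against `ipmctl help` on the target system.
IPMCTL_COMMANDS = {
    "discover": ["ipmctl", "show", "-dimm"],
    "resources": ["ipmctl", "show", "-memoryresources"],
    "goal_memory_mode": ["ipmctl", "create", "-goal", "MemoryMode=100"],
    "goal_app_direct": ["ipmctl", "create", "-goal", "PersistentMemoryType=AppDirect"],
}


def run_ipmctl(action, dry_run=True):
    """Return the command line for `action`, or its output when actually executed."""
    argv = IPMCTL_COMMANDS[action]
    if dry_run or shutil.which("ipmctl") is None:
        return " ".join(argv)  # nothing is executed in this branch
    result = subprocess.run(argv, capture_output=True, text=True, check=True)
    return result.stdout


print(run_ipmctl("discover"))
print(run_ipmctl("goal_app_direct"))
```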

NDCTL – Utility to manage “libnvdimm” subsystem devices (Non-volatile Memory)

ndctl is a utility for managing the “libnvdimm” kernel subsystem. The “libnvdimm” subsystem defines a kernel device model and control message interface for platform NVDIMM resources like those defined by the ACPI 6.0 NFIT (NVDIMM Firmware Interface Table). Operations supported by the tool include provisioning capacity (namespaces) as well as enumerating, enabling, and disabling the devices (DIMMs, regions, namespaces) associated with an NVDIMM bus.
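Namespace provisioning with ndctl can be sketched as follows. The helper only builds argument lists and executes nothing; the namespace modes and options reflect the ndctl documentation as understood here and should be checked against the installed version. The function name and the `region0` identifier are hypothetical.

```python
# Namespace modes ndctl is understood to accept for create-namespace.
NAMESPACE_MODES = ("fsdax", "devdax", "sector", "raw")

# Enumerate regions and namespaces on the NVDIMM bus.
LIST_ARGV = ["ndctl", "list", "--regions", "--namespaces"]


def create_namespace_argv(mode, region=None):
    """Build (but do not run) an `ndctl create-namespace` command line."""
    if mode not in NAMESPACE_MODES:
        raise ValueError(f"unknown namespace mode: {mode}")
    argv = ["ndctl", "create-namespace", f"--mode={mode}"]
    if region is not None:
        argv.append(f"--region={region}")  # e.g. a hypothetical "region0"
    return argv


print(" ".join(LIST_ARGV))
print(" ".join(create_namespace_argv("fsdax", "region0")))
```

Roughly speaking, `fsdax` and `devdax` namespaces serve direct-access use, while `sector` provides the block-atomic behavior expected by traditional storage stacks.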

Intel Optane DC Persistent Memory Module Availability

Persistent memory modules are available now, with numerous server vendors announcing system availability:

Storage systems vendors are also looking at persistent memory as a way to accelerate their solutions:

Cloud support for PMEM:

Leading workstation providers are also embracing Intel Optane DC persistent memory, especially for data science workloads.

Intel Optane DC Persistent Memory Reviews and Benchmarks
