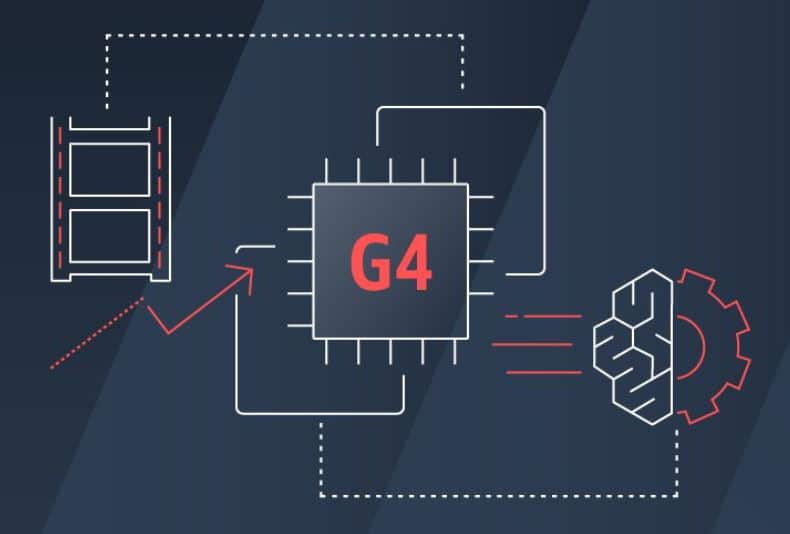

Today Amazon Web Services, Inc. (AWS) announced the general availability of a new GPU-powered Amazon Elastic Compute Cloud (Amazon EC2) instance type, the G4 instance. The new instances are designed to accelerate machine learning (ML) inference, and AWS describes them as the most cost-effective option in the industry for ML inference. They also handle graphics-intensive workloads cost-effectively, which makes them well suited to building and running applications such as remote graphics workstations, video transcoding, photo-realistic design, and game streaming in the cloud.

According to AWS, ML involves two compute-heavy processes: training and inference. Training uses labeled data to build a model that can make predictions. It is a compute-intensive task that requires powerful processors and high-speed networking. Inference is the process of using a trained model to make predictions, and it typically involves running many small compute jobs at the same time. That kind of workload is well suited to NVIDIA GPUs. AWS is no stranger to ML instances: it launched the P3 instance two years ago. That was a big step, but the majority of the operational cost of ML workloads comes from inference rather than training.

To address this, AWS built the new G4 instances around the latest-generation NVIDIA T4 GPUs, custom 2nd Generation Intel Xeon Scalable (Cascade Lake) processors, up to 100 Gbps of networking throughput, and up to 1.8 TB of local NVMe storage, which AWS says makes them the most cost-effective GPU instances for ML inference. G4 instances deliver up to 65 TFLOPS of mixed-precision performance, which suits inference workloads well. They can also be used cost-effectively for small-scale and entry-level ML training jobs that are less sensitive to time-to-train. For graphics-intensive workloads, the new instances offer up to 1.8x the graphics performance and up to 2x the video transcoding capability of the previous-generation G3 instances.

Availability

G4 instances are available to be purchased as On-Demand, Reserved Instances, or Spot Instances.

Amazon