The cloud landscape is undergoing a significant transformation. Over the past year, hyperscalers including Amazon Web Services (AWS), Google Cloud, and Microsoft Azure have dramatically increased their investments in custom silicon. At the same time, a growing number of AI companies are entering the chip market.

This surge in chip development is reshaping the data center, promising new levels of performance, efficiency, and differentiation. Whereas traditional chip manufacturers build general-purpose processors and accelerators for a broad market, these new players engineer chips specifically for demanding AI workloads.

Chip Design

The motivation to develop custom silicon stems from the inability of off-the-shelf CPUs and accelerators to keep pace with hyperscale cloud workloads. AI and machine learning, in particular, demand higher compute density, lower latency, and greater energy efficiency. Hyperscalers are responding by building chips tailored to their infrastructure and customer needs. New players are also entering the market at an increasing rate, with processors and accelerators touted as the “fastest,” the “cheapest,” or the “best” the industry has to offer.

Of course, custom hardware isn’t a new phenomenon. Cloud providers have built their own networking hardware, storage devices, and servers for many years. Engineering processors, however, is a different animal.

Who Are the Players?

This is not a complete list, but it covers the primary players in the field today. We have also included several newer suppliers that bring their own approach to AI-focused service delivery.

AWS

Now in its fourth generation, Amazon’s Graviton series has set the pace for Arm-based CPUs in the cloud, delivering significant performance-per-watt gains compared to traditional x86 offerings. AWS has also rolled out custom AI accelerators, such as Inferentia and Trainium, targeting inference and training workloads at scale.

According to the AWS website, Anthropic named AWS its primary training partner and said it would use AWS Trainium to train and deploy its largest foundation models. Amazon also announced plans to invest an additional $4 billion in Anthropic.

Google Cloud

Google, meanwhile, continues to push the envelope with its Tensor Processing Units (TPUs), which now power some of the largest AI models in production. Recent TPU generations, including TPU v5 and Ironwood, are designed for massive parallelism and tightly integrated with Google’s data center fabric.

Azure

Microsoft is not far behind, having recently unveiled two custom chips: the Azure Maia AI accelerator and the Arm-based Azure Cobalt CPU, optimized for AI and general-purpose workloads, respectively. These chips are already being deployed in Microsoft’s data centers, supporting everything from large language models to core cloud services.

CSPs Are Not Alone

Cloud service providers (CSPs) are not the only companies developing chips. Other players, not all of them cloud providers, have also recognized the benefits of designing their own silicon: lower cost, higher performance, easier management, and full ownership of the technology.

Groq

Groq provides an AI inference platform centered on its custom Language Processing Unit (LPU) and its cloud infrastructure. It aims to deliver high performance at low cost for popular AI models.

Unlike GPUs, which were originally designed for graphics, the LPU is optimized for AI inference and language tasks. Rather than selling individual chips, Groq offers the LPU through GroqCloud™ and on-premises solutions.

SambaNova Systems

SambaNova Systems has created an AI platform tailored for complex workloads. It is centered around the DataScale® system and custom Reconfigurable Dataflow Unit (RDU) chips optimized for dataflow computing.

The company offers pre-trained foundation models and the SambaNova Suite, which combines hardware, software, and models to enable quick AI deployment, particularly in finance and healthcare.

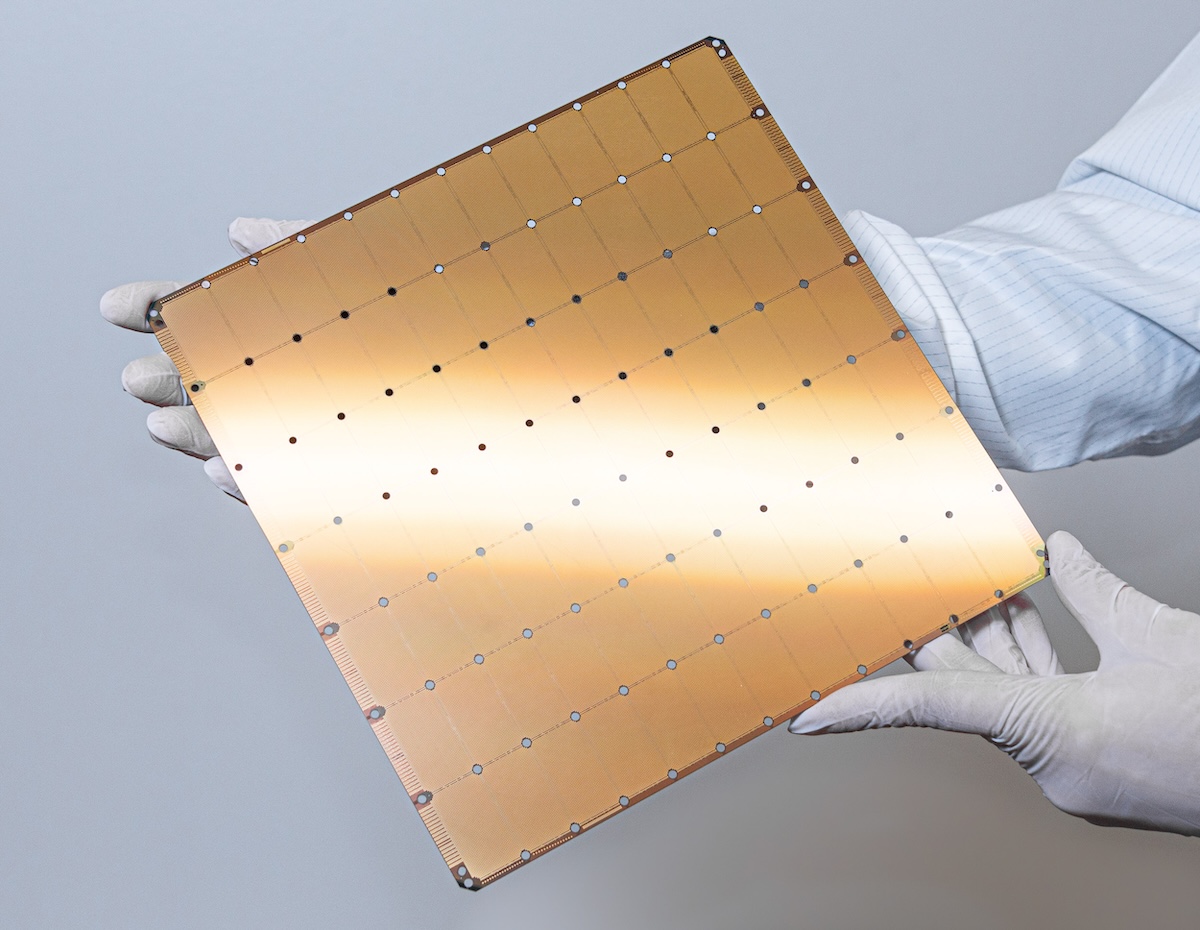

Cerebras

Cerebras is known for its AI inference and training platform, which is built around the Wafer-Scale Engine (WSE). Because the WSE spans an entire silicon wafer, it packs hundreds of thousands of AI-optimized cores and a large pool of on-chip memory, allowing Cerebras systems to handle complex models that traditional hardware struggles with.

Organizations in the medical research and energy fields use Cerebras systems as on-premises supercomputers, while developers can access the same capabilities through the Cerebras Cloud.

Tenstorrent

Led by a team specializing in computer architecture and ASIC design, Tenstorrent is developing advanced AI and high-performance computing hardware. Like Google’s TPUs, its processors are purpose-built for AI, but the company places a strong emphasis on open hardware and software. Tenstorrent has also attracted investment from high-profile backers such as Jeff Bezos.

The company’s Blackhole™ PCIe boards are designed for scalable AI processing and feature RISC-V cores and GDDR6 memory. The Blackhole p100a model includes the Blackhole Tensix Processor and is intended for desktop workstations.

The Benefits: Performance, Efficiency, and Control

Custom silicon gives CSPs and other players a robust set of levers. Providers can optimize for their workloads, data center architectures, and power/cooling constraints by designing chips in-house. This results in better performance per dollar, improved energy efficiency, and the ability to offer differentiated services to customers. Strategically, owning the silicon stack reduces reliance on third-party vendors, mitigates supply chain risks, and enables faster innovation cycles. This agility is a competitive advantage in a world where AI models evolve at lightning speed.

Building chips is not for the faint of heart. It requires deep engineering expertise, substantial capital investment, and close collaboration with foundries and design partners. CSPs also invest significantly in software stacks, compilers, and developer tools to ensure their custom hardware is accessible and user-friendly.

The ripple effects are being felt across the industry. Traditional chipmakers like Intel, AMD, and NVIDIA face new competition, while startups and IP vendors find fresh opportunities to collaborate with CSPs. The open-source hardware movement, typified by RISC-V, is gaining momentum as providers seek more flexible and customizable architectures.

The Future of Cloud Silicon

The rate of innovation shows no signs of slowing down. As AI, analytics, and edge computing evolve, CSPs and hyperscalers are expected to invest heavily in custom silicon while extending their designs into networking, storage, and security. The next generation of cloud infrastructure will be shaped as much by the hardware inside it as by the software and services layered on top.

For businesses and developers, this advancement means more options, improved performance, and the ability to handle workloads previously thought impossible. For the industry, it marks the start of a new era in which the largest cloud providers also become some of the most influential chip designers.