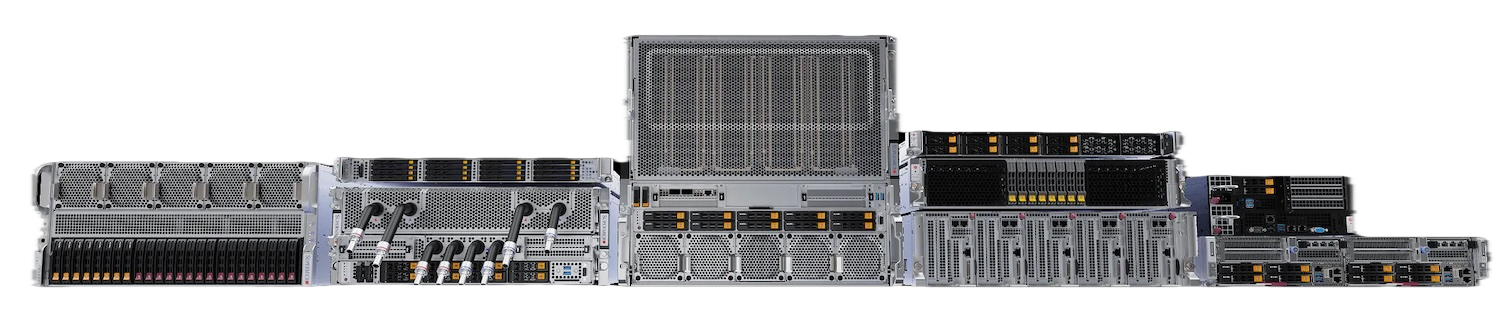

Supermicro has announced its Data Center Building Block Solutions (DCBBS), a comprehensive approach designed to simplify the deployment of liquid-cooled AI data centers. DCBBS brings all critical infrastructure components (servers, storage, networking, racks, liquid cooling, software, services, and support) under a standardized, modular architecture. The initiative aims to address the growing complexity of building and operating AI factories, where the demands of training and inference workloads are rising rapidly.

Streamlining AI Data Center Deployment

DCBBS extends Supermicro’s established System Building Block Solutions to the data center level, offering pre-validated, scalable units that accelerate planning, buildout, and operation. The solution is designed to reduce the time and cost of deploying high-performance AI infrastructure. According to Supermicro, DCBBS enables customers to move from design to deployment in as little as three months, thanks to a package that includes floor plans, rack elevations, bills of materials, and all necessary components.

Supermicro’s president and CEO, Charles Liang, says DCBBS is intended to give customers a time-to-market and time-to-online advantage, with total solution coverage that spans data center layout, network topology, power, and battery backup. The result, according to Supermicro, is a streamlined process that lowers costs and improves overall quality.

Modular, Scalable, and Customizable

At the core of DCBBS is a modular building block approach, structured across three hierarchical levels: system, rack, and data center. This architecture allows customers to tailor their infrastructure to specific requirements, from selecting individual CPUs, GPUs, memory, and storage at the system level, to choosing rack configurations (such as 42U, 48U, or 52U) and optimizing for thermals and cabling. After an initial consultation, Supermicro delivers a project proposal aligned with the customer’s power budget, performance targets, and other operational needs.
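The three-level hierarchy can be pictured as nested data structures. The sketch below is a hypothetical model, not Supermicro's configuration tooling: the class names, fields, and the rule that a rack is "full" when server chassis fill its rack units are all illustrative assumptions. Only the 42U/48U/52U rack heights come from the text.

```python
from dataclasses import dataclass, field

# Rack heights mentioned in the article; the actual catalog may differ.
SUPPORTED_RACK_HEIGHTS_U = (42, 48, 52)


@dataclass
class SystemSpec:
    """System level: one server and the components chosen for it (hypothetical fields)."""
    name: str
    height_u: int  # rack units the chassis occupies
    gpus: int
    cpus: int


@dataclass
class RackSpec:
    """Rack level: a rack height plus the systems installed in it."""
    height_u: int
    systems: list[SystemSpec] = field(default_factory=list)

    def __post_init__(self) -> None:
        if self.height_u not in SUPPORTED_RACK_HEIGHTS_U:
            raise ValueError(f"unsupported rack height: {self.height_u}U")

    def used_u(self) -> int:
        # Counts server chassis only; switches, PDUs, and CDUs are ignored here.
        return sum(s.height_u for s in self.systems)

    def add(self, system: SystemSpec) -> None:
        if self.used_u() + system.height_u > self.height_u:
            raise ValueError("not enough free rack units")
        self.systems.append(system)


@dataclass
class DataCenterSpec:
    """Data center level: the collection of racks."""
    racks: list[RackSpec] = field(default_factory=list)

    def total_gpus(self) -> int:
        return sum(s.gpus for rack in self.racks for s in rack.systems)
```

For example, twelve 4U eight-GPU systems fill a 48U rack, and a data center built from that rack reports 96 GPUs. A real design would also budget space for networking and cooling hardware, and would check power and thermal limits rather than rack units alone.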

The 256-node AI Factory DCBBS scalable unit is a key offering that provides a turnkey solution for large-scale AI training and inference. Each unit includes up to 256 liquid-cooled 4U Supermicro NVIDIA HGX system nodes, each equipped with eight NVIDIA Blackwell GPUs, for a total of 2,048 GPUs per unit. The nodes are interconnected with NVIDIA Quantum-X InfiniBand or Spectrum-X Ethernet networking at up to 800 Gb/s. The compute fabric is complemented by scalable, tiered storage built on PCIe Gen5 NVMe, TCO-optimized Data Lake nodes, and resilient management systems for continuous operation.
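The scalable-unit figures reduce to simple arithmetic. The sketch below checks the 2,048-GPU total and estimates rack space for the compute nodes. The `reserved_u` allowance for switches, CDUs, and PDUs is a hypothetical parameter, and the rack count is a space-only lower bound that ignores the power and cooling limits that usually constrain real AI racks.

```python
import math

# Figures taken from the article's description of one scalable unit.
NODES_PER_UNIT = 256  # up to 256 HGX nodes
GPUS_PER_NODE = 8     # eight Blackwell GPUs per node
NODE_HEIGHT_U = 4     # each node is a 4U system


def gpus_per_unit(nodes: int = NODES_PER_UNIT, gpus_per_node: int = GPUS_PER_NODE) -> int:
    """Total GPUs in a scalable unit."""
    return nodes * gpus_per_node


def compute_rack_units(nodes: int = NODES_PER_UNIT, node_height_u: int = NODE_HEIGHT_U) -> int:
    """Rack units occupied by the compute chassis alone."""
    return nodes * node_height_u


def min_racks_by_space(nodes: int, rack_height_u: int, reserved_u: int = 0) -> int:
    """Space-only lower bound on rack count.

    reserved_u is a hypothetical per-rack allowance for non-compute gear
    (switches, in-rack CDU, PDUs). Power and cooling limits are ignored.
    """
    nodes_per_rack = (rack_height_u - reserved_u) // NODE_HEIGHT_U
    if nodes_per_rack < 1:
        raise ValueError("no room for a compute node in this rack")
    return math.ceil(nodes / nodes_per_rack)
```

With these assumptions, a full unit occupies 1,024U of compute chassis, which needs at least 22 48U racks, or 26 if each rack reserves 8U for other equipment.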

Advancing Liquid Cooling for Next-Generation Data Centers

Supermicro has also announced significant advancements to its Direct Liquid Cooling (DLC) solution, introducing new technologies that address the evolving needs of high-density, AI-optimized data centers. As the industry shifts toward liquid cooling to meet the power and thermal demands of modern workloads, Supermicro’s DLC-2 solution aims to deliver measurable improvements in efficiency, deployment speed, and operational sustainability.

The adoption of liquid cooling in data centers is accelerating, driven by the increasing density of compute resources and the rise of AI and machine learning workloads. Industry analysts estimate that liquid-cooled data centers could account for up to 30% of all new installations in the near future. Traditional air cooling is reaching its practical limits, especially as organizations deploy servers with high-performance GPUs and CPUs that generate significant heat.

Supermicro’s latest DLC-2 solution is a comprehensive, end-to-end liquid cooling architecture intended to enable faster deployment, lower operational costs, and better sustainability metrics. It targets the thermal challenges of modern AI workloads, in which individual GPUs can exceed 1,000W TDP.

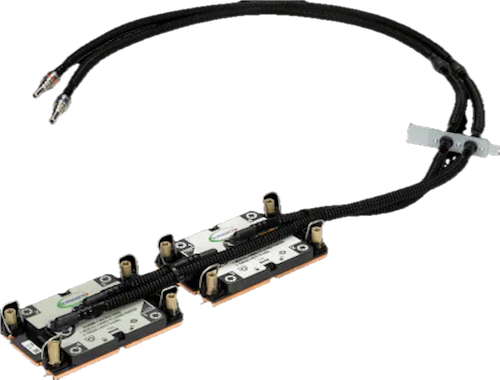

DLC-2 captures heat directly from critical components (CPUs, GPUs, memory, PCIe switches, and voltage regulators) using cold plates and a facility-scale liquid cooling infrastructure. The system includes in-rack or in-row coolant distribution units (CDUs), vertical coolant distribution manifolds, and support for facility-side cooling towers. According to Supermicro, this approach enables up to 40% power savings, a 60% reduction in data center footprint, and up to 40% lower water consumption, contributing to a total cost of ownership (TCO) reduction of up to 20%.
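To make the percentages concrete, the sketch below applies each quoted reduction to a hypothetical air-cooled baseline. The baseline values and the `SiteMetrics` structure are illustrative assumptions. Each figure is treated as an independent best case, and actual results depend on site, climate, and workload.

```python
from dataclasses import dataclass

# Supermicro's quoted best-case reductions versus conventional air cooling.
MAX_POWER_REDUCTION = 0.40
FOOTPRINT_REDUCTION = 0.60
MAX_WATER_REDUCTION = 0.40
MAX_TCO_REDUCTION = 0.20


@dataclass(frozen=True)
class SiteMetrics:
    power_mw: float
    floor_area_m2: float
    water_m3_per_year: float
    tco_musd: float  # total cost of ownership, millions of USD


def best_case_dlc2(air_cooled: SiteMetrics) -> SiteMetrics:
    """Apply each quoted reduction independently to a hypothetical air-cooled baseline."""
    return SiteMetrics(
        power_mw=air_cooled.power_mw * (1 - MAX_POWER_REDUCTION),
        floor_area_m2=air_cooled.floor_area_m2 * (1 - FOOTPRINT_REDUCTION),
        water_m3_per_year=air_cooled.water_m3_per_year * (1 - MAX_WATER_REDUCTION),
        tco_musd=air_cooled.tco_musd * (1 - MAX_TCO_REDUCTION),
    )
```

For a hypothetical 10 MW site using 1,000 m² of floor space, the best case works out to about 6 MW and 400 m². These numbers are upper bounds derived from the vendor's "up to" claims, not measured results.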

Supermicro’s DLC-2 is designed for rapid deployment and high-density environments. It supports warm water cooling with inlet temperatures up to 45°C, reducing the need for chillers and lowering operational costs. The solution fully integrates with Supermicro’s SuperCloud Composer® software, which provides centralized management, analytics, and orchestration across compute, storage, and network resources.

DLC-2 Operational Benefits

- Up to 40% Power Savings: Reduce overall data center power consumption by up to 40% compared to conventional air-cooled systems. Cold plate technology achieves this through efficient heat transfer directly from critical components, such as CPUs, GPUs, memory, PCIe switches, and voltage regulators. Data centers can significantly lower their energy bills and carbon footprint by minimizing reliance on high-speed fans and air-based cooling infrastructure.

- Faster Time-to-Deployment and Reduced Time-to-Online: The solution is engineered for rapid deployment and provides a fully integrated, end-to-end liquid cooling stack, including cold plates, coolant distribution units (CDUs), and vertical coolant distribution manifolds (CDMs).

- Reduced Water Consumption with Warm Water Cooling: The DLC-2 architecture supports warm water cooling, with inlet temperatures up to 45°C. This capability reduces the need for traditional chiller systems, which are both costly and resource-intensive.

- Quiet Data Center Operation: With comprehensive cold plate coverage and reduced fan requirements, the DLC-2 solution can bring data center noise levels down to approximately 50 dB.

- Lower Total Cost of Ownership: In addition to energy and water savings, Supermicro estimates that the DLC-2 solution can reduce total cost of ownership (TCO) by up to 20%. The reduction in cooling infrastructure, lower power and water usage, and increased server density all contribute to a more cost-effective data center operation.

DLC-2 Technical Innovations

- Comprehensive Cold Plate Coverage: The DLC-2 solution features cold plates that cover CPUs, GPUs, memory modules, PCIe switches, and voltage regulators. This approach ensures that nearly all heat-generating components are efficiently cooled, reducing the need for supplemental air cooling and rear-door heat exchangers.

- Support for High-Density AI Systems: A highlight of the new architecture is a GPU-optimized Supermicro server that accommodates eight NVIDIA Blackwell GPUs and two Intel Xeon 6 CPUs in a compact 4U chassis. The system is designed to handle higher supply coolant temperatures, maximize performance per watt, and support the latest AI workloads.

- High-Efficiency Coolant Distribution: The in-rack coolant distribution unit (CDU) can remove up to 250 kW of heat per rack. Vertical coolant distribution manifolds (CDMs) circulate coolant throughout the rack, sized to the number of installed servers, enabling higher compute density per unit of floor space. Liquid cooling captures up to 98% of the heat generated in each server rack, further improving efficiency.

- Integrated Management and Orchestration: The entire DLC-2 solution stack fully integrates with Supermicro’s SuperCloud Composer® software, providing data center-level management and infrastructure orchestration.
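The CDU and heat-capture figures can be related with the standard sensible-heat balance Q = ṁ · c_p · ΔT. The sketch below splits a rack's heat between liquid and air at the quoted 98% capture rate, then estimates the coolant flow needed for a given supply-to-return temperature rise. The 10 K rise and the plain-water properties are assumptions. Real loops often use glycol mixtures with different specific heat, and the sketch is not based on Supermicro's CDU specifications.

```python
# Approximate properties of plain water; glycol mixtures differ.
WATER_CP_KJ_PER_KG_K = 4.18    # specific heat, kJ/(kg*K)
WATER_DENSITY_KG_PER_L = 1.0   # slightly lower at warm-water temperatures


def heat_split_kw(rack_heat_kw: float, capture_fraction: float = 0.98) -> tuple[float, float]:
    """Return (heat removed by liquid, residual heat left for air handling) in kW."""
    if not 0.0 <= capture_fraction <= 1.0:
        raise ValueError("capture_fraction must be between 0 and 1")
    liquid_kw = rack_heat_kw * capture_fraction
    return liquid_kw, rack_heat_kw - liquid_kw


def coolant_flow_l_per_min(
    heat_kw: float,
    delta_t_k: float,
    cp_kj_per_kg_k: float = WATER_CP_KJ_PER_KG_K,
    density_kg_per_l: float = WATER_DENSITY_KG_PER_L,
) -> float:
    """Volumetric flow needed to carry heat_kw with a temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    # Q = m_dot * cp * dT  ->  m_dot = Q / (cp * dT); kW / (kJ/(kg*K) * K) = kg/s
    mass_flow_kg_s = heat_kw / (cp_kj_per_kg_k * delta_t_k)
    return mass_flow_kg_s / density_kg_per_l * 60.0
```

At 250 kW and 98% capture, about 5 kW per rack still has to be handled by air. Carrying the full 250 kW with a 10 K rise takes roughly 360 L/min of water. A larger allowed temperature rise reduces the required flow proportionally.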

Supermicro’s DLC-2 solution also incorporates hybrid cooling towers, which combine the features of standard dry and water towers. This design is particularly advantageous in regions with significant seasonal temperature variations, as it allows data centers to optimize resource usage and further reduce operational expenses throughout the year.
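The value of a hybrid tower lies in choosing its operating mode by season. The sketch below is a simplified, hypothetical control rule. It uses dry mode, which evaporates no water, whenever ambient dry-bulb temperature plus an assumed dry-cooler approach still meets the supply setpoint. Otherwise it falls back to evaporative mode, which is limited by wet-bulb temperature. The 45°C default setpoint reflects the article's maximum inlet temperature. The approach values and mode names are illustrative assumptions, not Supermicro parameters.

```python
from enum import Enum


class TowerMode(Enum):
    DRY = "dry"                    # sensible cooling only, no water evaporated
    WET = "wet"                    # evaporative cooling, consumes water
    SUPPLEMENTAL = "supplemental"  # tower alone cannot meet the setpoint


def select_mode(
    dry_bulb_c: float,
    wet_bulb_c: float,
    supply_setpoint_c: float = 45.0,  # article's maximum inlet temperature
    dry_approach_k: float = 8.0,      # hypothetical dry-cooler approach
    wet_approach_k: float = 4.0,      # hypothetical evaporative approach
) -> TowerMode:
    """Pick the least water-intensive mode that can still deliver the setpoint."""
    if wet_bulb_c > dry_bulb_c:
        raise ValueError("wet-bulb temperature cannot exceed dry-bulb temperature")
    if dry_bulb_c + dry_approach_k <= supply_setpoint_c:
        return TowerMode.DRY
    if wet_bulb_c + wet_approach_k <= supply_setpoint_c:
        return TowerMode.WET
    return TowerMode.SUPPLEMENTAL
```

Under these assumptions, a 20°C day runs dry, while a 40°C day with a 25°C wet bulb switches to evaporative mode. Raising the allowable supply temperature extends the number of hours the tower can run dry, which is why warm-water cooling and hybrid towers complement each other.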

Service and Software Integration

DCBBS is supported by a comprehensive suite of services, from initial consultation and data center design to solution validation, onsite deployment, and ongoing support. Supermicro offers a 4-hour onsite response option for mission-critical environments, ensuring high availability and rapid issue resolution.

On the software side, Supermicro’s expertise extends to application integration for AI training, inference, cluster management, and workload orchestration. The company supports deployment of the NVIDIA AI Enterprise software platform and provides software provisioning and validation services tailored to the customer’s stack.

AI Training, Inference, and Beyond

DCBBS’s primary use case is the deployment of large-scale AI training clusters, where thousands of GPUs are required to develop foundation models. The solution is equally suited for AI inference workloads, which increasingly demand high compute capacity to deliver real-time intelligence across multiple models and applications.

Beyond AI, DCBBS applies to any data center environment requiring high-density, high-performance computing, such as scientific research, financial modeling, and advanced analytics. The solution’s modular, customizable nature allows organizations to adapt their infrastructure as workloads evolve.

The rapid growth of AI and high-performance computing is driving a shift toward liquid-cooled data centers. Fewer than 1% of data centers have been liquid-cooled in recent years, but industry projections suggest this figure could reach 30% within the next year. Supermicro’s DCBBS and DLC-2 offerings position the company well for this transition, providing a practical, vendor-integrated path to efficient, scalable, and sustainable data center operations.
