The tech giant Hewlett Packard Enterprise (HPE) has taken its first steps in the AI cloud landscape by launching HPE GreenLake for Large Language Models (LLMs). This state-of-the-art cloud service aims to provide extensive AI model training, tuning, and deployment capabilities for businesses of all sizes, from new startups to Fortune 500 corporations.

HPE GreenLake for Large Language Models: What is it?

HPE GreenLake for LLMs is the first in a planned series of specialized AI applications. It will run on top-tier supercomputers and AI software, powered largely by renewable energy. HPE plans to extend the lineup to domains such as climate modeling, healthcare, and life sciences, among others.

For those less familiar with the term, Large Language Models such as Luminous, the Aleph Alpha model offered through HPE GreenLake for LLMs, are advanced AI models capable of understanding and generating human-like text based on a given input. Trained on vast amounts of data, these models can generate relevant, contextual responses, which makes them useful in a wide range of applications.

From streamlining customer service through AI chatbots to aiding healthcare professionals by scanning and interpreting medical records, LLMs can drive efficiency and innovation across various sectors. HPE’s offering makes this sophisticated technology more accessible to businesses of all sizes. While HPE’s vision is large-scale, LLM projects don’t have to start that way, and often don’t. See our piece on getting started with Meta LLaMa to learn more.

HPE’s CEO, Antonio Neri, compared the impact of AI to that of revolutionary technologies like the web, mobile, and cloud. He expressed HPE’s commitment to democratizing AI, a field that has so far been chiefly within reach of large government labs and cloud giants. We’ve often lamented the fact that most AI tools aren’t accessible outside large HPC and enterprise data centers. While we applaud Neri’s plan to democratize AI, it remains to be seen how well HPE will execute on this goal.

How HPE GreenLake for Large Language Models Stands Out

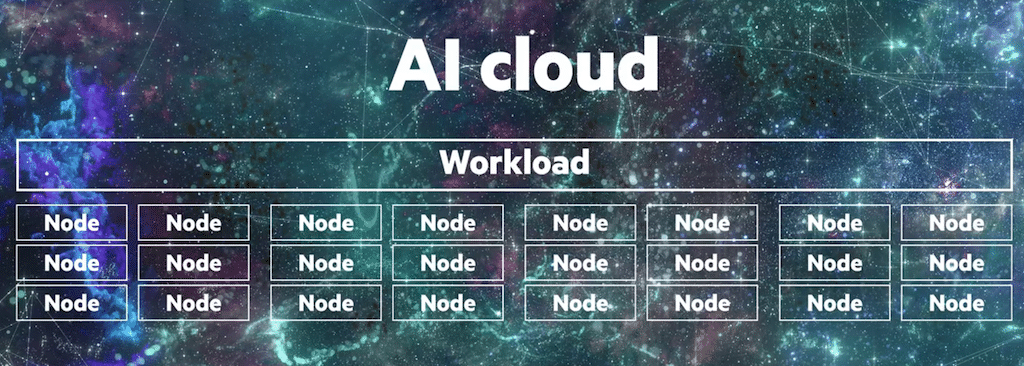

Unlike typical general-purpose cloud offerings, which run many workloads in parallel, HPE GreenLake for LLMs runs on an AI-native architecture designed to run a single large-scale AI training and simulation workload at full computing capacity. That workload can span hundreds or thousands of CPUs or GPUs at once, which is intended to make AI training more efficient and the resulting models more accurate.

The service gives access to Luminous, a pre-trained large language model from Aleph Alpha and a key part of the offering. Clients can customize the model with their own data to produce real-time insights. Aleph Alpha’s CEO, Jonas Andrulis, underscored the benefits of training Luminous with HPE’s supercomputers and AI software.

Harnessing the Might of HPE Cray XD Supercomputers

HPE GreenLake for LLMs will run on HPE Cray XD supercomputers, which are among the most powerful and energy-efficient machines in the world. The HPE Cray Programming Environment software suite is used to optimize HPC and AI applications on these systems. As a result, customers can avoid buying and maintaining their own supercomputers.

HPE is now accepting orders for HPE GreenLake for LLMs. The company expects wider availability by late 2023, starting in North America and followed by Europe.

The Potential Benefits and Implications

The advent of HPE GreenLake for Large Language Models could change how businesses handle data and make decisions. In healthcare, for example, LLMs could review medical records and patient data, aiding doctors in diagnoses and treatments. In finance, they could improve market trend prediction, helping analysts make better choices.

LLMs could also enhance customer service by powering AI agents that handle a wide variety of queries, freeing human agents to solve more complex issues. In industries like manufacturing and transportation, LLMs could identify inefficiencies and suggest improvements, leading to cost savings.

One of the most significant advantages of HPE GreenLake for LLMs is the quick transition from proof-of-concept to full-fledged production. This rapid implementation could benefit many industries by accelerating decision-making, conserving valuable resources, and enabling more innovative and efficient business practices.

With HPE GreenLake for LLMs, businesses should not have to invest as much time in testing. They can move swiftly from concept to deployed AI models, which could give them a competitive edge in their respective markets.

The sustainability feature of HPE GreenLake for LLMs is also noteworthy. By using nearly 100% renewable energy, HPE joins the fight against carbon emissions, setting a new benchmark for eco-friendly computing in the AI sector.

A Bright Horizon for Enterprise AI

Introducing HPE GreenLake for LLMs is a crucial step towards democratizing AI. By offering access to large language models for all businesses, HPE fosters growth and innovation across sectors. As AI continues to evolve and integrate into everyday life, services like HPE GreenLake for LLMs will be instrumental in driving this change.
