VMware's VSAN group recently announced a free storage performance testing tool for hyperconverged infrastructure (HCI): the HCI Benchmark, or HCIbench. While stressing traditional arrays with I/O or bandwidth tests is fairly well understood, applying synthetic tests to HCI gear requires a careful approach and comes with a steep learning curve. With traditional storage infrastructure, you set up your I/O generator of choice with a given workload profile, point it at some LUNs and let it rip. Hyperconverged testing requires a large-scale approach in which many VMs (often 10-64) apply that workload across multiple vDisks simultaneously, not to mention collecting all of that data when the task finishes. This approach is needed to adequately stress the platform and to move closer to, although not match, a real-world production setting. As you might imagine, this is hard for experienced reviewers to accomplish and can be impossible for many end-users to complete in a reasonable time frame. VMware's HCIbench makes this testing process much easier: anyone who can deploy a pre-built VM can now test HCI, and independent reviewers can test it better.

Before VMware launched this tool and shared it with us, we had some growing pains while scaling up our own tools to measure the performance of VSAN and other HCI platforms. Currently we use FIO from Windows and Linux systems (some physical, others VMs), pointing it at a local or remote (iSCSI, SMB, CIFS) volume and kicking off a single workload. With an SSD, SAN or NAS, you target the physical storage device, or multiple LUNs to stress a dual-controller platform, and can effectively stress it from a central point. One script kicks this off; as an example, here is a snippet of one of our FIO command lines:

    fio.exe --filename=\\.\PhysicalDrive1:\\.\PhysicalDrive2:\\.\PhysicalDrive3:\\.\PhysicalDrive4 --thread --direct=1 --rw=randrw --refill_buffers --norandommap --randrepeat=0 --ioengine=windowsaio --bs=4k --rwmixread=100 --iodepth=16 --numjobs=16 --time_based --runtime=60 --group_reporting --name=4ktest --output=4ktest.out

The reviewer or tester needs to modify the script correctly between tests to hit the right device and apply the right load, not to mention maintain separate scripts for different block-size workloads. Then add scripts inside scripts (you need loops for preconditioning or multiple loads!), port them to PowerShell or bash, and you can see how this quickly spirals out of control. To make matters worse, you need even more custom-written scripts to parse the benchmark-generated output files so you can graph and compare differences. As reviewers, we need these in our toolbox and use them daily. It generally takes months or years to build a good set of tools and to understand both their strengths and their weaknesses. A customer evaluating a new storage platform generally doesn't test storage for a living and would quickly be overwhelmed by all of this. HCIbench uses tools trusted by independent reviewers and makes them easy for anyone to use.
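To illustrate the scripting burden described above, here is a minimal Python sketch that rebuilds our FIO command line for a sweep of block sizes and read mixes. The flags are taken from the command shown earlier; the function name `fio_command`, the job-naming scheme and the particular sweep are hypothetical choices for illustration, and the script only prints the commands rather than running them.

```python
# Flags copied from the single-workload fio command above; only the block
# size, read mix, job name and output file change between runs.
BASE_FLAGS = [
    "--thread", "--direct=1", "--rw=randrw", "--refill_buffers",
    "--norandommap", "--randrepeat=0", "--ioengine=windowsaio",
    "--iodepth=16", "--numjobs=16", "--time_based", "--runtime=60",
    "--group_reporting",
]


def fio_command(targets, block_size, read_pct):
    """Return an argv list for one fio run against the given raw devices."""
    name = f"{block_size}_{read_pct}read"  # hypothetical naming scheme
    return (
        ["fio.exe", "--filename=" + ":".join(targets)]  # ':' separates files
        + BASE_FLAGS
        + [f"--bs={block_size}", f"--rwmixread={read_pct}",
           f"--name={name}", f"--output={name}.out"]
    )


if __name__ == "__main__":
    drives = [rf"\\.\PhysicalDrive{i}" for i in range(1, 5)]
    # Hypothetical sweep: the same random workload at several sizes/mixes.
    for bs, rd in [("4k", 100), ("4k", 0), ("8k", 70)]:
        print(" ".join(fio_command(drives, bs, rd)))
```

To actually run a sweep, each list could be passed to `subprocess.run(cmd, check=True)` on a machine with fio installed. Preconditioning loops and parsing each `.out` file would still be up to you, which is the overhead HCIbench takes off your plate.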

HCIbench simplifies hyperconverged testing. Instead of running standard testing tools with lots of scripted setup and manual data gathering, HCIbench automatically deploys multiple VMs running vdbench across your cluster. While the tool is initially aimed at the HCI market segment, it works just as well on traditional clusters backed by shared storage. The kicker is that VMware isn't pushing its own proprietary tool or its own workload profiles at the market. Instead, it opted to use the well-known and trusted vdbench as the workload generator, let the customer or tester use their own test profiles, and wrap a GUI around it to make comparing and contrasting results easier. The level of reporting detail is impressive: it covers not only the workload profiles but also ties into the hypervisor, pulling in its stats so you can see the cluster-wide impact of tests as they run.

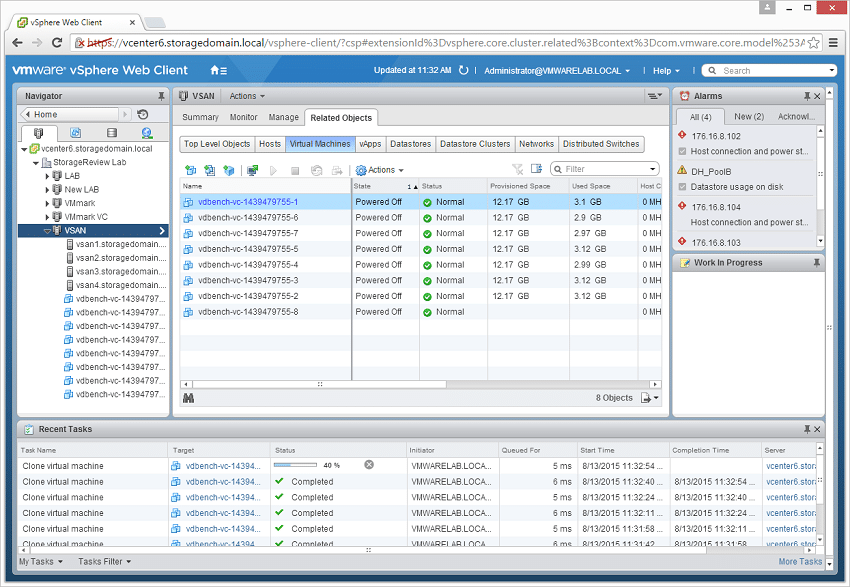

Using HCIbench is pretty easy as far as testing goes. You download the pre-built Auto-Perf-Tool OVA and deploy it into your VMware environment. Once it is powered on, it walks you through a few steps to obtain an IP address from your DHCP server or to assign a static one. After that, you move over to your web browser.

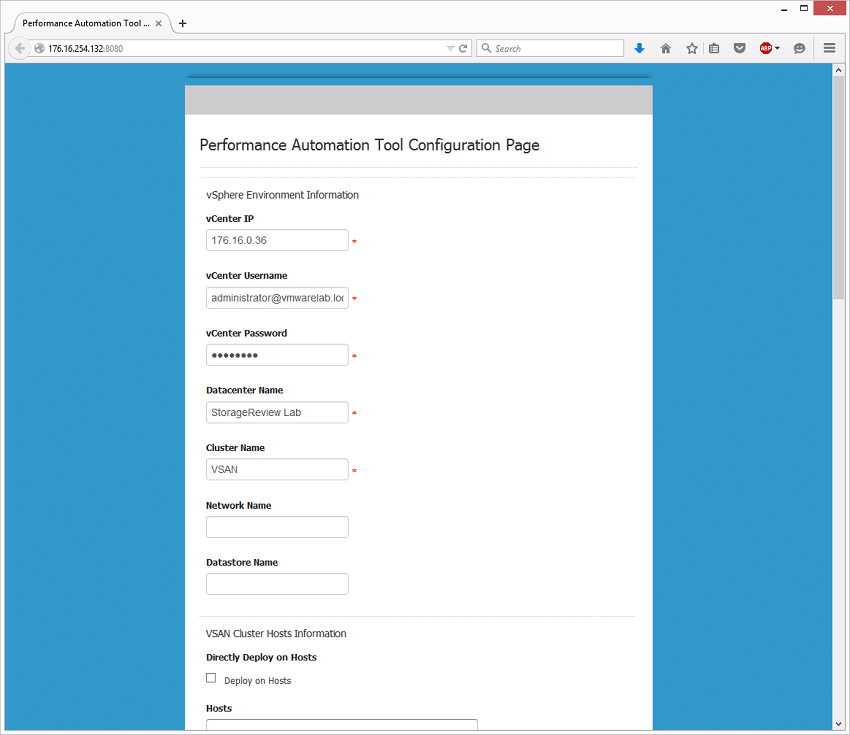

The configuration page is where you enter all the important details of your testing environment so the tool can automatically deploy its test VMs.

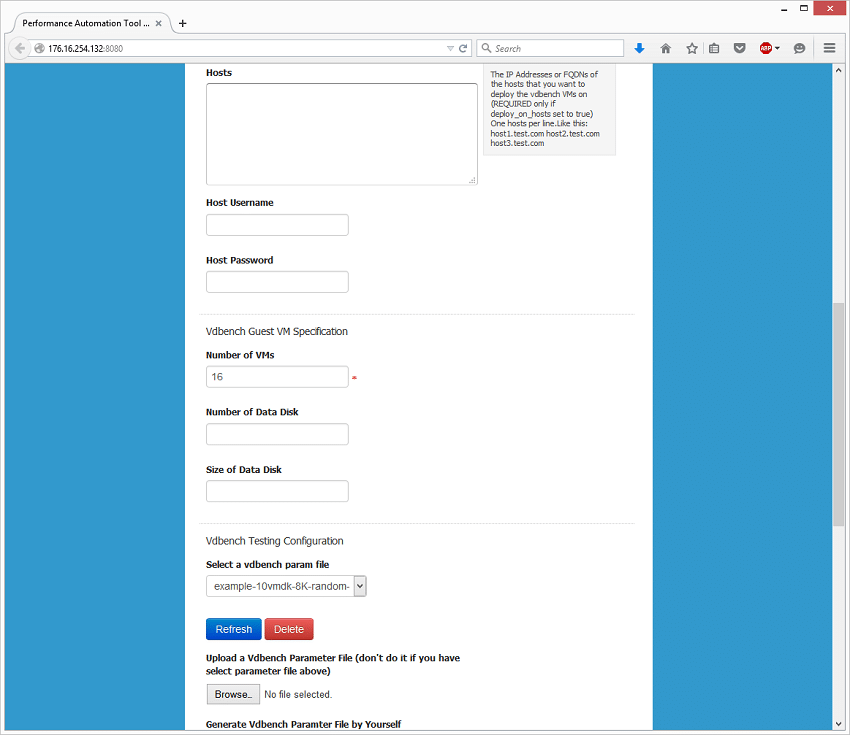

Scrolling down, you'll find areas to change the default deployment details. The Vdbench Guest VM Specification area controls how the tool deploys on your gear. For our 4-node VSAN platform we started with 16 VMs and kept the default number of data disks (10) and the default data disk size (10GB). With 16 VMs, that gives us a 1600GB footprint on our cluster (16 × 10 × 10GB), letting us measure the performance of VMs sitting well inside flash. Increasing those values, whether VM count, vdisk count or vdisk size, lets you push past those limits and measure how things react when the working set spills out of flash into your spinning-media tier, or, in the case of all-flash arrays, into the slower read-centric flash tier. For an end-user this is incredibly important, since many vendor-driven results show best-case performance, not performance as your working dataset grows.
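The footprint arithmetic above is simple enough to script when planning how far to push a cluster. This Python sketch is a hypothetical helper, not part of HCIbench: `FLASH_GB` is an invented placeholder for your own cluster's usable flash capacity, and the optional `copies` factor is a rough assumption for estimating raw consumption under a mirrored (FTT=1) VSAN policy.

```python
def footprint_gb(vms, disks_per_vm, disk_gb, copies=1):
    """Provisioned test data across all HCIbench guest VMs, in GB.

    copies: 1 gives the logical footprint; 2 roughly approximates raw
    capacity consumed under a mirrored (FTT=1, RAID-1) VSAN policy.
    """
    return vms * disks_per_vm * disk_gb * copies


def fits_in_flash(vms, disks_per_vm, disk_gb, flash_gb, copies=1):
    """True if the test footprint fits within the given flash capacity."""
    return footprint_gb(vms, disks_per_vm, disk_gb, copies) <= flash_gb


if __name__ == "__main__":
    FLASH_GB = 2000  # hypothetical usable flash capacity; use your own figure
    # Start from the defaults in the text, then double one knob at a time.
    for vms, disks, size in [(16, 10, 10), (32, 10, 10), (16, 10, 20)]:
        total = footprint_gb(vms, disks, size)
        fits = fits_in_flash(vms, disks, size, FLASH_GB)
        print(f"{vms} VMs x {disks} disks x {size}GB = {total}GB, fits: {fits}")
```

Doubling any single knob doubles the footprint, so sweeping VM count, vdisk count or vdisk size is an easy way to walk the working set from inside flash to well beyond it.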

Next, you can upload, select or build your own vdbench parameter file. For four-corners testing to measure peak I/O and peak bandwidth, we like to use 4K random read and write, as well as large-block sequential read and write for bandwidth. For a mixed workload, 8K random 70% read/30% write works well for comparing against industry-reported figures. To make this super-easy, we've created all five of these workload profiles and offered them up for download. They provide a starting point and are the profiles we will use in future reviews that leverage this tool. Want to copy our tests? Download the tool, apply these profiles and compare the full reporting stats.

StorageReview's HCIbench Workload Profiles

- 4K Random 100% read

- 4K Random 100% write

- 8K Random 70% read / 30% write

- 32K Sequential 100% read

- 32K Sequential 100% write
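For readers who want to build similar profiles by hand, below is a minimal Python sketch that writes vdbench parameter text for the five profiles above. It uses standard vdbench keywords (`sd`, `wd`, `rd`, `xfersize`, `rdpct`, `seekpct`, `iorate`, `elapsed`, `warmup`, `interval`), but it is not a copy of our downloadable files: the profile names, the guest device paths such as `/dev/sdb`, and the `threads`, `elapsed`, `warmup` and `interval` values are assumptions to adjust for your environment, and how HCIbench maps storage definitions onto each guest VM's data disks may differ.

```python
# Hypothetical profile names; values map to the five profiles listed above.
# seekpct=100 means fully random I/O, seekpct=0 means sequential.
PROFILES = {
    "4k_random_read":  {"xfersize": "4k",  "rdpct": 100, "seekpct": 100},
    "4k_random_write": {"xfersize": "4k",  "rdpct": 0,   "seekpct": 100},
    "8k_random_70_30": {"xfersize": "8k",  "rdpct": 70,  "seekpct": 100},
    "32k_seq_read":    {"xfersize": "32k", "rdpct": 100, "seekpct": 0},
    "32k_seq_write":   {"xfersize": "32k", "rdpct": 0,   "seekpct": 0},
}


def vdbench_params(profile, devices, elapsed=3600, warmup=1800,
                   interval=30, threads=2):
    """Return vdbench parameter-file text for one profile.

    devices: raw data-disk paths inside the guest (hypothetical, e.g. /dev/sdb).
    threads: outstanding I/Os per storage definition (assumed value).
    """
    p = PROFILES[profile]
    # One storage definition (sd) per data disk, bypassing the page cache.
    lines = [
        f"sd=sd{i},lun={dev},openflags=o_direct,threads={threads}"
        for i, dev in enumerate(devices, start=1)
    ]
    # One workload definition (wd) spread across all storage definitions.
    lines.append(
        f"wd=wd1,sd=sd*,xfersize={p['xfersize']},"
        f"rdpct={p['rdpct']},seekpct={p['seekpct']}"
    )
    # Run definition (rd): unthrottled I/O rate; warmup intervals are
    # excluded from the reported averages.
    lines.append(
        f"rd=run1,wd=wd1,iorate=max,elapsed={elapsed},"
        f"warmup={warmup},interval={interval}"
    )
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Example: the mixed profile against ten hypothetical data disks.
    disks = [f"/dev/sd{c}" for c in "bcdefghijk"]
    print(vdbench_params("8k_random_70_30", disks))
```

Keeping the workload and run definitions in one table makes it easy to confirm that all five profiles share the same duration and warmup, so results stay comparable across the four corners and the mixed test.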

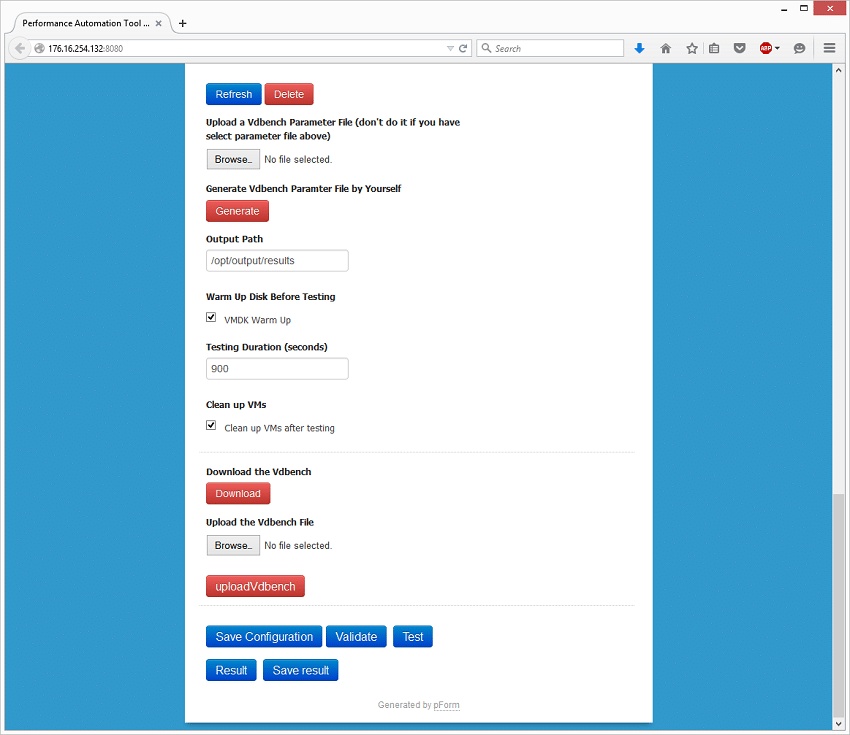

Once your workload is defined, scroll down and select any other settings you want to use. The VMDK Warm Up parameter is helpful for filling the newly created vDisks before testing. We've also added the "warmup" parameter to our shared workload files to further precondition the workload so hot data is migrated into flash. Longer test durations give cleaner, more stable results. If you want to try a few runs, start at 900 or 1800 seconds; for a more formal run, go for 6 hours (21600 seconds) or longer. The Clean up the VMs checkbox should be checked as well, since it lets HCIbench delete the VMs it created and deployed off your storage once the test completes. At the very bottom you will notice that you need to download the Vdbench binary and upload the complete ZIP file for the tool to function. Oracle's EULA restricts distribution of vdbench, so you need to download it yourself. The download link takes you to the Oracle site; download vdbench50403.zip and upload that file, still zipped, at the prompt. Lastly, save your configuration, validate it to make sure you didn't leave anything out, and start the test. Once it completes, you can review the results.
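Because the upload must be the original, still-zipped archive, a quick local pre-flight check can save a failed validation. The Python sketch below is a hypothetical helper, not part of HCIbench: it confirms the file has a `.zip` extension, opens as a valid ZIP, passes CRC checks, and contains a member named `vdbench.jar`. That last check is an assumption about the archive's contents; adjust `EXPECTED_MEMBER` if your download differs.

```python
import sys
import zipfile
from pathlib import Path

# Assumption: the Oracle vdbench archive contains a file with this name.
EXPECTED_MEMBER = "vdbench.jar"


def check_vdbench_archive(path):
    """Return a list of problems with the file you plan to upload (empty if OK)."""
    p = Path(path)
    problems = []
    if p.suffix.lower() != ".zip":
        problems.append(f"{p.name}: expected the original .zip file")
    if not zipfile.is_zipfile(p):
        problems.append(f"{p.name}: not a readable ZIP archive")
        return problems
    with zipfile.ZipFile(p) as zf:
        # testzip() returns the name of the first member with a bad CRC, or None.
        bad = zf.testzip()
        if bad is not None:
            problems.append(f"{p.name}: corrupt member {bad}")
        # Compare base names so a top-level folder inside the ZIP is tolerated.
        names = {n.rstrip("/").rsplit("/", 1)[-1] for n in zf.namelist()}
        if EXPECTED_MEMBER not in names:
            problems.append(f"{p.name}: {EXPECTED_MEMBER} not found")
    return problems


if __name__ == "__main__":
    for arg in sys.argv[1:]:
        issues = check_vdbench_archive(arg)
        print(arg, "OK" if not issues else "; ".join(issues))
```

Usage: `python check_vdbench.py vdbench50403.zip` (the script name is hypothetical). An empty problem list means the file at least looks like an intact, unextracted archive.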

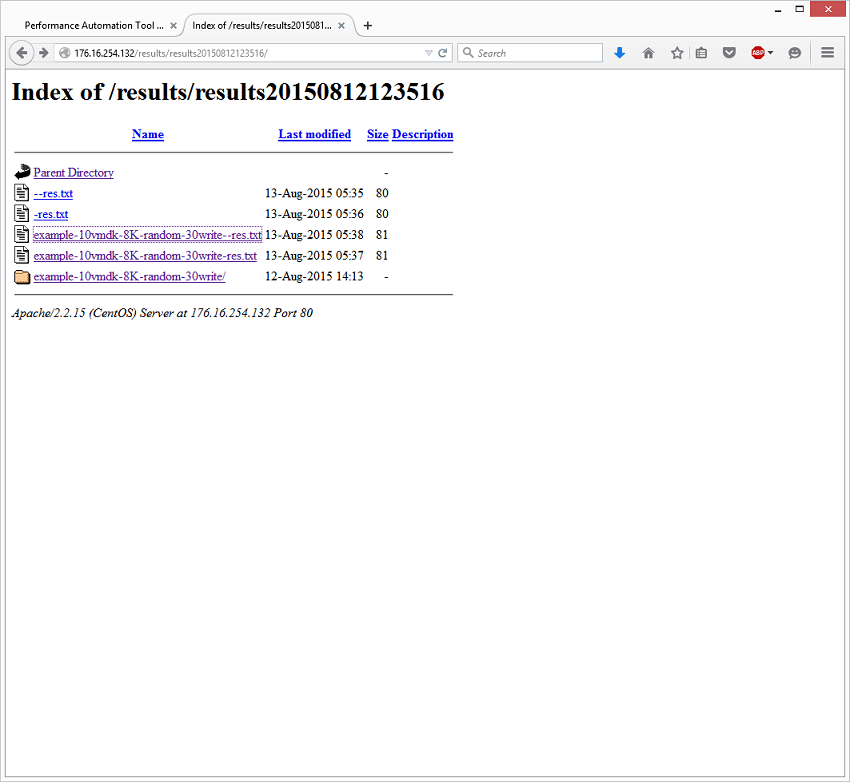

The results page drops you into a basic HTML file viewer.

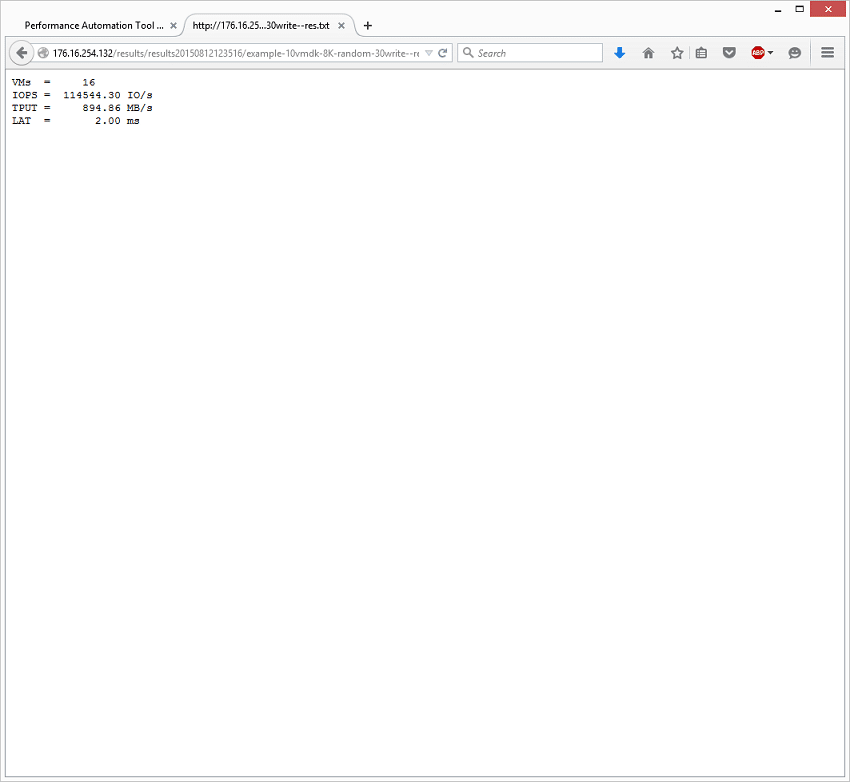

The first file presented is a very basic summary of the results. In our case, the VSAN workload hit 114k IOPS with an 8K 70/30 workload at an average latency of 2ms and a throughput of 984MB/s. This summary is generated from the vdbench output files pulled from all of the VMs deployed in the environment; users can dig into those files if they want to see second-by-second stats.
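To put the summary figures in context, Little's Law relates throughput, latency and concurrency: the average number of I/Os in flight equals IOPS multiplied by average latency. The Python sketch below applies it to the 114k IOPS and 2ms figures above. Dividing by 160 vdisks assumes the 16-VM, 10-disk configuration described earlier was the one used, and the result is a steady-state average, not a measured queue depth.

```python
def outstanding_ios(iops, avg_latency_s):
    """Little's Law: average I/Os in flight = throughput x time in system."""
    return iops * avg_latency_s


if __name__ == "__main__":
    # Figures from the HCIbench summary above.
    in_flight = outstanding_ios(114_000, 0.002)
    # Assumes the 16-VM x 10-data-disk layout described earlier.
    per_vdisk = in_flight / (16 * 10)
    print(f"~{in_flight:.0f} I/Os in flight cluster-wide, ~{per_vdisk:.1f} per vdisk")
```

Comparing the per-vdisk figure with the outstanding I/O your parameter file permits can hint at whether the load generator, rather than the storage, was the limiting factor.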

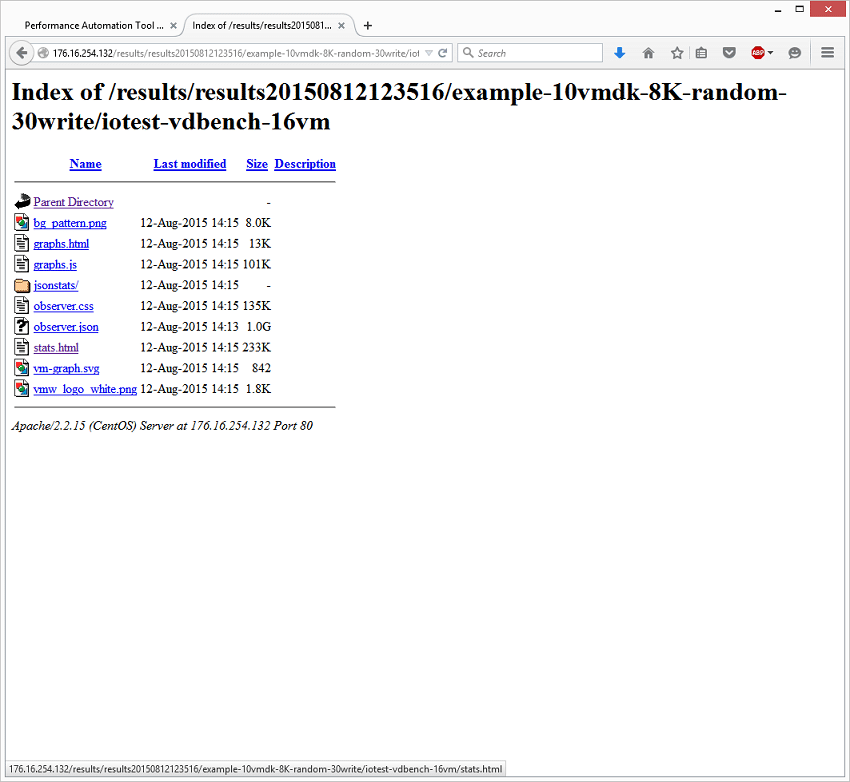

Moving into the iotest folder, click on the “stats.html” file.

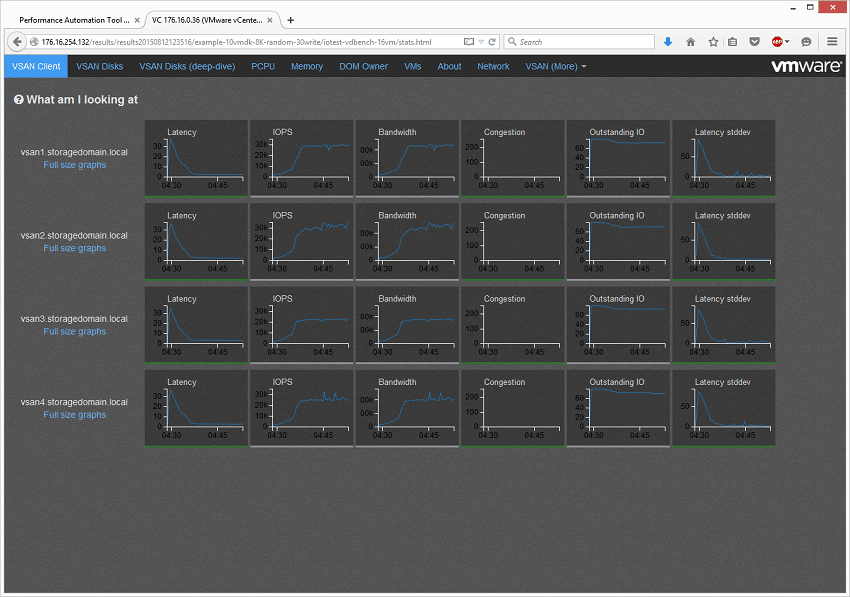

This brings up almost every detail you can think of in a clean GUI with automatically generated charts.

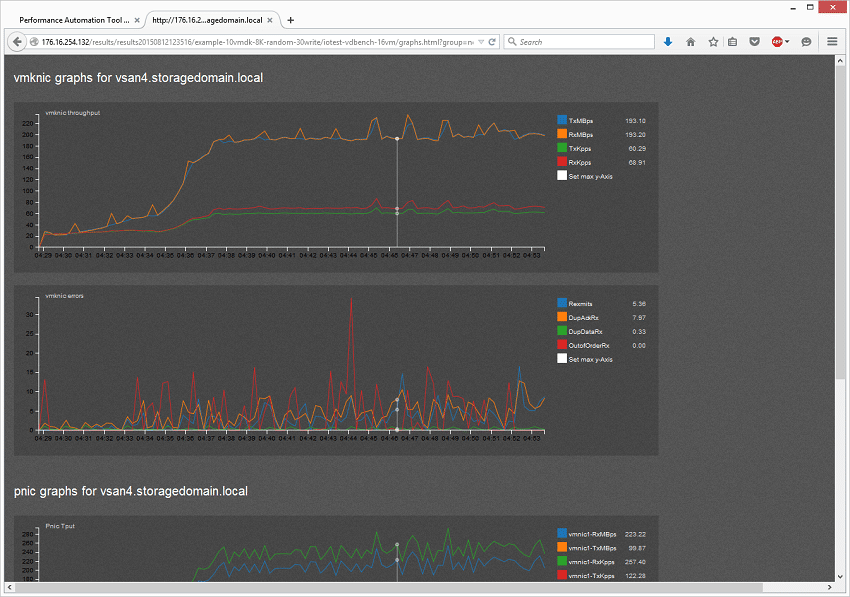

You can even drill into helpful stats such as how much data was pushed over the vmknic on each host, along with all of its associated information.

VMware has gone to impressive lengths to make synthetic HCI testing easier for an end-user running a POC, or even a reviewer like myself. It removes the difficulty and confusion of deploying synthetic workloads across a shared storage environment and gives you more detail than you can shake a stick at. It also shortens the testing time needed to compare two systems effectively, increasing your chances of making a better-informed decision during your POC window. At the end of the day, it's a wrapper around vdbench, an I/O generator known and trusted by the community, meaning there is less concern that VMware is up to shenanigans. The tool does have limitations: it can't replicate an application workload or a working production environment. Tools that can are worth adding to the comparison, but HCIbench offers a good starting point.