NVIDIA continues the evolution of the Data Processing Unit (DPU) with the release of the NVIDIA BlueField-2. The NVIDIA BlueField-2 DPU is touted as the data center infrastructure-on-a-chip and is optimized for enterprise cloud and HPC workloads. BlueField-2 combines the NVIDIA ConnectX-6 Dx network adapter with an array of Arm cores and infrastructure-specific offloads, and offers purpose-built hardware-acceleration engines with full software programmability. Brian had a discussion earlier this year with NVIDIA on one of his regular podcasts. You can get some NVIDIA DPU detail here.

The features are impressive, but first, let’s take a look at the evolution of the DPU. If you are not into the history, you can skip down to the details for the NVIDIA BlueField-2. It started in the ’90s, when Intel x86 processors, combined with an OS, delivered unmatched power to enterprises. Next came client/server computing, then the advent of distributed processing. Software development and growing databases accelerated rapidly, causing an explosion of hardware deployment in the data center.

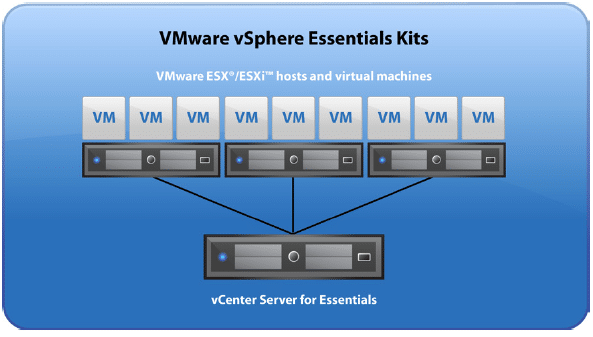

In the early 2000s, VMware introduced the ESX hypervisor and virtualized the x86 CPU, allowing multiple operating system instances to run on a single server. VMs were not necessarily new; IBM ran virtual machines on its mainframes for many years before VMware created the ESX hypervisor. However, this development led to growth in data center infrastructure aggregation.

Hardware was now programmable with developers writing code that defined and provisioned virtual machines without manual intervention. This led to the eventual push for migration to cloud computing.

VMware realized the success of its ESX platform and moved quickly into storage and network virtualization. Not to be outdone, EMC teamed up with Cisco to build their own virtualized network and storage solution. A flurry of acquisitions took place. VMware developed vSAN, integrated into its vSphere platform.

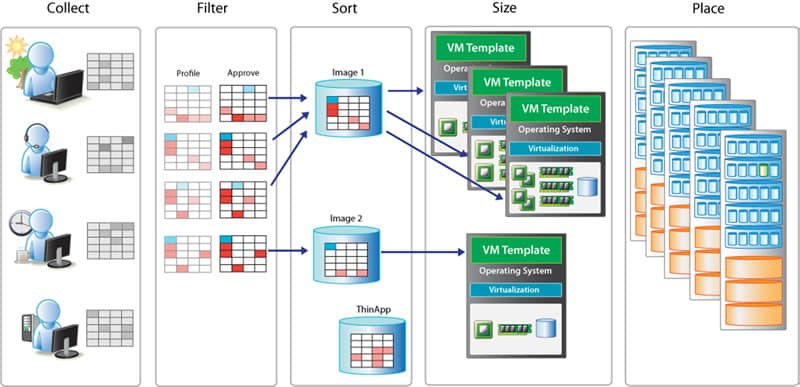

This converged infrastructure is called the Software-Defined Data Center (SDDC). The big players, Microsoft, VMware, Cisco, and EMC, all scrambled to win the SDDC market. Everything became programmable: I/O, security, OS, applications, etc. However, the SDDC ran entirely on the CPU, taxing resources that were needed for other services.

All this convergence and programmability led to Artificial Intelligence (AI) development, where GPUs were developed to address the processing requirements of these graphics-intensive applications. This, in turn, led to hardware that offloads some operations from the CPU. Networking functions, typically CPU-intensive, were offloaded, and NVIDIA jumped on this new opportunity, acquiring Mellanox to develop smart network adapters.

GPUs became smarter, and smart NICs were pivotal in removing network and graphics processing from the overall SDDC aggregation. Ultimately, the DPU is the result of offloading intelligence from the CPU.

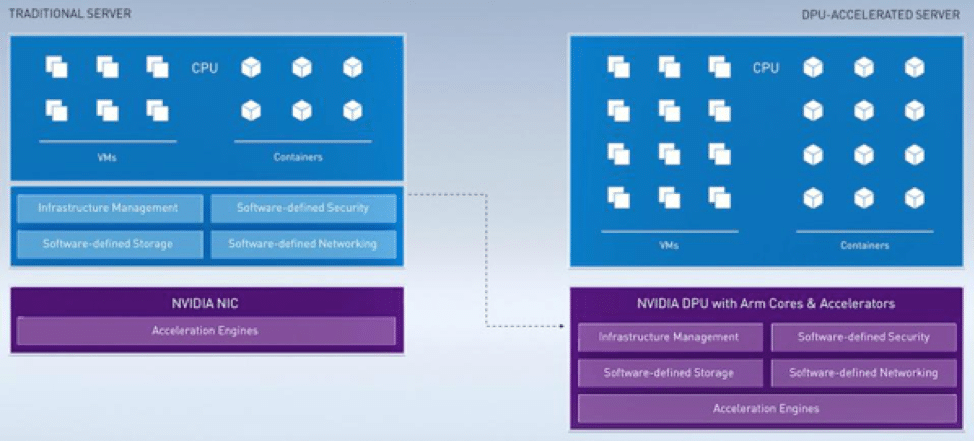

The new NVIDIA BlueField-2 DPU is a system on a chip that combines a multi-core CPU, a high-performance network interface, and programmable acceleration engines.

NVIDIA BlueField-2 DPU – The Meat

CPU v GPU v DPU: What Makes a DPU Different?

A DPU is a new class of programmable processor: a system on a chip, or SoC, that combines three key elements:

- An industry-standard, high-performance, software-programmable, multi-core CPU, typically based on the widely used Arm architecture, tightly coupled to the other SoC components.

- A high-performance network interface capable of parsing, processing and efficiently transferring data at line rate, or the speed of the rest of the network, to GPUs and CPUs.

- A rich set of flexible and programmable acceleration engines that offload and improve applications performance for AI and machine learning, security, telecommunications, and storage, among others.

The NVIDIA® BlueField®-2 DPU is the first data center infrastructure-on-a-chip optimized for modern cloud and HPC. It delivers a broad set of accelerated software-defined networking, storage, security, and management services, with the ability to offload, accelerate, and isolate data center infrastructure. Equipped with 200Gb/s Ethernet or InfiniBand connectivity, the BlueField-2 DPU accelerates the network path for both the control plane and the data plane and is armed with “zero trust” security to prevent data breaches and cyber-attacks.

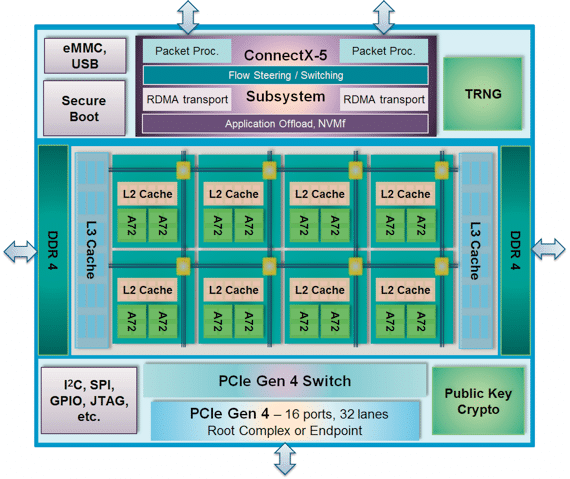

BlueField-2 combines the NVIDIA ConnectX®-6 Dx network adapter with an array of Arm® cores and infrastructure-specific offloads, offering purpose-built hardware-acceleration engines with full software programmability. Sitting at the edge of every server, BlueField-2 empowers agile, secured, and high-performance cloud and artificial intelligence (AI) workloads, and reduces TCO while increasing data center efficiency.

The NVIDIA DOCA™ software framework enables developers to rapidly create applications and services for the BlueField-2 DPU. NVIDIA DOCA leverages DPU hardware accelerators, increasing data center performance, efficiency and security.

NVIDIA BlueField-2 DPU Features

| Category | Features |
| --- | --- |
| Network interfaces | Ethernet: dual ports of 10/25/50/100Gb/s, or a single port of 200Gb/s; InfiniBand: dual ports of EDR / HDR100, or a single port of HDR |
| PCI Express interface | 8 or 16 lanes of PCIe Gen 4.0; PCIe switch bifurcation with 8 downstream ports |
| Arm cores (ARM/DDR subsystem) | Up to 8 Armv8 A72 cores (64-bit) pipeline; 1MB L2 cache per 2 cores; 6MB L3 cache with plurality of eviction policies |
| DDR4 DIMM support (ARM/DDR subsystem) | Single DDR4 DRAM controller; 8GB / 16GB / 32GB of onboard DDR4; ECC error protection support |
| Security (hardware accelerations) | Secure boot with hardware root-of-trust; secure firmware update; Cerberus compliant; regular expression (RegEx) acceleration; IPsec/TLS data-in-motion encryption (AES-GCM 128/256-bit key); AES-XTS 256/512-bit data-at-rest encryption; SHA 256-bit hardware acceleration; hardware public key accelerator (RSA, Diffie-Hellman, DSA, ECC, EC-DSA, EC-DH); true random number generator (TRNG) |
| Storage | BlueField SNAP (NVMe™ and VirtIO-blk); NVMe-oF™ acceleration; compression and decompression acceleration; data hashing and deduplication; M.2 / U.2 connectors for direct-attached storage |
| Networking | RoCE, Zero Touch RoCE; stateless offloads for TCP/UDP/IP, LSO/LRO/checksum/RSS/TSS/HDS, and VLAN insertion/stripping; SR-IOV; VirtIO-net; multi-function per port; VMware NetQueue support; virtualization hierarchies; 1K ingress and egress QoS levels |
| Boot options | Secure boot (RSA authenticated); remote boot over Ethernet; remote boot over iSCSI; PXE and UEFI |
| Management | 1GbE out-of-band management port; NC-SI, MCTP over SMBus, and MCTP over PCIe; PLDM for Monitor and Control DSP0248; PLDM for Firmware Update DSP026; I2C interface for device control and configuration; SPI interface to flash; eMMC memory controller; UART; USB |

A DPU For Storage, Networks, And Machine Learning

Let’s take a look at how the new BlueField-2 tackles fast storage technologies. BlueField offers a complete solution for storage platforms, such as NVMe over Fabrics (NVMe-oF), All-Flash Array (AFA) and a storage controller for JBOF, server caching (memcached), disaggregated rack storage, and scale-out direct-attached storage. The smarts in this DPU make it a flexible choice.

NVIDIA has posted the impressive results of their test for the BlueField-2 here. The test environment is included in the blog.

Complete Storage Solution

BlueField-2 utilizes the processing power of Arm cores for storage applications such as All-Flash Arrays using NVMe-oF, Ceph, Lustre, iSCSI/TCP offload, Flash Translation Layer, data compression/decompression, and deduplication.

In high-performance storage arrays, BlueField-2 functions as the system’s main CPU, handling storage controller tasks and traffic termination. It can also be configured as a co-processor, offloading specific storage tasks from the host, isolating part of the storage media from the host, or enabling abstraction of software-defined storage logic using the BlueField Arm cores.

NVMe over Fabrics Capabilities

Utilizing the advanced capabilities of NVMe-oF, BlueField RDMA-based technology delivers remote storage access performance equal to that of local storage, with minimal CPU overhead, enabling efficient disaggregated storage and hyper-converged solutions.

Storage Acceleration

The BlueField embedded PCIe switch enables customers to build standalone storage appliances and connect a single BlueField to multiple storage devices without an external switch.

Signature Handover

The BlueField embedded network controller enables hardware checking of T10 Data Integrity Field/Protection Information (T10-DIF/PI), reducing software overhead and accelerating delivery of data to the application. Signature handover is handled by the adapter on ingress and egress packets, reducing the load on the software at the Initiator and Target machines.
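To make the offloaded work concrete, here is a minimal software sketch of the check that T10-DIF protection information implies for each data block. It assumes the common 8-byte layout (a 2-byte CRC-16 guard tag using polynomial 0x8BB7, a 2-byte application tag, and a 4-byte reference tag). The function names `crc16_t10dif`, `make_pi`, and `verify_pi` are hypothetical and are not part of any BlueField or DOCA API; the DPU performs the equivalent check in hardware.

```python
import struct

T10_DIF_POLY = 0x8BB7  # CRC-16 polynomial used for the T10-DIF guard tag


def crc16_t10dif(data: bytes) -> int:
    """Bitwise CRC-16 (initial value 0, no bit reflection, no final XOR)."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ T10_DIF_POLY) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc


def make_pi(block: bytes, app_tag: int, ref_tag: int) -> bytes:
    """Build the 8-byte tuple: guard (2), app tag (2), ref tag (4), big-endian."""
    return struct.pack(">HHI", crc16_t10dif(block), app_tag, ref_tag)


def verify_pi(block: bytes, pi: bytes, expected_ref_tag: int) -> bool:
    """Recompute the guard tag and compare the reference tag, as a target would."""
    guard, _app_tag, ref_tag = struct.unpack(">HHI", pi)
    return guard == crc16_t10dif(block) and ref_tag == expected_ref_tag


if __name__ == "__main__":
    sector = bytes(range(256)) * 2          # one hypothetical 512-byte block
    pi = make_pi(sector, app_tag=0, ref_tag=42)
    print(verify_pi(sector, pi, expected_ref_tag=42))
```

Running this loop per block on the initiator and target CPUs is the software overhead that hardware signature handover is meant to remove.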

BlueField for Networking & Security

Whether in the form of a SmartNIC or as a standalone network platform, the new BlueField-2 provides an efficient deployment of networking applications. Using a combination of advanced offloads and Arm compute capabilities, BlueField terminates network and security protocols inline.

BlueField SmartNIC

Used as a network adapter, BlueField gives you the flexibility to fully or partially implement the data and control planes, unlocking more efficient use of compute resources. The programmability of the adapter provides the ability to integrate new data and control plane functionality.

BlueField Security Features

When it comes to security, the integration of encryption offloads for symmetric and asymmetric crypto operations makes it a great choice for implementing security applications. Security is built into the DNA of the data center infrastructure, reducing threat exposure, minimizing risk, and enabling prevention, detection, and response to potential threats in real-time.

Painless Virtualization

With PCIe SR-IOV technology from NVIDIA, data center administrators will benefit from better server utilization while reducing cost, power, and cable complexity, allowing more virtual machines and more tenants on the same hardware. This certainly addresses any TCO concerns.

Overlay Networks

Data center operators use network overlay technologies (VXLAN, NVGRE, GENEVE) to overcome scalability barriers. By providing advanced offloading engines that encapsulate and decapsulate the overlay protocol headers, this DPU allows the traditional offloads to operate on the tunneled protocols and also offloads NAT routing capabilities.
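As an illustration of what encapsulation and decapsulation involve, the sketch below builds and parses the 8-byte VXLAN header in software, following the layout in RFC 7348 (a flags byte with the VNI-valid bit, reserved bits, and a 24-bit VXLAN Network Identifier). The helper names `vxlan_encap` and `vxlan_decap` are hypothetical, the outer Ethernet/IP/UDP headers are omitted for brevity, and none of this is a BlueField or DOCA API; the DPU does this work in its offload engines.

```python
import struct
from typing import Tuple

VXLAN_FLAG_VNI_VALID = 0x08   # "I" flag: the VNI field is valid
VXLAN_UDP_PORT = 4789         # IANA-assigned destination port for VXLAN
VXLAN_HEADER_LEN = 8


def vxlan_encap(inner_frame: bytes, vni: int) -> bytes:
    """Prepend a VXLAN header to an inner Ethernet frame."""
    if not 0 <= vni < 2 ** 24:
        raise ValueError("VNI must fit in 24 bits")
    # Word 0: flags (8 bits) + reserved (24 bits); word 1: VNI (24 bits) + reserved (8 bits)
    header = struct.pack(">II", VXLAN_FLAG_VNI_VALID << 24, vni << 8)
    return header + inner_frame


def vxlan_decap(payload: bytes) -> Tuple[int, bytes]:
    """Strip the VXLAN header and return (vni, inner_frame)."""
    if len(payload) < VXLAN_HEADER_LEN:
        raise ValueError("payload shorter than a VXLAN header")
    word0, word1 = struct.unpack(">II", payload[:VXLAN_HEADER_LEN])
    if not (word0 >> 24) & VXLAN_FLAG_VNI_VALID:
        raise ValueError("VNI-valid flag not set")
    return word1 >> 8, payload[VXLAN_HEADER_LEN:]


if __name__ == "__main__":
    packet = vxlan_encap(b"inner ethernet frame", vni=5000)
    print(vxlan_decap(packet))
```

Once the tunnel header is stripped, stateless offloads such as checksum and RSS can be applied to the inner packet, which is the point the paragraph above makes about offloads operating on tunneled protocols.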

BlueField for Machine Learning Environments

Of course, NVIDIA has addressed the AI/ML market with this new DPU providing cost-effective and integrative solutions for Machine Learning appliances. Multiple GPUs can be connected via the PCIe Gen 3.0/4.0 interface. With its RDMA and GPUDirect® RDMA technologies, BlueField-2 offers efficient data delivery for real-time analytics and data insights.

RDMA Acceleration

The network controller data path hardware utilizes RDMA and RoCE technology, delivering low latency and high throughput with near-zero CPU cycles.

BlueField for Multi-GPU Platforms

BlueField-2 enables the attachment of multiple GPUs through its integrated PCIe switch. BlueField PCIe 4.0 support is future-proofed for next-generation GPU devices.

PeerDirect®

PeerDirect, a Mellanox product, is an accelerated communication architecture that supports peer-to-peer communication between BlueField and third-party hardware such as GPUs (e.g., NVIDIA GPUDirect RDMA), co-processor adapters (e.g., Intel Xeon Phi), or storage adapters. PeerDirect provides a standardized architecture where devices can directly communicate to remote devices across the fabric, avoiding unnecessary system memory copies and CPU overhead by copying data directly to/from devices.

GPUDirect RDMA Technology

The rapid increase in the performance of graphics hardware, coupled with recent improvements in GPU programmability, has made graphics accelerators a compelling platform for computationally demanding tasks in a wide variety of application domains. Since GPUs provide high core counts and floating-point capabilities, high-speed networking is required between platforms to provide high throughput and the lowest latency for GPU-to-GPU communications. GPUDirect RDMA is a technology implemented within BlueField-2 and NVIDIA GPUs that enables a direct path for data exchange between GPUs and the high-speed interconnect.

GPUDirect RDMA provides order-of-magnitude improvements for both communication bandwidth and communication latency between GPU devices of different cluster nodes.

Conclusion

The NVIDIA testing revealed the following performance characteristics of the BlueField DPU:

- Testing with smaller 512B I/O sizes resulted in higher IOPS but lower-than-line-rate throughput, while 4KB I/O sizes resulted in higher throughput but lower IOPS numbers.

- 100 percent read and 100 percent write workloads provided similar IOPS and throughput, while 50/50 mixed read/write workloads produced a higher performance by using both directions of the network connection simultaneously.

- Using SPDK resulted in higher performance than kernel-space software, but at the cost of higher server CPU utilization, which is expected behavior, since SPDK runs in userspace with constant polling.

- The newer Linux 5.15 kernel performed better than the 4.18 kernel due to storage improvements added regularly by the Linux community.
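The trade-off between IOPS and throughput in the first bullet follows from simple arithmetic: throughput is IOPS multiplied by I/O size. The sketch below works this out under stated assumptions: a 200Gb/s link (the connectivity figure quoted earlier in this article), no protocol overhead, and hypothetical helper names. It is an illustration, not a model of NVIDIA's test setup.

```python
def max_iops(line_rate_gbps: float, io_size_bytes: int) -> float:
    """Upper bound on IOPS in one direction if every bit on the wire were payload."""
    return line_rate_gbps * 1e9 / (io_size_bytes * 8)


def throughput_gbps(iops: float, io_size_bytes: int) -> float:
    """Payload throughput in Gb/s for a given IOPS rate and I/O size."""
    return iops * io_size_bytes * 8 / 1e9


if __name__ == "__main__":
    for size in (512, 4096):
        print(f"{size}B I/O: at most {max_iops(200, size) / 1e6:.2f}M IOPS at 200Gb/s")
    # Example: a hypothetical 5 million IOPS at 4KB
    print(f"{throughput_gbps(5e6, 4096):.2f} Gb/s")
```

At 512B, saturating the link would take roughly 48.8 million IOPS in one direction, so the per-I/O processing cost tends to become the limit before line rate is reached. At 4KB, about 6.1 million IOPS already fills the link, so throughput peaks while IOPS stays lower. A mixed read/write workload can use both directions of a full-duplex link, which is consistent with the second bullet.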

Overall, the results from NVIDIA's internal test are impressive. The BlueField-2 reached 41.5 million IOPS, which, according to NVIDIA, is more than four times that of any other DPU on the market today.

Standard networking results were also impressive. The DPU clocked more than five million 4KB IOPS and seven million to over 20 million 512B IOPS for NVMe-oF. If you are looking to improve overall performance in the data center, this DPU should fit the bill.
