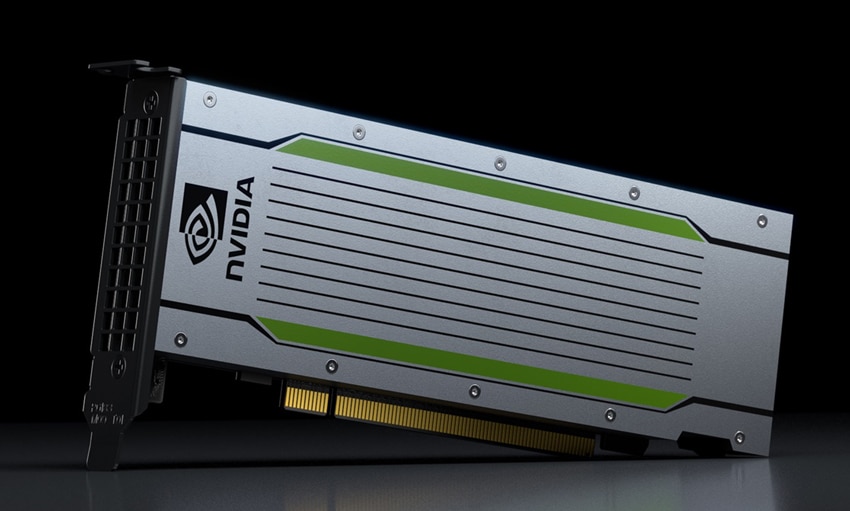

Today at the GPU Technology Conference, NVIDIA introduced its CUDA-X AI platform, which it calls the only end-to-end platform for the acceleration of data science. Alongside this, several major vendors have announced NVIDIA T4 servers architected to run CUDA-X AI accelerated data analytics, machine learning, and deep learning. NVIDIA T4 GPU instances paired with NVIDIA CUDA-X AI are coming to AWS Marketplace, and NVIDIA CUDA-X AI acceleration libraries are now available on Microsoft Azure.

As more and more businesses look to AI and its potential, NVIDIA is releasing CUDA-X AI to unlock the flexibility of its Tensor Core GPUs, which are designed to address the end-to-end AI pipeline. CUDA-X AI is a collection of specialized acceleration libraries that NVIDIA states can speed up machine learning and data science workloads by as much as 50x. GPUs can accelerate the computing required to process the large volumes of data associated with AI.

Several vendors, including Cisco, Dell EMC, Fujitsu, HPE, Inspur, Lenovo, and Sugon, are announcing NVIDIA NGC-Ready validated T4 servers that are fine-tuned to run NVIDIA CUDA-X AI acceleration libraries. The NGC-Ready program features a select set of systems powered by NVIDIA GPUs with Tensor Cores that are suited to a wide range of AI workloads. These servers include:

- Cisco UCS C240 M5

- Dell EMC PowerEdge R740/R740xd

- Fujitsu PRIMERGY RX2540 M5

- HPE ProLiant DL380 Gen10

- Inspur NF5280M5

- Lenovo ThinkSystem SR670

- Sugon W760-G30

Several other partners are working on the NGC validation process for their own T4 servers.

On the T4 front, AWS announced new Amazon Elastic Compute Cloud (EC2) G4 instances featuring NVIDIA T4 Tensor Core GPUs. AWS customers will be able to pair these G4 instances with NVIDIA GPU acceleration software, including NVIDIA CUDA-X AI libraries for accelerating deep learning, machine learning and data analytics. The new instances are also supported by Amazon Elastic Container Service for Kubernetes and support next-gen computer graphics for creative professionals.

Microsoft Azure Machine Learning (AML) service has integrated RAPIDS, a key component of NVIDIA CUDA-X AI. Data scientists can use RAPIDS on AML service to dramatically reduce the time it takes to train their AI models, cutting training times from days to hours or from hours to minutes, depending on dataset size.

Availability

CUDA-X AI acceleration libraries are freely available as individual downloads or as containerized software stacks from the NVIDIA NGC software hub. The AWS G4 instances are expected to be available in the next few weeks. RAPIDS on AML service is available now from Microsoft Azure.
