NVIDIA unleashed a torrent of exciting announcements at Computex 2025. The company unveiled new technologies spanning semi-custom AI infrastructure, enterprise AI factories, democratized AI development tools, and advanced platforms for humanoid robotics, signaling a significant acceleration in the fusion of AI into every facet of computing and industry.

NVLink Fusion: Enabling a New Era of Semi-Custom AI Infrastructure

One of the major announcements at Computex was NVIDIA opening the doors to NVLink. With NVIDIA NVLink Fusion, the company provides IP and a new silicon foundation that allows industries to build semi-custom AI infrastructure by integrating their technologies with its advanced interconnects.

Customers can develop custom ASICs or connect their CPUs directly to the high-performance scale-up fabric. For instance, companies can pair their custom CPUs with NVIDIA GPUs using C2C links, or conversely, integrate their specialized AI ASICs with NVIDIA CPUs such as Grace or the upcoming Vera, all interconnected via NVLink. The current-generation chip-to-chip interface offers 900GB/s of bidirectional bandwidth between the CPU and the GPU, while the broader fifth-generation NVLink platform provides 1.8TB/s of bidirectional bandwidth per GPU.
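To put the two bandwidth figures side by side, here is a rough back-of-the-envelope sketch. It uses only the 900GB/s and 1.8TB/s numbers quoted above. The symmetric-link split, the 140 GB payload, and the function names are illustrative assumptions, not NVIDIA specifications, and real transfers will be slower once protocol overhead and latency are counted.

```python
# Figures quoted in the text, in GB/s of bidirectional bandwidth.
C2C_BIDIR_GB_S = 900.0        # chip-to-chip link between CPU and GPU
NVLINK5_BIDIR_GB_S = 1800.0   # fifth-generation NVLink, per GPU (1.8 TB/s)


def per_direction(bidir_gb_s: float) -> float:
    """Assumption: the link is symmetric, so each direction gets half."""
    return bidir_gb_s / 2.0


def ideal_transfer_seconds(payload_gb: float, bidir_gb_s: float) -> float:
    """Best-case one-way transfer time, ignoring overhead and latency."""
    if payload_gb < 0:
        raise ValueError("payload_gb must be non-negative")
    return payload_gb / per_direction(bidir_gb_s)


# Hypothetical payload: 70 billion parameters at 2 bytes each = 140 GB.
PAYLOAD_GB = 70e9 * 2 / 1e9

if __name__ == "__main__":
    for name, bw in [("C2C", C2C_BIDIR_GB_S), ("NVLink 5", NVLINK5_BIDIR_GB_S)]:
        secs = ideal_transfer_seconds(PAYLOAD_GB, bw)
        print(f"{name}: {per_direction(bw):.0f} GB/s per direction, "
              f"{secs:.3f} s to move {PAYLOAD_GB:.0f} GB")
```

Under these assumptions the NVLink figure is exactly twice the C2C figure, so the same payload moves in half the time.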

This is exciting news for the industry. Companies building AI accelerators now have a compelling choice: they can opt for emerging open standards like UALink, which, while promoting openness, currently lag NVIDIA’s established interconnect speeds and are still under development. Alternatively, with NVLink Fusion, they can integrate with the fastest scale-up interconnect available right now, gaining immediate access to cutting-edge performance and NVIDIA’s comprehensive and mature software suite.

Many industry partners are already jumping on board. Key players adopting NVLink Fusion to create custom AI silicon and specialized infrastructure include ASIC and IP partners MediaTek, Marvell, Alchip Technologies, Astera Labs, Synopsys, and Cadence. Furthermore, CPU innovators Fujitsu and Qualcomm Technologies also plan to build custom CPUs that couple with NVIDIA GPUs and the NVLink ecosystem.

RTX PRO Blackwell Servers: Fueling Enterprise AI Factories

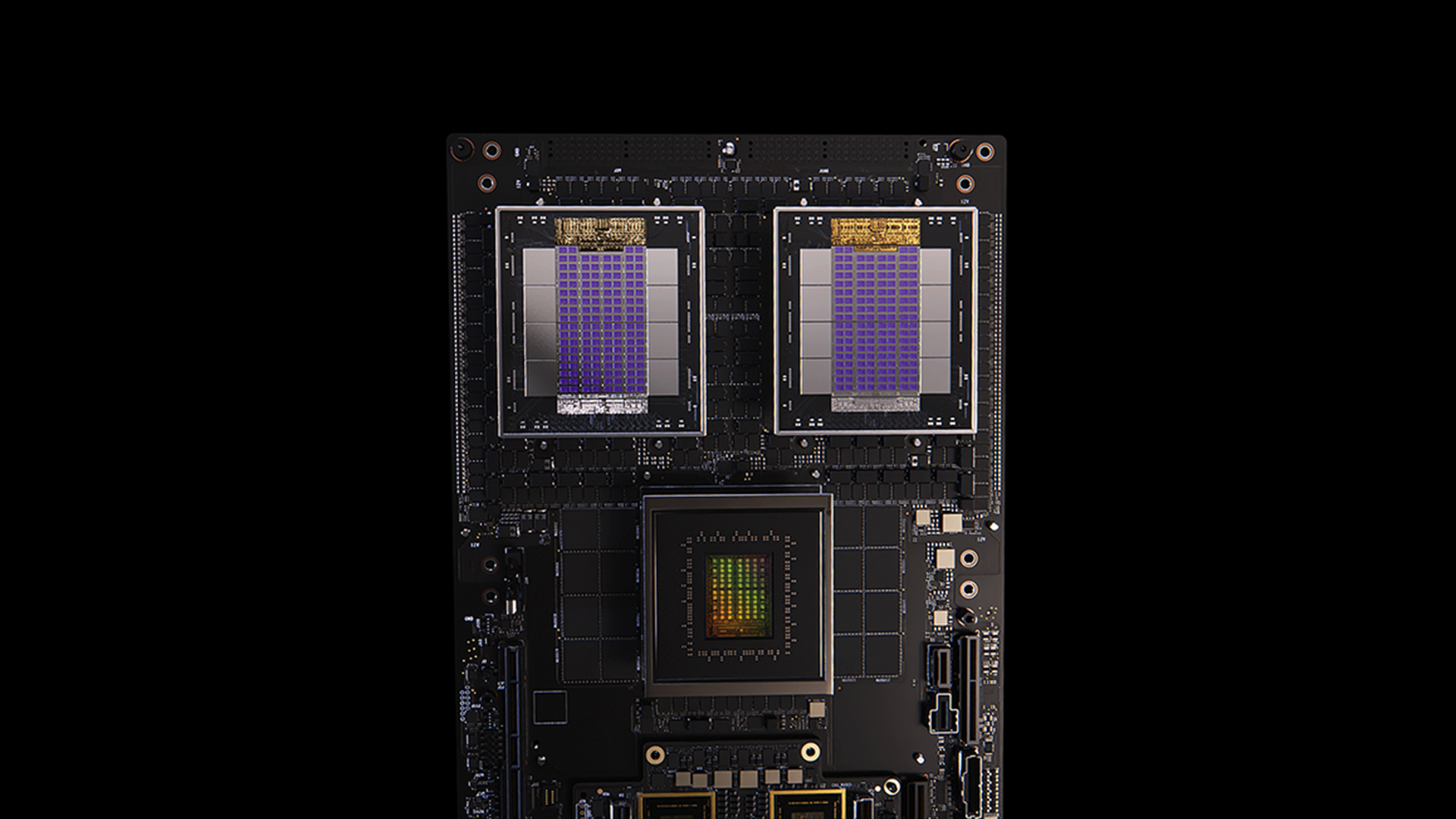

Another pivotal announcement from NVIDIA at Computex centered on revolutionizing enterprise AI with the NVIDIA RTX PRO Blackwell servers. While the core GPU, the RTX PRO 6000 Blackwell, shares its robust architecture with its workstation counterpart, its integration into a more standard server design is significantly enhanced by a unique innovation: the NVIDIA ConnectX-8 (CX8), which Jensen Huang described as “a switch first, networking chip second.”

The true breakthrough lies in how CX8 facilitates high-speed east-west traffic directly between the GPUs within the server and across multiple servers. As Huang mentioned, this capability allows the RTX PRO 6000 GPUs to communicate efficiently for demanding inference workloads, mirroring the high-bandwidth interconnectivity seen with NVLink on the flagship Blackwell GB200 and GB300 AI factory systems. This direct GPU-to-GPU communication, bypassing slower traditional pathways, makes the RTX PRO Blackwell servers an exceptional value proposition for enterprise inferencing. When paired with software like NVIDIA Dynamo, these servers deliver highly efficient and scalable AI agent deployment and operation.

We also got a look at the actual board. It features eight PCIe slots to house the RTX PRO 6000 Blackwell GPUs, alongside eight PCIe connections linking back to the main motherboard. The star of the show, however, is the set of eight 800Gbps networking connections powered by four CX8 chips. These high-speed ports enable robust inter-server communication, allowing enterprises to scale their inference capabilities by networking multiple RTX PRO Blackwell servers into powerful clusters.
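The board-level numbers add up quickly. The sketch below does the arithmetic using only the counts quoted above (eight GPUs, eight 800Gbps ports, four CX8 chips). The even split of ports across chips and GPUs is an assumption made for illustration, and the figures are raw line rates rather than measured throughput.

```python
# Counts quoted in the text.
GPU_SLOTS = 8          # PCIe slots for RTX PRO 6000 Blackwell GPUs
NETWORK_PORTS = 8      # 800Gbps networking connections
PORT_GBPS = 800        # line rate per port, in gigabits per second
CX8_CHIPS = 4          # ConnectX-8 chips driving those ports


def aggregate_gbps(ports: int, gbps_per_port: int) -> int:
    """Total raw line rate across all ports, in Gbps."""
    return ports * gbps_per_port


def gbps_to_gigabytes_per_s(gbps: float) -> float:
    """Convert gigabits per second to gigabytes per second (8 bits per byte)."""
    return gbps / 8.0


if __name__ == "__main__":
    total = aggregate_gbps(NETWORK_PORTS, PORT_GBPS)
    print(f"Aggregate: {total} Gbps ({total / 1000:.1f} Tbps)")
    print(f"Aggregate: {gbps_to_gigabytes_per_s(total):.0f} GB/s")
    # Assumption: ports are divided evenly across chips and GPUs.
    print(f"Ports per CX8 chip: {NETWORK_PORTS // CX8_CHIPS}")
    print(f"Network line rate per GPU: {total // GPU_SLOTS} Gbps")
```

With an even split, each GPU has the equivalent of one 800Gbps port available for traffic leaving the server.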

Finally, highlighting the performance leap, Huang presented benchmarks showing the new RTX PRO Blackwell server delivering 1.7 times the performance of a Hopper H100 HGX system for Llama 70B inference. Even more impressively, for the DeepSeek R1 model, the Blackwell server demonstrated a fourfold performance increase over the previous-generation state-of-the-art H100. This significant uplift in both tokens per second and tokens per second per user underscores the server’s capability to handle high-throughput and low-latency interactive AI tasks.

Humanoid Robotics, NVIDIA Jetson Thor and Project GR00T: Advancing the Frontier of Physical AI

NVIDIA also spotlighted its plans for the burgeoning field of humanoid robotics, unveiling significant advancements to its NVIDIA Isaac platform designed to accelerate the development of “Physical AI.” According to Jensen Huang, the new Jetson Thor robotic processor, which has just started production, is at the heart of this initiative. Jetson Thor is engineered to be the powerful, energy-efficient brain for a new generation of humanoid robots and autonomous systems.

Complementing the hardware is NVIDIA Isaac GR00T N1.5, an updated version of NVIDIA’s open, generalized foundation model for humanoid robot reasoning and skills. Huang announced that GR00T N1.5 is now open-sourced and has garnered significant interest with thousands of downloads. This model aims to provide robots with the intelligence to understand complex instructions and perform various tasks in dynamic human environments.

A critical challenge in robotics is the acquisition of vast and diverse datasets for training. NVIDIA addresses this with Isaac GR00T-Dreams, a blueprint built upon NVIDIA Cosmos physical AI world foundation models. Huang detailed this as a “real-to-real data workflow” where AI is used to amplify human demonstrations. Developers can fine-tune Cosmos with initial human-teleoperated demonstrations of a task. Then, using GR00T-Dreams, they can prompt the model with new scenarios or instructions to generate a multitude of “dreams”: synthetic video sequences of the robot performing new actions. These 2D dream videos are converted into 3D action trajectories to train the GR00T robot model. “So a small team of human demonstrators can now do the work of thousands,” Huang explained, highlighting how this dramatically scales data generation.

This entire ecosystem provides a comprehensive cloud-to-robot platform. It encompasses Isaac Sim for simulation (now open source on GitHub), Isaac Lab for robot learning, the Newton physics engine developed with Google DeepMind and Disney Research, and the Cosmos world models. Huang envisions that “Physical AI and robotics will bring about the next industrial revolution,” with humanoid robots, uniquely suited for “brownfield” human environments, potentially becoming “the next multi-trillion dollar industry.”

DGX Spark, Station, and Cloud Lepton

NVIDIA continued its push to enable access to high-performance computing with key updates around its DGX offerings, from global cloud marketplaces to powerful personal AI supercomputers.

A significant new initiative unveiled was NVIDIA DGX Cloud Lepton, an AI platform featuring a compute marketplace designed to connect the world’s AI developers with tens of thousands of GPUs. This platform aims to unify cloud AI services and GPU capacity access from a global network of NVIDIA Cloud Partners such as CoreWeave, Crusoe, Foxconn, and SoftBank Corp. These partners will offer NVIDIA Blackwell and other NVIDIA architecture GPUs on the DGX Cloud Lepton marketplace. Developers can tap into compute capacity in specific regions for on-demand and long-term needs, supporting strategic and sovereign AI operations. DGX Cloud Lepton integrates with NVIDIA’s software stack, including NIM and NeMo microservices, to simplify AI application development and deployment and create a planetary-scale AI factory in collaboration with its NCPs.

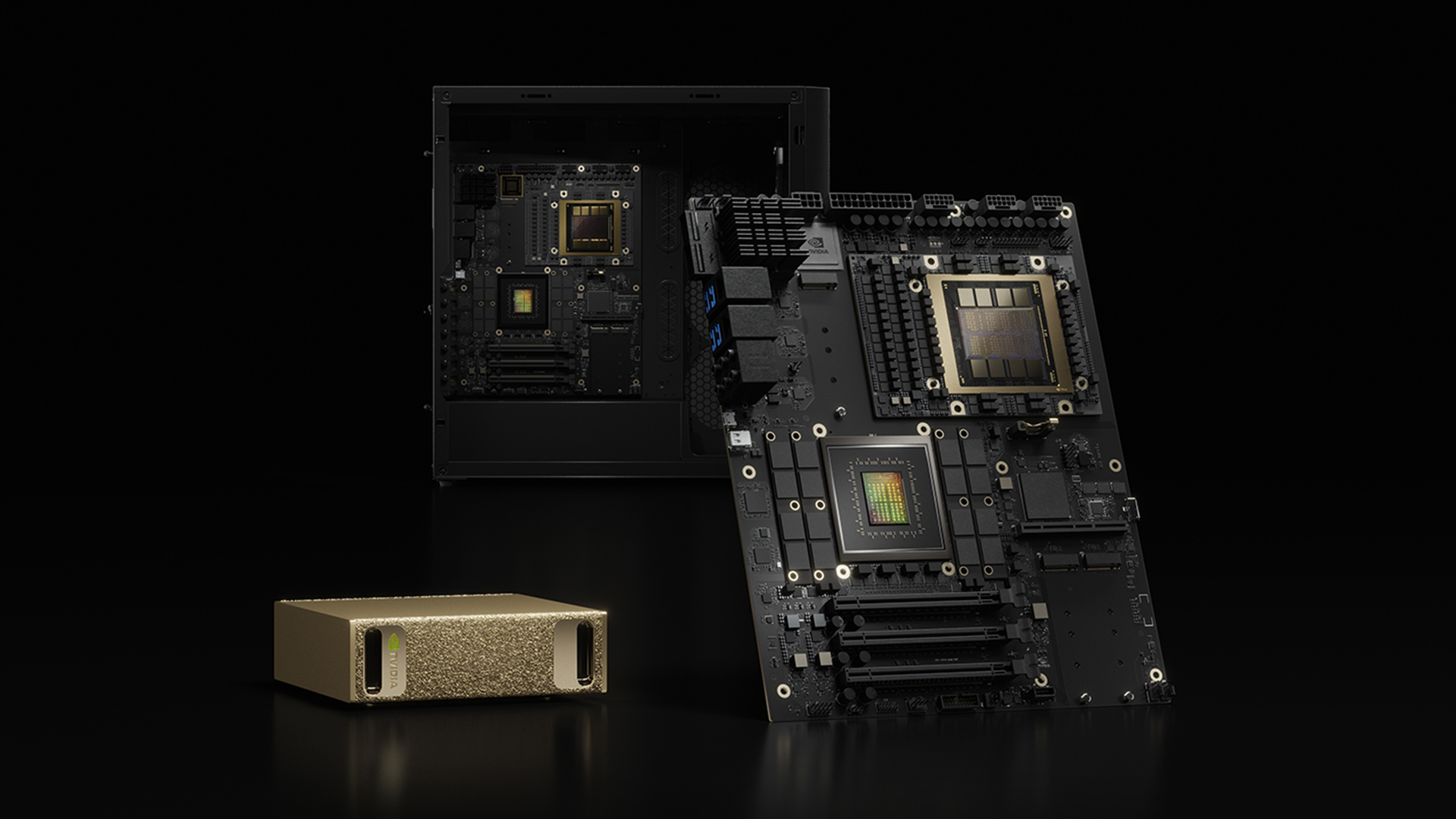

While DGX Cloud Lepton focuses on broad cloud access, NVIDIA highlighted the expanding ecosystem for its personal AI supercomputers, NVIDIA DGX Spark and DGX Station. The emphasis was on growing partnerships and increasing availability through more vendors. These systems are positioned as AI-native computers for developers, students, and researchers who desire a dedicated AI cloud environment.

- DGX Spark, powered by the NVIDIA GB10 Grace Blackwell Superchip, offers up to 1 petaflop of AI compute. It is now in full production and will be available shortly from an expanded list of partners, including Acer, ASUS, Dell Technologies, GIGABYTE, HP, Lenovo, and MSI.

- DGX Station is also seeing broader partner adoption, featuring the NVIDIA GB300 Grace Blackwell Ultra Desktop Superchip for up to 20 petaflops of AI performance. It will be available from ASUS, Dell Technologies, GIGABYTE, HP, and MSI later this year, providing a desktop solution capable of running trillion-parameter AI models.

NVIDIA RTX 5060 Launch

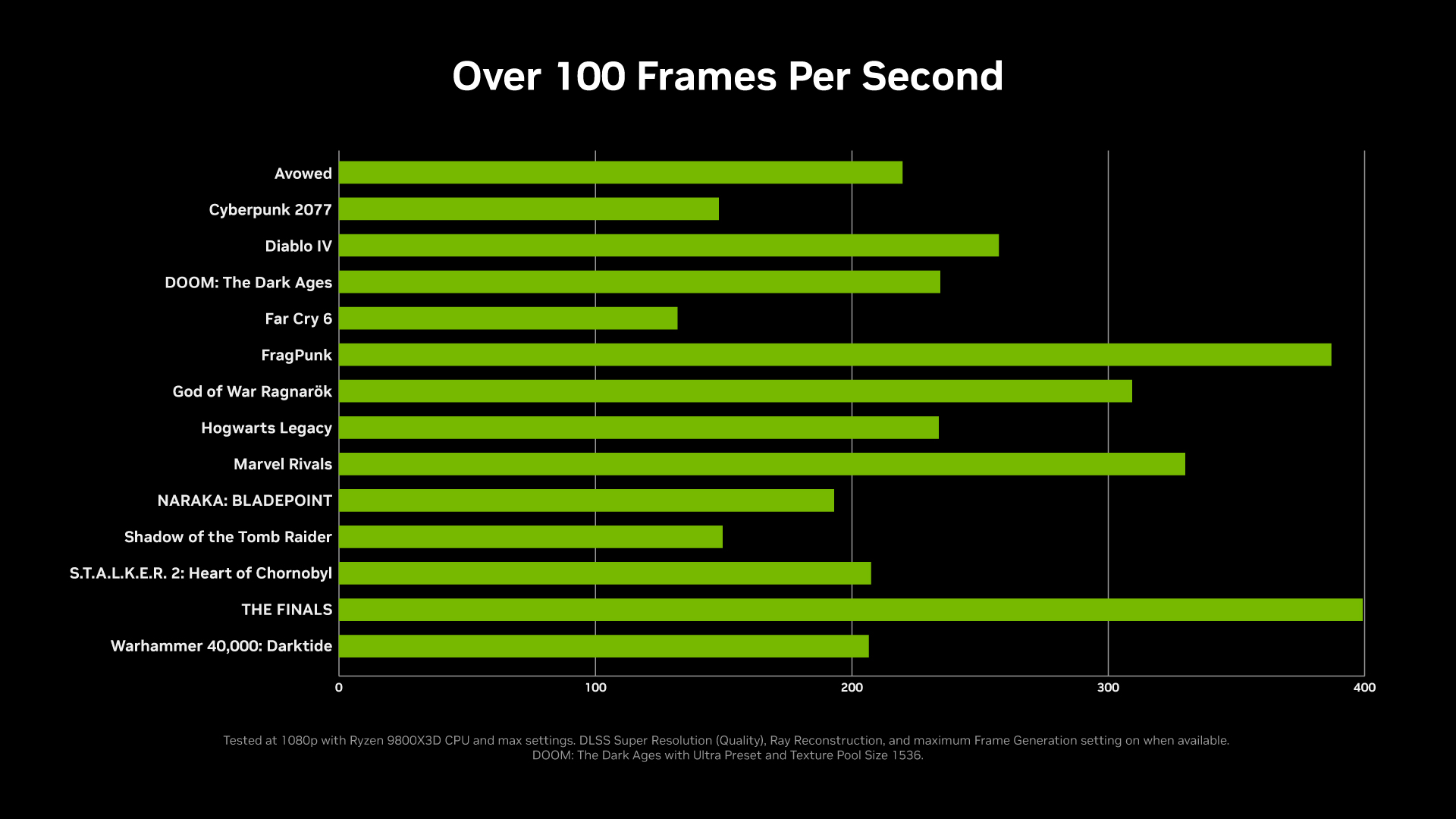

Rounding out its extensive Computex announcements, NVIDIA also launched the NVIDIA GeForce RTX 5060. Available starting today both as add-in cards from partners and integrated into new gaming laptops, the RTX 5060 aims to deliver compelling performance and features to a broader audience.

The RTX 5060, built on the Blackwell architecture, brings with it support for DLSS 4, leveraging NVIDIA’s latest AI-powered super-resolution and frame generation technology. It boasts 614 AI TOPS (INT4) for enhanced AI processing capabilities. The card features 5th Generation Tensor Cores and 4th Generation RT Cores for improved ray tracing and AI performance. For media processing, it includes one 9th Generation NVIDIA Encoder (NVENC) and one 6th Generation NVIDIA Decoder (NVDEC). The RTX 5060 has 8GB of GDDR7 memory, providing a memory bandwidth of 448 GB/sec, ensuring smooth performance for modern gaming titles and creative workloads.
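For readers curious where a figure like 448 GB/sec comes from, memory bandwidth is generally the bus width multiplied by the per-pin data rate, divided by eight to convert bits to bytes. The sketch below applies that formula. The 128-bit bus and 28 Gbps per-pin rate are assumptions that are not stated in the text above; they are chosen because they are consistent with the quoted 448 GB/sec.

```python
def memory_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: (bus width x per-pin rate) / 8 bits per byte."""
    if bus_width_bits <= 0 or data_rate_gbps_per_pin <= 0:
        raise ValueError("bus width and data rate must be positive")
    return bus_width_bits * data_rate_gbps_per_pin / 8.0


# Assumed values (not given in the article), picked to match the quoted figure.
ASSUMED_BUS_WIDTH_BITS = 128
ASSUMED_DATA_RATE_GBPS = 28.0

if __name__ == "__main__":
    bw = memory_bandwidth_gb_s(ASSUMED_BUS_WIDTH_BITS, ASSUMED_DATA_RATE_GBPS)
    print(f"Peak memory bandwidth: {bw:.0f} GB/s")
```

This is a theoretical peak; sustained bandwidth in games and creative applications depends on access patterns and will be lower.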

These updates collectively signify NVIDIA’s strategy to make powerful AI tools and compute resources more readily available, through vast cloud networks or dedicated, high-performance desktop systems, ensuring developers at all scales can participate in the AI revolution.
