When thinking about Content Delivery Networks (CDNs), it’s easy to go straight to the big brands we know, like Netflix, Hulu, etc. That makes sense; it’s intuitive to think about the latest episode of your favorite show being distributed to your phone or living room TV. Of course, it’s much more complicated than that, and high-capacity storage plays a major role in the customer experience.

When calling up Slotherhouse on Hulu, your streaming device will first hit an edge CDN node close to home. The more data that node can hold, the more likely the service delivers a fast start rather than bouncing the request to a node farther away to retrieve the content. The benefits of reducing the hops required for video streaming are obvious, but CDNs do much more than that.

CDNs enable other use cases, like over-the-air (OTA) updates for cars such as Teslas, or moving those movie streams from the internet into the cabin of a commercial airliner. Regardless of the type of files being delivered, one thing is clear: the more you can store at the edge, the more responsive a CDN can be. That is critical, as customers measure success by the spins of the throbber, with little care for the underlying infrastructure making it all happen.

As usual with our reports, we didn’t just want to speculate about how CDNs work and where the pressure on the architecture is most likely to be found. We went to the experts. In this case, that’s Varnish Software, one of the preeminent leaders in content delivery software.

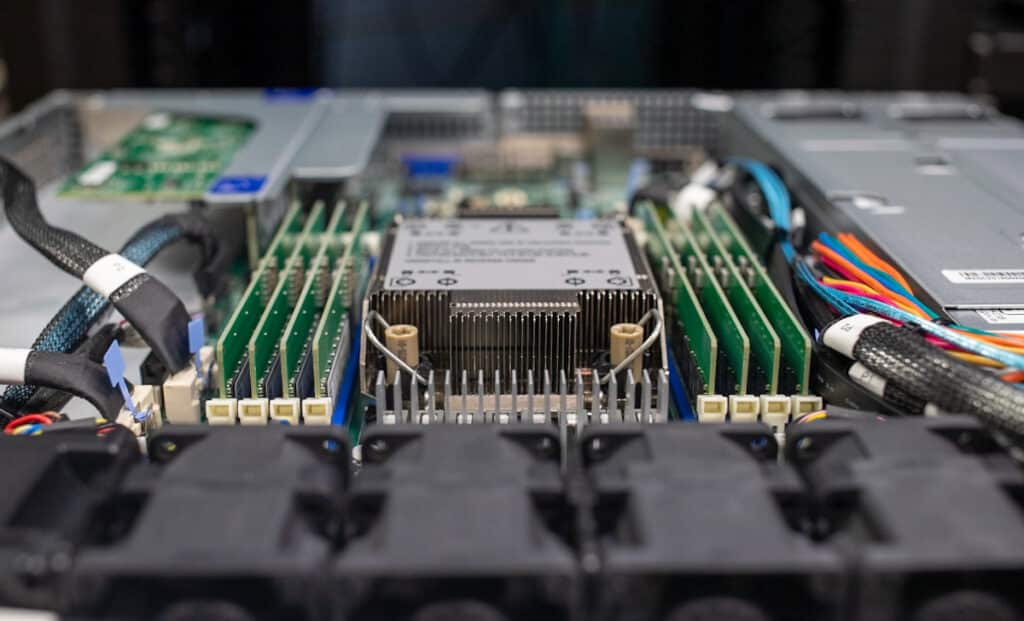

We partnered with Varnish to configure a perfect edge CDN node in our lab, outfitted with Varnish’s content delivery software, a CDN-specific server from Supermicro, a massive storage footprint thanks to 30.72TB Solidigm P5316 SSDs, and a high-speed 200GbE interconnect from NVIDIA to get a better handle on the stressors on edge CDN nodes and how storage, specifically, impacts outcomes.

Who is Varnish Software?

Varnish offers content delivery software that makes it easy to speed up digital interactions, handle massive traffic loads, and protect web infrastructure. Varnish helps organizations move content delivery as close to the client as possible to ensure the best experience while enabling the maximum return on investment in infrastructure.

The foundation is based on a feature-rich and robust open-source HTTP cache and reverse proxy, Varnish Cache, that sits between the origin and the client. It has been optimized to extract the maximum performance and efficiency from the underlying hardware. Varnish Cache streamlines system-level queuing, storage, and retrieval, making it the ideal method for benchmarking content delivery and edge delivery workloads.

Varnish can run on just about anything, but there’s a benefit to giving their edge nodes more horsepower in a few key areas to enhance the customer experience. Every hop back to the data center from the client device introduces latency, so the more the edge node can deliver, the better. To that end, we built the ultimate edge CDN node and tested it with Varnish’s rigorous node validation tools.

What Makes Varnish CDN So Darn Fast?

CDNs need more than a fast network, especially at the edge. It would be inefficient and slow if every request had to go back to the host site for a refresh. The optimal solution would be a storage system to process and store data close to the customer. The system needs massive storage capabilities and a high-performance server that can rapidly pull information from cache and deliver it without delay.

Varnish Software has built a solution that supports very large data sets on high-performance servers with high-density storage, suited to virtually any environment. Meet the Massive Storage Engine.

Varnish Software’s Massive Storage Engine (MSE) is an optimized disk and memory cache engine. MSE enables high-performance caching and persistence for 100TB-plus data sets supporting video and media distribution, CDNs, and large-cache use cases. MSE is a perfect fit for companies where high-performance delivery of large data sets is critical.

With MSE, the cache remains intact between restarts and upgrades, preventing expensive and time-consuming cache refills. This enables rapid retrieval and helps avoid network congestion following a restart.

MSE can store and serve objects of almost unlimited size from cache for fast, scalable content delivery. It has been optimized to reduce fragmentation under a least recently used (LRU) cache eviction policy, resulting in superior performance and concurrency. Varnish recommends MSE for customers with a cache larger than 50GB or with limited memory.
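To make the LRU eviction policy concrete, here is a minimal in-memory sketch of an LRU cache with a byte budget. It illustrates the general policy only; it is not how MSE is implemented, and the class and method names are hypothetical.

```python
from __future__ import annotations

from collections import OrderedDict


class ByteBudgetLRU:
    """Hypothetical LRU cache that evicts the least recently used objects
    once the total stored size exceeds a byte budget."""

    def __init__(self, capacity_bytes: int) -> None:
        self.capacity = capacity_bytes
        self.used = 0
        self.items: OrderedDict[str, bytes] = OrderedDict()
        self.hits = 0
        self.misses = 0

    def get(self, key: str) -> bytes | None:
        if key in self.items:
            self.items.move_to_end(key)  # mark as most recently used
            self.hits += 1
            return self.items[key]
        self.misses += 1
        return None

    def put(self, key: str, value: bytes) -> None:
        if len(value) > self.capacity:
            return  # object can never fit in the budget; do not cache it
        if key in self.items:
            self.used -= len(self.items.pop(key))  # replace the old copy
        self.items[key] = value
        self.used += len(value)
        # Evict from the least recently used end until back under budget.
        while self.used > self.capacity:
            _, evicted = self.items.popitem(last=False)
            self.used -= len(evicted)
```

For example, with a 300-byte budget holding three 100-byte objects `a`, `b`, and `c`, reading `a` and then inserting `d` evicts `b`, because `b` has gone the longest without being accessed. A production engine also has to manage on-disk placement and fragmentation, which this sketch ignores.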

The latest generation of MSE (MSE 4) allows for the graceful failure of disks, enabling persistent cache footprints to resume operation automatically after disk failure is detected.

Edge CDN Node Hardware Configuration

In our testing scenario, we leveraged a single server acting as an Edge CDN Node and a single client. Our CDN node is based on the Supermicro SYS-111E-WR server with a single Intel Xeon Gold 6414U CPU. This CPU offers 32 cores and has a base frequency of 2GHz.

We paired this CPU with 256GB of DDR5 memory and eight Solidigm P5316 30.72TB QLC SSDs. This design aimed to show what a lean deployment model can offer in terms of performance without requiring more expensive SSDs or additional CPU resources that would be left under-utilized.

For the client side, we used an available dual-processor platform in our lab with Intel Xeon Platinum 8450H CPUs, which is overkill but had plenty of resources to ensure the bottleneck was either networking or the CDN node.

Our systems were configured with Ubuntu 22.04 as the OS, and each was equipped with an NVIDIA 200Gb NIC. The 200Gb Ethernet fabric offered plenty of bandwidth for this testing scenario.

Edge CDN Node Performance

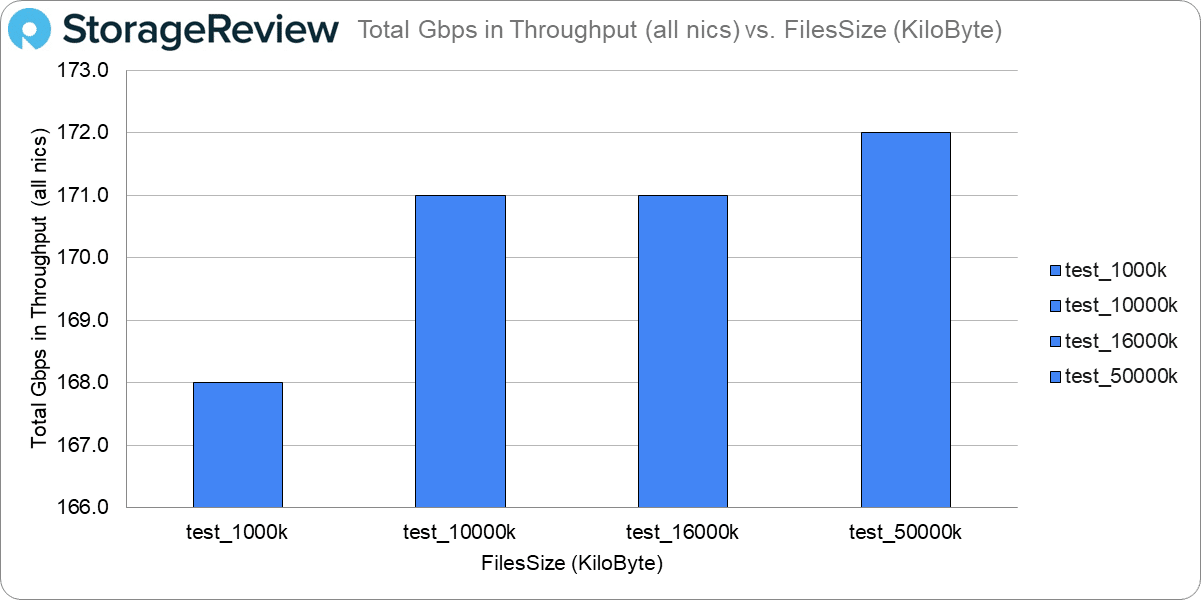

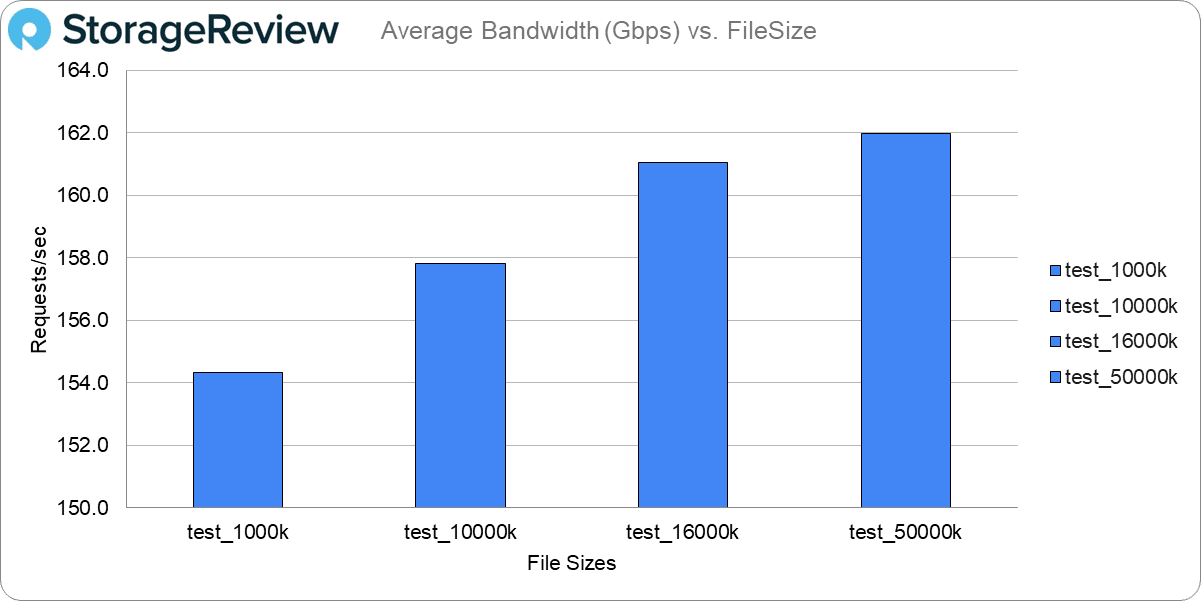

The test run looked at the overall performance of Varnish Software on the edge node we built. Specifically, the critical metrics evaluated include TTLB (Time to Last Byte), requests/second, transfer/second (bytes), total requests, errors, CPU usage, memory usage, throughput, and goodput. As a point of clarity, throughput is everything being sent by Varnish, and goodput is what the client actually sees, ignoring retransmissions or overhead data.

The tests were run using WRK as the load-generating tool, pulling different-sized file chunks from a video backend over 100 TCP connections. The test was designed to yield a 90 to 95 percent cache hit ratio, simulating what is often seen in deployed video delivery environments. To simulate different workloads, we focused on the performance of small and large files: smaller files simulate API calls, while larger files represent various video qualities in a live or video-on-demand (VOD) scenario.
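As a back-of-the-envelope illustration of why that hit-ratio target matters, the sketch below computes how many requests fall through to the origin at a given hit ratio. The one-million-request load and the function name are illustrative assumptions, not parameters from our test.

```python
def origin_requests(total_requests: int, hit_ratio: float) -> int:
    """Number of requests that miss the edge cache and go back to the origin."""
    if not 0.0 <= hit_ratio <= 1.0:
        raise ValueError("hit_ratio must be between 0 and 1")
    return round(total_requests * (1.0 - hit_ratio))


# Hypothetical load of one million requests at both ends of the tested range.
for ratio in (0.90, 0.95):
    print(f"hit ratio {ratio:.0%}: {origin_requests(1_000_000, ratio):,} origin fetches")
```

Moving from a 90 to a 95 percent hit ratio cuts origin fetches from 100,000 to 50,000 in this example, halving the traffic that has to travel back to the data center.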

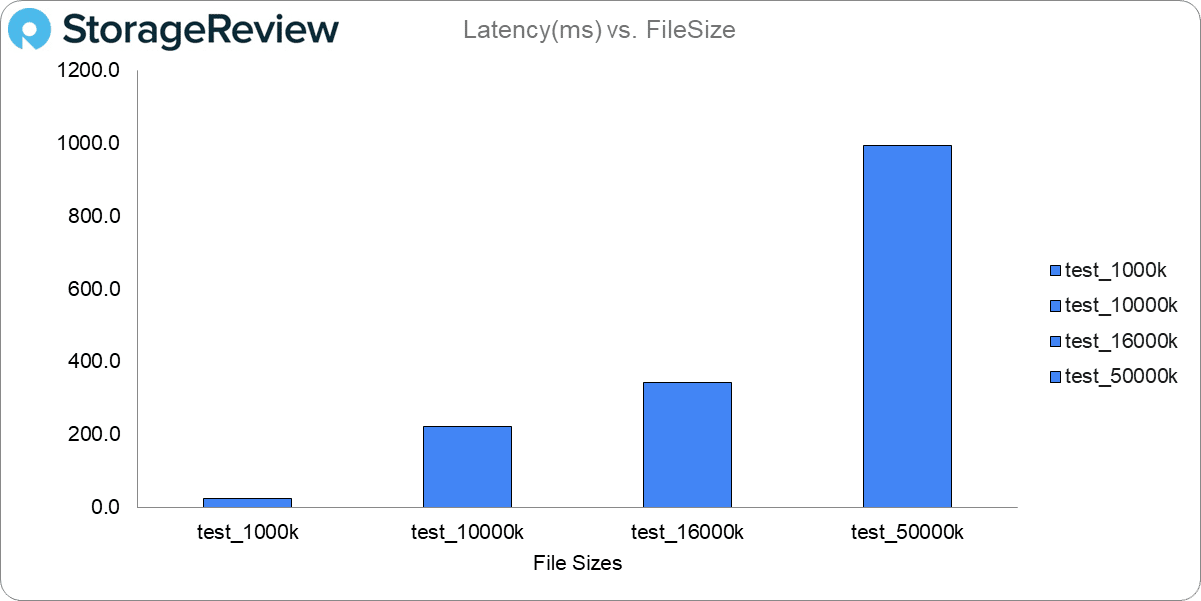

We tested file sizes of 100 and 500 kilobytes for the smaller-object tests and 1,000, 10,000, 16,000, and 50,000 kilobytes (1MB, 10MB, 16MB, and 50MB) for larger objects. Looking at a range of file sizes let us capture a mix of CDN use cases. For organizations making high volumes of small API calls, 100 kilobytes is likely larger than most responses. For VOD, a 10MB object may represent a short video clip, 16MB an HD video, and 50MB an even higher-quality video. These file sizes also apply to distributing and delivering ISO images, software updates, and installation packages.

The load testing tool WRK reports TTLB (Time to Last Byte), so the latency metrics show the complete load time for each entire video chunk. By contrast, TTFB (Time to First Byte) is the time until the first byte of the server’s response arrives; it is usually measured in milliseconds and stays roughly constant across file sizes.
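WRK handles these measurements for us, but the distinction between the two metrics is easy to see in a hand-rolled probe. The following minimal sketch uses only Python’s standard `http.client` module and is not how WRK itself measures latency; it times a single GET request and records when the first and last body bytes arrive. The function name is hypothetical.

```python
import http.client
import time


def measure_ttfb_ttlb(host: str, port: int, path: str = "/") -> tuple[float, float, int]:
    """Return (ttfb_seconds, ttlb_seconds, body_bytes) for one HTTP GET.

    TTFB is approximated as the time until the first body byte is read;
    TTLB is the time until the final body byte has been read.
    """
    conn = http.client.HTTPConnection(host, port, timeout=10)
    try:
        start = time.perf_counter()
        conn.request("GET", path)
        resp = conn.getresponse()  # blocks until the status line and headers arrive
        first = resp.read(1)       # first byte of the body
        ttfb = time.perf_counter() - start
        rest = resp.read()         # drain the remainder of the body
        ttlb = time.perf_counter() - start
        return ttfb, ttlb, len(first) + len(rest)
    finally:
        conn.close()
```

Pointed at a placeholder such as `measure_ttfb_ttlb("cdn-node.example", 80, "/chunk-100k.bin")`, the gap between the two values grows with object size, which is why TTLB is the more meaningful figure for whole video chunks.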

We observed average TTLBs ranging from 4.4ms to 995.2ms. For the smallest chunk, 100 kilobytes, the average full response took only 4.4ms. Even for the largest size, 50MB, the entire load still completed in under 1 second on average.

As for error counts, the only errors recorded were some residual timeouts, which are expected with the largest objects. CPU and memory usage remained healthy, at roughly 50 to 60 percent of full capacity across these tests. CPU usage peaked during the 100KB test at 58.8 percent, driven by the sheer number of requests for small files, and during the 50MB test at 58 percent, driven by the size of the objects.

Average throughput was 170.5+ Gbps for the larger objects and 164+ Gbps for the smaller objects.

Average goodput was 158.8+ Gbps for the larger sizes and 149.1+ Gbps for the smaller sizes, using a single WRK client as the load generator. We expect higher throughput could be achieved by scaling out the WRK clients, as Varnish observed in a few other internal experiments, but that is outside the scope of this paper.
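The gap between the throughput and goodput averages shows how much of the transmitted traffic is overhead. This short sketch divides the two reported averages; because both figures carry a "+", the resulting ratios are approximate, and the function name is our own.

```python
def goodput_efficiency(goodput_gbps: float, throughput_gbps: float) -> float:
    """Fraction of transmitted bits that arrive as useful application data."""
    if throughput_gbps <= 0:
        raise ValueError("throughput must be positive")
    return goodput_gbps / throughput_gbps


# Averages reported above, in Gbps.
for label, goodput, throughput in (("larger objects", 158.8, 170.5),
                                   ("smaller objects", 149.1, 164.0)):
    eff = goodput_efficiency(goodput, throughput)
    print(f"{label}: {eff:.1%} goodput, {1 - eff:.1%} overhead")
```

Roughly 93 percent of the bits sent were useful payload for the larger objects versus about 91 percent for the smaller ones, consistent with small transfers carrying proportionally more protocol overhead per byte.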

While raw performance metrics are important, power consumption is another key consideration for edge CDN systems, and this is where the platform we chose for this project comes into play. The single-socket Supermicro SYS-111E-WR server offers a dense NVMe storage platform with plenty of PCIe slots for NICs, without the added power draw of a dual-processor design.

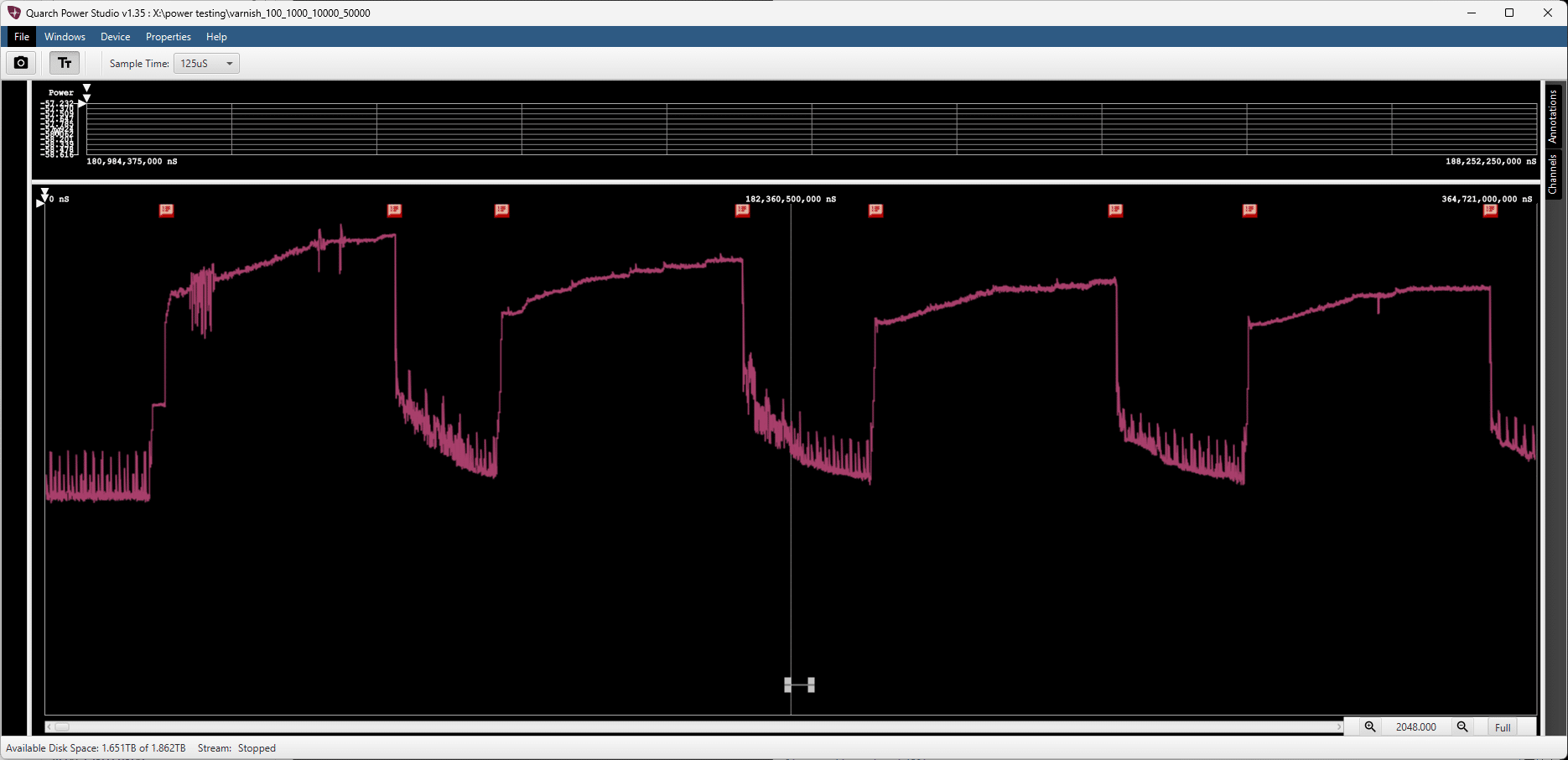

To measure the server’s power draw under load, we used our Quarch Mains Power Analysis Module, which gives an accurate view of the power drawn by the server with a response time of 125µs. We ran each test group for the same period of time and measured the average power from the start of the workload to the end.
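Once the analyzer’s samples are exported, the averaging step is straightforward. The sketch below assumes evenly spaced instantaneous power readings in watts at a fixed interval and computes mean power and total energy over the run. It does not use any Quarch software API, and the sample values and the 1ms interval are made up for illustration.

```python
def summarize_power(samples_w: list[float], interval_s: float) -> tuple[float, float]:
    """Return (average power in W, energy in J) for evenly spaced power samples."""
    if not samples_w:
        raise ValueError("need at least one sample")
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    avg_w = sum(samples_w) / len(samples_w)
    energy_j = sum(samples_w) * interval_s  # rectangle-rule integration over time
    return avg_w, energy_j


# Four hypothetical readings taken 1ms apart.
avg, energy = summarize_power([470.0, 476.0, 472.0, 478.0], 0.001)
```

Running every test group for the same duration keeps these averages directly comparable across file sizes.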

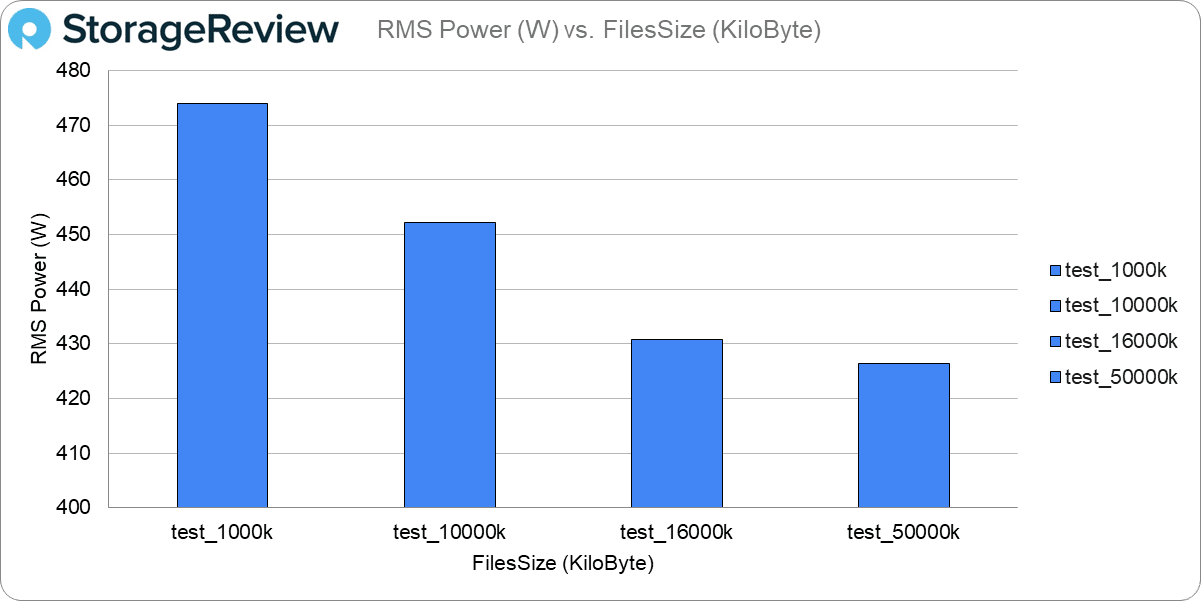

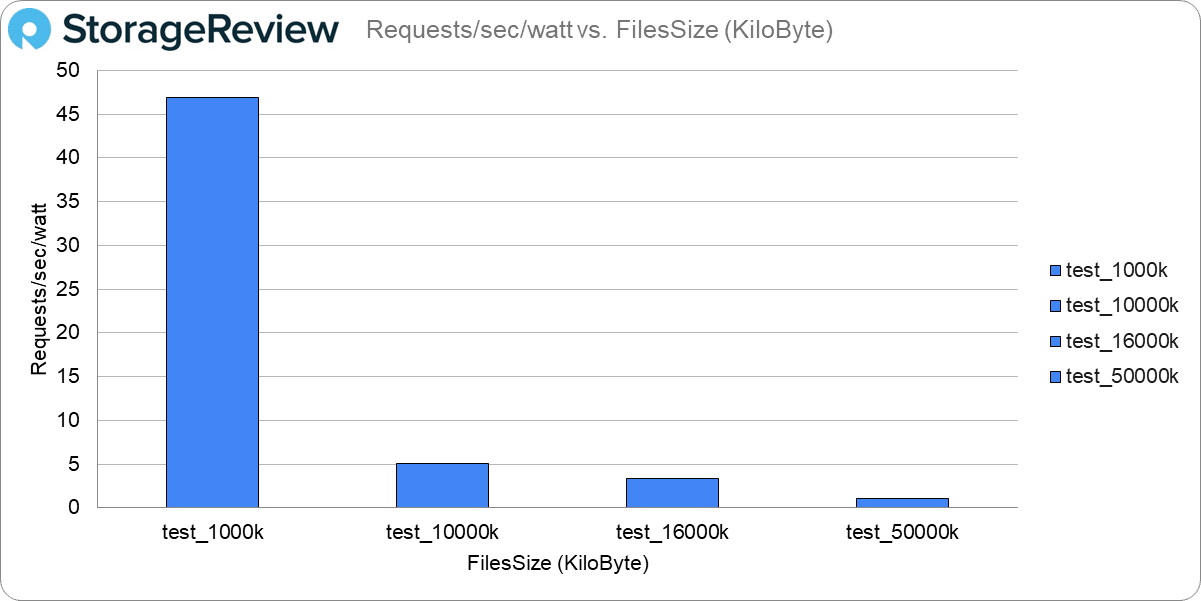

We focused on two power metrics: total system RMS power versus test file size, and requests per second per watt. One might assume that power usage increases with larger transfer sizes, but that wasn’t the case. We saw elevated power consumption at smaller transfer sizes, which tapered off slightly as the transfer size increased. This comes down to smaller transfer sizes driving more I/O operations, while larger transfer sizes require fewer.

Looking at total system power, we measured 473.9W with the 1MB transfer size, decreasing to 426.5W with the 50MB transfer size. Broken down into requests per second per watt, the 1MB transfer size measured 46.9, dropping to 1.09 for the 50MB transfer size.
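Multiplying the efficiency metric back by the measured power recovers the approximate request rate behind each figure. This sketch uses only the reported numbers; the helper name is hypothetical.

```python
def requests_per_second(req_per_s_per_w: float, power_w: float) -> float:
    """Invert the efficiency metric: (requests/s/W) x W = requests/s."""
    return req_per_s_per_w * power_w


# Reported figures from the 1MB and 50MB runs: (requests/s/W, W).
runs = {"1MB": (46.9, 473.9), "50MB": (1.09, 426.5)}
for size, (eff, watts) in runs.items():
    print(f"{size}: ~{requests_per_second(eff, watts):,.0f} requests/s at {watts} W")
```

That works out to about 22,226 requests per second for 1MB objects and about 465 for 50MB objects. The per-watt figure falls so steeply mainly because each 50MB request carries 50 times the data of a 1MB request, so requests per watt is best compared within a single object size.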

Balancing Performance And Cost

Our Varnish CDN node was designed to deliver exceptional performance and density, not just in terms of 1U rack density but also in the capacity density provided by the Solidigm SSDs. We’re “only” using the 30.72TB P5316 drives today, but there’s even more upside available with the 61.44TB P5336 units. Better still, the CDN workload is extremely read-heavy, which makes these QLC-based SSDs a great fit for the task. As a funny aside, when reviewing the performance numbers with Varnish, their engineer thought we were using Gen5 SSDs because the node performance was so impressive.

Server density is one critical element, but cost optimization is another. The single-processor Supermicro server we used provides Varnish with plenty of hardware muscle and expansion options, while its ten NVMe bays allow for over 600TB of storage thanks to Solidigm’s SSD capacity leadership. The performance-per-dollar metrics here, and, if you dig a little deeper into our data, the performance-per-watt metrics, are indisputable.
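The capacity figures follow from simple arithmetic on drive counts. This sketch compares the eight-drive configuration we tested with all ten bays populated by 61.44TB P5336 drives, assuming decimal terabytes and reporting raw capacity only, before any filesystem or cache-engine overhead.

```python
def raw_capacity_tb(drive_count: int, drive_tb: float) -> float:
    """Raw capacity in decimal terabytes, before filesystem or cache overhead."""
    return drive_count * drive_tb


tested = raw_capacity_tb(8, 30.72)    # eight P5316 drives, as configured
max_out = raw_capacity_tb(10, 61.44)  # hypothetical: all ten bays with P5336 drives
print(f"as tested: {tested:.2f} TB, fully populated: {max_out:.1f} TB")
```

This prints 245.76TB for the tested configuration and 614.4TB for a fully populated node, which is where the "over 600TB" figure comes from.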

CDNs have the unenviable task of delivering data at a moment’s notice in response to requests that are sometimes predictable but often not. A finely tuned piece of server hardware makes all the difference when it comes to the performance of these CDN nodes, which are increasingly being pushed further out to the edge. With Solidigm’s massive enterprise SSDs, these nodes can dramatically improve cache hit rates, ultimately delivering a superior customer experience.

This report is sponsored by Solidigm. All views and opinions expressed in this report are based on our unbiased view of the product(s) under consideration.
