Modern server designs are taking advantage of an expanding world of accelerator cards to enable new or enhanced capabilities. While many go straight to GPUs when thinking about acceleration, a new breed of processors addresses not only performance but also data protection and economics. The Pliops Extreme Data Processor (XDP) is one of these accelerators, helping customers take advantage of the unique blend of performance, capacity, and economics offered by QLC-based enterprise SSDs.

A Brief View on the Impact of Flash in the Data Center

NVMe SSDs significantly improved server and storage performance, especially when they hit Gen4 speeds. These gains put stress on other parts of the system, though, and traditional RAID card architectures began getting in the way. The flash storage game changed again with the introduction of QLC flash, and servers need new solutions to leverage these modern technologies effectively.

Intel's SSD business, now Solidigm, was the first to commercialize a high-quality QLC SSD. The Solidigm P5316 is now the de facto standard for high-capacity, affordable enterprise SSDs. We've spent a lot of time with these drives, not just in our reviews but also in enterprise and cloud deployments that can make proper use of them.

What do we mean by proper use? Well, QLC SSDs are traditionally very good when it comes to read performance, but when writing to the drives, systems have to be a little more intelligent. In our P5316 review, we talked a little bit about a term called indirection unit (IU). This is more or less the block size a drive wants to be written to. With the P5316, its IU is 64K. While you can write in 4K blocks to the drive, it’s wildly inefficient in terms of performance and write amplification.
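A simplified way to see why sub-IU writes hurt: assume (our assumption, not documented P5316 firmware behavior) that every write smaller than the IU forces the drive to read, modify, and rewrite each whole IU it touches. Under that worst-case model, the internal write amplification of one isolated write is the bytes rewritten divided by the bytes the host actually sent. The function name and defaults below are illustrative.

```python
def worst_case_iu_write_amp(write_bytes: int, iu_bytes: int = 64 * 1024, offset: int = 0) -> float:
    """Worst-case write amplification for a single isolated host write.

    Simplified model (an assumption): the drive must read-modify-write every
    indirection unit (IU) the write overlaps. It ignores the drive's own write
    caching, garbage collection, and over-provisioning.
    """
    if write_bytes <= 0 or iu_bytes <= 0 or offset < 0:
        raise ValueError("sizes must be positive and offset non-negative")
    first_iu = offset // iu_bytes
    last_iu = (offset + write_bytes - 1) // iu_bytes
    bytes_rewritten = (last_iu - first_iu + 1) * iu_bytes
    return bytes_rewritten / write_bytes


# Aligned writes of increasing size against a 64K IU.
for size_kib in (4, 16, 64, 128):
    wa = worst_case_iu_write_amp(size_kib * 1024)
    print(f"{size_kib:>4}K aligned write: worst-case write amplification {wa:.1f}x")
```

Under this model an aligned 4K write costs 16x, while aligned 64K and 128K writes cost 1x. A 4K write that straddles an IU boundary is worse still, because it touches two IUs.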

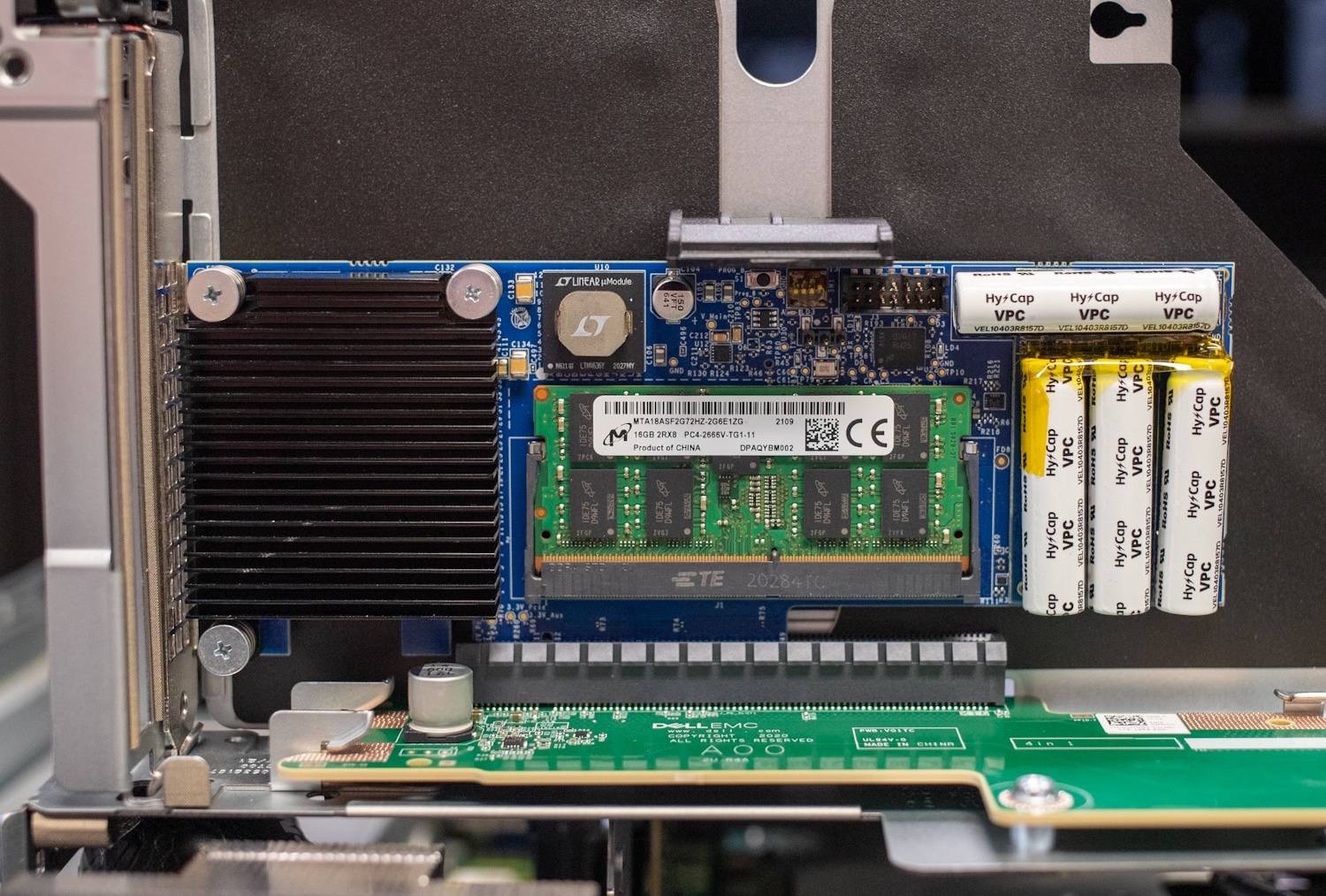

Diving into SSD minutiae is important for understanding at least one of the key reasons the Pliops XDP exists. On one level, it acts as a RAID card for the server, aggregating and managing the SSDs. The XDP is also backed by onboard DRAM and power protection, so it can coalesce the writes headed to the drives and deliver better performance and capacity utilization than software RAID.
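The write coalescing described above can be sketched as a small log-structured buffer. Incoming 4K blocks accumulate in memory (standing in for the XDP's power-protected DRAM) and are emitted only as full 64K chunks, while a map records where each logical block landed. This is a minimal illustration under our own assumptions. The class and its methods are hypothetical, not Pliops code.

```python
class WriteCoalescer:
    """Hypothetical sketch of IU-sized write coalescing (not Pliops code)."""

    def __init__(self, iu_bytes: int = 64 * 1024, block_bytes: int = 4096):
        if iu_bytes % block_bytes:
            raise ValueError("IU size must be a multiple of the block size")
        self.iu_bytes = iu_bytes
        self.block_bytes = block_bytes
        self.pending = bytearray()   # unflushed data (power-protected DRAM on a real card)
        self.pending_lbas = []       # LBA of each block in `pending`, in arrival order
        self.flushed = []            # each entry stands in for one IU-sized write to flash
        self.location = {}           # LBA -> (flushed chunk index, byte offset) of newest copy

    def write_block(self, lba: int, data: bytes) -> None:
        if len(data) != self.block_bytes:
            raise ValueError("expected exactly one block of data")
        self.pending += data
        self.pending_lbas.append(lba)
        if len(self.pending) == self.iu_bytes:
            self._flush()

    def _flush(self) -> None:
        chunk_index = len(self.flushed)
        self.flushed.append(bytes(self.pending))
        # Later slots overwrite earlier ones, so the newest copy of an LBA wins.
        for slot, lba in enumerate(self.pending_lbas):
            self.location[lba] = (chunk_index, slot * self.block_bytes)
        self.pending.clear()
        self.pending_lbas.clear()

    def read_block(self, lba: int) -> bytes:
        # The newest copy may still be sitting in the unflushed buffer.
        for slot in range(len(self.pending_lbas) - 1, -1, -1):
            if self.pending_lbas[slot] == lba:
                start = slot * self.block_bytes
                return bytes(self.pending[start:start + self.block_bytes])
        chunk_index, offset = self.location[lba]  # KeyError if never written
        return self.flushed[chunk_index][offset:offset + self.block_bytes]


# Sixteen scattered 4K writes become a single 64K write to flash.
c = WriteCoalescer()
for i in range(16):
    c.write_block(i * 97, bytes([i]) * 4096)
print(len(c.flushed))
```

Overwritten blocks leave stale copies behind in earlier chunks. Reclaiming that space requires garbage collection, which this sketch does not attempt.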

IoT Device Demand on Applications

Data collection continues to grow at an incredible rate. The need to keep that data and its associated applications readily available is even more pressing, especially given the importance of gathering insights at the edge and of AI/ML application development.

Enterprises have fully embraced SSD technology as capacities continue to grow and drives become ever more cost-effective. NVMe drives, which can run more than 1,000 times faster than hard disk drives (HDDs), have seen broad adoption. Data centers are deploying 400Gbps networks to keep up with these storage devices, while efficient protocols like NVMe-over-Fabrics (NVMe-oF) push systems and infrastructure to their limits.

Applications have also become more efficient, even as demand from IoT devices continues to grow at unprecedented rates. More servers, storage, and switches add complexity to an already complex environment. And let's not forget the importance of backing up all this data efficiently and securely.

It would seem that installing NVMe SSDs would solve the performance issues facing enterprises today, but those SSDs are not being utilized effectively. Moore's Law is lagging, with CPU performance now on pace to double every 20 years rather than every two. Adding more cores doesn't solve the problem, because those cores share the same memory and I/O. Adding more servers, in keeping with the old adage that more is better, would work, but it is a very expensive solution and not good for the environment.

With so much data stored on these high-speed SSDs, processing and managing it has become a nightmare that overloads the CPU with computationally intensive storage tasks. Servers cannot keep up with the demands of the user community or provide reliable protection in the event of a drive failure, especially as performance and capacity rise.

The Pliops Extreme Data Processor (XDP) may provide the solution to many of the performance demands for SSD-based storage and protection. The Pliops XDP is the new benchmark for application acceleration, increasing the effectiveness of your data center infrastructure investments.

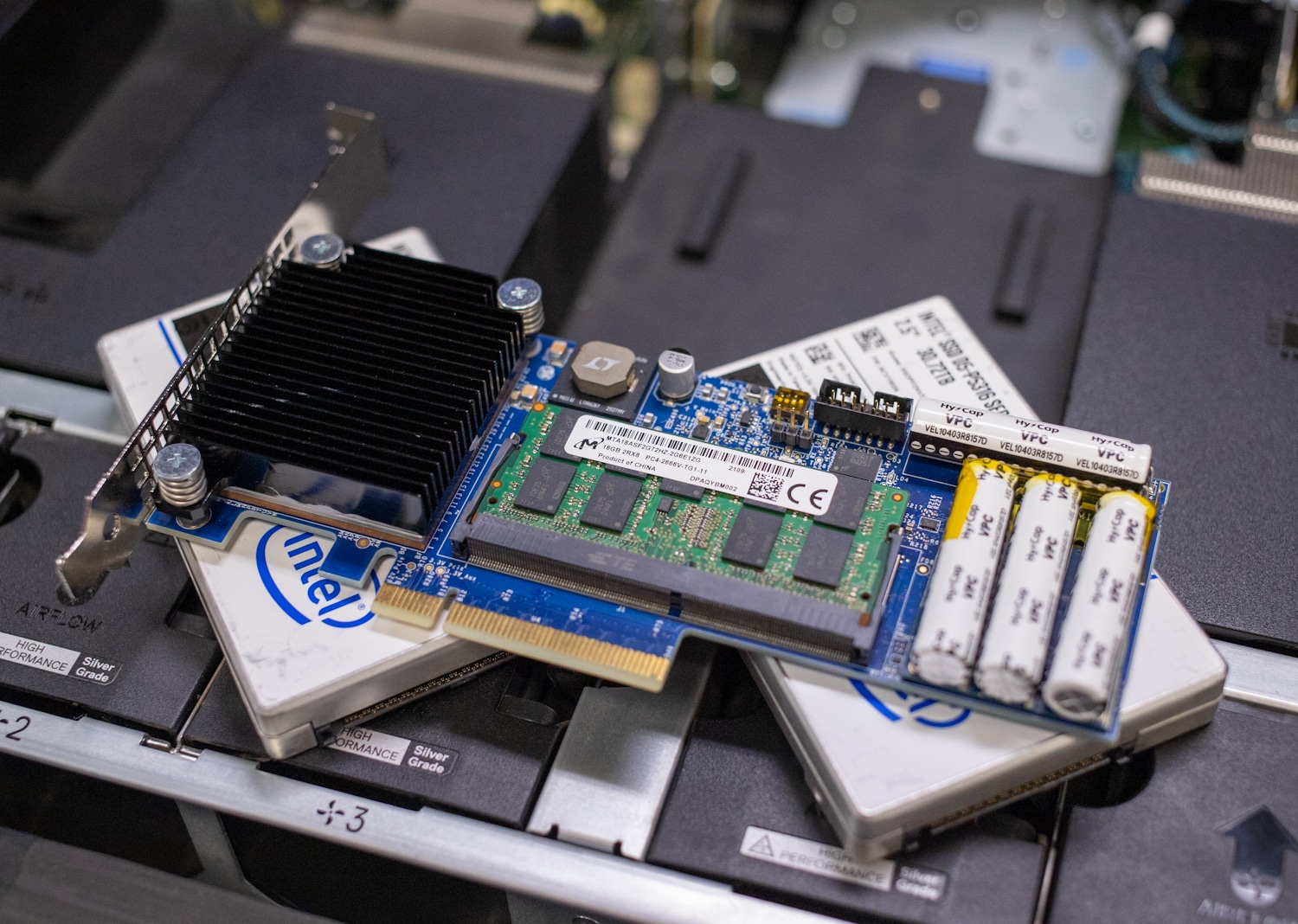

Just as GPUs overcome processing inefficiencies to accelerate AI and analytics performance, the Pliops XDP overcomes storage inefficiencies to massively accelerate performance and dramatically lower infrastructure costs for today’s modern applications. The Pliops XDP simplifies how data is processed and SSD storage is managed. Delivered on an easy-to-deploy HHHL (half height, half length) PCIe card, Pliops XDP radically increases performance, reliability, capacity, and efficiency across a diverse set of data-intensive workloads.

Pliops Extreme Data Processor Architecture

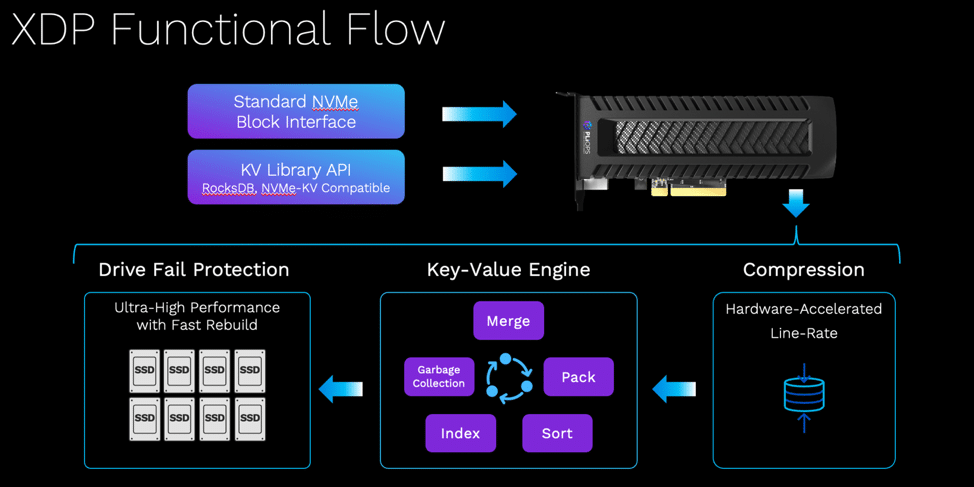

The Pliops XDP offers hosts two interfaces, and these interfaces are key to delivering higher performance.

The first is a standard block interface, which has the broadest adoption, with the XDP looking like any storage device in the system. Once the XDP is installed, it simply shows up.

The second host interface is the RocksDB-compatible Key-Value Library API. The emerging NVMe-KV standard is also supported. This interface is the most efficient way for applications to access the XDP directly and gain even higher performance. The XDP treats blocks as a special type of key-value pair, so everything runs through the same engine.
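To illustrate the idea that a block is just a special key-value pair, here is a minimal sketch of a block interface layered on a generic put/get/delete engine. `ToyKVEngine` and `BlockDeviceOverKV` are hypothetical stand-ins written for this example. They are not the Pliops KV Library API or the NVMe-KV command set.

```python
class ToyKVEngine:
    """In-memory stand-in for a key-value engine (hypothetical, not the Pliops API)."""

    def __init__(self):
        self._store = {}

    def put(self, key: bytes, value: bytes) -> None:
        self._store[key] = value

    def get(self, key: bytes):
        return self._store.get(key)

    def delete(self, key: bytes) -> None:
        self._store.pop(key, None)


class BlockDeviceOverKV:
    """Block interface where each logical block is one KV pair keyed by its LBA."""

    def __init__(self, engine, block_size: int = 4096):
        self.engine = engine
        self.block_size = block_size

    @staticmethod
    def _key(lba: int) -> bytes:
        # Fixed-width big-endian encoding: byte-wise key order equals LBA order.
        return b"blk:" + lba.to_bytes(8, "big")

    def write(self, lba: int, data: bytes) -> None:
        if len(data) != self.block_size:
            raise ValueError("writes must be exactly one block")
        self.engine.put(self._key(lba), data)

    def read(self, lba: int) -> bytes:
        value = self.engine.get(self._key(lba))
        # Never-written (or trimmed) blocks read back as zeros.
        return value if value is not None else bytes(self.block_size)

    def trim(self, lba: int) -> None:
        self.engine.delete(self._key(lba))
```

Encoding the LBA as a fixed-width big-endian integer means byte-wise key order matches LBA order. In a sorted engine such as RocksDB with its default comparator, neighboring blocks therefore sit next to each other.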

Much of the Pliops XDP's performance advantage comes from doing most functions in hardware. Line-rate compression is performed by a fast, efficient hardware-accelerated engine. The key-value storage engine is also hardware-based, and Pliops likens it to RocksDB on a chip. The key-value engine is the true workhorse of the XDP and is responsible for much of its real-world performance benefit.

Simply put, compressing a block produces an object of arbitrary size. Flash uses fixed block sizes, which complicates capacity management. The solution is to merge compressed blocks, pack them together, sort and index them for fast retrieval, and then garbage-collect the space left behind by stale data. As updates are made, blocks are unpacked and the process starts again. In software-based solutions, this work drives write amplification, read amplification, and space amplification. Because it competes for host CPU cycles, the software has to make compromises so that it doesn't consume all the processing power.
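The pack-and-index step can be illustrated in a few lines. The sketch compresses each block (zlib here, as a software stand-in for the XDP's hardware engine), sorts by LBA, packs the variable-size results into fixed-size pages, and records where each one landed. `PAGE_SIZE` and the function names are our own assumptions. The sketch also leaves out garbage collection. When a block is rewritten, its old compressed copy becomes dead space in a page, and reclaiming that space is where the amplification described above comes from.

```python
import zlib

PAGE_SIZE = 16 * 1024  # hypothetical fixed-size flash page/segment


def pack_blocks(blocks, page_size=PAGE_SIZE):
    """Compress blocks (dict of LBA -> bytes), sort by LBA, and pack them
    into fixed-size pages.

    Returns (pages, index), where index maps LBA -> (page number, byte offset,
    compressed length).
    """
    pages, index = [], {}
    current = bytearray()
    for lba in sorted(blocks):
        comp = zlib.compress(blocks[lba])
        if len(comp) > page_size:
            raise ValueError(f"block {lba} does not fit in one page even compressed")
        if len(current) + len(comp) > page_size:
            pages.append(bytes(current.ljust(page_size, b"\0")))  # pad and seal the page
            current = bytearray()
        index[lba] = (len(pages), len(current), len(comp))
        current += comp
    if current:
        pages.append(bytes(current.ljust(page_size, b"\0")))
    return pages, index


def read_block(pages, index, lba):
    """Look up a block in the index and decompress it."""
    page_no, offset, length = index[lba]
    return zlib.decompress(pages[page_no][offset:offset + length])
```

With compressible data, many 4K blocks share one 16K page. The index is what allows a single block to be found again without scanning.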

Pliops has implemented highly efficient algorithms and data structures for this work, which would be computationally intensive on a general-purpose CPU. For example, XDP delivers RocksDB performance equivalent to 500 Xeon Gold cores.

Reliability

Traditional data protection solutions require tradeoffs in both performance and capacity. But Pliops XDP eliminates these tradeoffs with advanced drive failure protection that maintains constant data availability and eliminates data loss and downtime. XDP supports multiple single-drive failures and has Virtual Hot Capacity (VHC), eliminating the need for a hot spare. Because XDP manages the data, only actual data is rebuilt, unlike RAID-based solutions. In other words, users get data protection at the speed of flash with ZERO performance penalty.
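The article doesn't detail the XDP's internal protection layout, so here is a generic illustration. With single XOR parity, a lost chunk equals the XOR of the surviving chunks in its stripe, and a system that knows which stripes hold data can skip everything else during a rebuild. The helper functions are hypothetical. For simplicity, parity sits on a fixed drive; RAID5 rotates it, which doesn't change the math.

```python
import os


def xor_chunks(chunks):
    """XOR equal-length byte strings together."""
    out = bytearray(len(chunks[0]))
    for chunk in chunks:
        if len(chunk) != len(out):
            raise ValueError("chunks must be the same length")
        for i, b in enumerate(chunk):
            out[i] ^= b
    return bytes(out)


def make_stripe(data_chunks):
    """Return the data chunks followed by their XOR parity chunk."""
    return list(data_chunks) + [xor_chunks(data_chunks)]


def rebuild_failed_drive(stripes, failed_drive, allocated_stripes):
    """Reconstruct the failed drive's chunk for allocated stripes only.

    stripes: list of stripes, each a list with one chunk per drive.
    allocated_stripes: indices of stripes that actually hold user data;
    never-written stripes are skipped instead of being rebuilt.
    """
    rebuilt = {}
    for s in sorted(allocated_stripes):
        survivors = [c for d, c in enumerate(stripes[s]) if d != failed_drive]
        rebuilt[s] = xor_chunks(survivors)
    return rebuilt


# Four "drives" (three data + one parity), ten stripes, only three holding data.
stripes = [make_stripe([os.urandom(16) for _ in range(3)]) for _ in range(10)]
rebuilt = rebuild_failed_drive(stripes, failed_drive=1, allocated_stripes={0, 3, 7})
print(sorted(rebuilt))
```

A conventional block-level RAID rebuild has no notion of which stripes are in use, so it would reconstruct all ten stripes here rather than three.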

In the event of a sudden power loss, XDP preserves metadata and user data in flight by automatically flushing it to non-volatile memory. Recovery is automatic and begins immediately once power is restored, using available VHC capacity without reducing usable capacity.

Capacity

Pliops XDP supports all common flash technologies (TLC, QLC, and Intel Optane) and SSDs from any vendor. The XDP's inline compression uses multiple engines to prevent bottlenecks and frees the CPU from this burden. Compression, minimal drive failure protection overhead, and near-full drive utilization (95%) expand usable capacity by up to 6x. This increase in usable capacity substantially reduces the cost per TB.
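How these factors combine can be shown with a rough estimate. The sketch assumes parity consumes one drive's worth of space, uses the 95% fill figure above, and treats the compression ratio as a hypothetical input, because real ratios depend entirely on the data. The function is our own illustration, not a Pliops sizing tool.

```python
def usable_capacity_tb(drive_tb: float, drives: int, parity_drives: int = 1,
                       fill_ratio: float = 0.95, compression_ratio: float = 1.0) -> float:
    """Estimate usable (logical) capacity of a parity-protected array.

    Assumptions: parity consumes `parity_drives` drives' worth of space,
    `fill_ratio` of the remaining space can be filled, and data shrinks by
    `compression_ratio` (2.0 means 2:1). All defaults are illustrative.
    """
    if not 0 <= parity_drives < drives:
        raise ValueError("need at least one data drive")
    data_tb = drive_tb * (drives - parity_drives)
    return data_tb * fill_ratio * compression_ratio


# Four 30.72TB drives, matching the test system used later in this article.
print(f"no compression:  {usable_capacity_tb(30.72, 4):.2f} TB")
print(f"2:1 compression: {usable_capacity_tb(30.72, 4, compression_ratio=2.0):.2f} TB")
```

At a hypothetical 2:1 ratio, this estimate already exceeds the 128TB per-card user-data limit noted below.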

SSDs have finite endurance: there is a limit to how much data can be written and erased before the device wears out and can no longer store data safely. As the industry adopts QLC SSDs and beyond, endurance decreases. Because XDP transforms all random writes into sequential ones, it largely removes this problem by cutting write amplification, resulting in up to 7x higher endurance.
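The link between write amplification and endurance can be made concrete with a simplified model. The NAND write budget is capacity times program/erase (P/E) cycles, and every host byte costs `write_amp` bytes of NAND writes. Under that model, an N-fold cut in write amplification yields an N-fold increase in host data written before wear-out. The P/E count and WAF values below are hypothetical, not P5316 or Pliops specifications.

```python
def host_writes_tb(capacity_tb: float, pe_cycles: int, write_amp: float) -> float:
    """Host data writable before the NAND P/E budget is exhausted (simplified:
    budget = capacity x P/E cycles; each host byte costs `write_amp` NAND bytes)."""
    if write_amp <= 0:
        raise ValueError("write amplification must be positive")
    return capacity_tb * pe_cycles / write_amp


def endurance_gain(old_write_amp: float, new_write_amp: float) -> float:
    """Factor by which host-visible endurance grows when write amplification drops."""
    return old_write_amp / new_write_amp


# Hypothetical inputs: a 30.72TB drive rated for 1,000 P/E cycles.
for waf in (4.0, 1.0):
    print(f"WAF {waf}: ~{host_writes_tb(30.72, 1000, waf):,.0f} TB of host writes")
print(f"gain: {endurance_gain(4.0, 1.0):.1f}x")
```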

It should be noted that as of today, Pliops XDP supports 128TB of user data per card. For use cases where more storage is needed, it’s possible to leverage multiple XDP cards within a host system.

Efficiency

Compact yet powerful, XDP gets more out of the existing infrastructure footprint to keep up with organizational data growth and application adoption. Moreover, it is easy to deploy across an entire data center. Pliops XDP can deliver up to 80 percent better economics across a range of workloads.

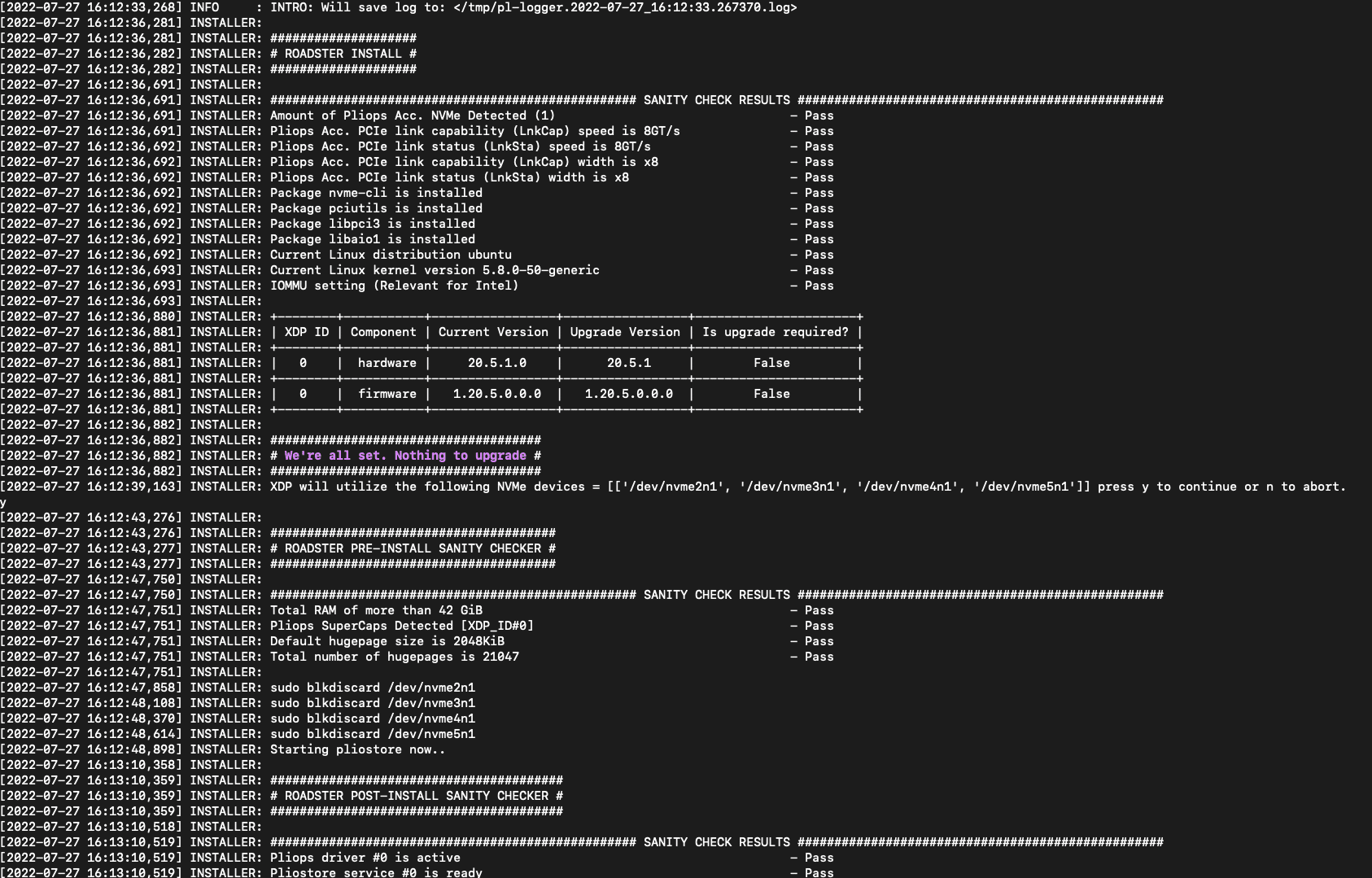

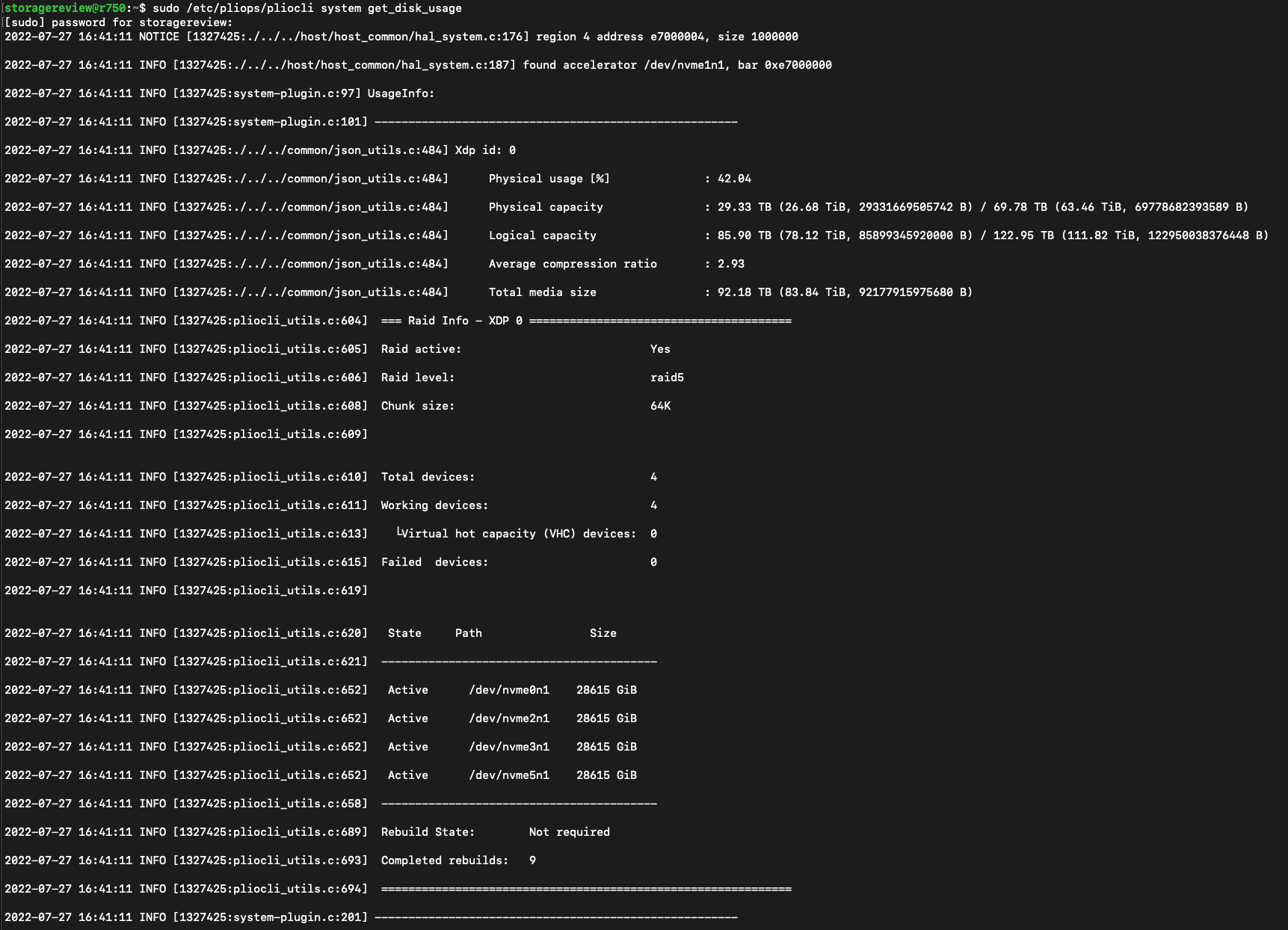

Currently, Pliops uses a CLI for XDP software installation.

The interface is straightforward and to the point, making it easy to configure XDP and check array status when the need arises.

Advanced Features

Pliops XDP Advanced Features include:

- Standard block device with high and consistent performance

- No-compromise Drive Fail Protection (DFP) protects against multiple single-drive failures

- Expands usable capacity with compression, high drive fill, and minimal DFP overhead

- Virtual Hot Capacity eliminates the need for a dedicated hot spare

- Reduced write amp extends the useful life of TLC and QLC SSDs

- Rapid Recovery by rebuilding only user data to allocated Virtual Hot Capacity

- User-configurable rebuild rate to balance rebuild speed against application performance

- Total data & metadata protection in the event of sudden power down

- Balances over-provisioning and improves performance

Pliops XDP delivers the full potential of flash storage by enabling enterprise and cloud applications to access data up to 1,000 times faster, using just a fraction of traditional computational load and power.

Pliops Extreme Data Processor Specifications

| Specification | Details |
|---|---|
| Performance | 3.2M IOPS random read, 1.2M IOPS random write, 30GB/s sequential read, 6.4GB/s sequential write |
| Write Atomicity | Support for atomic writes up to 64KB for explicit or transparent double-write elimination |
| Capacity | 128TB of user data on 128TB of physical disk with parity protection |
| Host API | Standard block device; KV Library API |
| Compression | Hardware-accelerated |
| SSD Support | Interface: PCIe Gen 3/4/5 NVMe, NVMe-oF; Types: TLC SSD, QLC SSD, Intel® Optane™ |
| Drive Vendors | Samsung, WD, Micron, Intel, Kioxia, Hynix, Seagate, and others supported |
| Physical Dimensions | Low-profile HHHL (6.6” x 2.536”), tall and short brackets |
| OS Support | All Linux variants |
| Supported Servers | Dell, HPE, Lenovo, Supermicro, Quanta, Wiwynn, Inspur, Sugon, Fujitsu, Hitachi; all standard 1U/2U servers |
| Power Fail Protection | All data is protected from sudden power failure |
| Operating Temperature | 10-52°C @ 250 LFM |
| Storage Temperature | 5°C to 35°C, <90% non-condensing |
| Power | Typical <25W, max 45W, +12VDC through PCIe adapter |
| Warranty | 3 years, free advanced technical support, advanced replacement option |
| Regulatory Certifications | AS/NZS CISPR 22, ICES-003 Class B, EN55022/EN55024, VCCI V-3, RRA no 2013-24 & 25, RoHS compliant, EN/IEC/UL 60950, CNS 13438, FCC 47 CFR Part 15 Subpart B Class B, WEEE |
| MTBF | Up to 4.5M hours |

Performance

The Pliops XDP can deliver up to 10x higher performance for databases, analytics, AI/ML, and more.

Its breakthrough data structures and algorithms deliver the equivalent of hundreds of cores of host software. XDP appears as a block device in the system and accelerates any application. With databases like MySQL, MongoDB, and Cassandra, Pliops XDP increases instance density while reducing latency.

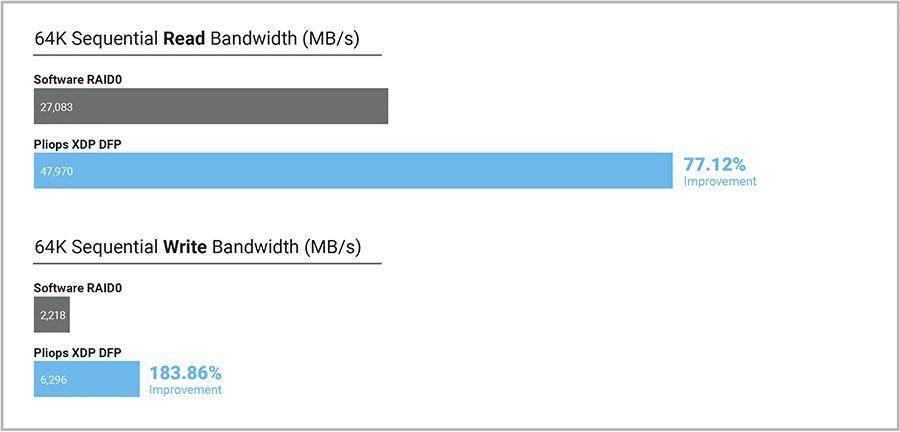

We tested the Pliops XDP in our lab in a Dell PowerEdge R750 with four Solidigm P5316 30.72TB QLC SSDs. We compared software RAID0 built with mdadm against the XDP in a RAID5 configuration, both using a 64K chunk size. This setup put more work on the XDP card while showing software RAID in its highest-performing configuration. mdadm is the Linux utility for building, managing, and monitoring md devices (software RAID arrays). Note that mdadm is not preinstalled on every Linux system.
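For readers who want to build a comparable software RAID0 baseline, here is a sketch that assembles a standard `mdadm --create` invocation. The `--chunk` value is given in KiB, so 64 corresponds to the 64K chunk size used here. We don't know the exact command used in our testing, and the NVMe device names are hypothetical, so check them on your own system before running anything. Creating an array destroys existing data on the member devices.

```python
import shlex


def mdadm_create_cmd(md_device, members, level=0, chunk_kib=64):
    """Build an mdadm --create argument list (a sketch; device names are examples)."""
    return [
        "mdadm", "--create", md_device,
        f"--level={level}",
        f"--raid-devices={len(members)}",
        f"--chunk={chunk_kib}",          # chunk size in KiB
        *members,
    ]


members = [f"/dev/nvme{i}n1" for i in range(4)]  # hypothetical device names
cmd = mdadm_create_cmd("/dev/md0", members)
print(shlex.join(cmd))
# To actually run it (as root): subprocess.run(cmd, check=True)
```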

For preconditioning, three 128K sequential write fills of a 10TB footprint were performed before measuring sequential read and write performance. For the random read and write tests, three 128K random fills of the same 10TB footprint were performed. The tests were then run over the 10TB footprint at multiple queue depths and block sizes, with each interval lasting 120 seconds.
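The methodology above maps naturally onto fio job definitions. The sketch below renders job sections as text using standard fio options (`rw`, `rwmixread`, `bs`, `numjobs`, `iodepth`, `size`, `loops`, `time_based`, `runtime`). These are not our actual job files. Reading "8-thread/128-queue" as 8 jobs with 16 outstanding I/Os each is an assumption, and the preconditioning queue depth is a hypothetical value.

```python
def fio_job(name, rw, bs, filename, numjobs=8, iodepth=16, runtime_s=120,
            size="10T", rwmixread=None):
    """Render one fio job section for a timed run against a raw block device.

    Assumption: "8-thread/128-queue" is read as 8 jobs x iodepth 16 = 128
    outstanding I/Os in total; pass iodepth=128 if it meant 128 per thread.
    """
    lines = [
        f"[{name}]",
        "ioengine=libaio",
        "direct=1",
        f"filename={filename}",
        f"rw={rw}",
        f"bs={bs}",
        f"numjobs={numjobs}",
        f"iodepth={iodepth}",
        f"size={size}",        # footprint; see fio's kb_base option for suffix units
        "time_based=1",
        f"runtime={runtime_s}",
        "group_reporting=1",
    ]
    if rwmixread is not None:
        lines.insert(5, f"rwmixread={rwmixread}")  # right after the rw= line
    return "\n".join(lines) + "\n"


def precondition_job(filename, rw="write", bs="128k", size="10T", passes=3):
    """Fill the footprint `passes` times before measuring (rw='randwrite' for random fills)."""
    return "\n".join([
        f"[precondition-{rw}]",
        "ioengine=libaio",
        "direct=1",
        f"filename={filename}",
        f"rw={rw}",
        f"bs={bs}",
        f"size={size}",
        f"loops={passes}",
        "iodepth=32",          # hypothetical queue depth for the fill
    ]) + "\n"


print(precondition_job("/dev/md0", rw="randwrite"))
print(fio_job("randrw-4k-70-30", "randrw", "4k", "/dev/md0", rwmixread=70))
```

Writing the rendered text to a `.job` file and passing that file to `fio` runs the workload. Like array creation, this overwrites the target device.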

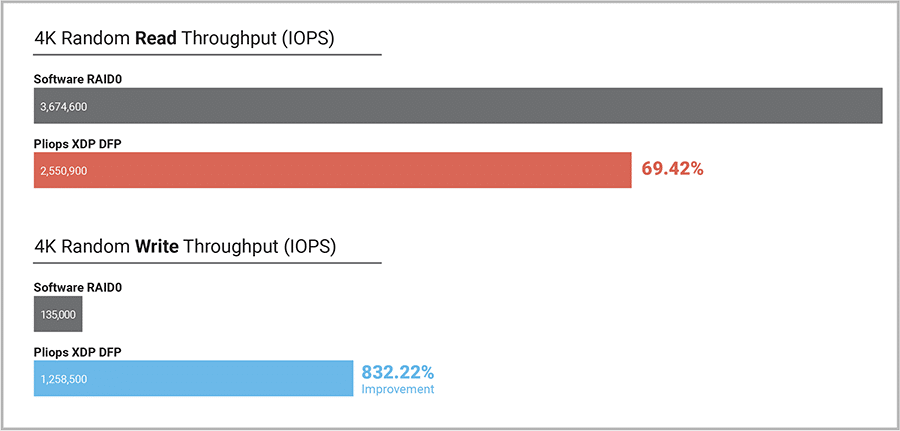

With 4K random reads, the Pliops XDP came in below software RAID0, measuring 2.6M IOPS compared to 3.7M IOPS. Random write performance, though, showed a massive 832% gain, going from 135K IOPS with SW RAID0 to a whopping 1.3M IOPS with XDP. Both the random read and random write workloads were tested at 8-thread/128-queue.

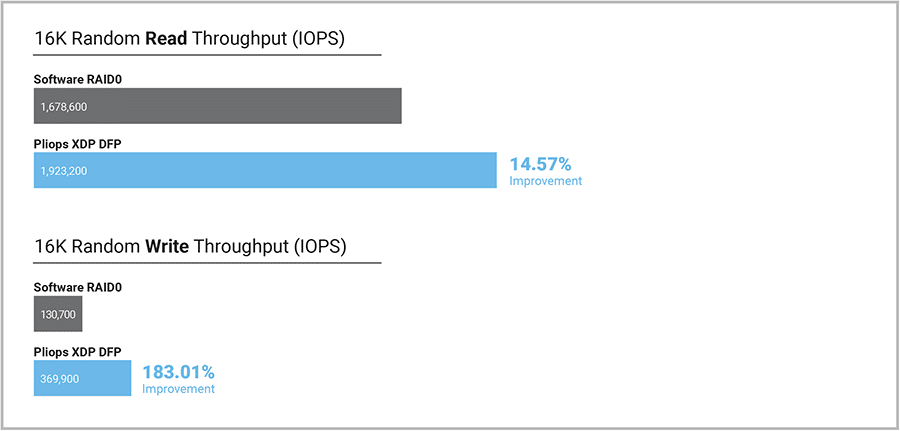

Increasing the block size to 16K, the Pliops XDP led software RAID0 in both read and write workloads. We measured 1.9M IOPS of 16K random read from XDP versus 1.7M IOPS from SW RAID0. In 16K random write, the difference was 370K IOPS from XDP versus 131K IOPS from SW RAID0. Both the random read and random write workloads were tested at 8-thread/128-queue.

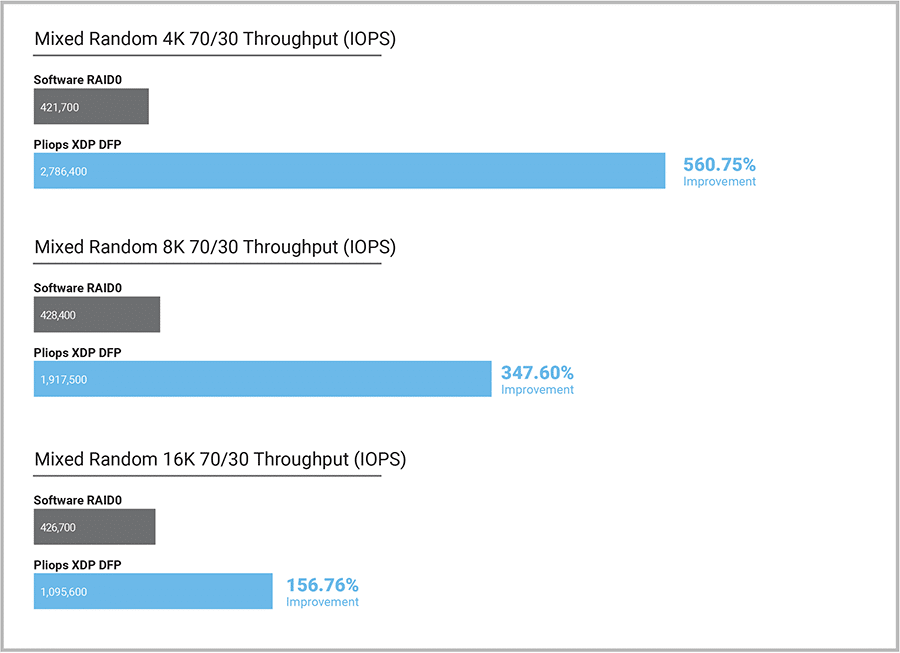

With a mixture of random read and write activity, we worked up through transfer sizes from 4K to 16K. Across the board, Pliops XDP posted large gains. At 4K 70/30, it offered a 561% gain, measuring 2.8M IOPS versus 422K IOPS from SW RAID0. At 8K, the gain was smaller but still substantial at 348%, measuring 1.9M IOPS versus 428K IOPS from SW RAID0. At the 16K transfer size the gap narrowed further, but XDP still had a large 157% improvement, measuring 1.1M IOPS versus 427K IOPS from SW RAID0.

While the previous workloads focused on random transfers, our final test focused on sequential large-block transfer speeds. Here Pliops XDP continued to show substantial gains, especially in write performance. Starting with read bandwidth, we measured 48GB/s from XDP compared to 27GB/s from SW RAID0. In writes, the XDP had a 184% lead with 6.3GB/s compared to 2.2GB/s from SW RAID0.
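The percentage gains quoted in this section follow the usual relative-improvement formula, (XDP / SW RAID0 - 1) x 100. Recomputing them from the rounded figures as printed gives values close to the quoted ones. For example, 4K 70/30 comes out at about 563% versus the quoted 561%. The 4K random write comparison is the exception, coming out near 863% rather than 832% because the 1.3M figure is itself rounded. Presumably the quoted percentages were calculated from unrounded measurements. The helper below is our own.

```python
def percent_gain(new: float, baseline: float) -> float:
    """Relative improvement of `new` over `baseline`, in percent."""
    return (new / baseline - 1.0) * 100.0


# Rounded figures as published in this section: (test, XDP result, SW RAID0 result)
results = [
    ("4K random write (IOPS)", 1.3e6, 135e3),
    ("4K 70/30 (IOPS)", 2.8e6, 422e3),
    ("8K 70/30 (IOPS)", 1.9e6, 428e3),
    ("16K 70/30 (IOPS)", 1.1e6, 427e3),
    ("Sequential write (GB/s)", 6.3, 2.2),
]
for label, xdp, sw_raid0 in results:
    print(f"{label:<26} {percent_gain(xdp, sw_raid0):6.0f}%")
```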

Performance under optimal conditions is only part of the picture, and as drive capacities increase, how long a rebuild takes becomes an important data point. With our 4-drive XDP array of Solidigm P5316 30.72TB SSDs, we simulated a drive failure and rebuild. The rebuild took 450 minutes with background traffic applied. With FIO driving an 8K 70/30 workload at 905MB/s of combined traffic, the array still maintained a rebuild pace of 14.65 min/TB.
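The 14.65 min/TB figure is consistent with dividing the 450-minute rebuild by the failed drive's full 30.72TB capacity. The same inputs imply an average rebuild rate of roughly 1.14GB/s in decimal units, sustained alongside the 905MB/s of foreground traffic. The helper names below are our own.

```python
def rebuild_pace_min_per_tb(rebuild_minutes: float, capacity_tb: float) -> float:
    """Minutes of rebuild time per TB of drive capacity."""
    return rebuild_minutes / capacity_tb


def rebuild_throughput_gb_s(rebuild_minutes: float, capacity_tb: float) -> float:
    """Average rebuild rate in decimal units (1TB = 1,000GB)."""
    return capacity_tb * 1000 / (rebuild_minutes * 60)


print(f"{rebuild_pace_min_per_tb(450, 30.72):.2f} min/TB")
print(f"{rebuild_throughput_gb_s(450, 30.72):.2f} GB/s")
```

This prints 14.65 min/TB and 1.14 GB/s.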

Final Thoughts

Modern enterprise QLC SSDs, like the Solidigm P5316 used in this testing, have the potential to deliver a tremendous blend of performance and capacity. Modern infrastructures, though, require new tools to manage flash. RAID cards of old are cumbersome, while basic software RAID leaves a lot of performance on the table. This reality has opened the door for creative solutions like the Pliops Extreme Data Processor.

We set out to evaluate the performance of the XDP accelerator compared to software RAID, dropping four 30.72TB P5316s into a Dell PowerEdge R750. We also gave software RAID a running start by configuring it as RAID0, while the XDP was set to RAID5.

Looking at the results, we saw huge gains in most tests. With 4K random write performance in particular, we saw an 832% improvement, although 4K random read performance did take a hit. Moving up in block size, though, Pliops XDP showed its strength in both random and sequential transfer scenarios. Even in our random mixed workload with a 70/30 read/write split, the Pliops XDP delivered improvements over SW RAID0 ranging from 561% at 4K to 157% at 16K.

Overall, the Pliops card is straightforward to get operational. As much as we love working with high-capacity QLC SSDs, it can be hard to find systems that properly leverage the media. With the Pliops Extreme Data Processor, the math changes entirely when it comes to server performance on a cost-per-terabyte basis. It's easy enough to try yourself as well, since Pliops offers a PoC to get started.

Pliops sponsors this report. All views and opinions expressed in this report are based on our unbiased view of the product(s) under consideration.
