In artificial intelligence (AI) development, cloud training has changed how models are created, refined, and deployed. Cloud training, the practice of training AI models on remote cloud infrastructure, brings real advantages in scalability, efficiency, and accessibility.

By renting cloud compute, organizations and developers can streamline the AI training process and iterate faster. From on-demand scalability to access to specialized hardware, cloud training lets AI practitioners take on demanding workloads without first building out their own infrastructure.

Who is OVHcloud US?

Although not a household name, OVHcloud US, the US-based subsidiary of OVH Group, offers bare metal servers, hosted private cloud, and hybrid cloud solutions. Its portfolio ranges from dedicated servers for game hosting to bespoke hosted private cloud services for large enterprises, and everything in between. In this review, we explore its Public Cloud compute services, specifically the GPU Cloud powered by NVIDIA Tesla V100S GPUs. These GPU instances are designed for any workload that benefits from parallel processing, whether general machine learning, generative AI, or training specific AI models.

One of the key things we want to look at today is the benefit of cloud-based GPU processing compared to on-prem solutions. There are arguments for both, but OVHcloud US offers some compelling reasons to go with the cloud, even if only to get started on your personal or business AI journey.

The primary selling point is undoubtedly price. Starting at $0.88/hr for a single Tesla V100S with 32GB of VRAM, 14 vCores, and 45GB of memory (the t2-le-45 instance), you can run thousands of GPU-hours before coming close to the cost of an on-prem solution. There is also a cost benefit in supplementing existing in-house GPU machines with cloud-based instances for tasks like occasional re-training of AI models.

OVHcloud US GPU offerings are broken down into the following instances:

| Name | Memory | vCores | GPU | Storage | Public Network | Private Network | Price/hr (USD) |
|---|---|---|---|---|---|---|---|
| t2-45 | 45 GB | 14 | Tesla V100S 32 GB | 400 GB SSD | 2 Gbps | 4 Gbps | $2.191 |
| t2-90 | 90 GB | 28 | 2x Tesla V100S 32 GB | 800 GB SSD | 4 Gbps | 4 Gbps | $4.38 |
| t2-180 | 180 GB | 56 | 4x Tesla V100S 32 GB | 50 GB SSD + 2 TB NVMe | 10 Gbps | 4 Gbps | $8.763 |
| t2-le-45 | 45 GB | 14 | Tesla V100S 32 GB | 300 GB SSD | 2 Gbps | 4 Gbps | $0.88 |
| t2-le-90* | 90 GB | 30 | 2x Tesla V100S 32 GB | 500 GB SSD | 4 Gbps | 4 Gbps | $1.76 |
| t2-le-180* | 180 GB | 60 | 4x Tesla V100S 32 GB | 500 GB SSD | 10 Gbps | 4 Gbps | $3.53 |

*newly released
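Because the instances carry different numbers of GPUs, comparing them on price per GPU-hour is more telling than comparing hourly prices directly. The sketch below divides each hourly price from the table by its GPU count. It assumes (and labels in the code) that t2-le-90 and t2-le-180 carry 2 and 4 GPUs respectively, which is consistent with their prices being 2x and roughly 4x the single-GPU t2-le-45 rate.

```python
# Hourly prices (USD) and GPU counts transcribed from the instance table.
# ASSUMPTION: t2-le-90 and t2-le-180 have 2 and 4 GPUs, inferred from their
# prices being 2x and ~4x the single-GPU t2-le-45 rate.
INSTANCES = {
    "t2-45": (2.191, 1),
    "t2-90": (4.38, 2),
    "t2-180": (8.763, 4),
    "t2-le-45": (0.88, 1),
    "t2-le-90": (1.76, 2),
    "t2-le-180": (3.53, 4),
}


def price_per_gpu_hour(instances):
    """Return {instance name: USD per GPU-hour}."""
    return {name: price / gpus for name, (price, gpus) in instances.items()}


if __name__ == "__main__":
    # Print instances from cheapest to most expensive per GPU-hour.
    rates = price_per_gpu_hour(INSTANCES)
    for name, rate in sorted(rates.items(), key=lambda kv: kv[1]):
        print(f"{name:10s} ${rate:.3f} per GPU-hour")
```

On these figures, every t2-le instance costs roughly 40% of the per-GPU rate of the standard t2 instances; the table itself doesn't say what accounts for that gap.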

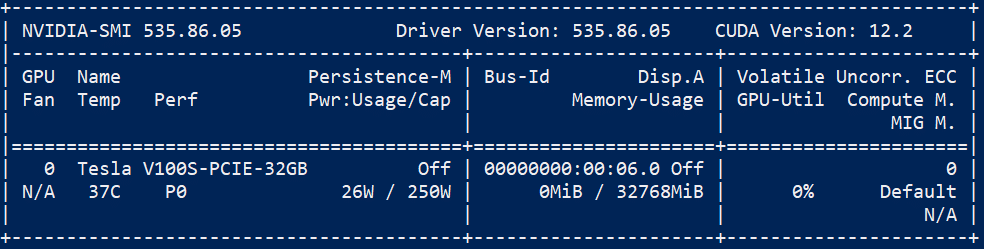

OVHcloud US GPU Servers Hardware

Let’s take a deeper look at the hardware options OVHcloud US offers. At the core of the GPU instances is the NVIDIA Tesla V100S, a compute-optimized GPU with 32GB of HBM2 memory. While the newer NVIDIA A100 has largely superseded it, the V100S still delivers excellent performance and value.

The most important aspect of these instances is that they are cloud-based, so they won’t tie up any systems you have on-site, making them well suited to “set it and forget it” workflows. The GPUs are attached to the instance via PCI passthrough rather than through a virtualization layer, so each card is dedicated to your work.

On the CPU side, OVHcloud US doesn’t specify which CPU SKUs you will get, but it guarantees at least 2.2GHz on all cores, which is fast enough for most applications. Our instance reported an Intel Xeon Gold 6226R with 14 of its 32 threads available to us. vCore counts range from 14 up to 60 across the GPU instances.

OVHcloud US offers other instance types with faster CPUs if your use case calls for them. Memory ranges from 45GB up to 180GB, which should be plenty for most GPU-focused workflows. Storage follows the same pattern, from 300GB to 800GB of SSD on most instances up to a dedicated 2TB NVMe drive on the t2-180.

OVHcloud US GPU Servers – Popular AI Use Cases

Development

Spinning up an instance is fast and cheap enough that OVHcloud US makes a compelling case for even casual developers to experiment with a capable GPU. In principle, you could prepare all of your training data locally, upload it to the cloud storage provider of your choice, spin up an instance, and start training or fine-tuning openly available models.

We tested this scenario by building several LLaMA variants with the Alpaca code and Hugging Face-converted weights. Each variant can be prepared on your own machine, uploaded to Google Drive, downloaded onto the instance, and fine-tuned there. We stuck to the lower-parameter models so they would fit inside the 32GB of VRAM available, and even so, this was far more manageable than buying a comparable card such as a Quadro RTX 8000 for the homelab.
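Whether a model fits in the V100S’s 32GB can be estimated with simple arithmetic before renting anything. The sketch below uses common rules of thumb, which are assumptions rather than figures from our testing: about 2 bytes per parameter for fp16 weights, 1 byte for 8-bit quantized weights, and roughly 16 bytes per parameter for full fine-tuning with Adam in mixed precision. It ignores activations, KV cache, and framework overhead, so real usage will be higher.

```python
# Rough VRAM estimates for LLM weights and training state.
# ASSUMPTIONS: bytes-per-parameter values are common rules of thumb;
# activations, KV cache, and framework overhead are NOT included.
GIB = 1024 ** 3
V100S_VRAM_GIB = 32  # 32GB HBM2 card, treated as 32 GiB

BYTES_PER_PARAM = {
    "fp16 inference": 2,  # fp16/bf16 weights only
    "int8 inference": 1,  # 8-bit quantized weights only
    # fp16 weights (2) + fp16 grads (2) + fp32 master weights (4)
    # + two fp32 Adam moment buffers (8) = 16 bytes per parameter
    "full fine-tune (Adam, mixed precision)": 16,
}


def estimate_gib(n_params, bytes_per_param):
    """Memory in GiB for n_params parameters at bytes_per_param each."""
    return n_params * bytes_per_param / GIB


def report(n_params):
    for mode, bpp in BYTES_PER_PARAM.items():
        need = estimate_gib(n_params, bpp)
        verdict = "fits" if need <= V100S_VRAM_GIB else "does not fit"
        print(f"{n_params / 1e9:.0f}B params, {mode}: ~{need:.1f} GiB, "
              f"{verdict} in {V100S_VRAM_GIB} GiB (before activations)")


if __name__ == "__main__":
    for size in (7e9, 13e9):
        report(size)
```

By this estimate, the fp16 weights of a 13B model fit for inference, but the full fine-tuning state of even a 7B model exceeds 32GB, which is why smaller models or parameter-efficient methods such as LoRA are the usual route on a single card. Treat the output as a first-pass filter rather than a guarantee.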

Small businesses could use this strategy to provide a developer or team access to GPU computing now rather than waiting for hardware or significant budget approvals.

Inferencing

The V100S is a great GPU for inferencing LLMs that fit into its memory. Inference times will differ from what you get with services like ChatGPT, but the tradeoff comes with the perk of running your own private model. As usual, running a cloud service with 24/7 uptime incurs ongoing costs, but at the current $0.88 per hour it would take months of continuous use to approach the cost of the infrastructure needed to do the same on-prem.
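The “months of continuous use” comparison is easy to make concrete. This sketch computes the monthly cost of an always-on instance and how many months of rental add up to a one-time purchase. The $10,000 on-prem figure is a hypothetical placeholder, not a quote; substitute your own, and note that the sketch ignores on-prem power, cooling, and admin costs, which would push the break-even point further out.

```python
# Cost of keeping a GPU instance running around the clock.
HOURS_PER_MONTH = 730  # average: 8,760 hours per year / 12


def monthly_cost(rate_per_hour, hours=HOURS_PER_MONTH):
    """USD for running an instance for `hours` hours at `rate_per_hour`."""
    return rate_per_hour * hours


def months_to_match(upfront_cost, rate_per_hour):
    """Months of 24/7 rental whose cumulative cost equals a one-time purchase.

    Ignores on-prem power, cooling, hosting, and admin costs.
    """
    return upfront_cost / monthly_cost(rate_per_hour)


if __name__ == "__main__":
    rate = 0.88  # t2-le-45 hourly price
    onprem_cost = 10_000  # HYPOTHETICAL placeholder: use a real quote
    print(f"24/7 monthly cost: ${monthly_cost(rate):,.2f}")
    print(f"Months to match ${onprem_cost:,}: "
          f"{months_to_match(onprem_cost, rate):.1f}")
```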

Image Recognition

Extracting data from images to classify them, identify an element, or build richer documents is a requirement for many industries. With frameworks like Caffe2 (now part of PyTorch) combined with the Tesla V100S GPU, medical imaging, social networks, public protection, and security applications become more accessible.

Situation Analysis

In some cases, real-time analysis is required to react appropriately to varied and unpredictable situations, as in self-driving cars and internet network traffic analysis. This is where deep learning comes in: neural networks learn to handle these situations from training data rather than from hand-written rules.

Human Interaction

In the past, people learned to communicate with machines. We are now in an era where machines are learning to communicate with people. Whether through speech recognition or emotion recognition from sound and video, tools such as TensorFlow push the boundaries of these interactions, opening up many new uses.

Hands-On Impressions

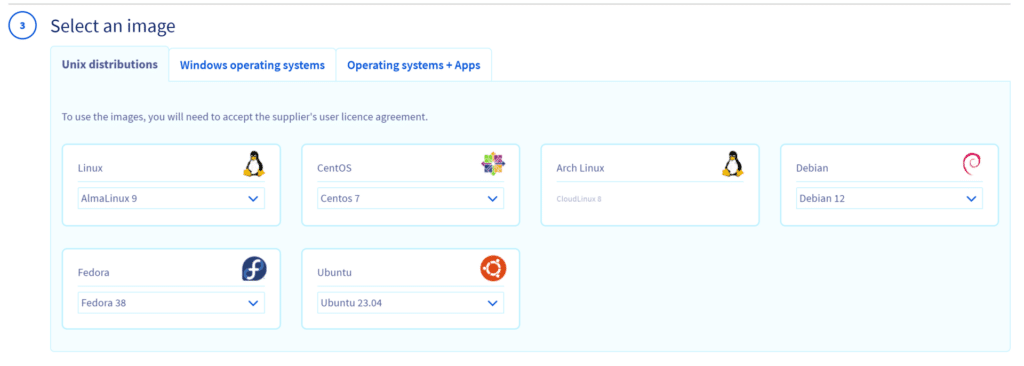

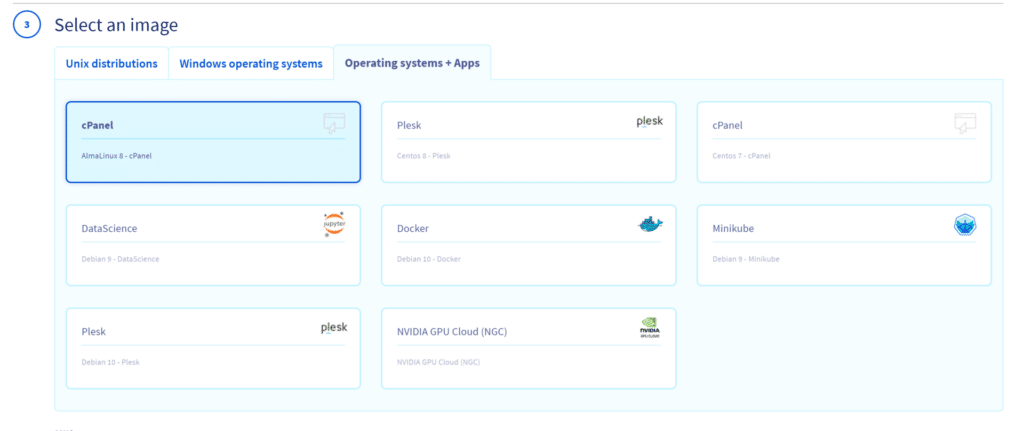

Starting out, the OVHcloud US portal was intuitive, and getting set up was simple: create an account, add a payment method, create some SSH keys, select the instance, grab a Red Bull, and SSH over to your new GPU box. We used Ubuntu Server, but other Linux distributions are available, including Fedora, Arch, Debian, CentOS, AlmaLinux, and Rocky.

There is also the option to install various OS images that include apps like Docker.
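Once you’re logged in, a quick sanity check is to confirm that the passed-through GPU is visible. The sketch below shells out to `nvidia-smi` with its `--query-gpu` and `--format=csv,noheader` options and parses the result. It assumes the NVIDIA driver (which provides `nvidia-smi`) is already installed on the image; the helper names are illustrative, not part of any OVHcloud tooling.

```python
import subprocess

# Fields requested from nvidia-smi, one CSV line per GPU.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total,driver_version",
    "--format=csv,noheader",
]


def parse_gpu_csv(text):
    """Parse nvidia-smi CSV output into a list of dicts, one per GPU."""
    gpus = []
    for line in text.strip().splitlines():
        name, memory, driver = [field.strip() for field in line.split(",")]
        gpus.append({"name": name, "memory": memory, "driver": driver})
    return gpus


def list_gpus():
    """Run nvidia-smi and return the GPUs it reports."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return parse_gpu_csv(out)


if __name__ == "__main__":
    try:
        for gpu in list_gpus():
            print(gpu)
    except (FileNotFoundError, subprocess.CalledProcessError):
        print("nvidia-smi unavailable or failed: is the NVIDIA driver installed?")
```

On a t2-le-45 you would expect one entry; the multi-GPU instances should list one line per card.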

Hands-on, we found the OVHcloud US GPU Server user-friendly and responsive. Instance setup was straightforward, and accessing the GPU resources was essentially seamless. Whether testing machine learning models or handling large-scale data processing tasks, the OVHcloud US GPU instances performed well.

Additionally, the flexibility to scale resources let us tailor the environment to our specific needs, and both the user interface and the underlying hardware made for a smooth, efficient workflow. Support for popular AI frameworks, combined with the NVIDIA Tesla V100S GPUs, made our model training and inferencing experiments not only possible but highly effective.

The ability to augment our in-house resources with these cloud-based solutions confirmed OVHcloud US as an attractive option for both beginners taking their first steps in AI and seasoned professionals looking for reliable and cost-effective solutions.

We ran a couple of benchmarks to test the CPU allocation and the V100S implementation: first the Blender-CLI benchmark, then our favorite CPU benchmark, y-cruncher.

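Blender-CLI (samples per minute, higher is better)

| Test | Score |
|---|---|
| GPU Monster | 1112.95022 |
| GPU Junkshop | 754.813874 |
| GPU Classroom | 603.196188 |
| CPU Monster | 113.467036 |
| CPU Junkshop | 62.223543 |
| CPU Classroom | 50.618349 |

y-cruncher (lower is better)

| Test | Time |
|---|---|
| y-cruncher 1b | 40.867 seconds |
| y-cruncher 2.5b | 113.142 seconds |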
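The benchmark numbers can be turned into two quick ratios: how much faster the V100S renders than the instance’s 14 vCores in each Blender scene (Blender benchmark scores are reported in samples per minute), and how y-cruncher’s run time scales from 1 billion to 2.5 billion digits. The sketch below just does that arithmetic on the reported results.

```python
# Blender-CLI scores (samples per minute, higher is better) and
# y-cruncher times (seconds, lower is better) from our benchmark runs.
BLENDER = {
    "Monster": {"gpu": 1112.95022, "cpu": 113.467036},
    "Junkshop": {"gpu": 754.813874, "cpu": 62.223543},
    "Classroom": {"gpu": 603.196188, "cpu": 50.618349},
}
Y_CRUNCHER = {1.0e9: 40.867, 2.5e9: 113.142}  # digits -> seconds


def gpu_speedups(scores):
    """Ratio of V100S throughput to 14-vCore CPU throughput, per scene."""
    return {scene: s["gpu"] / s["cpu"] for scene, s in scores.items()}


def scaling(times):
    """Return (growth in digits, growth in time) between the two runs."""
    (n_small, t_small), (n_large, t_large) = sorted(times.items())
    return n_large / n_small, t_large / t_small


if __name__ == "__main__":
    for scene, ratio in gpu_speedups(BLENDER).items():
        print(f"{scene:9s} GPU/CPU: {ratio:.1f}x")
    digits_x, time_x = scaling(Y_CRUNCHER)
    print(f"y-cruncher: {digits_x:.1f}x the digits took {time_x:.2f}x the time")
```

On these results, the V100S renders roughly 10 to 12 times more samples per minute than the allocated vCores, and y-cruncher took about 2.77x as long for 2.5x the digits, slightly worse than linear, which is consistent with the super-linear cost of arbitrary-precision arithmetic.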

The t2-le-45 instance delivered results consistent with its specifications, with no surprises in its performance metrics. With the V100S onboard, it can clearly handle intensive inferencing tasks and supports cloud-based training effectively, in terms of both performance and cost.

Closing Thoughts

As with everything in enterprise IT, there are pros and cons, and AI training in the cloud is no exception. Cloud training with OVHcloud US GPU Servers offers scalability, cost efficiency, and faster development, and opens the door to innovation and experimentation. Specialized hardware, global accessibility, and easy collaboration make cloud-based AI ripe for groundbreaking discoveries.

These advantages exist alongside the realities of data security concerns and network stability. Combined with a potentially steep learning curve, these concerns mean cloud training for AI should be approached with some caution, with each organization charting a strategic course that aligns with its unique needs and priorities.

Although we only covered the GPU Servers here, OVHcloud US offers a comprehensive set of services. Support was friendly and followed up after our initial sign-up to see if we needed assistance. The portal was intuitive and easy to understand, and the system performed exactly as expected. The only downside might be the limited number of regions, but that is easily overlooked given the cost and simplicity. OVHcloud US gets a solid recommendation and is going in our back pocket as a potential cloud provider for future projects that need to run outside the lab, or that just need a little rented extra horsepower to get the job done.

As this field evolves, it is essential to approach cloud training for AI with a balanced perspective, embracing the opportunities while pragmatically addressing the challenges. Getting the most out of it depends on understanding how these pros and cons interact and crafting strategies that leverage the former while mitigating the latter.
