VAST Data has announced support for the next-generation storage platform named Ceres. Enabled by VAST’s Universal Storage data platform, Ceres is built on the latest hardware technologies, such as NVIDIA BlueField DPUs (data processing units). On the storage side, Ceres takes advantage of cost-optimized, highly dense Solidigm E1.L ruler flash drives and storage-class memory (SCM) SSDs. This combination of hardware yields an all-new VAST data node (DNode) that improves performance, simplifies serviceability, and reduces data center costs.

VAST Data Ceres 1U DNode

According to CMO and co-founder Jeff Denworth, VAST’s core mission is to simplify infrastructure, making it easier to deploy and manage while also being more cost-effective. VAST set out to build a data management system that could scale simply and cost-effectively. The key was a flash-based system that would meet performance and longevity demands while remaining low-cost.

We spent a few days with VAST Data to get a better sense of how this all comes together. After all, VAST is a software company. In days past, that meant customers or system integrators selected compatible hardware off a compatibility list. VAST works a little differently. VAST’s hardware partner, Avnet, assembles the hardware, but the end solution feels more like an appliance than traditional software-defined storage.

Ultimately, the progress in the hardware that runs VAST’s Universal Storage platform is a major differentiator. The ability to leverage emerging data transport technology like NVIDIA BlueField makes the hardware unique. Outside of a handful of startups, there really hasn’t been a fundamental shift in data storage architectures since the introduction of flash and hybrid storage arrays. That innovation malaise quite clearly ends today with the launch of VAST Ceres DNodes.

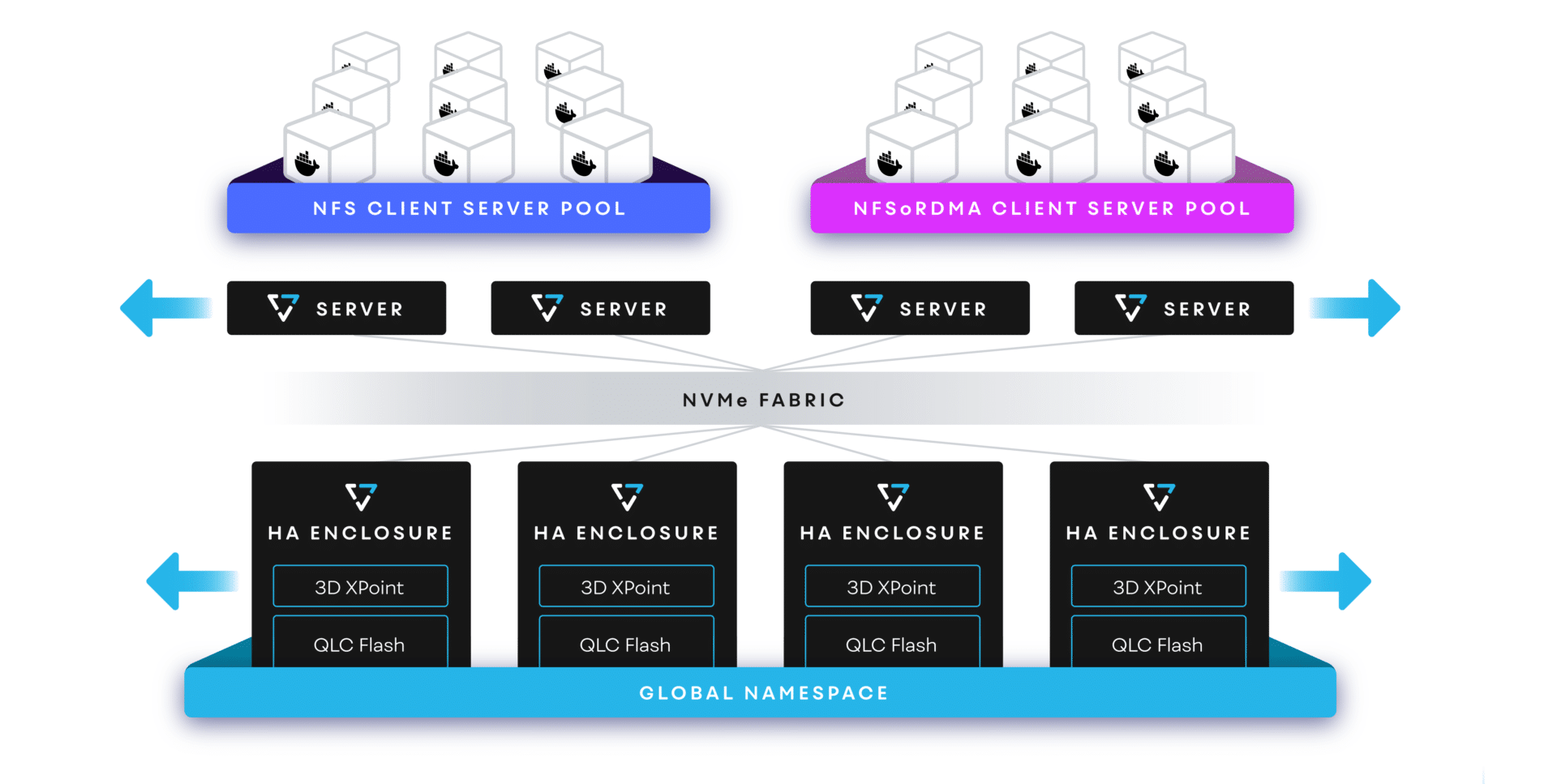

VAST DASE

The new architecture was created to solve the scalability problem by giving stateless containers access to a large number of drives while eliminating the need for those containers to coordinate I/O operations with each other. To do this, VAST designed DASE (DisAggregated Shared Everything), an architecture whose data structures live in low-cost flash housed in VAST NVMe enclosures.

VAST systems form a single cluster and a single storage pool across storage enclosures holding different numbers of different-sized SSDs, and front-end servers with different numbers of cores or even different CPU architectures. This allows VAST users to run clusters with multiple generations of VAST hardware seamlessly.

In VAST’s DASE architecture, all the SSDs are shared and directly addressed by all the front-end protocol servers via NVMe-oF. VAST’s data placement methods operate at the device, not the node/enclosure level. The system selects the SSDs to write each erasure-coded stripe across based on the performance, load, capacity, and endurance of all the SSDs in the system. This load balances across enclosures holding SSDs of different capacities and performance levels.
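Device-level placement of this kind can be sketched as a scoring problem. The minimal example below assumes each SSD reports normalized metrics for the four factors named above, combines them with equal weights, and picks the best-scoring distinct devices for one stripe. The `SSD` fields, the weighting, and the function names are hypothetical; VAST has not published its actual placement policy in this form.

```python
from dataclasses import dataclass


@dataclass
class SSD:
    # Hypothetical per-device metrics, each normalized to the range 0..1.
    name: str
    enclosure: str
    performance: float     # relative speed; higher is better
    load: float            # current utilization; lower is better
    free_capacity: float   # fraction of capacity still free; higher is better
    endurance_left: float  # fraction of rated write endurance remaining


def score(ssd: SSD) -> float:
    """Combine the four placement factors into one score (assumed equal weights)."""
    return ssd.performance + (1.0 - ssd.load) + ssd.free_capacity + ssd.endurance_left


def choose_stripe(ssds: list[SSD], width: int) -> list[SSD]:
    """Pick `width` distinct SSDs for one erasure-coded stripe, best score first.

    Selection works per device, not per enclosure, so drives from any mix of
    enclosures (and hardware generations) can share a stripe.
    """
    if width > len(ssds):
        raise ValueError("stripe is wider than the number of available SSDs")
    return sorted(ssds, key=score, reverse=True)[:width]


pool = [
    SSD("a1", "encl-A", 0.9, 0.2, 0.5, 0.9),
    SSD("a2", "encl-A", 0.9, 0.8, 0.5, 0.9),
    SSD("b1", "encl-B", 0.6, 0.1, 0.9, 0.8),
    SSD("b2", "encl-B", 0.6, 0.3, 0.2, 0.4),
]
print([s.name for s in choose_stripe(pool, 3)])  # ['b1', 'a1', 'a2']
```

Because the choice is made per device, a lightly loaded, emptier drive (here `b1`) can outrank a faster but busier one (`a2`), which is how enclosures of different capacities and performance levels end up sharing the load.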

The system similarly load-balances across front-end protocol servers of different performance levels by resolving DNS requests and assigning shards of system housekeeping to the protocol servers with the lowest CPU utilization.
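A minimal sketch of least-loaded selection, assuming the cluster knows each protocol server's current CPU utilization. `resolve_vip`, `assign_shards`, and the per-shard CPU cost are hypothetical illustrations of the idea, not VAST's DNS implementation.

```python
def resolve_vip(cpu_utilization: dict[str, float]) -> str:
    """Return the protocol server address with the lowest CPU utilization.

    Stands in for the DNS answer a client would receive. Ties are broken by
    address so the result is deterministic.
    """
    if not cpu_utilization:
        raise LookupError("no protocol servers available")
    return min(cpu_utilization, key=lambda addr: (cpu_utilization[addr], addr))


def assign_shards(shards: list[str], cpu_utilization: dict[str, float],
                  cost_per_shard: float) -> dict[str, str]:
    """Greedily give each housekeeping shard to the least-busy server.

    After each assignment, the chosen server's load is bumped by an assumed
    `cost_per_shard`, so successive shards spread across servers.
    """
    load = dict(cpu_utilization)  # copy so the caller's dict is untouched
    placement: dict[str, str] = {}
    for shard in shards:
        target = resolve_vip(load)
        placement[shard] = target
        load[target] += cost_per_shard
    return placement


servers = {"10.0.0.1": 0.70, "10.0.0.2": 0.30, "10.0.0.3": 0.45}
print(resolve_vip(servers))  # 10.0.0.2
print(assign_shards(["gc", "scrub", "rebuild"], servers, cost_per_shard=0.2))
# {'gc': '10.0.0.2', 'scrub': '10.0.0.3', 'rebuild': '10.0.0.2'}
```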

All of this allows VAST clusters to create a single, load-balanced namespace across heterogeneous protocol servers, enclosures, and SSDs of multiple generations. VAST users simply join new servers and/or enclosures to their clusters, and evict appliances when they reach the end of their useful lives.

VAST Servers are stateless containers that run all of the logic of a VAST Cluster in standard x86 servers. Using NVMe over Fabrics, each server enjoys DAS-like, low-latency access to every NVMe Flash and Storage Class Memory storage device.

Containers make it simple to deploy and scale VAST as a software-defined microservice while also laying the foundation for a much more resilient architecture where container failures are non-disruptive to system operation, forming the world’s first web-scale “disaggregated, shared everything” architecture.

VAST NVMe enclosures are highly available, high-density flash storage JBOFs. Storage processing has been decoupled from the enclosures, so the system is disaggregated. Because no storage logic runs in the enclosures, organizations can size storage capacity independently from compute to right-size their environment. Since the system is fully fault-tolerant, clusters can be built from as little as one enclosure and can scale to over 1,000 enclosures.

It was critical not only to address the need for all the systems to communicate with each other, but also to scale linearly. The new system is designed to get the most efficiency out of flash drives using an algorithm called global codes. VAST shapes writes as they move through the system using a combination of memory and flash, minimizing flash wear. VAST has realized double the flash longevity (exceeding SSD vendor warranties) with its Universal Storage software.

VAST’s focus was not on ultimate performance, but on the cost of infrastructure and the simplicity benefits that come from consolidation. VAST set out to democratize flash for every data center, every application, and every user. Since performance was not the ultimate goal, VAST realized that the aggregate performance of petabytes to exabytes of resilient, affordable flash capacity would be enough to enable the modern computing agenda. IOPS and bandwidth become a byproduct of flash capacity, and everything becomes ‘VAST Enough.’

VAST has evolved into an advanced storage vendor while remaining flash agnostic. The original VAST hardware was a 2U rackmount that could house 1.3PB of flash, while the newest model is a 1U rackmount unit. And that brings us to Ceres and the collaboration with NVIDIA.

NVMe-oF and Universal Storage

A key aspect of the VAST solution is advanced software engineering. Data-driven applications such as big data analytics, machine learning, and deep learning need to be fed more data to be effective, and tiering data from flash to archive keeps that data out of their reach. Although hard drives have long been considered the cost-effective medium for storing data, they come with costs of their own: hard drive performance stays roughly constant even as drives grow in density, so performance per terabyte drops.

Silicon storage, aka flash, was designed to eliminate the performance degradation inherent in HDD media. However, innovations in flash technology have not kept pace with enterprise demands for density and performance, forcing customers to continue to compromise. Historically, enterprise flash systems have cost much more than HDD-based storage, so flash has been reserved for only the most valuable data.

VAST decided to solve the problem by democratizing flash storage infrastructure for all data by combining new storage algorithms with new technologies, challenging fundamental assumptions on how storage can be architected and deployed. The solution is to write at storage-class memory speeds, read at NVMe speeds, and scale to millions of IOPS and TB/s. NVMe-over-Fabrics (NVMe-oF) enables commodity data center networks to transform into scalable storage fabrics that combine the performance of NVMe DAS with the efficiency of shared storage infrastructure.

To meet the cost/performance demands, VAST turned to QLC flash, which enables the economic goals of the VAST concept while still providing the NVMe flash performance needed to power the world’s most demanding applications. Quad-level cell (QLC) SSDs are the fourth and latest generation in flash memory density and therefore cost the least to manufacture. Storing four bits per cell instead of three, QLC holds 33% more data in the same space than triple-level cell (TLC) SSDs.
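The 33% figure follows directly from bits per cell, assuming the same number of cells. A small helper makes the comparison explicit:

```python
# Bits stored per cell for each NAND generation.
BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}


def density_gain_percent(new: str, old: str) -> float:
    """Extra data stored by `new` vs `old` in the same number of cells, in percent."""
    return (BITS_PER_CELL[new] / BITS_PER_CELL[old] - 1) * 100


print(f"QLC vs TLC: {density_gain_percent('QLC', 'TLC'):.0f}% more data")  # QLC vs TLC: 33% more data
```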

While QLC brings the cost per GB of flash down to unprecedentedly low levels, squeezing more bits into each cell comes at a price. Each successive generation of flash reduced cost by fitting more bits in a cell, but also had lower endurance, wearing out after fewer write/erase cycles. The differences in endurance across flash generations are huge: the first generation of NAND (SLC) could be overwritten 100,000 times, while QLC endurance is 100x lower. That is a significant trade-off, which is why storage vendors that employ QLC SSDs have to do so in creative ways.
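A simplified endurance model makes the trade-off concrete and shows why reducing write amplification matters as much as raw cycle counts. The formula is a common first-order approximation, and the 10TB capacity and write-amplification values below are illustrative assumptions, not VAST or Solidigm specifications.

```python
SLC_PE_CYCLES = 100_000
QLC_PE_CYCLES = SLC_PE_CYCLES // 100  # "100x lower" -> 1,000 cycles


def lifetime_host_writes_tb(capacity_tb: float, pe_cycles: int,
                            write_amplification: float) -> float:
    """Rough total host data (TB) writable before the NAND wears out.

    Simplified model: each cell survives `pe_cycles` program/erase cycles,
    and every byte the host writes costs `write_amplification` bytes of
    NAND programming inside the drive.
    """
    if write_amplification <= 0:
        raise ValueError("write amplification must be positive")
    return capacity_tb * pe_cycles / write_amplification


# Hypothetical 10 TB QLC drive under two different write patterns.
print(lifetime_host_writes_tb(10, QLC_PE_CYCLES, 4.0))  # 2500.0
print(lifetime_host_writes_tb(10, QLC_PE_CYCLES, 1.0))  # 10000.0
```

In this model, cutting write amplification from 4 to 1 quadruples the usable lifetime of the same QLC drive, which is the lever VAST's write handling aims at.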

VAST’s Universal Storage systems were designed to minimize flash wear by using new data structures that align with the internal geometry of low-cost QLC SSDs, and a large Storage Class Memory write buffer to absorb writes, providing the time, and space, to minimize flash wear. The combination allows VAST Data to warranty QLC flash systems for 10 years, positively impacting system ownership economics.
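The internals of VAST's buffer are not described here, so the following is only a toy model of the general idea, assuming a fixed, hypothetical flush unit (`block_size`): small incoming writes collect in a persistent buffer standing in for SCM, and reach QLC only as full, block-sized writes.

```python
class WriteBuffer:
    """Toy model of a persistent write buffer in front of QLC flash.

    `pending` stands in for data held in SCM; `flushed_blocks` records the
    large, block-aligned writes that would be sent to QLC.
    """

    def __init__(self, block_size: int):
        if block_size <= 0:
            raise ValueError("block_size must be positive")
        self.block_size = block_size
        self.pending = bytearray()
        self.flushed_blocks: list[bytes] = []

    def write(self, data: bytes) -> None:
        self.pending += data
        # Flush only whole blocks; any remainder waits in the buffer.
        while len(self.pending) >= self.block_size:
            self.flushed_blocks.append(bytes(self.pending[: self.block_size]))
            del self.pending[: self.block_size]


buf = WriteBuffer(block_size=8)
for chunk in (b"abc", b"defgh", b"ij", b"klmnop"):
    buf.write(chunk)
print(len(buf.flushed_blocks), bytes(buf.pending))  # 2 b''
```

Here four small writes of uneven size reach the flash as two full-block writes, the kind of alignment with SSD geometry that keeps QLC wear down.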

Storage Class Memory

Storage Class Memory, a new non-volatile storage medium positioned between flash and DRAM, is the enabling technology that makes it possible to field QLC in enterprise environments.

Storage Class Memory is a persistent memory technology with lower latency and higher endurance than the NAND flash used in SSDs, while retaining flash’s ability to hold data without external power. Universal Storage systems use Storage Class Memory as a high-performance write buffer, which enables the deployment of low-cost QLC flash for the system’s data store, and as a global metadata store.

A Universal Storage cluster includes tens to hundreds of terabytes of Storage Class Memory capacity. In the VAST DASE architecture, SCM’s benefits include extremely low latency, 100 percent persistence, and low cost compared to DRAM. While VAST supports SCM SSDs from Intel and KIOXIA today, the platform can support other drives as they come to market.

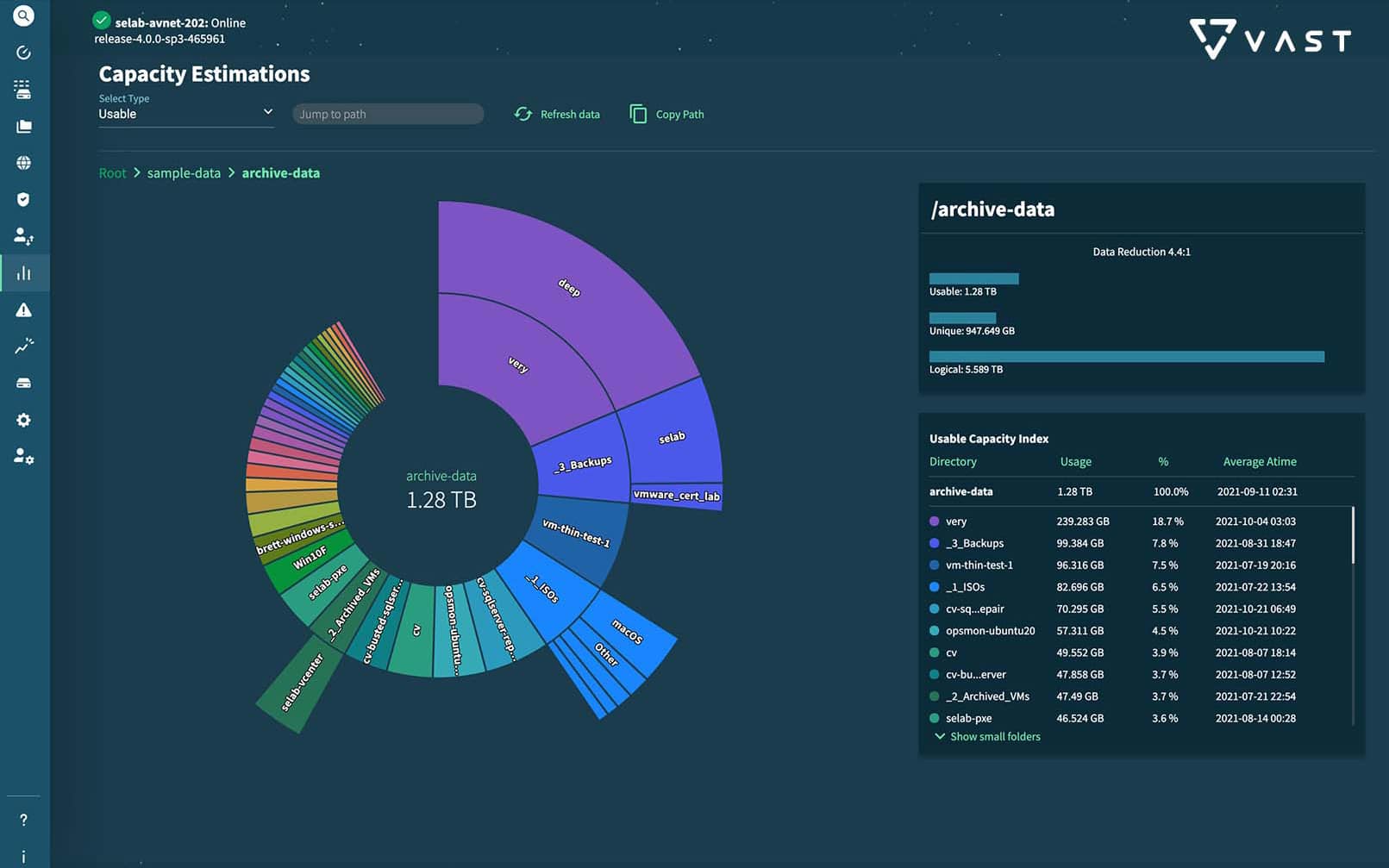

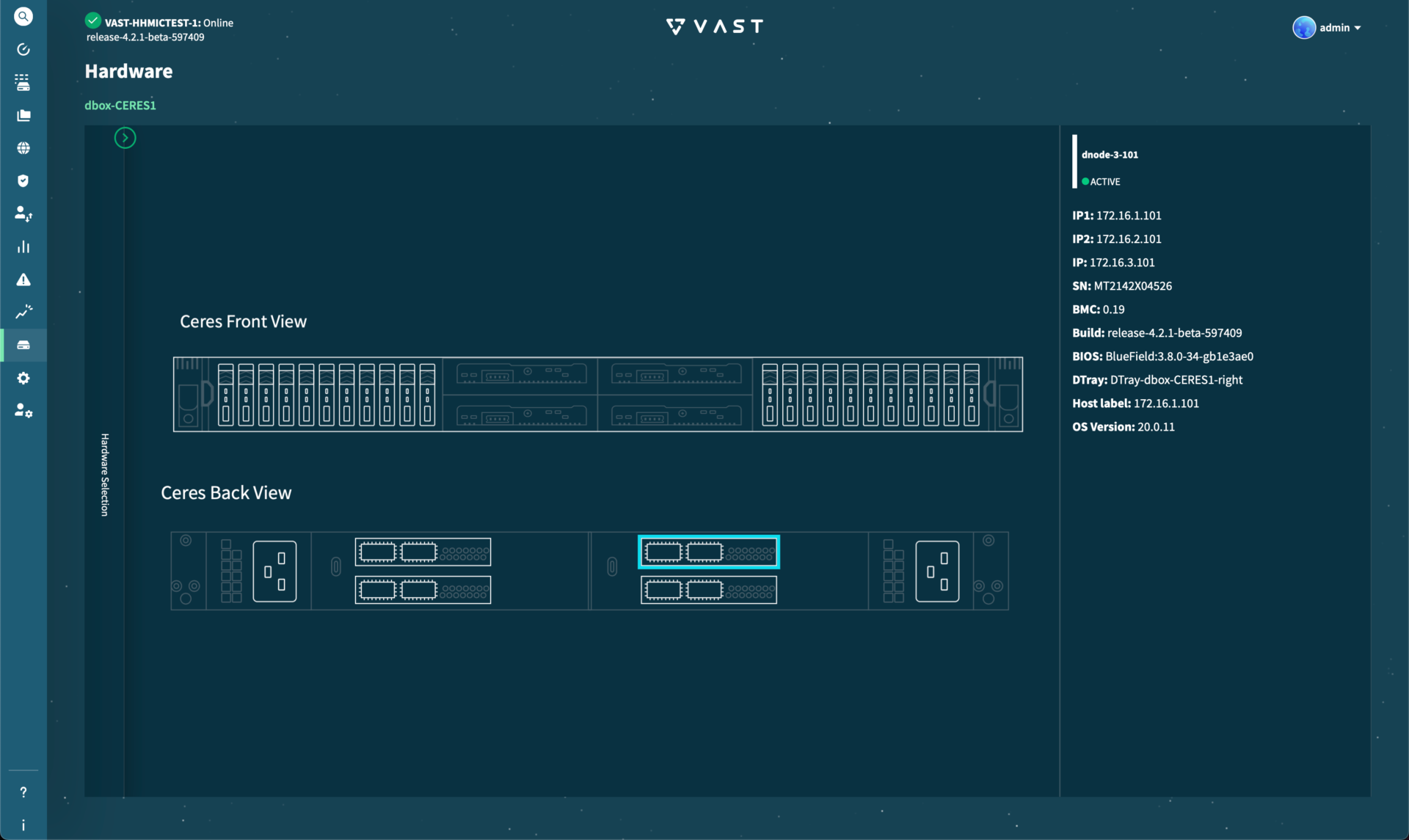

Universal Storage GUI

Access to a GUI for configuration, management, and maintenance of the storage media is unusual in the storage management realm. Systems of this nature tend to be CLI-driven, so Universal Storage’s easy-to-use graphical interface, which makes the storage administrator’s life easier, is a significant differentiator for VAST.

This display shows the estimated usable capacity of each drive. The left column lets the administrator select any of the available functions. Each “slice” in the graphic shows drive usage, expressed as usable capacity, with details for the selected slice provided on the right.

The dashboard display indicates details on capacity, physical and logical usage, overall performance, and along the bottom, the read/write bandwidth, IOPS, and overall latency.

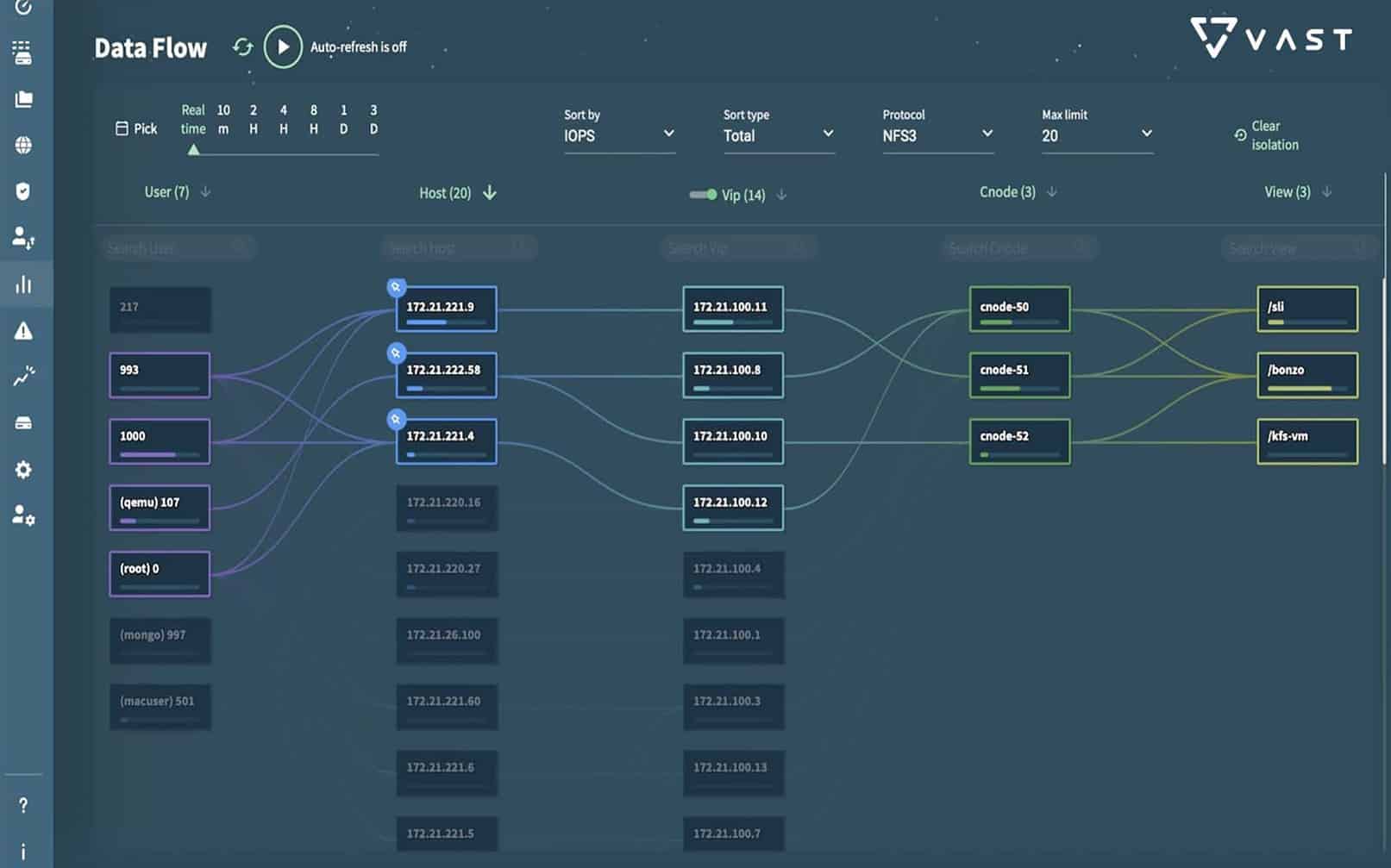

The data flow display is a particularly helpful tool. It shows the path from the user origin through the host IP, VIP, and CNode to the destination. Typically, this would be traced via the command line for each hop along the way, with no graphical display. This screen alone could cut troubleshooting time by tracking the data path for each user.

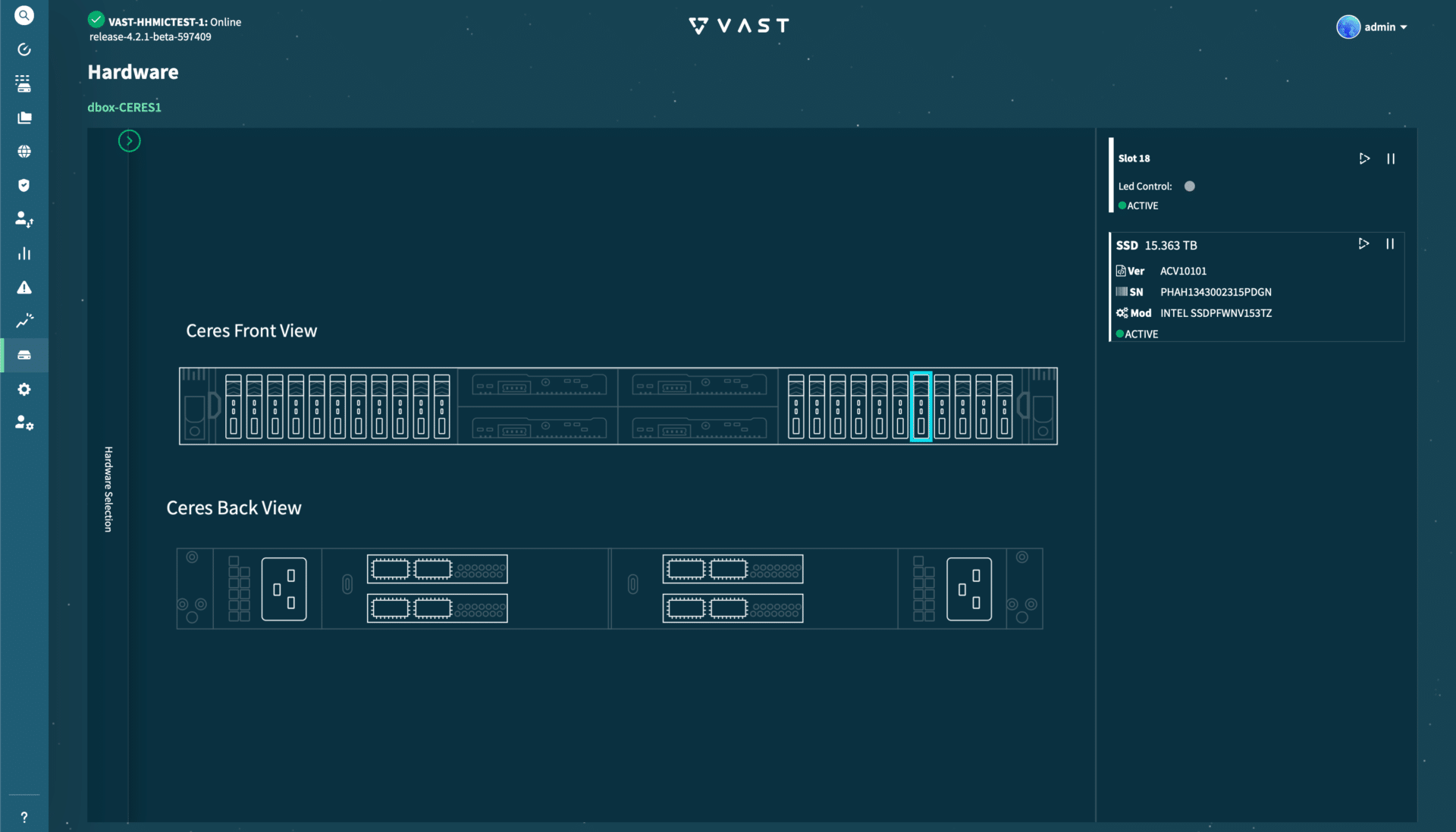

The GUI also has the option to display the front and rear view of the hardware. The selected drive is outlined on the screen from the Ceres front view. Intuitive visual indicators also aid in serviceability in the event an SSD needs to be replaced.

On the same screen, it is possible to select one of the SSDs in use from the rear of the server.

VAST Data Ceres DPUs

The new Ceres storage platform pairs NVIDIA BlueField DPUs with ruler-based hyperscale flash drives as the disaggregated building blocks of scalable data clusters. VAST’s Universal Storage supports Ceres as its next-gen, high-performance NVMe enclosure.

Jeff Denworth, CMO of VAST Data, explained:

“A year ago, we shared our vision for hyperscale data infrastructure to the industry, and we’ve been amazed by the collaboration and support for this vision that has come back from industry partners. While explosive data growth continues to overwhelm organizations who are increasingly challenged to find value in vast reserves of data, Ceres enables customers to realize a future of at-scale AI and analytics on all of their data as they build to SuperPOD scale and beyond.”

VAST and industry partners designed Ceres to advance storage into the modern AI era, bringing new speed, resilience, modularity, and data center efficiency. Ceres further advances VAST’s mission to equip enterprises and service providers with capabilities that have otherwise been the exclusive domain of the world’s largest hyperscale cloud providers. VAST Universal Storage software powers the new hardware platform, enabling customers to adopt cutting-edge technologies.

This new platform offers increased performance and improved power and space efficiency. Taking advantage of NVIDIA BlueField DPU technology makes it possible to build NVMe enclosures without large, power-hungry x86 processors. By moving NVMe-oF services from x86 servers to BlueField DPUs, NVIDIA technology makes possible a 1U form factor capable of delivering over 60GB/s of performance per enclosure. VAST’s DASE architecture is positioned to leverage DPU-based systems because it decouples storage processing from the flash layer.

VAST Data Ceres Hardware Layout

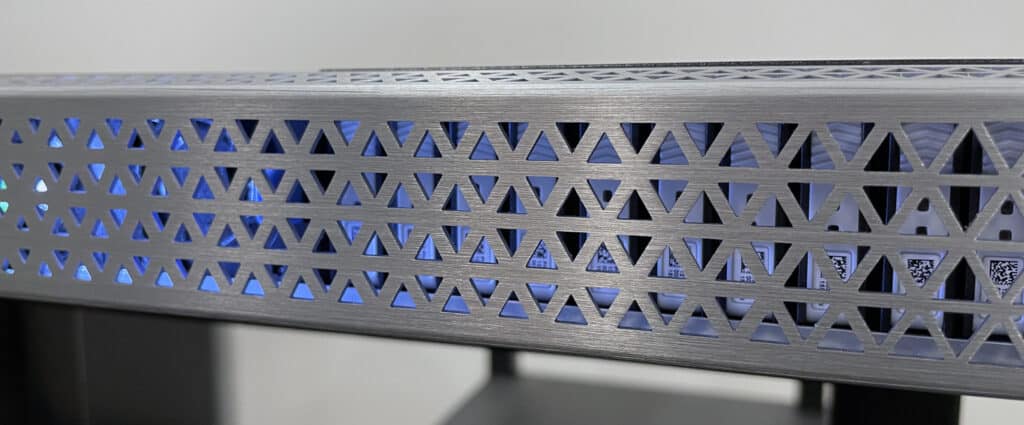

At first or second glance, the VAST Ceres looks like a typical 1U server with a pretty slick bezel. The stylish faceplate is designed for airflow, and it even lights up the front SSDs with some cool illumination when powered on. The VAST logo also lights up in the company’s color scheme, a nice aesthetic touch. The attention to detail on the exterior carries through to the internals, which reveal a storage server that is anything but typical.

With the bezel removed, you finally start to see just how unique and flash-dense this server really is. Up front are 22 E1.L SSDs, in this case Solidigm P5316 drives in either 15.36TB or 30.72TB capacities. These drives are also offered in the 2.5″ U.2 form factor, but density per rack unit is reduced considerably. E1.L SSDs also have distinct cooling advantages, since the very long body provides a large surface area to shed heat.

The E1.L form factor is very long, hence the nickname “ruler.” These drives measure just over 12.5″ long, which gives you an idea of how much space they take up in the first foot of the server alone. And while almost 340TB or 675TB of QLC flash (depending on the drives selected) is nothing to shake a stick at, there is even more flash located behind the middle component of the server. It’s worth noting that this is just the raw storage footprint of the QLC drives; VAST offers data reduction on top for even better density.
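The raw capacity figures follow from the 22 front slots, assuming the P5316’s 15.36TB and 30.72TB capacity points:

```python
E1L_SLOTS = 22  # front-mounted E1.L drives in a Ceres DNode


def raw_capacity_tb(drives: int, drive_tb: float) -> float:
    """Raw QLC capacity in TB, before any data reduction."""
    return drives * drive_tb


for drive_tb in (15.36, 30.72):
    print(f"{E1L_SLOTS} x {drive_tb} TB = {raw_capacity_tb(E1L_SLOTS, drive_tb):.2f} TB raw")
```

This prints 337.92TB and 675.84TB, which line up with the roughly 338TB entry point and 675TB maximum quoted for Ceres.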

The front logo block hides four more SSD trays and acts as an integral cooling component to the front of the chassis. This block has three fans, which provide additional cooling capabilities down the center of the chassis across the KIOXIA SCM flash located in the center of this particular DNode.

Each of the four trays holds two 2.5″ U.2 SSDs, which on this system are KIOXIA FL6 800GB SSDs. VAST uses these as a write buffer to absorb incoming data before moving it into the higher-density QLC flash surrounding them. Effectively every bit of space in this 1U server is leveraged for storage capacity in one way or another.

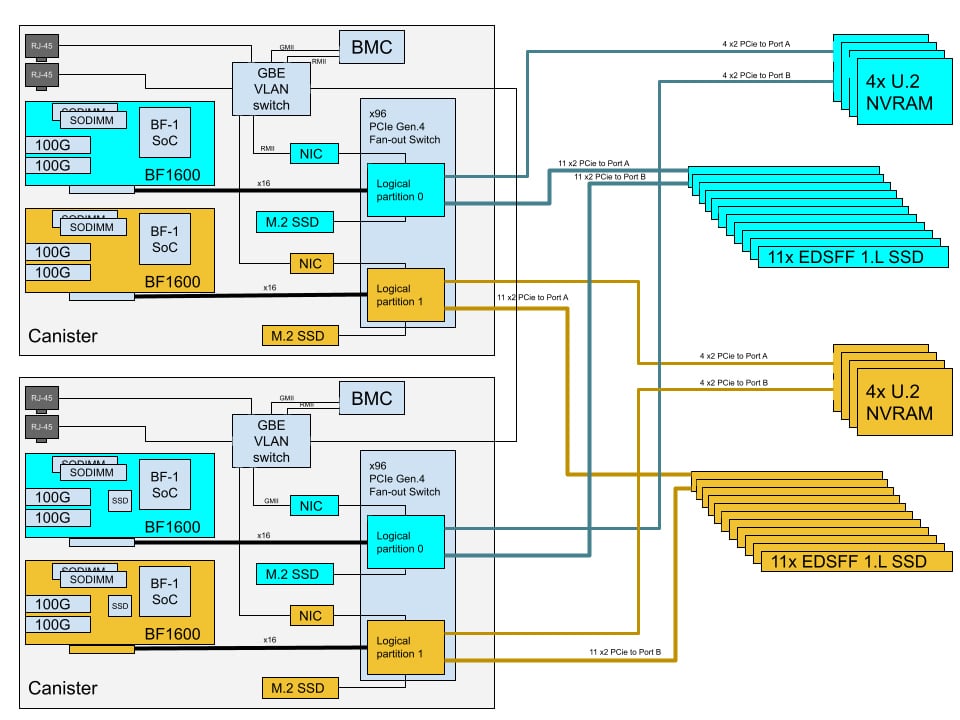

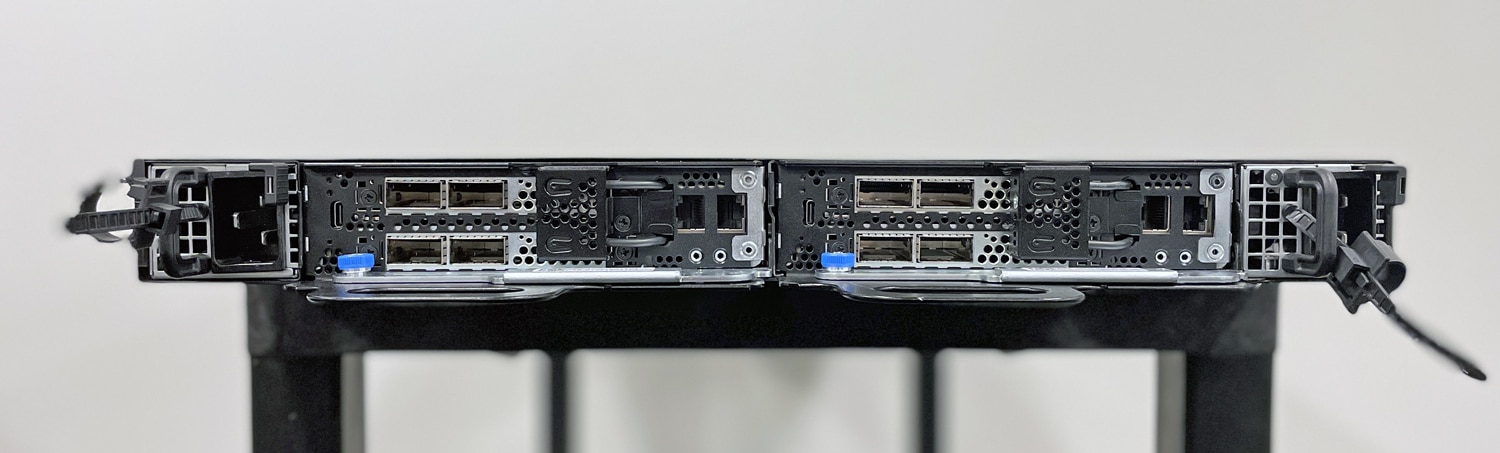

The rear view of the VAST Ceres chassis shows it has been designed to be fully redundant with dual power supplies and dual controllers. Each controller houses two NVIDIA BlueField BF1600 DPUs offering dual 100GbE ports each. In total, across both controllers, users have 800Gb/s of connectivity. Each controller has two 1GbE ports used for management and a micro USB port for direct BMC access.
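The 800Gb/s figure is simple multiplication across the two controllers. The sketch below also converts it to bytes; note that this is raw line rate before Ethernet and NVMe-oF protocol overhead, so it is an upper bound rather than a throughput claim, and the quoted 60GB/s-plus per enclosure sits below it.

```python
CONTROLLERS = 2
DPUS_PER_CONTROLLER = 2
PORTS_PER_DPU = 2
PORT_GBPS = 100  # each port is 100GbE


def aggregate_gbps() -> int:
    """Total front-end Ethernet bandwidth of one enclosure, in Gb/s."""
    return CONTROLLERS * DPUS_PER_CONTROLLER * PORTS_PER_DPU * PORT_GBPS


total = aggregate_gbps()
print(total, "Gb/s =", total / 8, "GB/s line rate")  # 800 Gb/s = 100.0 GB/s line rate
```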

The internal design of each controller sled doesn’t leave any space unused either. Each NVIDIA BF1600 DPU is connected via an x16 PCIe Gen4 slot, with additional power routed through the small cage just outside the cards.

While the VAST Ceres internal design looks a bit like a traditional server sled, there is no x86 or similar server design underneath. Each controller is effectively one large PCIe switch, connecting the DPUs to the internal and front-accessible storage. While the NVIDIA BF1600 DPUs do offer 16GB of eMMC storage for BIOS and OS, VAST designed in additional internal DPU storage via two M.2 SSDs per sled.

Looking at the block diagram for the VAST Ceres really helps paint the best picture of how this system is designed. There are two sets of SSDs and NVRAM/SCM drives in the front, which are then split between the two DPUs inside each controller sled. Each sled is a large PCIe switch, directing that NVMe PCIe storage directly to the two NVIDIA DPUs installed inside of it. There are a few accessory components also touching that fabric, such as the BMC, management NICs, and M.2 SSDs.

It’s the Algorithms

As described above, Ceres features new ruler-based, high-density SSDs to deliver ultra-dense flash capacity configurations. Over time, ruler-based flash drives, with their larger surface area, are expected to pack more flash capacity than traditional NVMe drives. VAST has partnered with Solidigm to certify its 15TB and 30TB long rulers, delivering up to 675TB of raw flash in 1U of rack space.

Solidigm launched in January following SK hynix’s acquisition of Intel’s NAND and SSD business. Solidigm operates as a standalone U.S. subsidiary of SK hynix Inc. Based in San Jose, the new subsidiary manages product development, manufacturing, and sales of the acquired Intel assets. The Intel/Solidigm “ruler” form factor was introduced in 2017 and is formally known as E1.L and E1.S. Solidigm offers a broad portfolio of products in these form factors, with options optimized for high-density storage (E1.L), scalable performance (E1.S), and mainstream 2U servers (E3).

With VAST Data’s similarity-based data reduction algorithms, Ceres can manage nearly 2PB of effective capacity per enclosure at an average 3:1 data reduction ratio. Additionally, VAST’s write-shaping techniques extend QLC flash endurance, while advanced erasure coding dramatically accelerates the time to rebuild ultra-high-capacity storage devices.
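The effective-capacity figure is the raw capacity multiplied by the reduction ratio. The sketch below assumes the 3:1 average holds exactly; real ratios depend heavily on the data being stored.

```python
def effective_capacity_pb(raw_tb: float, reduction_ratio: float) -> float:
    """Effective (logical) capacity in PB for a given data reduction ratio."""
    return raw_tb * reduction_ratio / 1000


for raw_tb in (337.92, 675.84):
    print(f"{raw_tb} TB raw x 3:1 = {effective_capacity_pb(raw_tb, 3.0):.2f} PB effective")
# 337.92 TB raw x 3:1 = 1.01 PB effective
# 675.84 TB raw x 3:1 = 2.03 PB effective
```

A fully populated enclosure therefore works out to about 2PB of effective capacity at the stated average ratio.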

Ceres has been engineered to solve a number of problems customers face with high-density storage systems. The system is designed to be fully front and rear serviceable, eliminating the need for cable management and for sliding systems in and out of racks.

The Ceres platform reduces upfront hardware costs with a minimum capacity entry point of 338TB while supporting seamless cluster scaling to hundreds of petabytes. Rack-scale resilience is improved with less hardware to enable full-enclosure failover in Universal Storage clusters. Customers have the flexibility to mix and match Ceres with previous generation VAST-supported hardware to enable the infinite cluster lifecycle.

Expanding on the benefits to NVIDIA customers, Charlie Boyle, vice president and general manager of DGX systems at NVIDIA, said:

“Enterprise levels of simplicity and resilience are critical success factors for NVIDIA as AI infrastructure is adopted broadly around the world. We’ve partnered with VAST because the performance, cost-efficiency, and simplicity of their architecture meets the demands of DGX SuperPOD solutions and our customers who depend on it. VAST Universal Storage and the Ceres platform additionally enables NVIDIA customers to realize the benefits of the NVIDIA DPU from end-to-end across the AI data center with superior performance, security, and efficiency powered by BlueField innovation.”

VAST and NVIDIA SuperPOD and More

VAST and NVIDIA are also collaborating on new storage services to enable zero-trust security and offload functionality with client-side DPUs, such as those introduced in recently announced NVIDIA DGX SuperPOD configurations. As part of the collaboration effort with NVIDIA, VAST is certifying Ceres for NVIDIA DGX SuperPOD. The SuperPOD product is designed for large-scale AI workloads, bringing together high-performance storage and networking, providing enterprise customers with a turnkey AI data center solution.

Designed to address the industry’s transformation to AI, the SuperPOD supercomputing infrastructure deploys as a fully integrated system. Enabled by VAST’s DASE, Ceres is the data platform foundation for SuperPOD. The Ceres platform design will initially be manufactured by VAST design partners such as AIC and Mercury Computer, and it will serve as the data capacity building block of VAST’s Universal Storage clusters.

With Ceres, NVIDIA customers can now enjoy the simplicity of a NAS solution with limitless levels of scale and performance via a system architecture that radically improves storage resiliency, proven by VAST’s 99.9999% availability track record across exabytes of production data. With all-flash performance and archive storage economics, VAST will make it easy for NVIDIA DGX SuperPOD customers to scale their AI training infrastructure to support exabytes of data without the toil of performance and capacity trade-offs imposed by legacy-tiered storage architectures.

VAST Data Universal Storage certification for NVIDIA DGX SuperPOD is slated for availability by mid-2022.

Conclusion

Organizations with some of the world’s largest computing environments have already selected Ceres. VAST has received software orders to support over 170PB of data capacity to be deployed on Ceres platforms.

While VAST is first and foremost a software company, the hardware provides an interesting view of what’s in store for the enterprise storage market. While some vendors are still moving down the path of platforms built around x86 hardware with a traditional server approach, VAST is taking a different path. The traditional server model has performed well through the years, although as storage and networking components evolve, so must storage server designs.

The VAST Data Ceres DNodes combine up to 675TB of QLC flash (before data reduction) and 6.4TB of SCM with four NVIDIA BlueField DPUs, giving them upwards of 800Gb/s of connectivity in a 1U box. This is possible by cutting out the middleman, in this case an x86 server, and replacing it with a PCIe switching fabric that directly links the 22 E1.L and 8 U.2 SSDs to the four DPUs. With the DPUs performing the heavy lifting and VAST software on top, very little else is required.

While we absolutely love the hardware innovation here with VAST Data Ceres, the software makes all the difference. Write shaping to protect SSD endurance, data reduction to stretch capacity many times over, and a GUI that makes standard functions simple are just the top hits. With VAST, the net result is an amazingly capable cluster that carries with it beneficial cost economics thanks to all of the innovation on the data node platform. Any organization looking not just to wrangle sprawling data, but to make business decisions based on the insights analytics provide, would do very well to schedule a VAST Data demo right away.

This report is sponsored by VAST Data. All views and opinions expressed in this report are based on our unbiased view of the product(s) under consideration.
