Violin Systems isn’t exactly a new company; we have been covering them for six years now. The company began as a pioneer in the all-flash arena and ran into a few woes after going public. However, it has risen from the ashes with new investors backing the firm, new deployment options, and the same cadre of high-performing gear. We’ve been working with Violin in the lab for some time now; today we are looking at the 7650 array, which is the “extreme performance” all-flash model in the Violin Flash Storage Platform (FSP) portfolio.

The Violin FSP 7650 is an all-flash SAN that is all about higher performance and ultra-low latency. The SAN promises to deliver up to 2 million IOPS while keeping latency consistently low. This complete SAN solution starts as low as 8.8TB and can scale up to 140TB in raw capacity. The company has also invested in new deployment options with a pay-as-you-grow plan it calls Scale Smart. Basically, the array is delivered with all of its flash installed and users only pay for what they need. When their needs increase, the additional flash is already in the rack waiting, meaning no disruptions.

Aside from being fast and more affordable, the 7650 comes with a slew of enterprise data services through its Concerto OS 7 software. These services include data-at-rest encryption that uses AES-XTS-256 and meets the FIPS 140-2 standard for data security. Users can scale through the Scale Smart model described above or use online capacity expansion and online LUN expansion. The SAN also sports global asynchronous replication that can be combined with the FSP 770 Stretch Cluster to maximize business continuity.

FSP 7650 Specifications

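| Model number | FSP 7650-26 | FSP 7650-70 | FSP 7650-140 |
| --- | --- | --- | --- |
| Form Factor | 3U | 3U | 3U |
| Capacity | | | |
| Raw max | 26TB | 70TB | 140TB |
| Raw (Pay-as-you-grow) | 8TB or 17TB | 35, 43, 52, or 61TB | 96, 105, 114, 123, or 131TB |
| Usable max | 14.7TB | 44.3TB | 88.7TB |
| Connectivity | | | |
| Hosts | 8x16Gb Fibre Channel or 8x10GbE iSCSI | 8x16Gb Fibre Channel or 8x10GbE iSCSI | 8x16Gb Fibre Channel or 8x10GbE iSCSI |
| Replication | 2x40GbE | 2x40GbE | 2x40GbE |
| Management (Ethernet) | 2x 10/100/1000Mb/sec auto-sensing Ethernet ports (RJ-45) | 2x 10/100/1000Mb/sec auto-sensing Ethernet ports (RJ-45) | 2x 10/100/1000Mb/sec auto-sensing Ethernet ports (RJ-45) |
| Management (serial) | 1x Serial console port (RS-232) | 1x Serial console port (RS-232) | 1x Serial console port (RS-232) |
| Performance (max) | | | |
| 4K 100% Read | 1M IOPS at 500μs latency sustained | 2M IOPS at 1ms latency sustained | 2M IOPS at 1ms latency sustained |
| | 700K IOPS at 200μs latency sustained | 1.7M IOPS at 500μs latency sustained | 1.7M IOPS at 500μs latency sustained |
| | | 1M IOPS at 200μs latency sustained | 1M IOPS at 200μs latency sustained |
| Minimum latency | 150μs sustained | 150μs sustained | 150μs sustained |
| Bandwidth | 8GB/s | 8GB/s | 8GB/s |
| Physical | | | |
| Depth | 28 in. / 711 mm | 28 in. / 711 mm | 28 in. / 711 mm |
| Width | 17.5 in. / 445 mm | 17.5 in. / 445 mm | 17.5 in. / 445 mm |
| Weight | 80 lb. / 36.3 kg | 93 lb. / 42.2 kg | 93 lb. / 42.2 kg |
| Power | 1100W | 1800W | 1800W |
| Cooling | 3780 BTU/hr. | 6140 BTU/hr. | 6140 BTU/hr. |
| Environmental | | | |
| Operating Temperature | 10 to 35°C (50 to 95°F) | 10 to 35°C (50 to 95°F) | 10 to 35°C (50 to 95°F) |
| Non-Operating Temperature | -40 to 70°C (-40 to 158°F) | -40 to 70°C (-40 to 158°F) | -40 to 70°C (-40 to 158°F) |
| Operating Humidity | 8 to 90% (non-condensing) | 8 to 90% (non-condensing) | 8 to 90% (non-condensing) |
| Non-Operating Humidity | 5 to 95% (non-condensing) | 5 to 95% (non-condensing) | 5 to 95% (non-condensing) |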
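Two of the relationships in the specifications are easy to sanity-check with a little arithmetic: how much of the raw capacity ends up usable, and how the power draw relates to the cooling figures. The following is a minimal sketch using the table's numbers; the function names are our own, and the watts-to-BTU factor is the standard conversion rather than anything from Violin's spec sheet.

```python
# Capacity figures copied from the specification table (raw max, usable max).
MODELS = {
    "FSP 7650-26": {"raw_tb": 26.0, "usable_tb": 14.7},
    "FSP 7650-70": {"raw_tb": 70.0, "usable_tb": 44.3},
    "FSP 7650-140": {"raw_tb": 140.0, "usable_tb": 88.7},
}

# Standard conversion factor (1 W is about 3.412 BTU/hr); not from the spec sheet.
WATTS_TO_BTU_PER_HR = 3.412


def usable_fraction(raw_tb: float, usable_tb: float) -> float:
    """Share of raw capacity that is presented as usable."""
    return usable_tb / raw_tb


def heat_load_btu_per_hr(watts: float) -> float:
    """Heat output, assuming all electrical power ends up as heat."""
    return watts * WATTS_TO_BTU_PER_HR


if __name__ == "__main__":
    for name, spec in MODELS.items():
        print(f"{name}: {usable_fraction(**spec):.1%} of raw capacity is usable")
    for watts in (1100, 1800):
        print(f"{watts}W is roughly {heat_load_btu_per_hr(watts):.0f} BTU/hr")
```

By this math the usable share lands between roughly 57% and 63% of raw capacity, and the converted heat loads come out close to the listed cooling figures.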

Design and Build

Violin doesn’t vary much on design, as each platform looks strikingly like the last. The same goes for the FSP 7650, which is built like a tank. Across the front is the handle with branding, along with an easy way to slide the SAN out. Behind the integrated grab handle is ventilation for the array, powered by impressively large fans. On the bottom right side are LED status lights and USB ports.

Like other Violin devices, the SAN leverages Violin Intelligent Memory Modules (VIMMs) for storage versus common form factor SSDs. These are located behind the fans. As we previously stated, VIMMs are Violin’s alternative to SSD storage, and manage garbage collection, wear leveling, and error/fault management for their underlying storage media. VIMMs are composed of a logic-based flash controller, management processor, DRAM for metadata, and NAND Flash for storage. Each is hot-swappable for ease of maintenance, and in a card form factor.

The rear of the device has more ventilation in the top-left side with two removable PSUs below. To the right are two USB ports, two 40GbE ports, two serial console ports, and two Ethernet ports. On the right side are four slots for I/O cards and ports.

Management

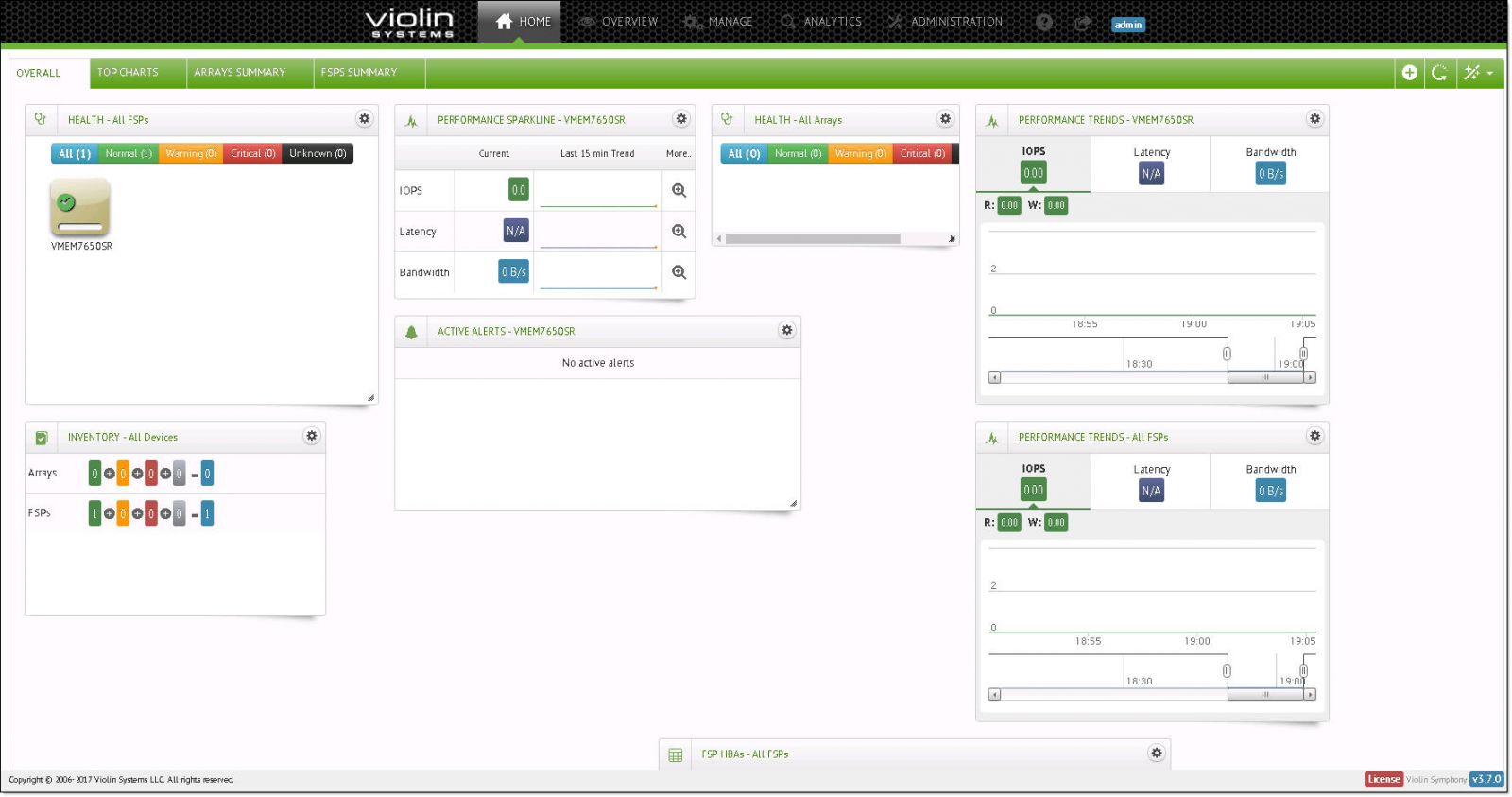

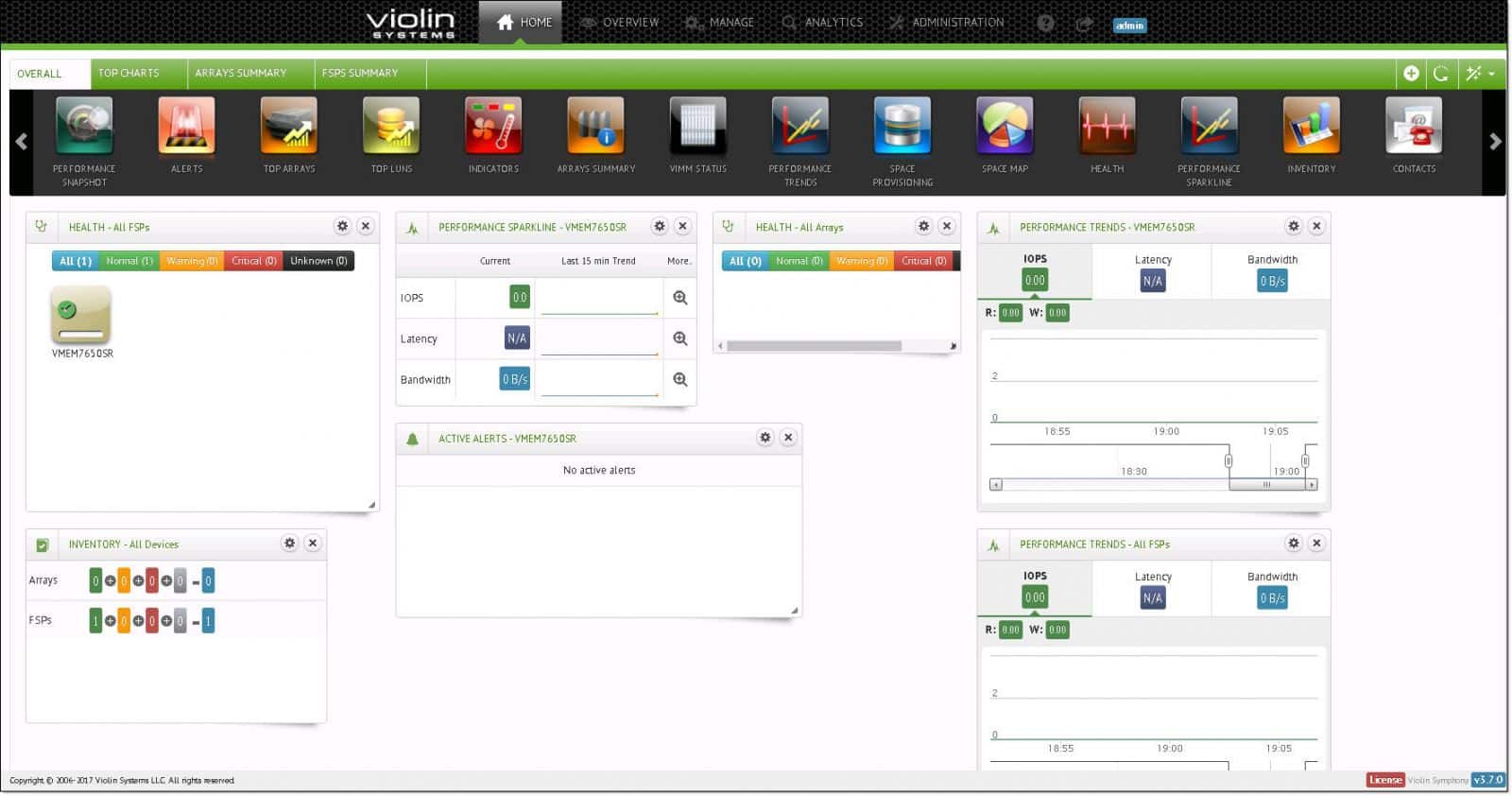

Violin uses Concerto OS 7 for its operating software, and Symphony is the SAN’s management software. Symphony really sets itself apart from other GUIs, and not just because it is flexible and easy to use. It is actually built around flash storage by people who understand that flash needs to be looked at differently. The GUI also stands out as it allows users to customize several dashboards through the use of “gadgets,” putting the most relevant information out for easy viewing. On the ease-of-use side, users can export different list views directly to CSV, PDF, and even email.

There are several gadgets to choose from and they can be mixed and matched to cover most bases.

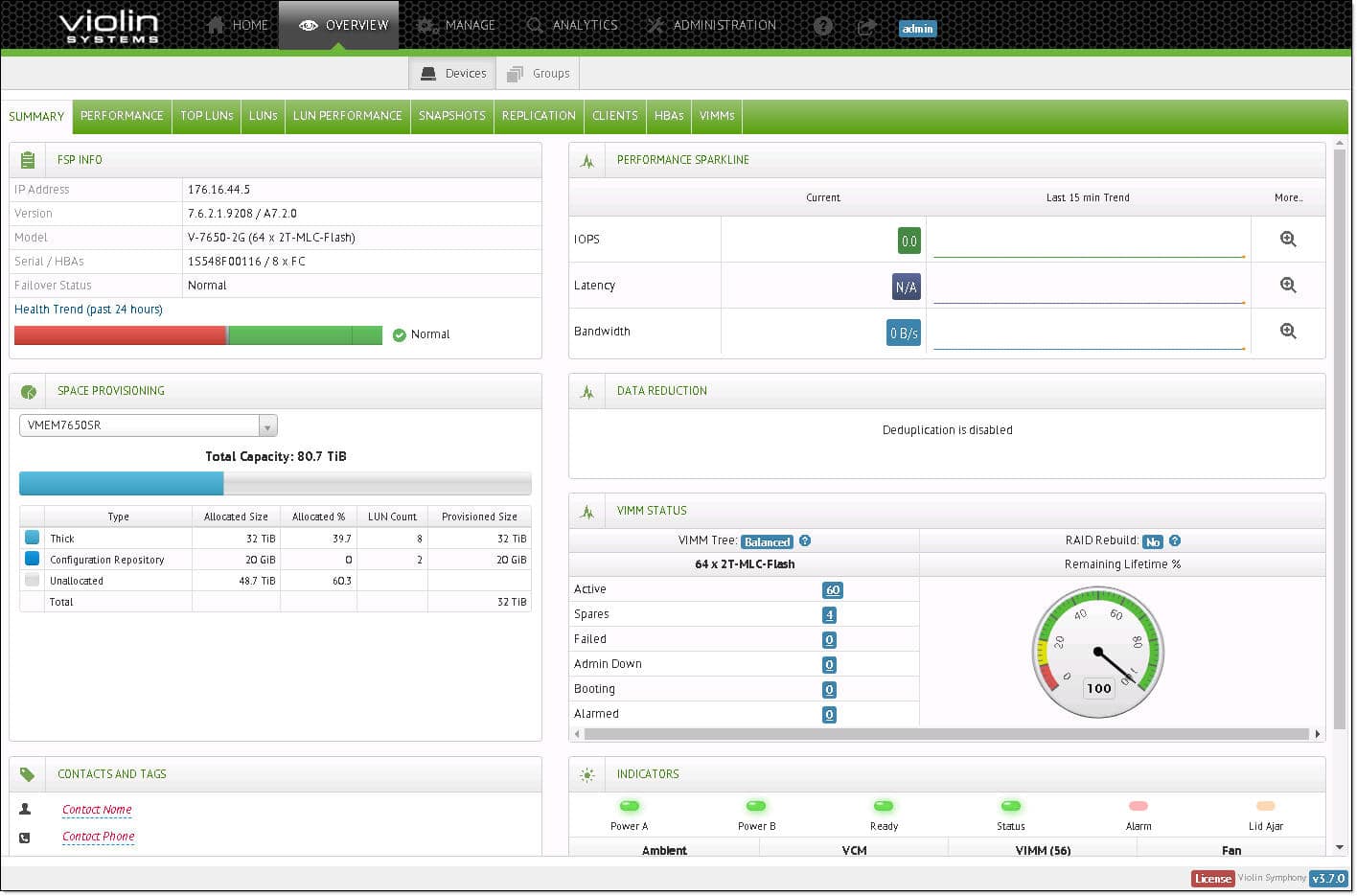

First, we will be looking at the overview tab. This tab has several sub-tabs that let users get a good look into most of the system. The first sub-tab is a summary and as the name implies, it gives a quick overall summary of how the system is running.

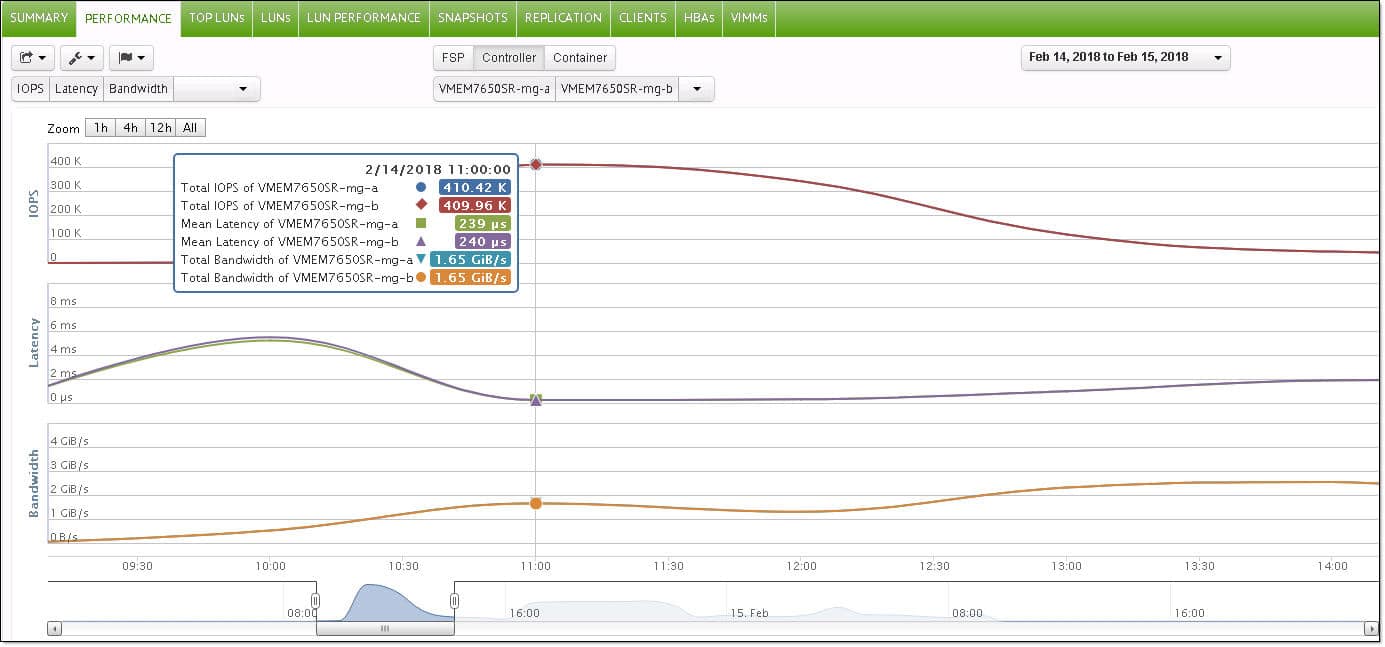

The next sub-tab over is performance. Here, users can choose what metric they want to look at (IOPS, latency, or bandwidth) and where the performance is coming from: FSP (assuming there is more than one), controller, or container. A specific time can be chosen as well to see how performance was on a particular day at a particular time.

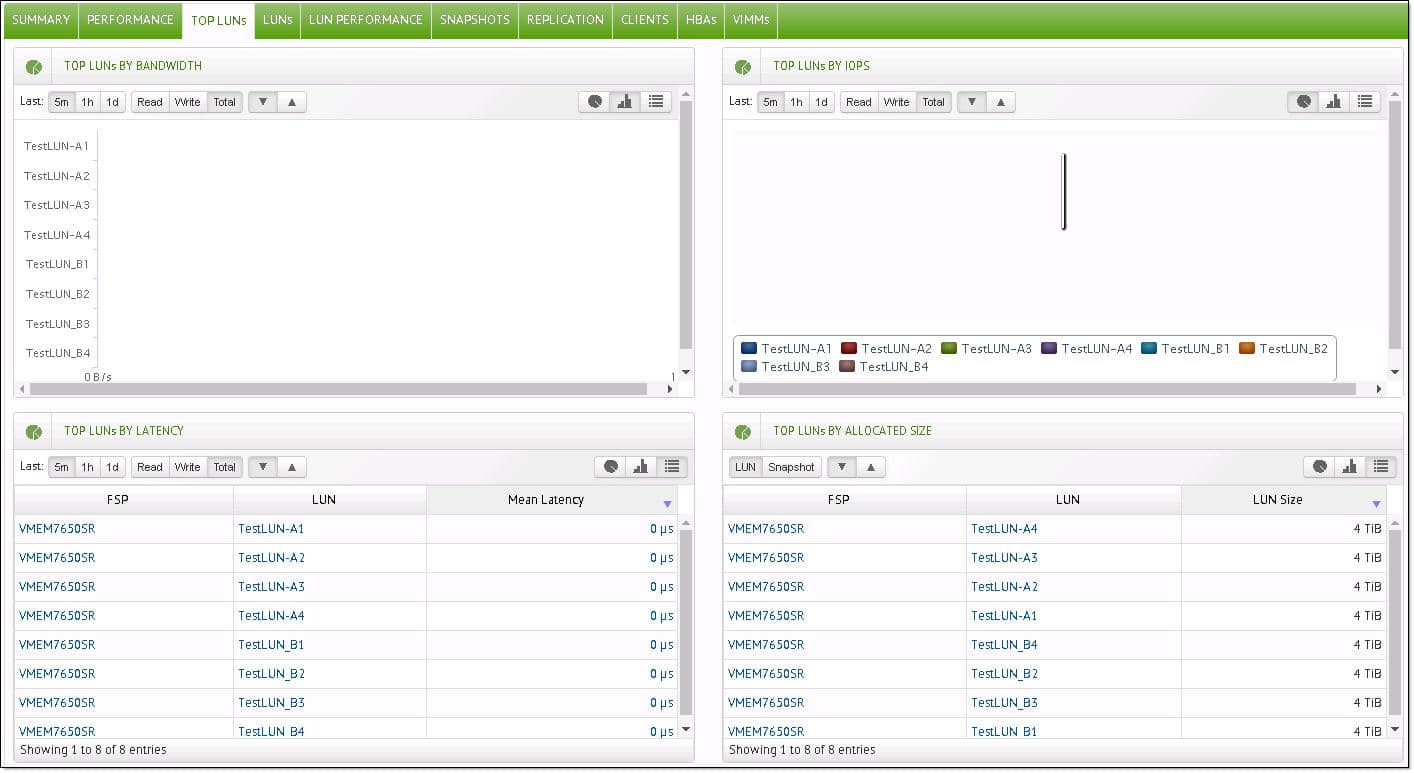

Next we look at top LUNs. These are broken into categories such as bandwidth, IOPS, latency, and size.

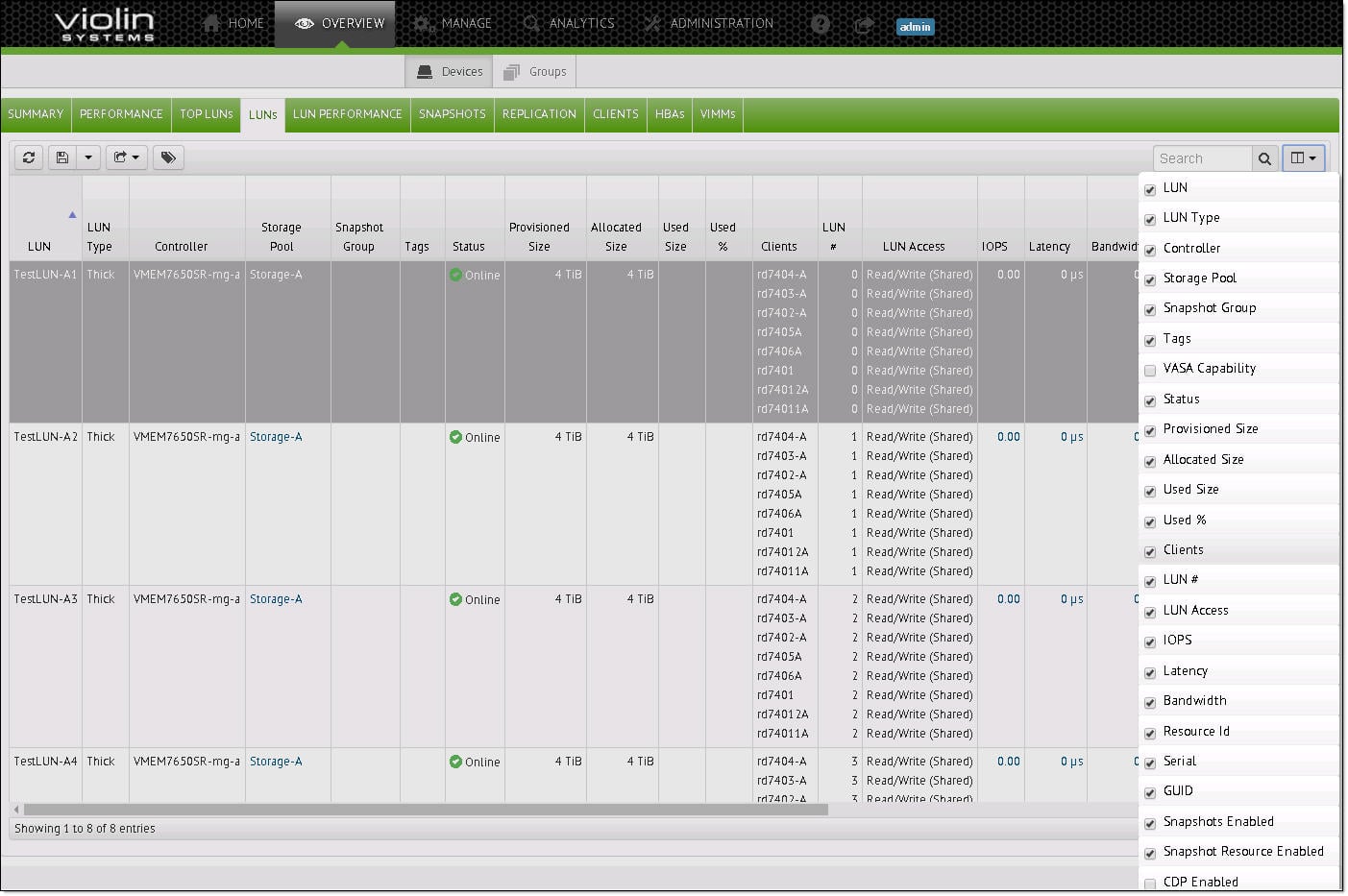

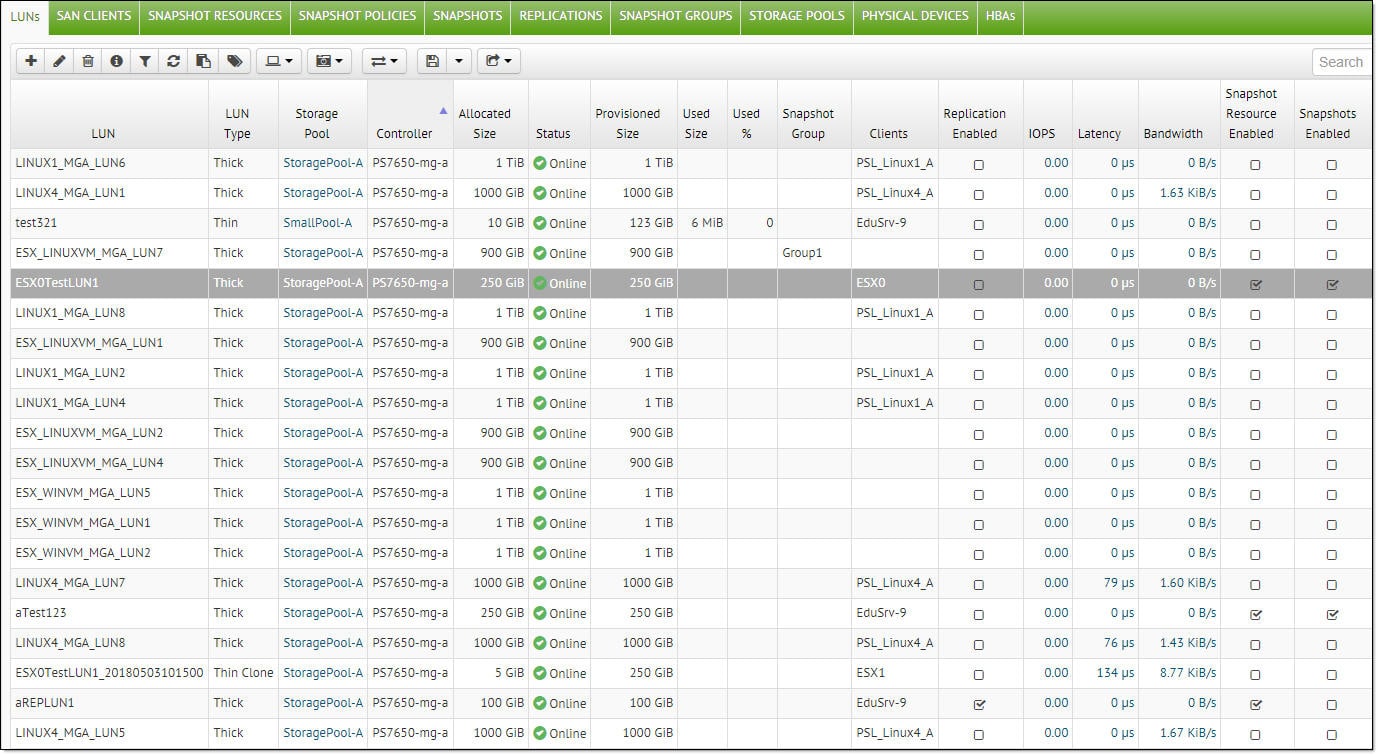

Users can look at all the LUN information on the next tab and like most aspects of Symphony, they can choose what information they wish to see through a drop-down menu on the right-hand side.

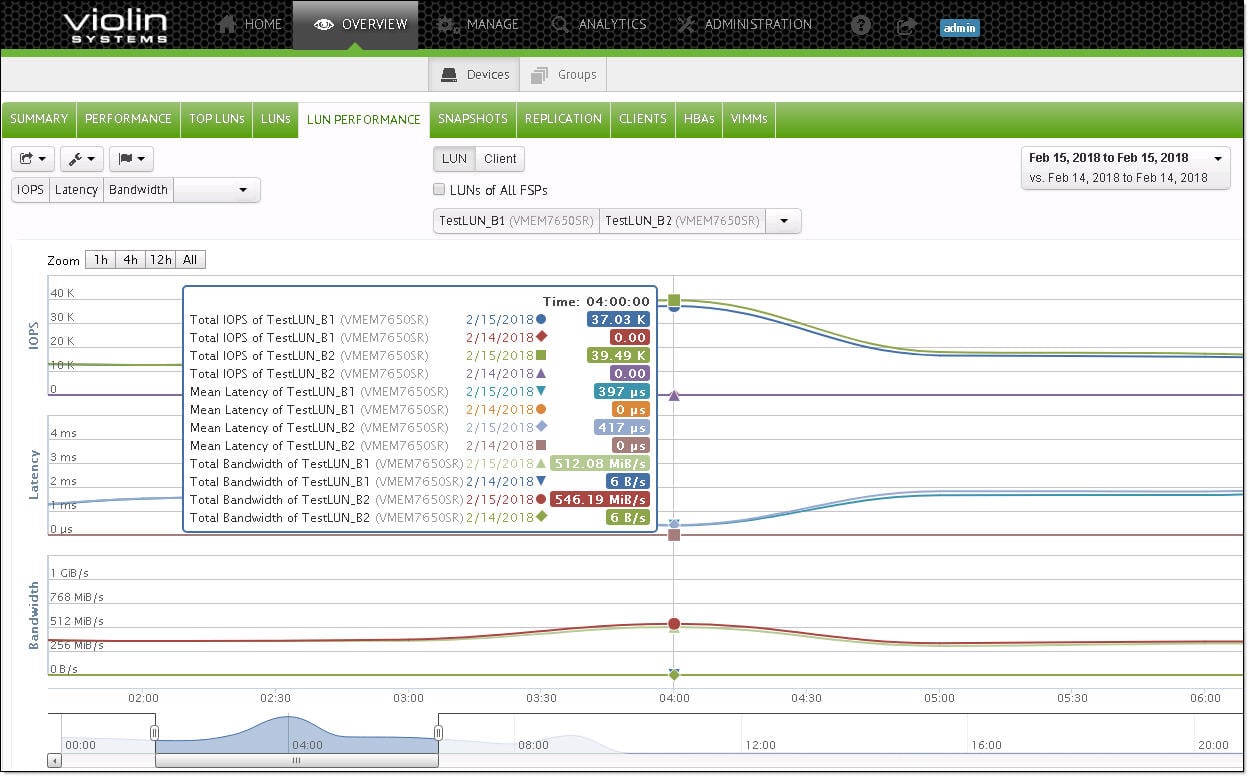

LUN Performance is similar to the performance tab, and users can choose what performance they want displayed and where it is coming from.

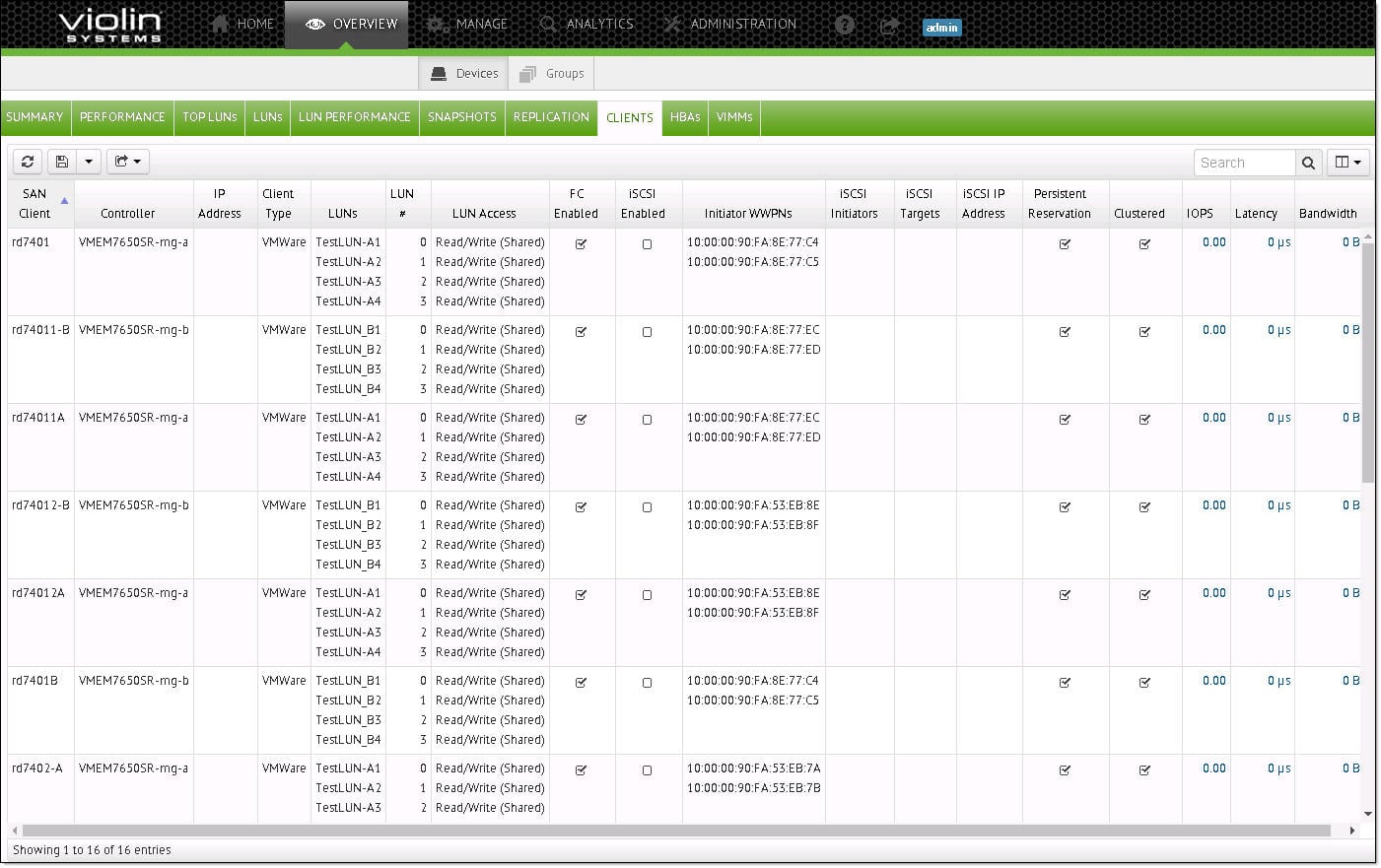

The Clients sub-tab lists off information about the clients such as the controller, IP address, type, LUNs, and whether it is FC or iSCSI enabled. Users also have the ability to customize what is seen when opening the tab.

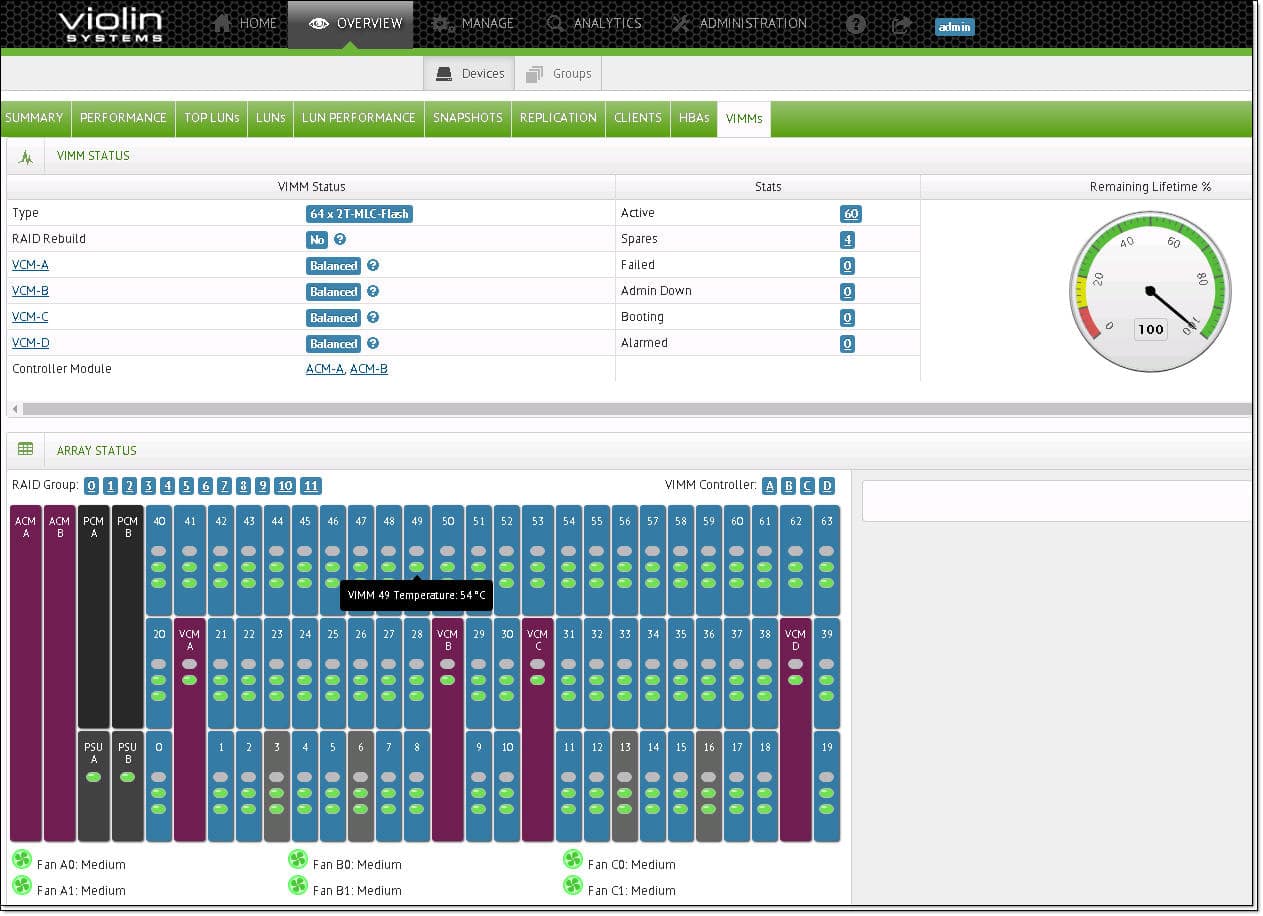

For storage, users need to click on the VIMM tab. Here they can see status such as the flash type, whether it is being RAID rebuilt, if the VIMMs are balanced, as well as states such as remaining lifetime. They can also get a real-time read out of the VIMMs at the bottom of the screen and whether or not there are any issues.

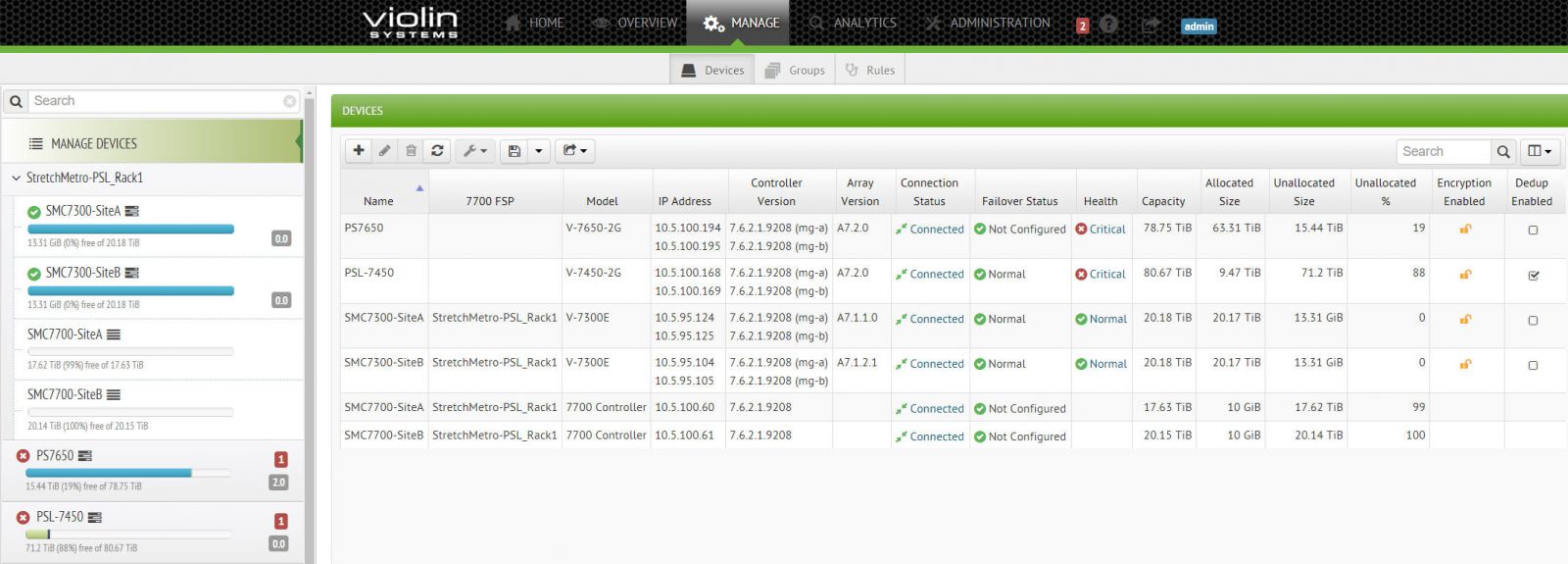

The next main tab is the manage tab. Through this tab, users can manage devices (broken down further into arrays, SANs, or LUNs), groups, or rules. Again, users are presented with a bunch of info that can be customized and clicking on one of the lines allows users to drill down a bit more.

Drilling down a bit more into LUNs, users are given several new options that include snapshots and replication. Here users can set up snapshots, group snapshots, and replications of LUNs.

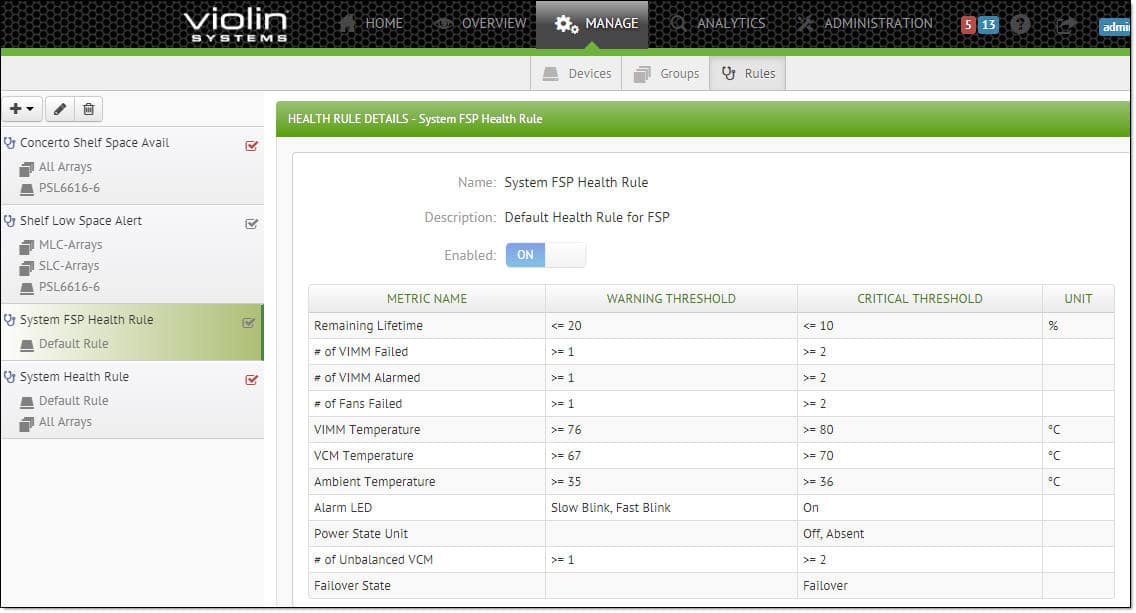

Also under the Manage tab is the Rules sub-tab. Here users can set up rules for shelf space and low-space alerts, as well as System FSP health rules, setting a name and threshold for each.

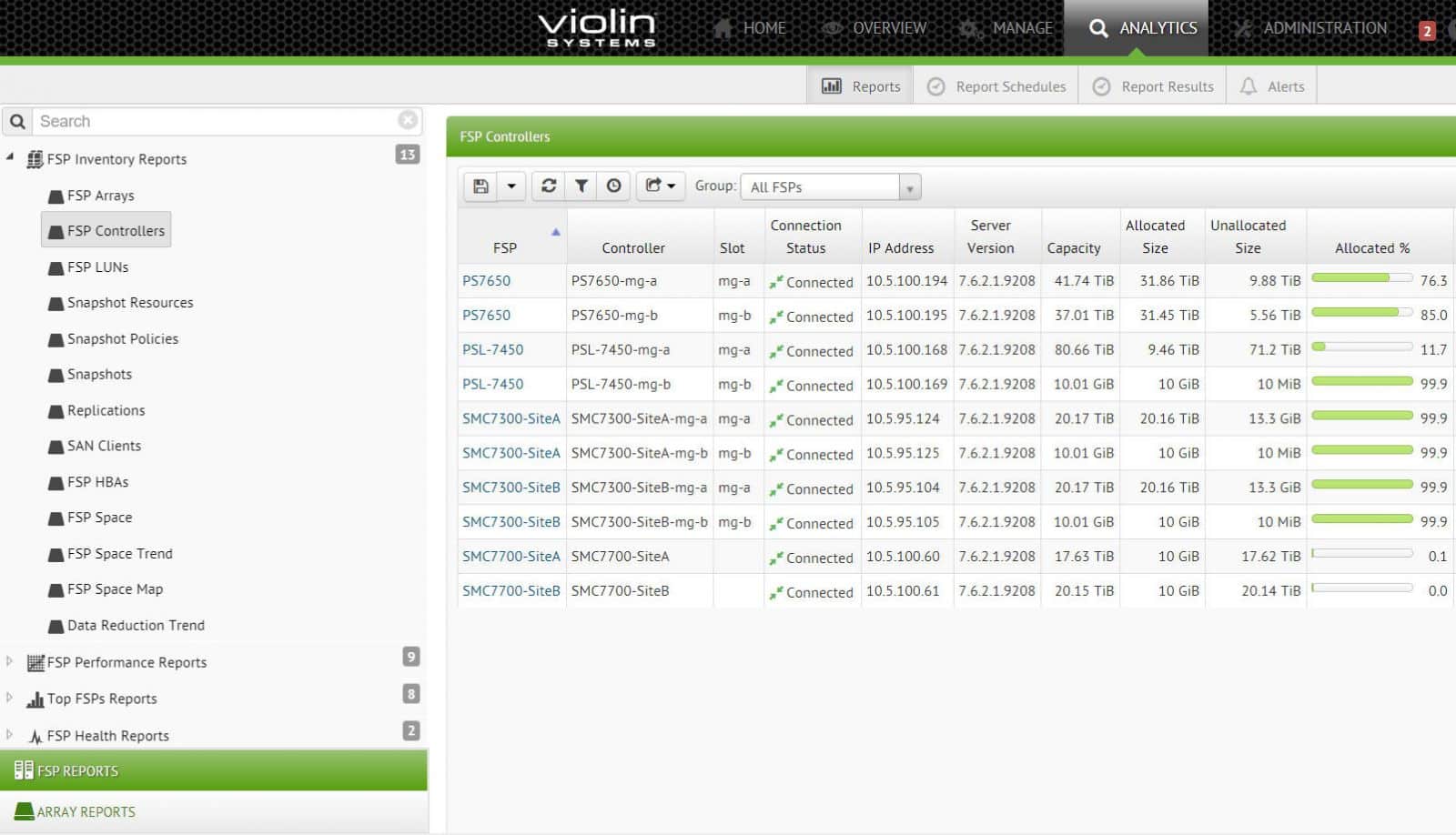

Under the Analytics main tab there are four sub-tabs: Reports, Reports Scheduling, Report Results, and Alerts. Users can select the device they want a report on and view it right away, or schedule how they would like the report set up and then get the results. They can also set up and check alerts based on the metrics they choose.

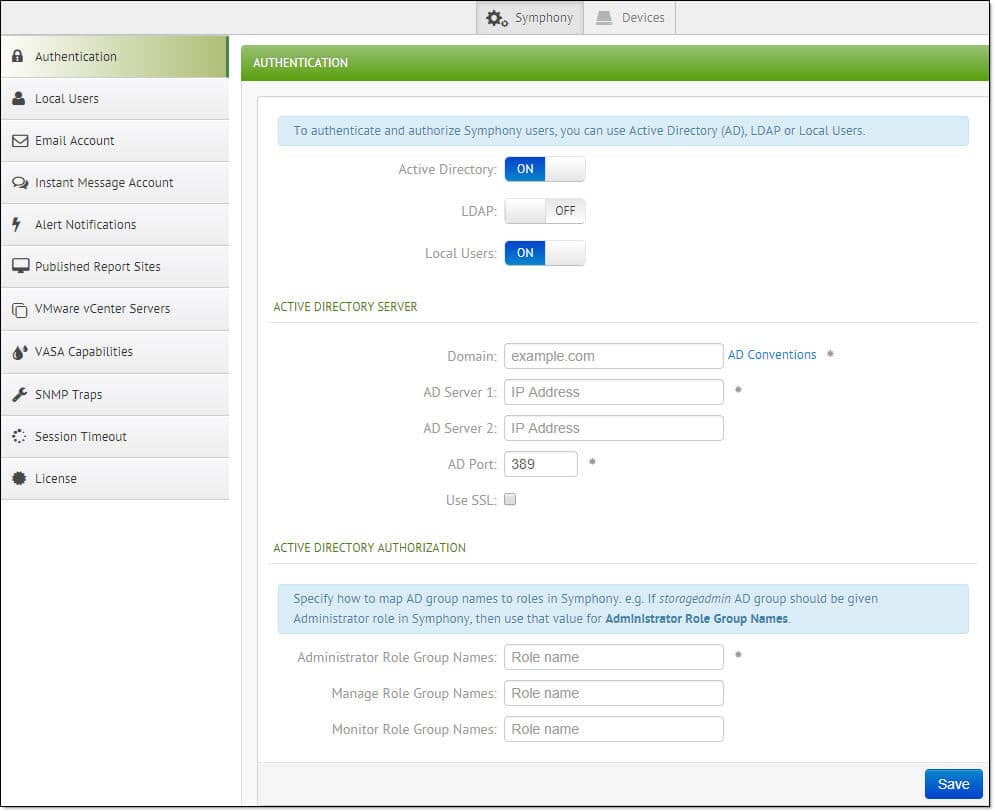

Finally, the Admin tab has the usual suspects such as setting up users and alert notifications, as well as setting up failover options and vCenter plugins.

While the GUI is an overall improvement on most AFA GUIs, there are a few minor issues. While “GUID” and “Serial #” are selectable columns in the LUN list, a column for WWN was notably absent from the list of choices. Similarly, if an “Add Replication” workflow is interrupted mid-setup, it leaves an orphan Snapshot Resource definition behind, rather than properly cleaning up after itself.

Performance

Application Workload Analysis

The application workload benchmarks for the Violin FSP 7650 consist of MySQL OLTP performance via Sysbench and Microsoft SQL Server OLTP performance with a simulated TPC-C workload. In each scenario, we had a 50/50 split of the array VIMMs being controlled by each controller, in its default RAID type across 12 sub-RAID groups. From this layout, we spread our workload across the array evenly, to balance each controller.

SQL Server Performance

Each SQL Server VM is configured with two vDisks: a 100GB volume for boot and a 500GB volume for the database and log files. From a system-resource perspective, we configured each VM with 16 vCPUs, 64GB of DRAM and leveraged the LSI Logic SAS SCSI controller. While our Sysbench workloads tested previously saturated the platform in both storage I/O and capacity, the SQL test is looking for latency performance.

This test uses SQL Server 2014 running on Windows Server 2012 R2 guest VMs, and is stressed by Quest’s Benchmark Factory for Databases. While our traditional usage of this benchmark has been to test large 3,000-scale databases on local or shared storage, in this iteration we focus on spreading out four 1,500-scale databases evenly across the Violin FSP 7650 (two VMs per controller).

SQL Server Testing Configuration (per VM)

- Windows Server 2012 R2

- Storage Footprint: 600GB allocated, 500GB used

- SQL Server 2014

- Database Size: 1,500 scale

- Virtual Client Load: 15,000

- RAM Buffer: 48GB

- Test Length: 3 hours

  - 2.5 hours preconditioning

  - 30 minutes sample period

SQL Server OLTP Benchmark Factory LoadGen Equipment

- Dell EMC PowerEdge R740xd Virtualized SQL 4-node Cluster

- 8 Intel Xeon Gold 6130 CPU for 269GHz in cluster (Two per node, 2.1GHz, 16-cores, 22MB Cache)

- 1TB RAM (256GB per node, 16GB x 16 DDR4, 128GB per CPU)

- 4 x Emulex 16Gb dual-port FC HBA

- 4 x Mellanox ConnectX-4 rNDC 25GbE dual-port NIC

- VMware ESXi vSphere 6.5 / Enterprise Plus 8-CPU
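The cluster totals quoted for the load generators follow directly from the per-node figures. Here is a minimal sketch of that arithmetic; the function and parameter names are our own, and the defaults simply mirror the per-node numbers listed above (two CPUs per node, 16 cores at 2.1GHz, 256GB of RAM).

```python
def cluster_totals(
    nodes: int,
    cpus_per_node: int = 2,
    cores_per_cpu: int = 16,
    ghz_per_core: float = 2.1,
    ram_gb_per_node: int = 256,
) -> dict:
    """Aggregate CPU count, clock capacity, and RAM for a cluster of identical nodes."""
    cpus = nodes * cpus_per_node
    return {
        "cpus": cpus,
        "aggregate_ghz": cpus * cores_per_cpu * ghz_per_core,
        "ram_gb": nodes * ram_gb_per_node,
    }


if __name__ == "__main__":
    totals = cluster_totals(nodes=4)
    print(f"{totals['cpus']} CPUs, {totals['aggregate_ghz']:.1f}GHz, {totals['ram_gb']}GB RAM")
```

For the 4-node SQL Server cluster this works out to 8 CPUs, 268.8GHz (rounded to 269GHz in the list), and 1,024GB of RAM. The same call with `nodes=8` reproduces the 538GHz and 2TB figures of the 8-node Sysbench cluster.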

For SQL Server we looked at individual VMs as well as aggregate scores. The Violin FSP 7650 was able to hit an aggregate score of 12,642.2 TPS with individual VMs hitting 3,160.4 TPS to 3,160.7 TPS.

With average latency, the 7650 had both individual VMs and an aggregate score of 3ms.

Sysbench Performance

Each Sysbench VM is configured with three vDisks, one for boot (~92GB), one with the pre-built database (~447GB), and the third for the database under test (270GB). From a system-resource perspective, we configured each VM with 16 vCPUs, 60GB of DRAM and leveraged the LSI Logic SAS SCSI controller. Load gen systems are Dell R740xd servers.

Dell PowerEdge R740xd Virtualized MySQL 8-node Cluster

- 16 Intel Xeon Gold 6130 CPU for 538GHz in cluster (two per node, 2.1GHz, 16-cores, 22MB Cache)

- 2TB RAM (256GB per node, 16GB x 16 DDR4, 128GB per CPU)

- 8 x Emulex 16Gb dual-port FC HBA

- 8 x Mellanox ConnectX-4 rNDC 25GbE dual-port NIC

- VMware ESXi vSphere 6.5 / Enterprise Plus 8-CPU

Sysbench Testing Configuration (per VM)

- CentOS 6.3 64-bit

- Storage Footprint: 1TB, 800GB used

- Percona XtraDB 5.5.30-rel30.1

- Database Tables: 100

- Database Size: 10,000,000

- Database Threads: 32

- RAM Buffer: 24GB

- Test Length: 3 hours

  - 2 hours preconditioning 32 threads

  - 1 hour 32 threads

In our Sysbench benchmark, we tested several sets of 8VMs, 16VMs, and 32VMs. Unlike SQL Server, here we only looked at raw performance. In transactional performance, the 7650 was able to hit 17,021.7 TPS with 8VMs, 23,202.2 TPS with 16VMs, and 25,313.7 TPS with 32VMs.

Looking at average latency, the 7650 had 15ms with 8VMs; doubling to 16VMs brought latency up to only 22ms, and doubling it again to 32VMs only saw the latency go to 41.1ms.

In our worst-case scenario latency benchmark, the 7650 hit 27.7ms with 8VMs, 40.8ms with 16VMs, and 75.5ms with 32VMs.
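To put the Sysbench scaling in perspective, it helps to look at how much extra throughput each doubling of VMs buys and what each VM gets on average. The sketch below uses only the figures reported above; the helper names are our own.

```python
# VM count -> (aggregate TPS, average latency ms, worst-case latency ms), as reported above.
SYSBENCH_RESULTS = {
    8: (17021.7, 15.0, 27.7),
    16: (23202.2, 22.0, 40.8),
    32: (25313.7, 41.1, 75.5),
}


def per_vm_tps(vms: int, aggregate_tps: float) -> float:
    """Average transactions per second delivered to each VM."""
    return aggregate_tps / vms


def scaling_gain(previous_tps: float, next_tps: float) -> float:
    """Fractional throughput gain when moving from one load level to the next."""
    return next_tps / previous_tps - 1.0


if __name__ == "__main__":
    counts = sorted(SYSBENCH_RESULTS)
    for vms in counts:
        tps, avg_ms, worst_ms = SYSBENCH_RESULTS[vms]
        print(f"{vms} VMs: {per_vm_tps(vms, tps):.1f} TPS per VM, {avg_ms}ms avg, {worst_ms}ms worst case")
    for low, high in zip(counts, counts[1:]):
        gain = scaling_gain(SYSBENCH_RESULTS[low][0], SYSBENCH_RESULTS[high][0])
        print(f"{low} -> {high} VMs: {gain:.1%} more aggregate TPS")
```

Going from 8 to 16 VMs adds roughly 36% more aggregate TPS, while 16 to 32 VMs adds about 9%, which suggests the array is already delivering most of its throughput at the lower VM counts.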

VDBench Workload Analysis

When it comes to benchmarking storage arrays, application testing is best, and synthetic testing comes in second place. While not a perfect representation of actual workloads, synthetic tests do help to baseline storage devices with a repeatability factor that makes it easy to do apples-to-apples comparisons between competing solutions. These workloads offer a range of different testing profiles ranging from “four corners” tests, common database transfer size tests, as well as trace captures from different VDI environments. All of these tests leverage the common vdBench workload generator, with a scripting engine to automate and capture results over a large compute testing cluster. This allows us to repeat the same workloads across a wide range of storage devices, including flash arrays and individual storage devices. On the array side, we use our cluster of Dell PowerEdge R740xd servers.

Profiles:

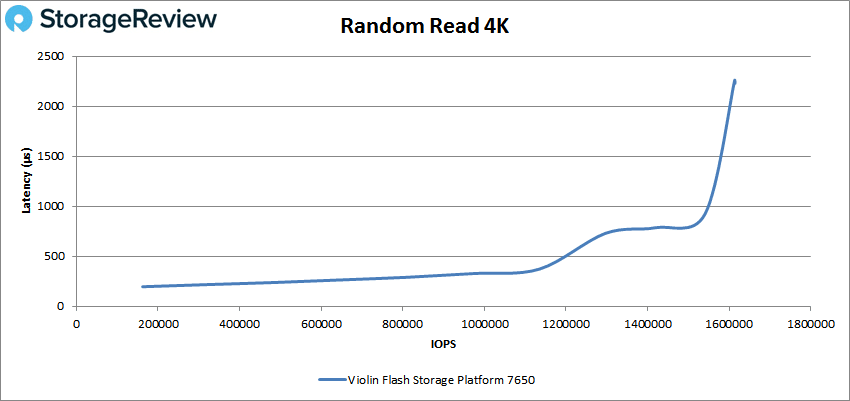

- 4K Random Read: 100% Read, 128 threads, 0-120% iorate

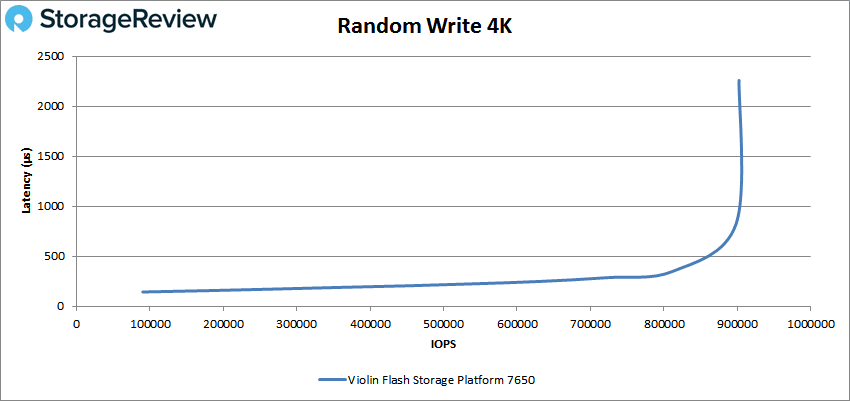

- 4K Random Write: 100% Write, 64 threads, 0-120% iorate

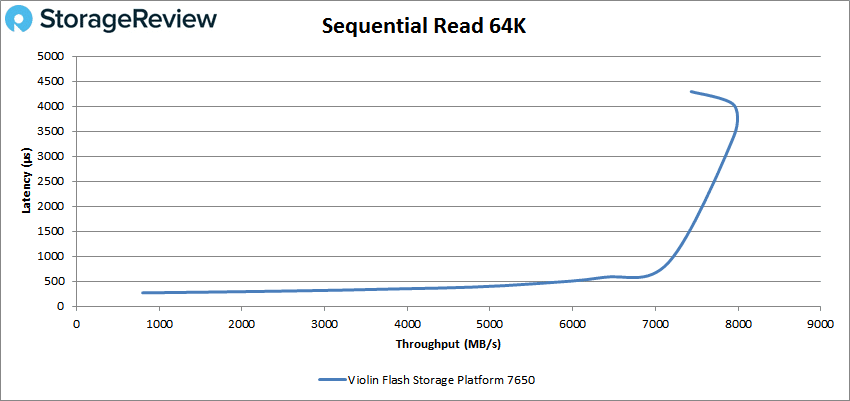

- 64K Sequential Read: 100% Read, 16 threads, 0-120% iorate

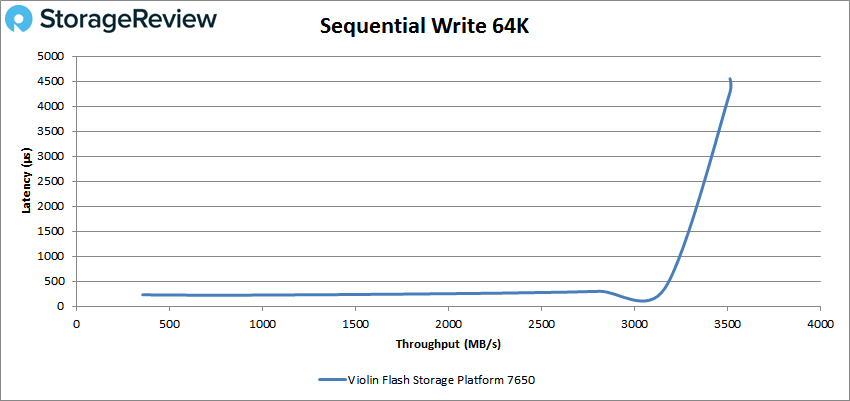

- 64K Sequential Write: 100% Write, 8 threads, 0-120% iorate

- Synthetic Database: SQL and Oracle

- VDI Full Clone and Linked Clone Traces
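The “0-120% iorate” entry in the profiles above reads as a sweep in which the target I/O rate is stepped up as a percentage of a measured maximum, past 100% to see how the array behaves when pushed. The following is only a sketch of that idea: the 10-point step, the `Profile` structure, and the placeholder maximum are our assumptions for illustration and not a description of the actual test scripts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    name: str
    block_kib: int
    read_pct: int
    threads: int


# The four "corner" profiles as listed above.
FOUR_CORNERS = [
    Profile("4K Random Read", 4, 100, 128),
    Profile("4K Random Write", 4, 0, 64),
    Profile("64K Sequential Read", 64, 100, 16),
    Profile("64K Sequential Write", 64, 0, 8),
]


def sweep_targets(max_iops: int, step_pct: int = 10, stop_pct: int = 120) -> list[int]:
    """Target I/O rates from step_pct up to stop_pct of max_iops.

    step_pct is a hypothetical value; the profile list only gives the 0-120% range.
    """
    return [max_iops * pct // 100 for pct in range(step_pct, stop_pct + 1, step_pct)]


if __name__ == "__main__":
    for profile in FOUR_CORNERS:
        print(f"{profile.name}: {profile.block_kib}K, {profile.read_pct}% read, {profile.threads} threads")
    # 1,000,000 IOPS is a placeholder for a measured maximum, not a test result.
    print(sweep_targets(1_000_000))
```

Each point in such a sweep yields one IOPS/latency pair, which is what produces the latency-versus-throughput curves discussed in the results.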

In our 4K peak read test, the Violin FSP 7650 had sub-millisecond performance until just north of 1.5 million IOPS and peaked at 1,613,302 IOPS and a latency of 2.26ms.

For 4K peak writes, the 7650 made it to nearly 900K IOPS before breaking 1ms and peaked at 902,388 IOPS with a latency of 2.26ms.

Switching over to sequential workloads, the 7650 had sub-millisecond latency until about 115K IOPS or 7.2GB/s for 64K read. The SAN peaked at about 127K IOPS or 8GB/s with 4ms latency before dropping off some in performance and increasing a bit more in latency.

For 64K write, the 7650 had sub-millisecond latency performance until around 51K IOPS or 3.2GB/s before peaking just over 56K IOPS or 3.5GB/s with a latency of 4.3ms followed by a slight drop off.
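For the sequential tests, IOPS and bandwidth are two views of the same number: throughput is the I/O rate multiplied by the transfer size. A minimal sketch of the conversion follows, assuming “64K” means 64KiB (65,536 bytes); the function names are our own.

```python
def throughput_bytes_per_s(iops: int, block_kib: int = 64) -> int:
    """Bytes moved per second at a given I/O rate and transfer size in KiB."""
    return iops * block_kib * 1024


def as_gb(num_bytes: int) -> float:
    """Decimal gigabytes (10^9 bytes)."""
    return num_bytes / 1e9


def as_gib(num_bytes: int) -> float:
    """Binary gibibytes (2^30 bytes)."""
    return num_bytes / 2**30


if __name__ == "__main__":
    # Approximate IOPS figures quoted above for the 64K read and write tests.
    for label, iops in (("64K read peak", 127_000), ("64K write peak", 56_000)):
        total = throughput_bytes_per_s(iops)
        print(f"{label}: {as_gb(total):.2f} GB/s ({as_gib(total):.2f} GiB/s)")
```

Since the IOPS figures in the text are rounded, the converted values only approximate the quoted 8GB/s and 3.5GB/s, and they shift slightly depending on whether decimal or binary units are used.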

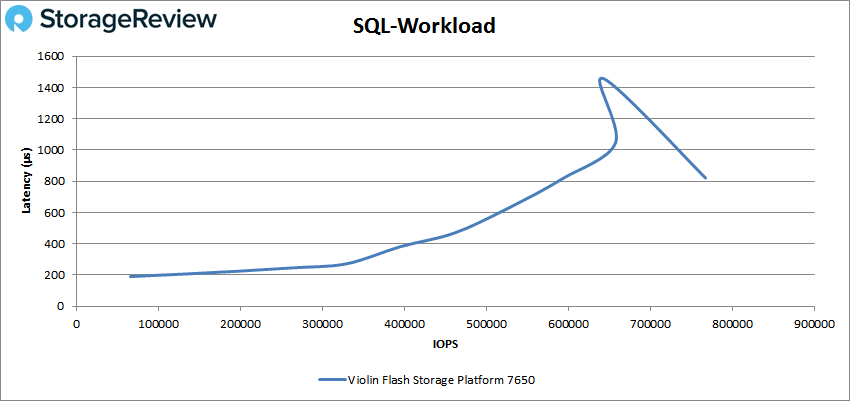

For SQL, the 7650 made it to just over 650K IOPS before going over 1ms. This was followed by a big spike in latency that dropped back off for the SAN to peak at 767,440 IOPS and a latency of 821μs.

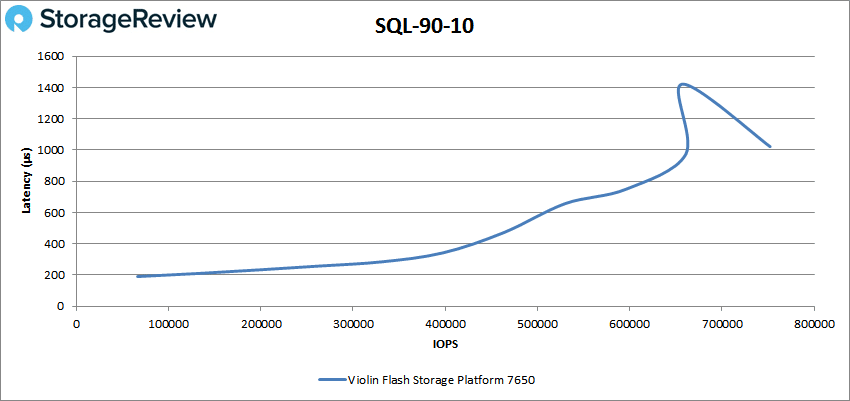

With SQL 90-10, the 7650 made it to roughly 661K IOPS before breaking 1ms. Again this was followed by a spike in latency (though not as high as the previous) before the SAN peaked at 752,175 IOPS and a latency of 1.02ms.

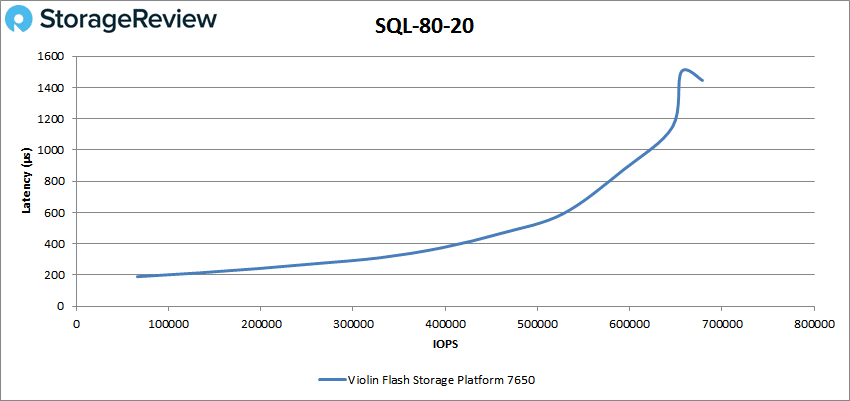

For SQL 80-20, the 7650 had sub-millisecond latency until about 620K IOPS and peaked at 678,858 IOPS and a latency of 1.45ms.

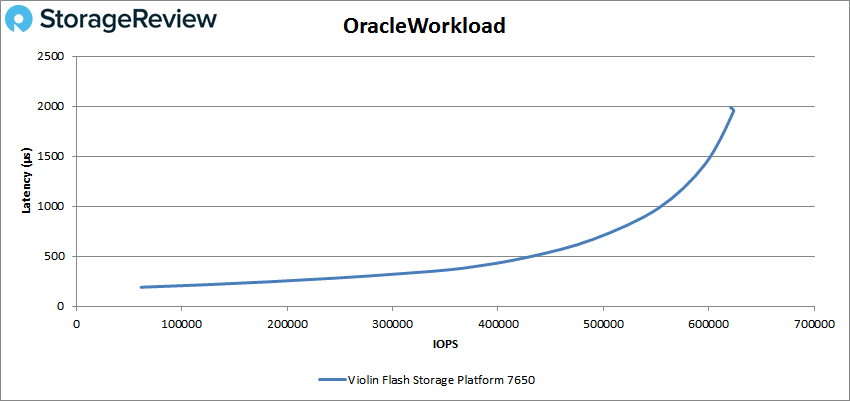

In our Oracle workload, the 7650 stayed beneath 1ms until just over 552K IOPS and peaked at 623,453 IOPS with a latency of 1.95ms.

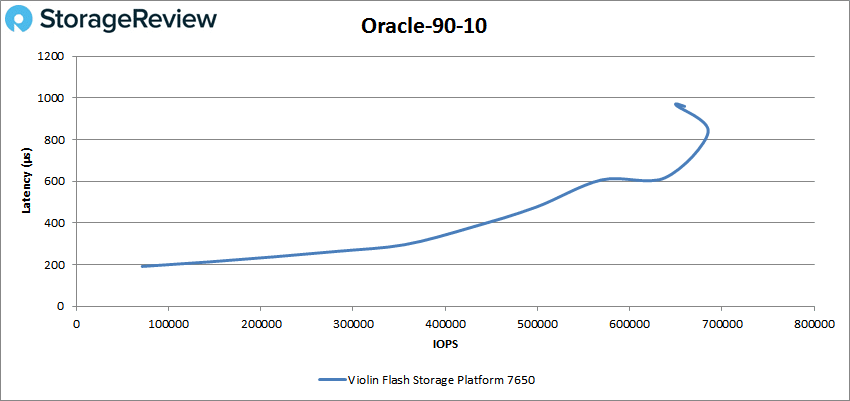

With the Oracle 90-10, the 7650 had sub-millisecond latency throughout and peaked at 685K IOPS with a latency of 837μs, after which performance fell off somewhat and latency increased.

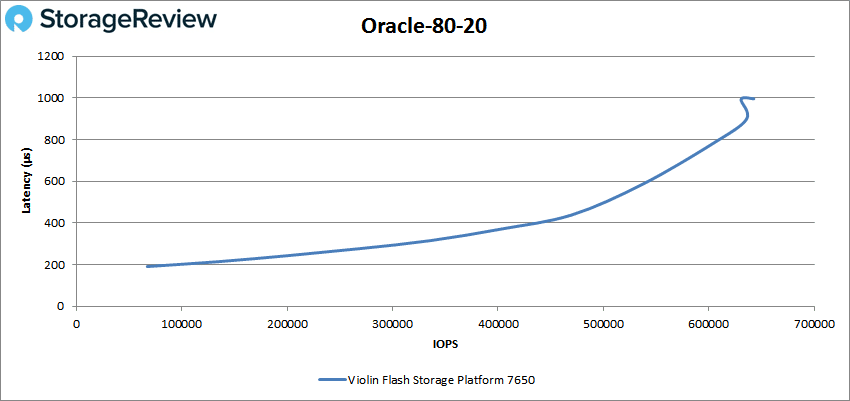

With the Oracle 80-20, the Violin FSP 7650 once again had sub-millisecond latency throughout but only just. The SAN peaked at 642,732 IOPS and 996μs.

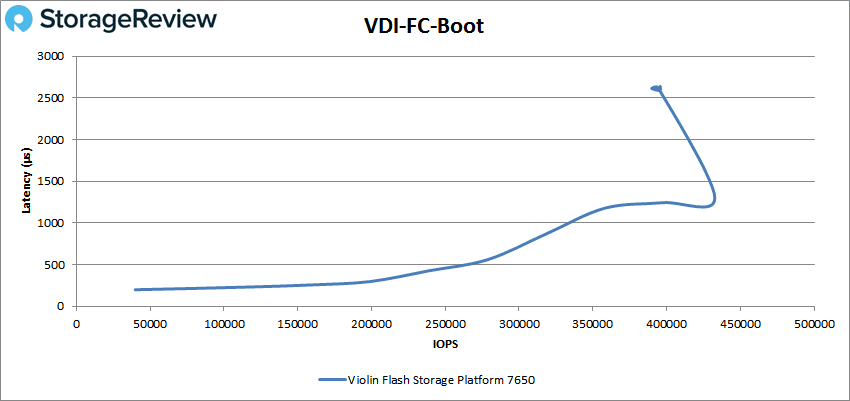

Next we switched over to our VDI clone test, Full and Linked. For VDI Full Clone Boot, the 7650 made it until about 320K IOPS before going over 1ms. The SAN peaked at 433K IOPS with a latency of 1.3ms before dropping off some.

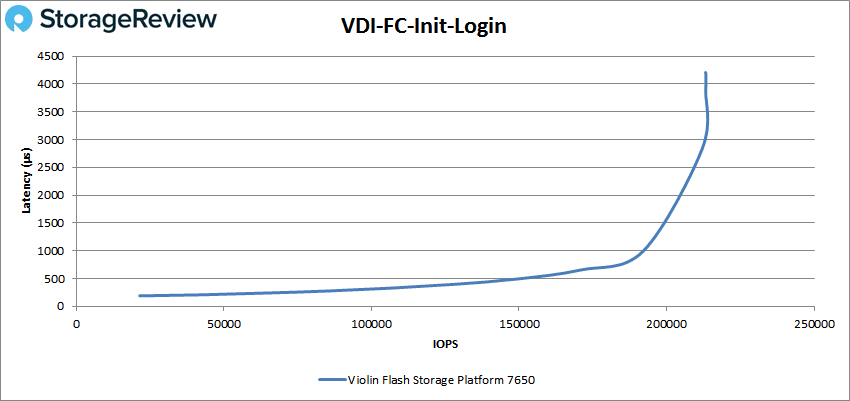

For the VDI FC Initial Login, the 7650 had sub-millisecond latency until 192K IOPS and peaked at around 213K IOPS with a latency of 3ms before dropping off slightly.

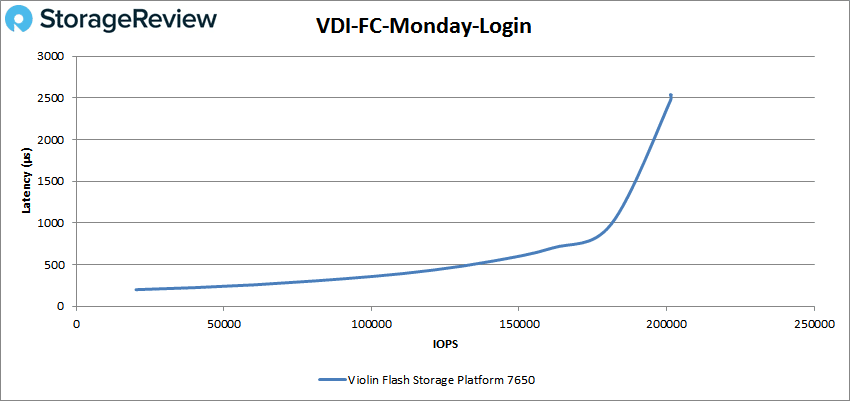

The VDI Full Clone Monday Login saw the 7650 hit 181K IOPS before breaking 1ms and peaked at 201,378 IOPS with a latency of 2.5ms.

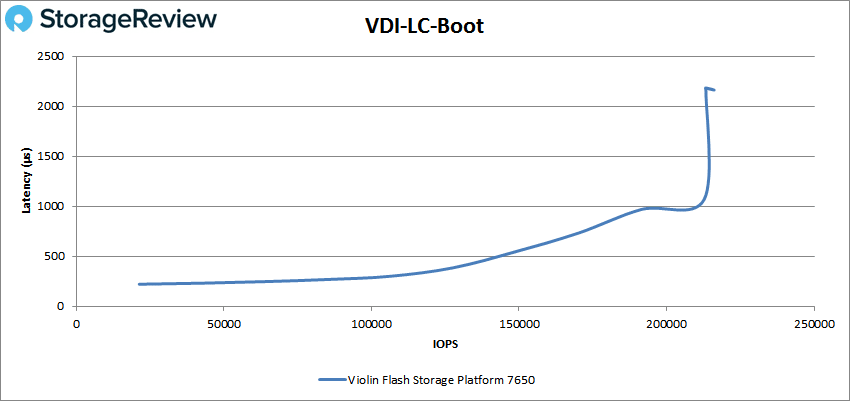

Switching over to VDI Linked Clone, the boot test showed the 7650 made it to roughly 210K IOPS before going over 1ms, though it straddled the line a bit before going over. The SAN peaked at 216,102 IOPS with a latency of 2.16ms.

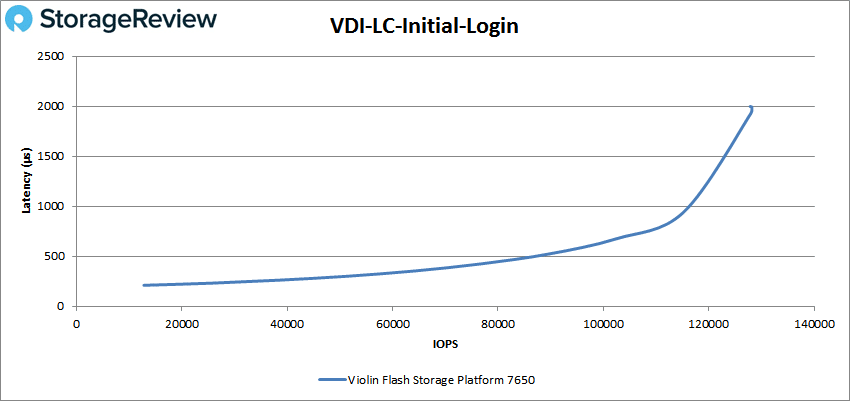

With VDI Linked Clone initial login the 7650 passed 155K IOPS with less than 1ms of latency. The SAN peaked at 128,002 IOPS with a latency of 1.93ms.

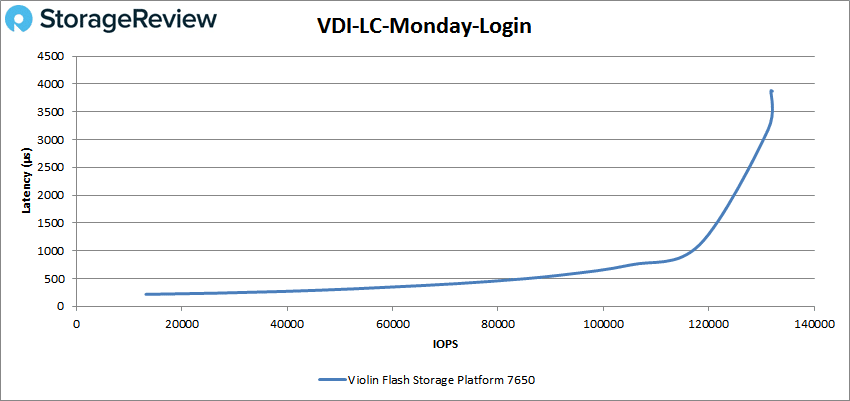

Finally, the VDI Linked Clone Monday Login had the 7650 with sub-millisecond latency until about 118K IOPS and peaked at about 132K IOPS with a latency of roughly 3.5ms.
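One way to compare these VDBench results across workloads is to ask how much of the peak throughput was delivered before latency crossed 1ms. The sketch below does that for a handful of the tests, using the approximate crossover points and peaks quoted above; the crossover values are rounded readings (“about”, “just over”), so the ratios are rough.

```python
# workload -> (approximate IOPS when latency crossed 1ms, peak IOPS), from the results above.
RESULTS = {
    "4K random read": (1_500_000, 1_613_302),
    "4K random write": (900_000, 902_388),
    "SQL": (650_000, 767_440),
    "Oracle": (552_000, 623_453),
    "VDI Full Clone boot": (320_000, 433_000),
    "VDI Linked Clone boot": (210_000, 216_102),
}


def sub_ms_share(crossover_iops: int, peak_iops: int) -> float:
    """Fraction of peak IOPS reached while latency was still under 1ms."""
    return crossover_iops / peak_iops


if __name__ == "__main__":
    for workload, (crossover, peak) in RESULTS.items():
        print(f"{workload}: {sub_ms_share(crossover, peak):.0%} of peak under 1ms")
```

A share close to 100% means the array kept latency low almost all the way to its ceiling, while a lower share points to a longer stretch of the curve where extra IOPS came at the cost of higher latency.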

Conclusion

Violin’s FSP 7650 SAN is geared around extreme performance and by that, the company means high IOPS with ultra-low latency. In fact, the company claims performance numbers as high as 2 million IOPS with latency only being at 1ms. The SAN comes in capacities that range from 8.8TB to 140TB and utilizes the company’s new pay-as-you-grow plan, Scale Smart. The SAN is shipped with all the flash storage contained in the box and when customers need more, they can begin paying for it and instantly have access to it. The FSP 7650 comes with several data services through its Concerto OS 7 software that covers data security, scaling through online expansion and replication for continuity.

For performance, we ran both our application workload analysis tests (SQL Server and Sysbench) and our VDBench tests. With the SQL Server test, the 7650 was able to hit an aggregate transactional score of 12,642.2 TPS with an aggregate average latency of 3ms. For Sysbench, we ran 8VMs, 16VMs, and 32VMs and looked at transactional performance and latency. The 7650 was able to hit 17,021.7 TPS at an average latency of 15ms with 8VMs, 23,202.2 TPS at 22ms with 16VMs, and 25,313.7 TPS at 41.1ms with 32VMs. In the worst-case scenario, latency was only 27.7ms for 8VMs, 40.8ms for 16VMs, and 75.5ms for 32VMs. Across both of our application-testing scenarios, the Violin FSP 7650 did exactly what it claimed it could do: offer exceptionally high performance while maintaining very low latency. Drilling into the Sysbench data, we were also impressed with how much performance we were able to squeeze out at a low VM count, since some storage systems require very high loads to achieve all of their performance, at the cost of higher latency. Latency on this unit was so good that even at the highest 32VM load in Sysbench, 99th percentile latency stayed under 76ms!

The results of the VDBench tests showed several impressive numbers for the Violin FSP 7650. Again, the array offered exceptionally consistent high performance, even as queue depths ramped up. In our 4K random workload driven across 16 VMs in an ESXi 6.5 environment, the array started off at 162K IOPS at 0.196ms and maintained sub-millisecond latency up to 1.5M IOPS. The SAN broke 1.6 million IOPS at a 2.26ms latency in 4K read and hit 902K IOPS in 4K write, also at 2.26ms. For sequential numbers, the SAN hit 8GB/s read and 3.5GB/s write in our 64K tests. With our SQL workloads, the Violin had peak performance of 767K IOPS, 752K IOPS for the 90-10, and 679K IOPS for the 80-20. In Oracle, the FSP 7650 peaked at 623K IOPS, 685K IOPS for 90-10, and 643K IOPS in 80-20. IOPS were not as high in our VDI Clone tests, but this was expected. For Full Clone, the 7650 peaked at 433K IOPS for boot, 213K IOPS for Initial Login, and 201K IOPS for Monday Login, with 3ms being the highest latency at peak performance. For Linked Clone, the SAN peaked at 216K IOPS for boot, 128K IOPS for Initial Login, and 132K IOPS for Monday Login, with 3.5ms being the highest latency.

The Violin FSP 7650 hits all the marks we were looking for, including a price point that is much more aggressive than expected. The array comes with an over-built chassis designed to take anything you could throw at it, offers an easy-to-use and customizable management suite, and handled every workload we deployed with ease. Latency-sensitive applications such as our SQL Server environment had no trouble, and IOPS/throughput-hungry workloads such as Sysbench pushed even higher without breaking a sweat. Furthermore, questions around the viability of the company that may have held buyers back in years past have been answered. Violin has the funding in place to be a player again in enterprise IT along with the support services required. Anyone needing a powerhouse of an array for Tier0/1 workloads would do well to consider Violin Systems.