In Part 1 of our X-IO Technologies ISE 860 G3 Review, we laid out an overview of the ISE 860 G3 and looked at application and synthetic benchmarks. To briefly recap, the platform performed extraordinarily well across everything we threw at it, including MySQL, SQL Server and synthetic workloads. In this second part, we expand our VMware virtualization testing of the ISE 860 G3 with VMmark, fail a controller under load, and stress the ISE 860 G3’s QoS engine with MySQL workloads.

VMmark Performance Analysis

As with all of our Application Performance Analysis, we attempt to show how products perform in a live production environment compared to the company’s performance claims. We understand the importance of evaluating storage as a component of larger systems, most importantly how responsive storage is when interacting with key enterprise applications. In this test we use VMware’s VMmark virtualization benchmark in a multi-server environment.

VMmark is by design a highly resource-intensive benchmark, with a broad mix of VM-based application workloads stressing storage, network and compute activity. Because it covers storage I/O, CPU and network performance within a single VMware environment, there are few better benchmarks for testing virtualization performance.

Dell PowerEdge R730 VMware VMmark 4-node Cluster Specifications

- Dell PowerEdge R730 Servers (x4)

- CPUs: Eight Intel Xeon E5-2690 v3 2.6GHz (12C/24T), two per server

- Memory: 64 x 16GB DDR4 RDIMM (16 per server, 1TB total)

- Emulex LightPulse LPe16002B 16Gb FC Dual-Port HBA

- Emulex OneConnect OCe14102-NX 10Gb Ethernet Dual-Port NIC

- VMware ESXi 6.0

ISE 860 G3 (20×1.6TB SSDs per DataPac)

- Before RAID: 51.2TB

- RAID 10 Capacity: 22.9TB

- RAID 5 Capacity: 36.6TB

- List Price: $575,000
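The capacity figures above imply a particular RAID overhead and cost per usable terabyte. The sketch below does that arithmetic using only the listed raw capacity, usable capacities and list price. It assumes no data reduction and ignores support, discounts and other costs, so treat it as a rough comparison rather than a pricing model.

```python
# Figures copied from the ISE 860 G3 specification list above.
RAW_TB = 51.2
LIST_PRICE_USD = 575_000
USABLE_TB = {"RAID 10": 22.9, "RAID 5": 36.6}


def efficiency(usable_tb: float, raw_tb: float = RAW_TB) -> float:
    """Fraction of raw flash left after RAID overhead."""
    return usable_tb / raw_tb


def price_per_usable_tb(usable_tb: float, price: float = LIST_PRICE_USD) -> float:
    """List price divided by usable capacity (no data reduction assumed)."""
    return price / usable_tb


if __name__ == "__main__":
    for level, tb in USABLE_TB.items():
        print(f"{level}: {efficiency(tb):.1%} of raw, ${price_per_usable_tb(tb):,.0f} per usable TB")
```

At list price this works out to about 44.7% of raw capacity and $25,109 per usable TB for RAID 10, versus 71.5% and $15,710 per usable TB for RAID 5.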

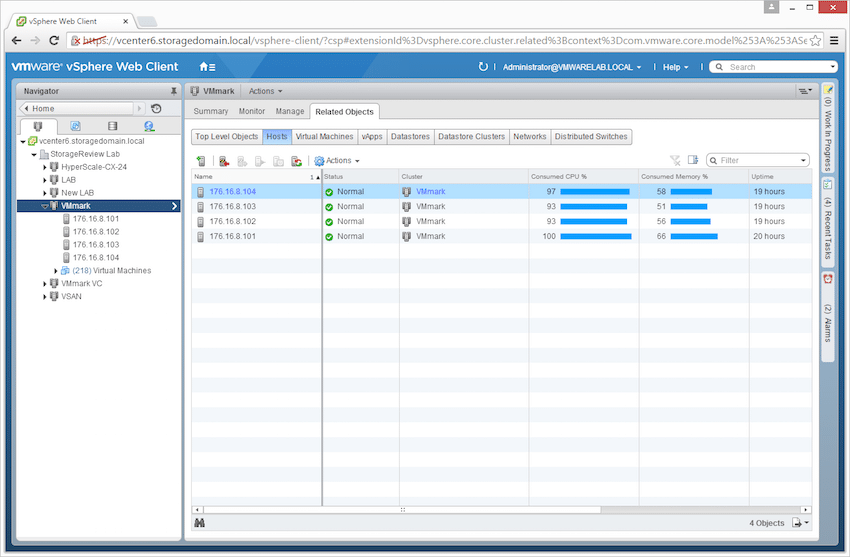

In our initial look at VMware VMmark performance with the X-IO ISE 860, we use the Dell PowerEdge R730 13G 4-node cluster as the driving force behind the workload. With eight Intel E5-2690 v3 Haswell CPUs (96 cores at 2.6GHz), this cluster offers 249.6GHz of CPU resources for the applications running as part of each VMmark tile. We’ve generally seen a requirement of about 10GHz per tile, so under optimal conditions this cluster should be capable of running between 24 and 26 tiles. Going beyond that would require adding servers to the cluster or switching to a higher-tier processor such as the E5-2697 v3 or E5-2699 v3. In other words, when this cluster tops out, the storage will most likely still have headroom to go higher.
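The tile estimate above comes down to simple arithmetic. The sketch below multiplies sockets, cores and base clock, then divides by the roughly 10GHz per tile we have observed. It ignores Turbo Boost and Hyper-Threading, which is how the 249.6GHz figure is derived. The function names are illustrative and not part of any VMmark tooling.

```python
def cluster_ghz(sockets: int, cores_per_socket: int, base_ghz: float) -> float:
    """Aggregate base-clock capacity: sockets x cores x GHz.

    Ignores Turbo Boost and Hyper-Threading, matching the 249.6GHz figure above.
    """
    return sockets * cores_per_socket * base_ghz


def estimated_tiles(total_ghz: float, ghz_per_tile: float = 10.0) -> float:
    """Rough tile ceiling from the ~10GHz-per-tile figure we have observed."""
    return total_ghz / ghz_per_tile


# Four R730 servers with two E5-2690 v3 (12 cores, 2.6GHz base) each.
total = cluster_ghz(sockets=8, cores_per_socket=12, base_ghz=2.6)
print(f"{total:.1f} GHz, roughly {estimated_tiles(total):.0f} tiles")
```

The result of just under 25 tiles falls inside the 24 to 26 tile range quoted above. The per-tile figure is an empirical rule of thumb, so the actual ceiling depends on how much CPU each tile really consumes.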

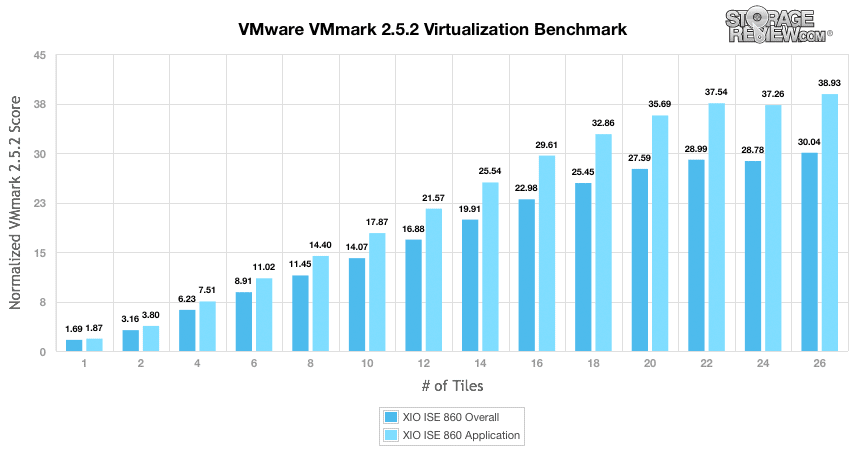

Scaling the VMmark workload on the X-IO ISE 860, we saw strong linear improvement from 1 to 22 tiles. Beyond 22 tiles, performance started to taper off slightly as our compute cluster pegged its CPU utilization; with a larger cluster, the ISE 860 could easily handle additional load. The performance monitoring behind the scenes backs that up: latency measured under 1ms during our 26-tile runs, with a handful of single-digit millisecond spikes during Storage vMotion (svMotion) and deploy actions. Low latency is an absolute must for an all-flash array, and the X-IO ISE 860 doesn’t disappoint.

Controller Failure Testing

SAN designs on the market differ, as do controller configurations such as active/passive and active/active. When it comes to handling failures, both designs allow a spare or secondary controller to take over storage duties if the primary controller goes offline. We’ve seen growing interest in how different platforms cope with controller failures, since not all platforms are equal. The scenario we designed is rather basic at its core: deploy a substantial workload on the storage array, wait until the workload reaches steady state, and then pull a controller. During this process we look at how performance characteristics change, monitor for dropped I/O and, most importantly, measure how quickly the platform resumes the workload under test. For the X-IO ISE 860 we used our Sysbench workload, with four instances spread across two volumes.
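The "wait until steady state" step can be made more concrete. The sketch below treats a per-second TPS trace as steady once its recent samples vary by only a few percent. The 60-sample window and 5% coefficient-of-variation threshold are illustrative assumptions, not the criteria used in this review. In practice we simply waited roughly 15 minutes.

```python
from statistics import mean, pstdev
from typing import Sequence


def is_steady(tps: Sequence[float], window: int = 60, max_cv: float = 0.05) -> bool:
    """Return True if the last `window` samples vary by at most `max_cv`.

    Variation is the coefficient of variation: population standard deviation
    divided by the mean. Window size and threshold are illustrative assumptions.
    """
    if len(tps) < window:
        return False  # not enough history to judge yet
    recent = tps[-window:]
    avg = mean(recent)
    if avg == 0:
        return False  # an idle or stalled workload is not "steady state"
    return pstdev(recent) / avg <= max_cv
```

A trace that ramps up and then holds flat for a full window passes this check. A trace that keeps swinging between high and low values does not.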

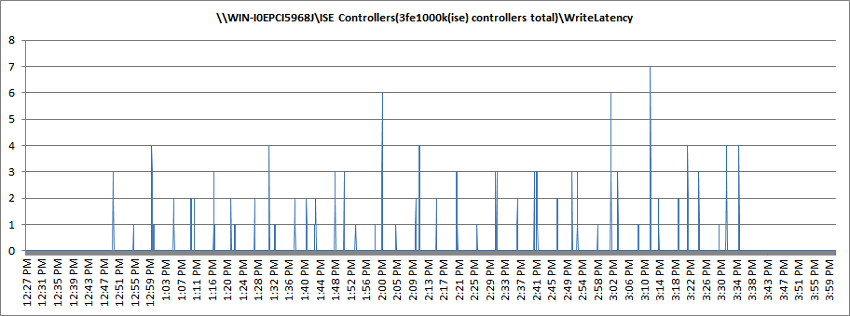

With four Sysbench VMs running on the ISE 860, we waited roughly 15 minutes for the workload to even out on the storage array, at which point it measured about 1,100 TPS per VM. When we pulled one controller, performance tapered off for 3-4 seconds across all VMs, paused for about 10 seconds, and then quickly returned to the level measured before the failure. Our VMware ESXi 6.0 hosts easily coped with this storage I/O interruption and continued working as if nothing had happened.
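One way to quantify a failover like this is to count how many seconds a VM spent well below its pre-failure baseline, and how many of those seconds were a complete stall. The sketch below does that for a one-sample-per-second TPS trace. The 50% threshold is an assumption. The example trace is synthetic, shaped loosely after the behavior described above, and is not measured data.

```python
from typing import Sequence, Tuple


def disruption_window(tps: Sequence[float], baseline: float,
                      threshold: float = 0.5) -> Tuple[int, int]:
    """Return (seconds_degraded, seconds_stalled) for a 1-sample-per-second trace.

    degraded: samples below threshold * baseline (stalled seconds included)
    stalled:  samples where no transactions completed at all
    """
    floor = threshold * baseline
    degraded = sum(1 for sample in tps if sample < floor)
    stalled = sum(1 for sample in tps if sample == 0)
    return degraded, stalled


# Synthetic illustration only: steady load, a short taper, a ~10 s pause, recovery.
trace = [1100] * 5 + [800, 500, 300] + [0] * 10 + [1100] * 5
print(disruption_window(trace, baseline=1100))
```

Counting stalled seconds separately from merely degraded ones distinguishes an I/O pause, which the hosts must ride out, from reduced throughput while the surviving controller takes over.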

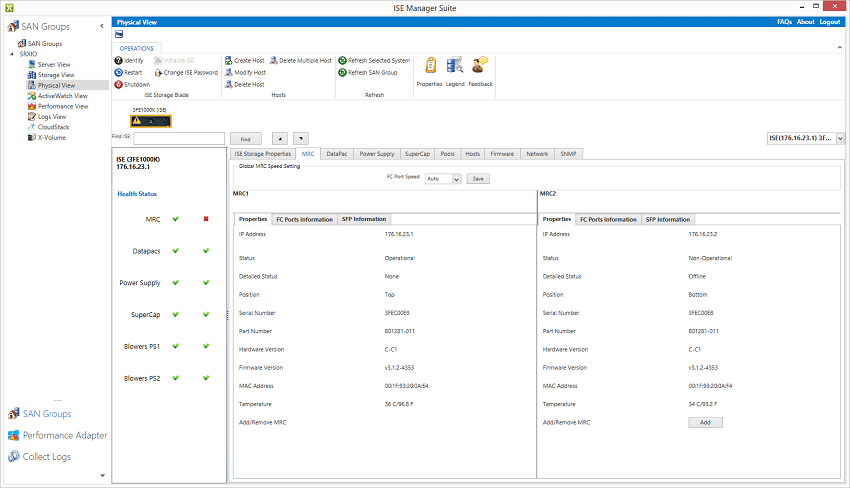

The X-IO ISE Manager Suite showed the failure after about 5 minutes (refreshing manually may have shown it sooner). About 10-15 minutes after we pulled the controller, we also received an automated email alert from X-IO support warning us of the failure.

To bring the old controller back into the fold (or to add a replacement controller), you simply insert it into the back of the array. The array then detects and analyzes the controller and indicates that it is capable of merging with the array. This process took a few minutes, after which an “Add” button appeared in the ISE Manager controller view. Once we clicked it, we saw a similar dip in performance, followed by a few seconds of I/O pause before the array was back to normal. Just as with the original failure, VMware ESXi 6.0 had no trouble handling this interruption and we saw no errors at the guest OS level. Not all storage arrays are created equal in this regard, and it is nice to see that the ISE 860 could easily handle a catastrophic failure.

X-IO Technologies ISE 860 G3 QoS

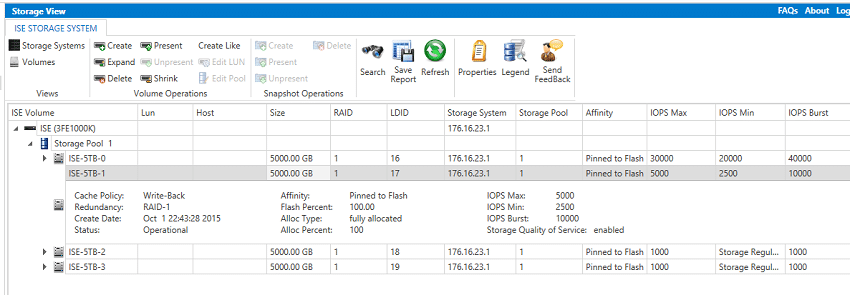

We briefly touched on QoS in the first part of our review; here we take a more in-depth look. X-IO offers QoS functionality on its ISE storage arrays. QoS settings are applied at the volume level, where the user can specify IOPS Max, IOPS Min and IOPS Burst. While synthetic results can show how well QoS profiles work on a given device, seeing how applications respond to them is far more valuable. We again used our Sysbench MySQL TPC-C workload in this section, since it offers excellent real-time performance monitoring. Our scenario leveraged a 4-VM deployment, with two VMs on one volume and the other two on a second volume. One volume represented a “production” use case, where we wanted no performance restriction compared to an unregulated benchmark, while the other represented a “development” use case. This reflects an enterprise setting where multiple database instances run on primary storage, but development instances must not impact production VMs.
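X-IO does not describe how the ISE enforces these limits internally. A common general technique for capping IOPS while still allowing short bursts is a token bucket, sketched below purely as an illustration and not as X-IO's implementation. Tokens refill at a sustained rate, loosely analogous to IOPS Max. The bucket depth sets how many I/Os can be issued back-to-back above that rate, loosely analogous to a burst allowance. An IOPS Min guarantee would need a scheduler that reserves capacity across volumes, which this sketch does not model.

```python
from dataclasses import dataclass, field


@dataclass
class TokenBucket:
    """Generic IOPS limiter (illustrative, not X-IO's implementation).

    rate_iops: tokens added per second, i.e. the sustained I/O ceiling
    depth:     maximum stored tokens, i.e. how large a burst can be absorbed
    """
    rate_iops: float
    depth: float
    tokens: float = field(init=False, default=0.0)
    last: float = 0.0  # timestamp (seconds) of the previous refill

    def __post_init__(self) -> None:
        self.tokens = self.depth  # start full so an idle volume can burst

    def admit(self, now: float, ios: int = 1) -> bool:
        """Refill for the elapsed time, then admit `ios` I/Os if enough tokens remain."""
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.depth, self.tokens + elapsed * self.rate_iops)
        self.last = now
        if self.tokens >= ios:
            self.tokens -= ios
            return True
        return False  # caller would queue or delay this I/O
```

With a rate of 10 and a depth of 20, an idle bucket admits a burst of 20 I/Os at once. One second later it has refilled only enough for 10 more, so sustained throughput converges on the configured rate.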

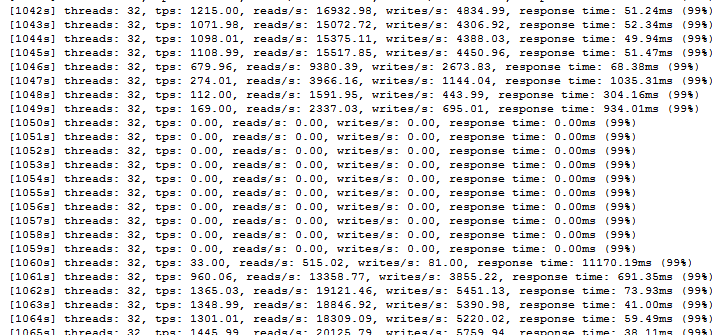

Enabling and configuring QoS at the volume level is very easy on the X-IO ISE 860. The settings are accessed through the same menus used when provisioning volumes, where the default is “storage regulated,” or full performance. To enable QoS you simply enter an IOPS value and, through some trial and error, see how it affects your workload. It is worthwhile to first establish a baseline by monitoring your workload’s unrestricted IOPS in the ISE Manager performance view. In this case two Sysbench VMs consumed over 20,000 IOPS, so we set a 30k IOPS Max, 40k IOPS Burst and 20k IOPS Min on the volume running our production workload. For our development volume, we ran through a few iterations to see how limiting the I/O profile impacted our live Sysbench run.
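The production settings above can be checked against the measured baseline before applying them. The sketch below is a hypothetical model of the three per-volume knobs, not an X-IO API. It assumes the limits should satisfy Min ≤ Max ≤ Burst, which matches the values we chose but is not a documented ISE rule. It also reports how much room IOPS Max leaves above the unthrottled baseline.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VolumeQoS:
    """Hypothetical model of the per-volume QoS settings (not an X-IO API)."""
    iops_min: int
    iops_max: int
    iops_burst: int

    def __post_init__(self) -> None:
        # Assumed ordering constraint; the ISE's own validation may differ.
        if not (0 < self.iops_min <= self.iops_max <= self.iops_burst):
            raise ValueError("expected 0 < min <= max <= burst")

    def headroom_over(self, baseline_iops: int) -> float:
        """Ratio of IOPS Max to an observed unthrottled baseline (1.0 means no headroom)."""
        return self.iops_max / baseline_iops


# Values from our production volume; the baseline is the ~20,000 IOPS we observed.
production = VolumeQoS(iops_min=20_000, iops_max=30_000, iops_burst=40_000)
print(f"{production.headroom_over(20_000):.2f}x headroom over the baseline")
```

Setting IOPS Max about 1.5x above the observed baseline is consistent with seeing no performance change on the production volume.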

The first example shows Sysbench running on our production volume with QoS enabled. We saw no performance change compared with the default storage-regulated (unrestricted) setting.

On our development Sysbench workload, we were easily able to control the performance profile, which translated to stable, albeit lower, performance. In the example below we switched from a profile set to half the performance of our production volume to one lowering the IOPS level to 25% of the original. As you can see, the performance change took place immediately, with no I/O interruption or instability. For buyers concerned about noisy neighbors affecting high-priority workloads, X-IO offers a highly capable QoS feature set that performs very well under real-world conditions.
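The text does not list the exact limits used for the development profiles. The sketch below assumes, for illustration only, that "half" and "25%" mean scaling each of the production volume's limits (20k Min, 30k Max, 40k Burst) by that fraction. Under that assumption, the two development profiles follow directly.

```python
from typing import Dict

# Production limits from our setup; scaling all three is an assumption.
PRODUCTION = {"iops_min": 20_000, "iops_max": 30_000, "iops_burst": 40_000}


def scaled_profile(base: Dict[str, int], fraction: float) -> Dict[str, int]:
    """Scale every QoS limit by `fraction`, rounding to whole IOPS."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return {name: round(value * fraction) for name, value in base.items()}


for frac in (0.5, 0.25):
    print(frac, scaled_profile(PRODUCTION, frac))
```

Under this assumption, the 25% profile caps the development volume at 7,500 IOPS Max. If only IOPS Max were lowered, the Min and Burst values would need adjusting separately.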

Part 2 Final Thoughts

The second segment of this review series takes a broad look at both performance and serviceability. On the performance front, the ISE 860 took down 26 tiles in VMmark, maxing out the 4-node cluster. Under that 26-tile load, write latency was incredibly low, measuring under 1ms with spikes below 10ms. The ISE clearly has more headroom here, which we’ll explore further. Absorbing everything the four servers could throw its way is no small feat, but it was expected nonetheless, as the ISE continues to demonstrate fantastic performance across a variety of workloads.

Looking beyond performance, one of X-IO’s claims to fame with the ISE series is that it needs no routine maintenance. Failing a drive isn’t a meaningful test here: X-IO aggregates the storage to intentionally obscure the individual drives, even if you could physically access them while the array is operational. Instead, we less than gracefully pulled a controller to see what happens to active workloads. After a brief blip as the second controller absorbed the load, everything continued with no issues in vCenter or the guest OSs. We also dove into the ISE’s QoS features, which allow tight controls on a per-volume basis. Mature QoS capabilities aren’t widely found on primary storage arrays, so having this level of granular control is a nice-to-have, especially for those who run non-critical dev workloads on primary storage or have noisy neighbors that can eat up far more than their fair share of resources.

We’ll continue to work with the ISE 860 as we further develop our testing strategies for this new breed of high-performance storage. Next steps include testing against a Cisco UCS Mini with eight blades and a few additional workloads where flash arrays can excel.

