Oracle Cloud Infrastructure includes a wide variety of service offerings, including compute, storage, networking, database, and load balancing; in effect, all the infrastructure necessary to construct a cloud-based data center. In the context of this review, we’re interested in the Oracle Cloud Infrastructure Compute category, with a specific focus on its bare metal instances. Like most cloud providers, Oracle offers virtualized compute instances, but unlike most other virtual offerings, Oracle can back these with shapes that contain up to ~25TB of NVMe storage for applications that need low latency. As great as those are, Oracle has further upped the ante for cloud compute performance by offering the industry’s first high-performance bare metal instances, ideal for mission-critical applications where latency is paramount. Instances come with up to 52 OCPUs, 768GB RAM, dual 25GbE NICs, and up to 51TB of locally attached NVMe storage. For those who need more, up to 512TB of network-attached NVMe block storage is available, as well as GPU options. All the different Oracle compute offerings run on a highly optimized software-defined network tuned to minimize contention and maximize performance.

There is a wide variety of cloud offerings at this point, including several large ones, with AWS, Google Cloud Platform, and Microsoft Azure at the top of that list. While these cloud service providers offer many great products and services, one thing they typically aren’t known for is performance. When comparing cloud to on-premises, on-prem has generally beaten cloud hands down. Oracle is looking to change this view with its cloud infrastructure offerings.

Oracle’s compute offerings come with promises one would expect out of on-prem storage or servers, including performance, availability, versatility, and governance. The performance side supports peak and consistent performance for mission-critical applications and is backed by the recently announced end-to-end cloud infrastructure SLA, which as of this writing is the only one like it in the industry. The offering supports availability at multiple layers including DNS, load balancing, replication, backup, storage snapshots, and clustering. The compute offerings range from a single-core VM to 52-core single-tenant bare metal, offering the versatility to run everything from common workloads to HPC clusters. And with Oracle’s bare metal instances, customers get the isolation and control of an on-prem server, as they contain no other tenants and no Oracle-provided software.

The compute offerings from Oracle Cloud come in several “shapes,” including bare metal instances, bare metal GPU instances, and VM instances. For this review we will be looking at bare metal instances, which according to Oracle can deliver up to 5.1 million IOPS and are intended for use cases such as mission-critical database applications, HPC workloads, and I/O-intensive web applications. For comparison, we’ll also show Oracle’s VM shapes, with local NVMe storage (DenseIO) and with network block storage (Standard).

Management

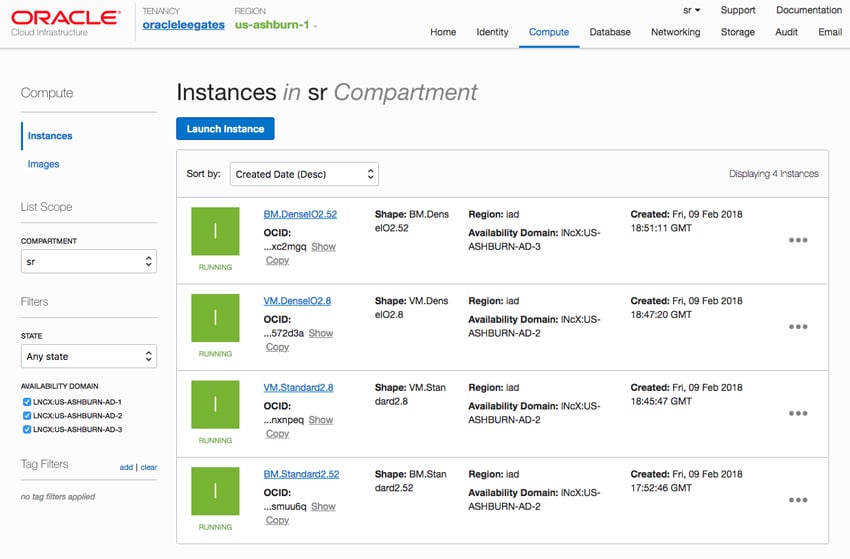

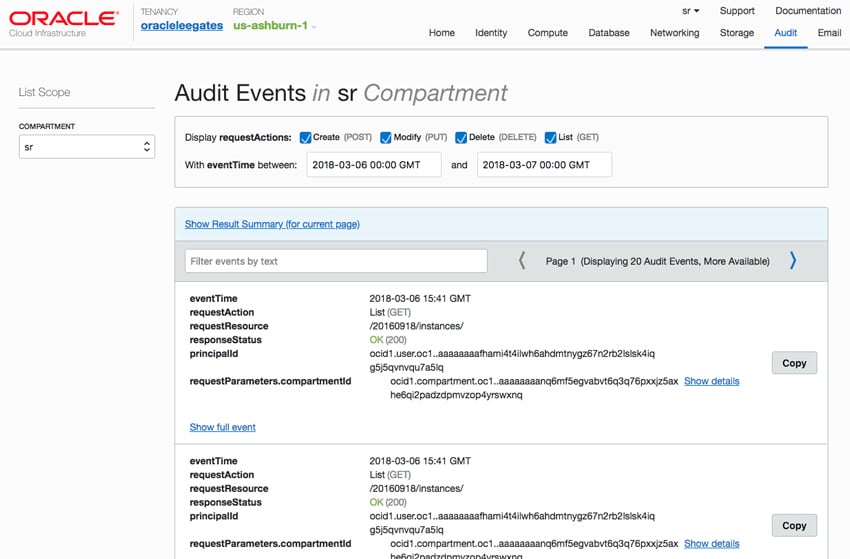

The management GUI for Oracle Cloud Infrastructure is fairly simple to figure out. The main page has walkthroughs and assistance, if needed. Along the top are the tenancy or account, the region one is using (us-ashburn-1, in our case), along with tabs for Home (which is the main page), Identity, Compute, Database, Networking, Storage, Audit, and Email. For our testing, DenseIO2 and Standard2 are shown.

Since this review focuses on the Compute side, looking under that tab we can see the instances we will use for our performance tests. Each instance has its name, shape, region, availability domain, and when it was created. On the left-hand side, users can change the listing by selecting the state, for instance, “running.”

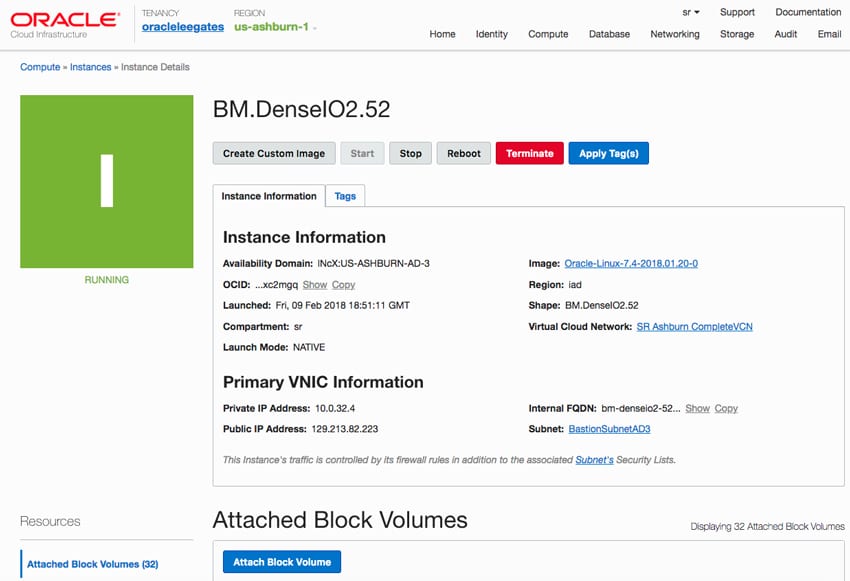

Clicking on the ellipsis on the right-hand side allows one to drill a little deeper into an instance. Looking at the BM.DenseIO2.52, one can easily see if the instance is running and more in-depth information about it. Tags can be associated with it here as well. Along the top of the info is the ability to create a custom image, start, stop, or reboot the instance, terminate it or apply tags. Scrolling down gives one the ability to attach block volumes as well.

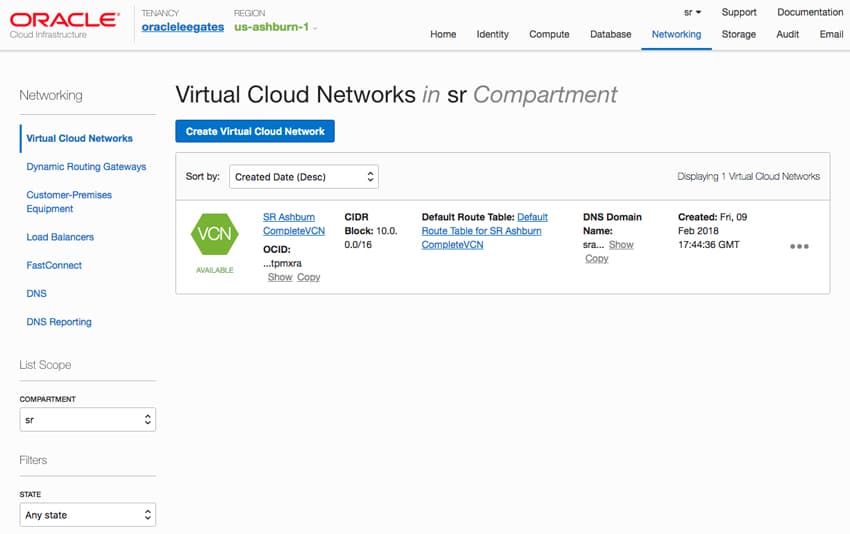

Under the Networking tab, one can see the Virtual Cloud Network being used or create one. For the VCN, there is information such as region, default route table, DNS domain, and when it was created. Again, the ellipsis on the right allows for drilling down, applying tags, and creating a subnet.

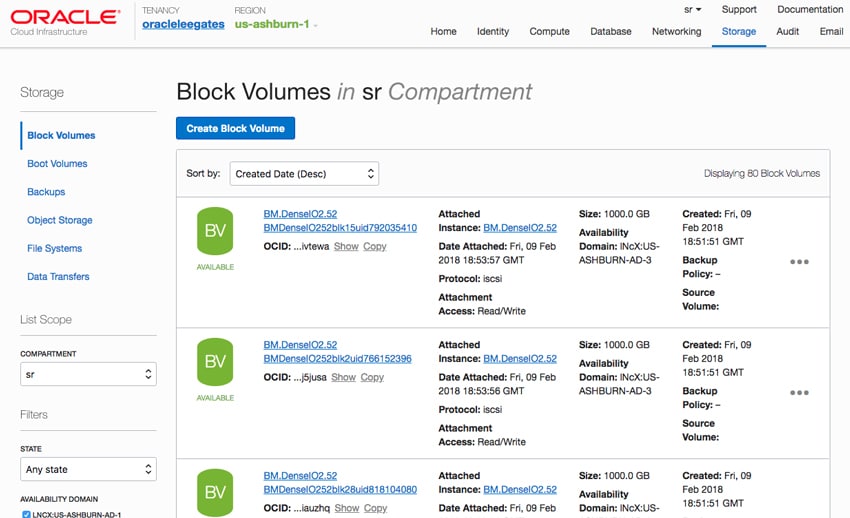

Under the Storage tab, users can see the block volumes in their compartment and create more. The block volumes are listed by date created and users can drill down for more information, create manual backups, detach the block volume from the instance, delete the volume, or apply tags.

And as Audit implies, one can quickly look at past events by selecting the date and time range. This enables enterprises to meet management compliance needs where the user and action for every event or change to the environment is captured.

Performance

VDBench Workload Analysis

To evaluate the performance of these Oracle Cloud instances, we used VDBench installed locally on each platform. Our tests were spread across all local storage at the same time; if both BV (Block Volume) and NVMe storage were present, we tested one group at a time. With both storage types, we allocated 12% of each device and grouped the devices together in aggregate to look at peak system performance with a moderate amount of data locality.

These workloads cover a range of testing profiles, including “four corners” tests, common database transfer size tests, and trace captures from different VDI environments. All of these tests use the common VDBench workload generator, with a scripting engine to automate and capture results over a large compute testing cluster. This allows us to repeat the same workloads across a wide range of storage devices, including flash arrays and individual storage devices.

Profiles:

- 4K Random Read: 100% Read, 128 threads, 0-120% iorate

- 4K Random Write: 100% Write, 64 threads, 0-120% iorate

- 64K Sequential Read: 100% Read, 16 threads, 0-120% iorate

- 64K Sequential Write: 100% Write, 8 threads, 0-120% iorate

- Synthetic Database: SQL and Oracle

- VDI Full Clone and Linked Clone Traces
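The four-corners profiles above can be written down as plain data, along with the load points implied by a 0-120% iorate sweep. This is only a sketch: the `Profile` class and `iorate_targets` helper are hypothetical names, the 10% step size is an assumption (the list gives only the range), and the 100,000 IOPS maximum is a placeholder rather than a measured value.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    name: str
    block_kib: int   # transfer size in KiB
    read_pct: int    # 100 = all reads, 0 = all writes
    random: bool     # False means sequential access
    threads: int


# The four-corners profiles as listed in the article.
FOUR_CORNERS = [
    Profile("4K Random Read", 4, 100, True, 128),
    Profile("4K Random Write", 4, 0, True, 64),
    Profile("64K Sequential Read", 64, 100, False, 16),
    Profile("64K Sequential Write", 64, 0, False, 8),
]


def iorate_targets(max_iops, step_pct=10, stop_pct=120):
    """Target rates from step_pct up to stop_pct of a measured maximum.

    ASSUMPTION: 10% increments; only the 0-120% range is stated.
    """
    return [max_iops * pct // 100 for pct in range(step_pct, stop_pct + 1, step_pct)]


# Hypothetical maximum, chosen only to make the steps easy to read.
targets = iorate_targets(100_000)
for p in FOUR_CORNERS:
    print(f"{p.name}: {p.threads} threads, {len(targets)} load points")
```

Targets above 100% simply request more than the measured ceiling, which is why the charts show latency climbing sharply at the end of each run.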

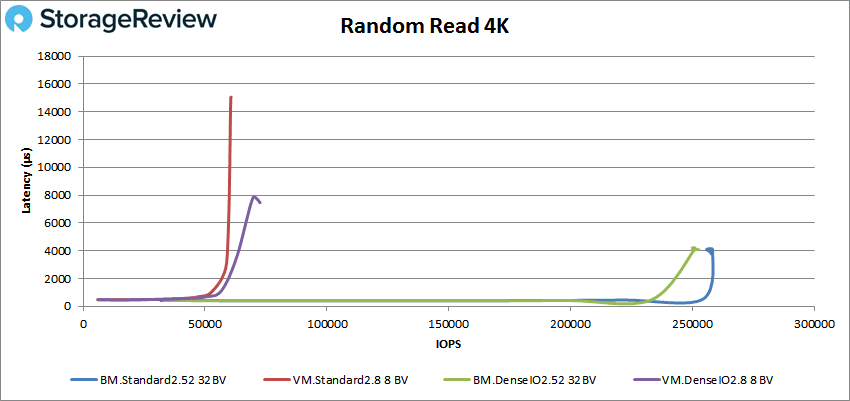

We look at both remote block devices (BV) and NVMe. Since there is such a dramatic performance difference, we have separated the results into two charts (the latency would be so far apart that the charts would be very difficult to read). For this review we look at both Bare Metal (BM) and VM configurations with both standard and dense IO runs on BV and just dense IO runs for the NVMe.

Looking at peak 4K read for BV, all four runs started off with strong sub-millisecond latency. The first to peel away was the VM.Standard, which made it to just under 53K IOPS before exceeding 1ms and then peaked at 60,591 IOPS with a latency of 15ms. The next to exceed 1ms was the VM.DenseIO, at about the same point as the VM.Standard, but it peaked at 72,626 IOPS with a latency of 7.5ms. Both of the bare metal runs did much better, with the BM.DenseIO holding sub-millisecond latency until about 235K IOPS and peaking at 252,275 IOPS with a latency of 4.1ms. The BM.Standard made it until about 250K IOPS before going over 1ms and peaked at 258,329 IOPS with a latency of 4.05ms.
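Each result in this section quotes two things per run: the load at which latency crossed 1ms, and the peak. A minimal sketch of how those can be pulled from a latency curve follows. The function name is hypothetical, and the sample curve is a placeholder shaped loosely like the BM.Standard run; only its final point (258,329 IOPS at 4.05ms) comes from our results.

```python
def summarize_curve(points, threshold_ms=1.0):
    """Summarize a load sweep given (iops, latency_ms) points in load order.

    Returns (last IOPS seen before latency first reaches the threshold,
             peak IOPS, latency at that peak).
    """
    knee = None
    for iops, latency_ms in points:
        if latency_ms >= threshold_ms:
            break
        knee = iops
    peak_iops, peak_latency_ms = max(points, key=lambda p: p[0])
    return knee, peak_iops, peak_latency_ms


# PLACEHOLDER curve: intermediate points are invented for illustration.
curve = [(50_000, 0.35), (150_000, 0.48), (250_000, 0.95), (258_329, 4.05)]
knee, peak, peak_latency = summarize_curve(curve)
print(f"sub-ms until ~{knee} IOPS, peak {peak} IOPS at {peak_latency}ms")
```

If the first point is already over the threshold, the knee comes back as `None`, which is the honest answer for a run that never achieved sub-millisecond latency.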

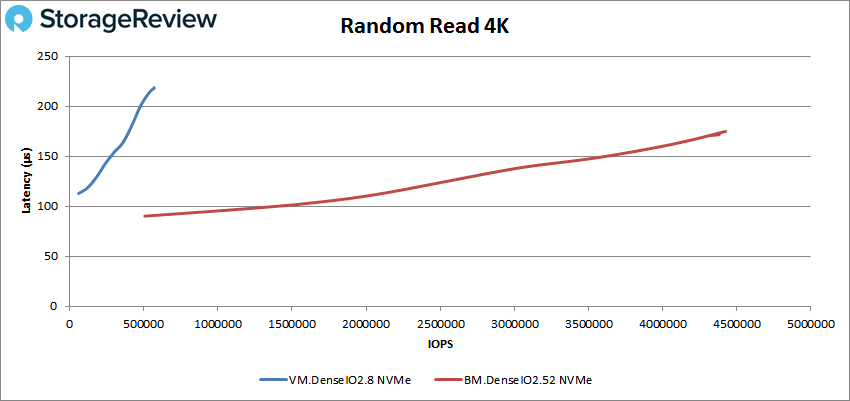

Looking at peak 4K read performance for NVMe, both runs had sub-millisecond latency throughout. The VM.DenseIO peaked at 569,534 IOPS with a latency of 214μs. The BM.DenseIO peaked at 4,419,490 IOPS with a latency of only 174.6 μs.

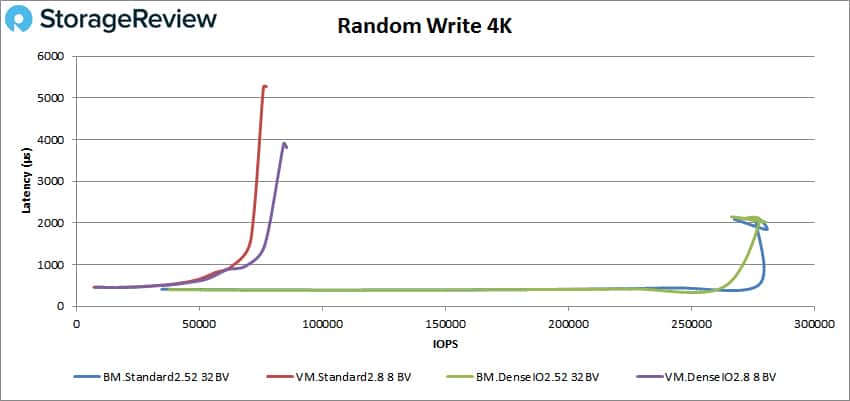

Switching over to random 4K write peak performance for BV, we see a similar placing as before, with the VMs peaking much earlier than the BMs. The VM.Standard made it to about 63K IOPS before exceeding 1ms and peaked at 77,229 IOPS at 5.3ms latency. The VM.DenseIO performed a bit better, hitting about 69K IOPS before going over 1ms, and peaked at 84,274 IOPS with a latency of 3.9ms. The BM.DenseIO was able to make it to north of 263K IOPS before going above 1ms in latency and peaked at 280,158 IOPS with a latency of 2.02ms. And the BM.Standard was the top-performing configuration, making it to about 278K IOPS before going over 1ms, and peaked at 280,844 IOPS with a latency of 1.84ms.

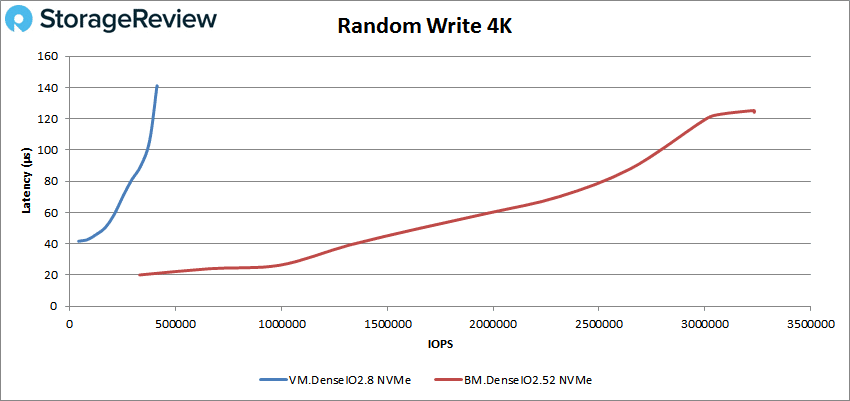

With the NVMe on 4K write, again the BM outperformed the VM with both having sub-millisecond latency performance throughout. The VM.DenseIO peaked at 412,207 IOPS with a latency of 141μs. The BM.DenseIO peaked at 3,232,215 IOPS with a latency of 125μs.

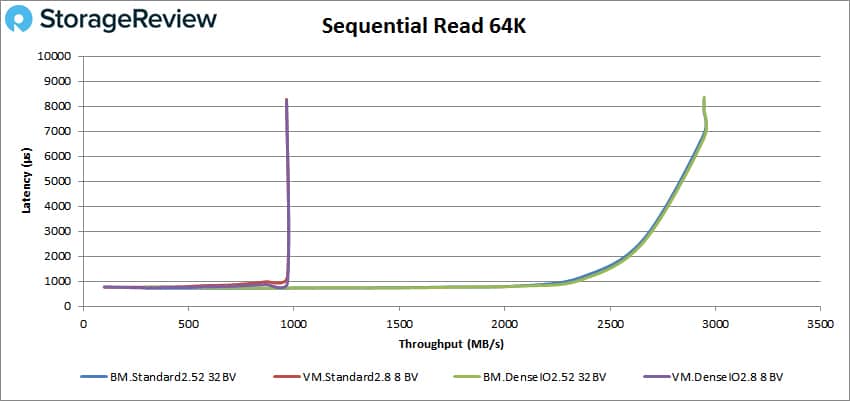

Moving on to sequential work, first we look at 64K read for BV. Both the VM.Standard and VM.DenseIO broke 1ms latency at about 15.5K IOPS or 968MB/s. And both more or less maintained the same performance as latency climbed to 8.2ms. Again we saw something similar with BM.Standard and BM.DenseIO both breaking 1ms at about 37.5K IOPS or 2.35GB/s. Both configs peaked at just north of 47K IOPS or 2.95GB/s at 8.4ms latency.
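The IOPS and bandwidth figures in the sequential tests are two views of the same measurement, linked by the 64K transfer size. As a quick sanity check, the conversion can be sketched as below; treating the article's MB as binary megabytes (MiB) is an assumption, though it reproduces the 968MB/s figure exactly.

```python
def iops_to_mib_per_s(iops, block_kib):
    """Convert an I/O rate to bandwidth in MiB/s for a fixed block size in KiB."""
    return iops * block_kib / 1024


print(iops_to_mib_per_s(15_500, 64))  # 968.75
print(iops_to_mib_per_s(37_500, 64))  # 2343.75
```

The second value, about 2,344 MiB/s, lines up with the roughly 2.35GB/s quoted for the bare metal shapes.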

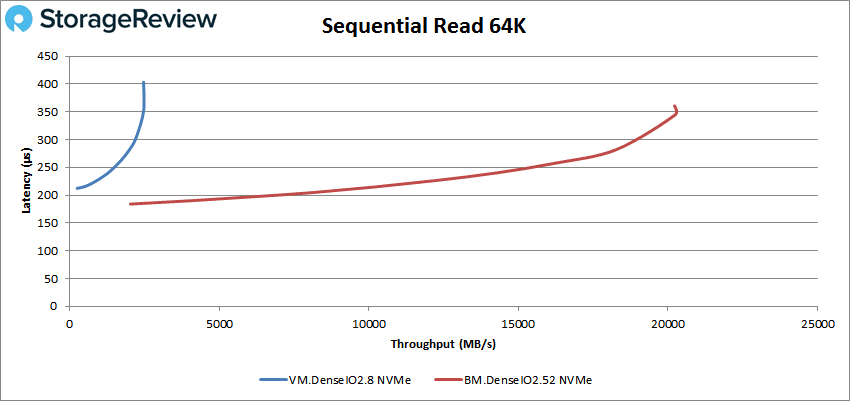

NVMe sequential 64K read had both configs stay at sub-millisecond latency throughout. The VM.DenseIO peaked at 39,512 IOPS or 2.5GB/s with a latency of 403μs, while the BM.DenseIO peaked at 323,879 IOPS or 20.2GB/s with a latency of 361μs.

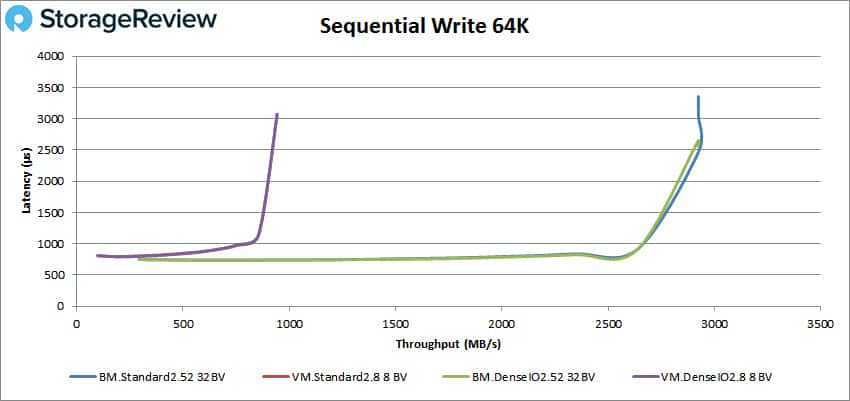

With 64K sequential writes for BV, we again see a similar pattern, with VM.Standard and VM.DenseIO both breaking 1ms latency at 12K IOPS or 770MB/s. Both peaked around 15.1K IOPS or 943MB/s at a latency of 3.1ms. The BM.Standard and BM.DenseIO both broke 1ms latency at about 42K IOPS or 2.6GB/s, with the BM.DenseIO peaking at 46,768 IOPS or 2.92GB/s with 2.6ms latency. The BM.Standard peaked at 46,787 IOPS or 2.92GB/s with a latency of 3.4ms.

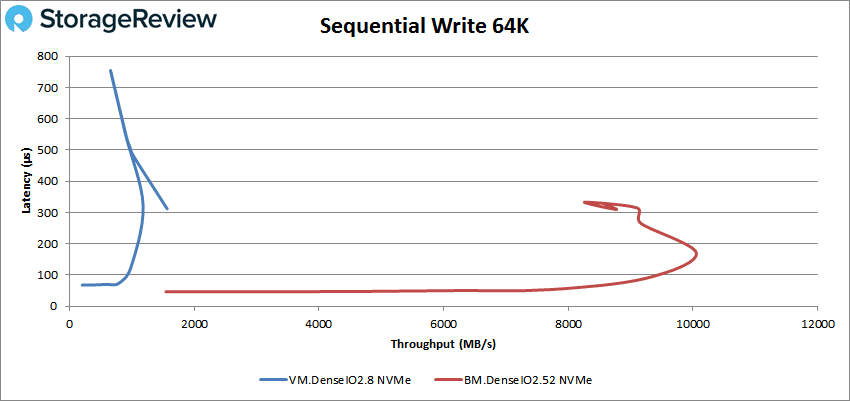

For 64K sequential writes for NVMe, both the VM.DenseIO and BM.DenseIO had sub-millisecond latency performance throughout again, but both suffered a spike in latency as well (coupled with a final reduction in performance for the BM.DenseIO). The VM.DenseIO peaked at 25K IOPS or 1.56GB/s with a latency of 311μs after a spike up to 754μs. The BM.DenseIO had much better peak performance (160,895 IOPS or 10.1GB/s at a latency of 170μs), but it did drop off some at the end with an uptick in latency as well, finishing at 132,192 IOPS or 8.8GB/s with a latency of 310μs.

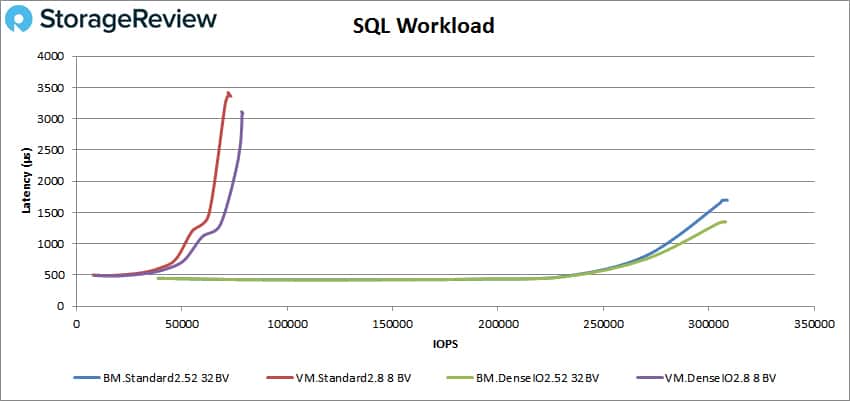

In our SQL workload for BV, the VM.Standard was the first to go above 1ms, at roughly 50K IOPS, and it peaked at 73,259 IOPS with a latency of 3.4ms. VM.DenseIO went over 1ms latency at around 58K IOPS and peaked at 78,624 IOPS with a latency of 3.1ms. With the BMs, both stayed under 1ms in latency until around 275K IOPS (with the BM.DenseIO running a bit longer). The BM.Standard peaked at 305,368 IOPS with 1.7ms latency while the BM.DenseIO peaked at 307,979 IOPS with 1.35ms latency.

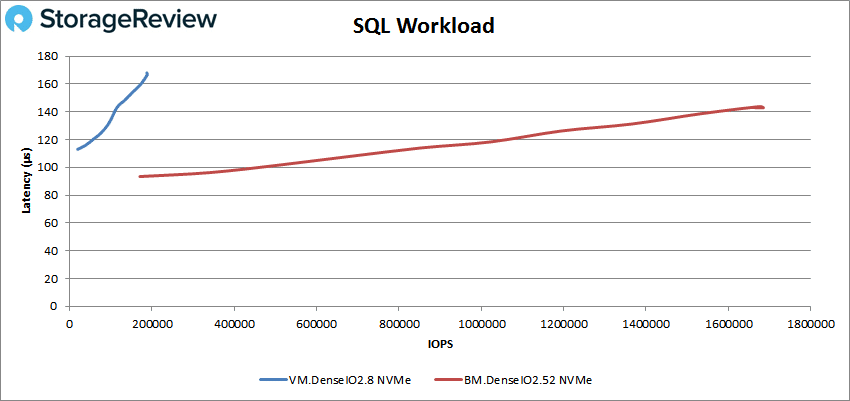

SQL for NVMe again had sub-millisecond latency throughout with the VM.DenseIO peaking at 188,786 IOPS with 167μs. The BM.DenseIO peaked at 1,684,869 IOPS with a latency of 142μs.

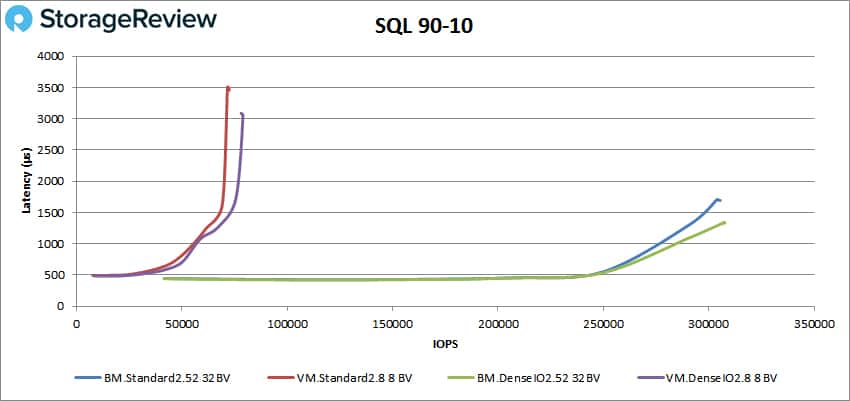

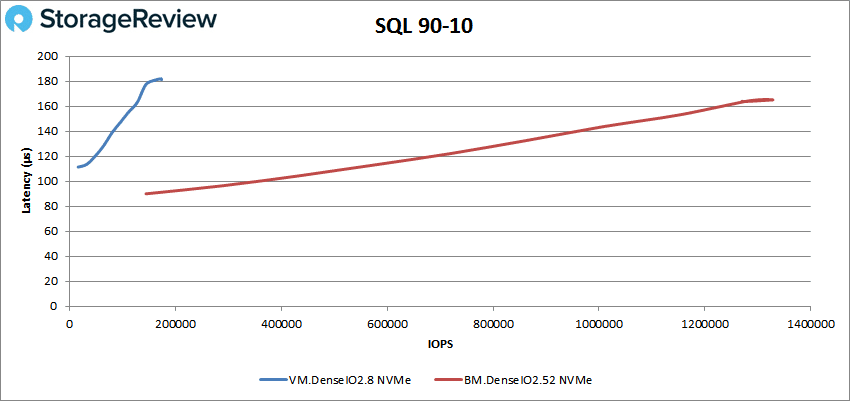

In the SQL 90-10 benchmark for BV, both VMs broke sub-millisecond latency performance at about 58K IOPS. The VM.Standard peaked at 71,691 IOPS with a latency of 3.5ms. The VM.DenseIO peaked at 79,033 IOPS with a latency of 3.05ms. The BM.Standard broke 1ms latency at a performance of roughly 270K IOPS and peaked at 303,904 IOPS with a latency of 1.7ms. The BM.DenseIO had sub-millisecond latency until about 290K IOPS and peaked at 307,472 IOPS with a latency of 1.34ms.

For NVMe SQL 90-10, the VM.DenseIO peaked at 172,693 IOPS with a latency of 182μs. The BM.DenseIO peaked at 1,328,437 IOPS with 165μs.

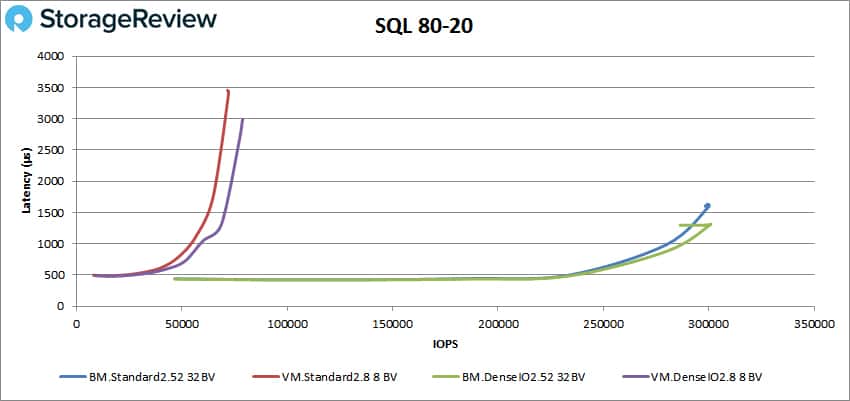

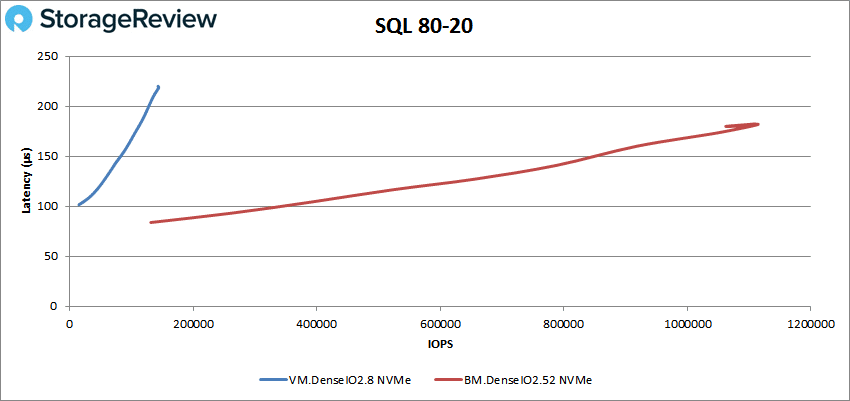

In the SQL 80-20 benchmark for BV, the VM.Standard made it to about 54K IOPS before going over 1ms and peaked at 72,204 IOPS with a latency of 3.4ms. The VM.DenseIO had sub-millisecond latency until about 59K IOPS and peaked at 78,787 IOPS with a latency of 2.99ms. The BM.Standard ran to about 280K IOPS with latency under 1ms and peaked at 300,014 IOPS with a latency of 1.6ms. The BM.DenseIO broke 1ms latency at about 285K IOPS and peaked at 299,730 IOPS with 1.3ms latency before dropping down in performance.

In the SQL 80-20 benchmark for NVMe, the VM.DenseIO peaked at 144,010 IOPS with a latency of 218μs. The BM.DenseIO peaked at 1,114,056 IOPS with a latency of 182μs before having a slight drop off in performance.

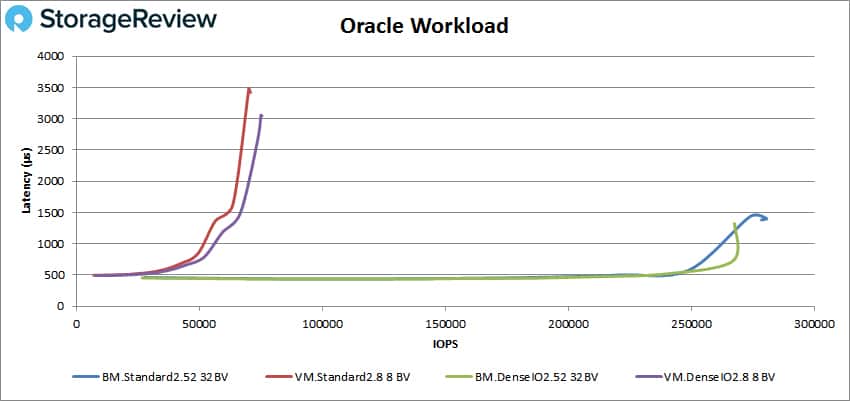

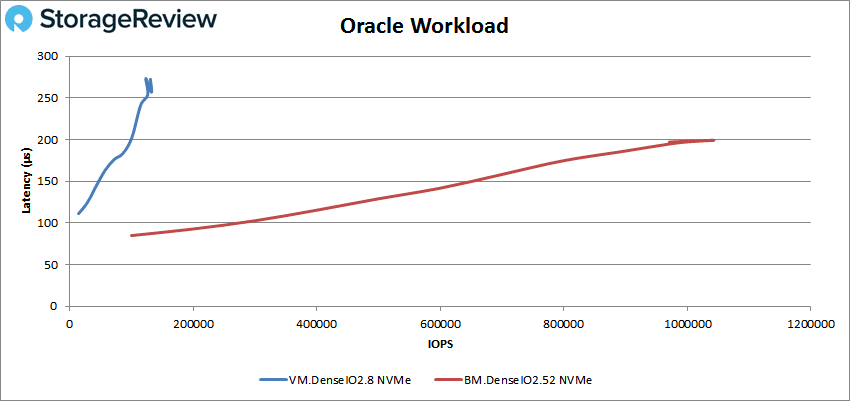

In our Oracle workload with BV, the VM.Standard had sub-millisecond latency performance until it reached about 52K IOPS and peaked at 70,096 IOPS with a latency of 3.4ms. The VM.DenseIO broke 1ms latency at roughly 58K IOPS and peaked at 75,000 IOPS with a latency of 3.1ms. BM.Standard broke 1ms latency around 255K with a peak performance of 280,599 IOPS with a latency of 1.41ms. BM.DenseIO had sub-millisecond latency until about 260K IOPS and peaked at 267,632 IOPS with a latency of 1.3ms.

Our Oracle workload with NVMe showed a peak performance for the VM.DenseIO of 132,553 IOPS with a latency of 257μs. With BM.DenseIO the peak performance was 1,043,104 IOPS and a latency of 199μs.

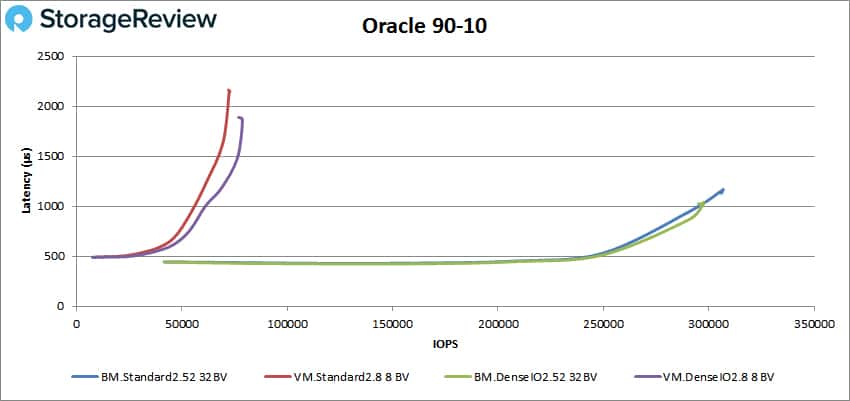

In the Oracle 90-10 for BV, the VM.Standard had sub-millisecond latency until just over 54K IOPS and peaked at 72,533 IOPS with a latency of 2.2ms. The VM.DenseIO broke 1ms latency at roughly 61K IOPS and peaked at 76,908 IOPS with a latency of 1.86ms. Both BMs made it to 297K IOPS before breaking 1ms latency. The BM.Standard peaked at 305,771 IOPS with a latency of 1.17ms. The BM.DenseIO had a peak performance of 297,509 IOPS with a latency of 1.03ms.

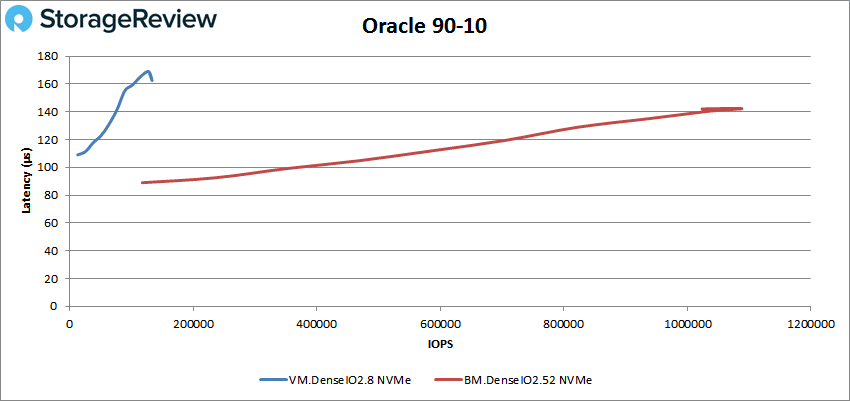

In the Oracle 90-10 for NVMe, the VM.DenseIO had a peak performance of 133,330 IOPS and a latency of 163μs. The BM.DenseIO had a peak performance of 1,088,454 IOPS and a latency of 142μs.

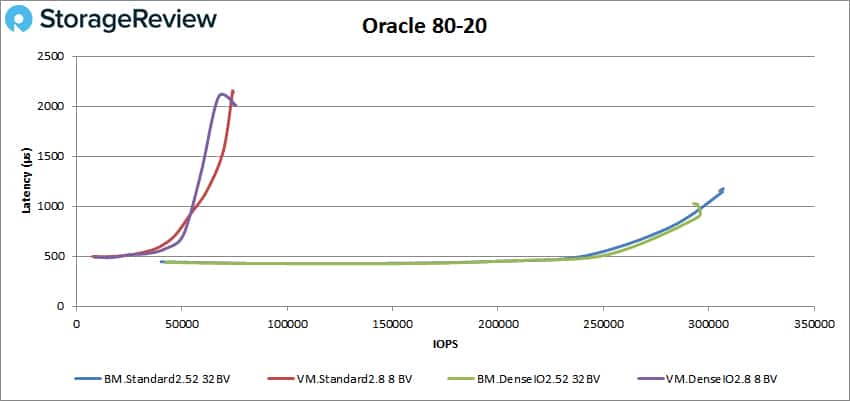

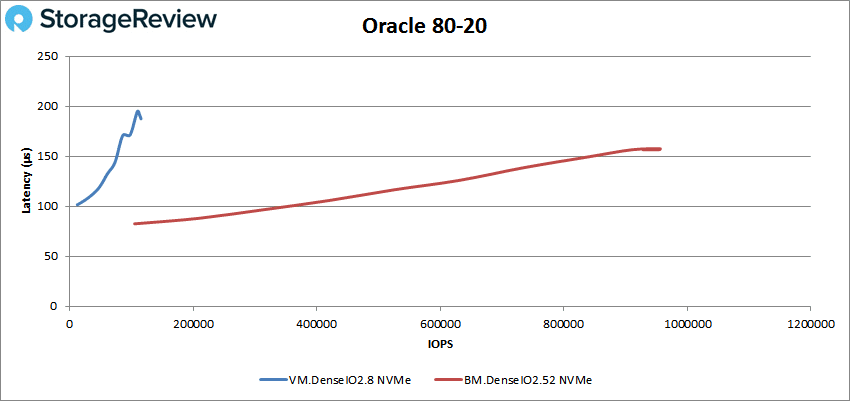

In the Oracle 80-20 with BV, the VM.Standard made it to about 55K IOPS with latency under 1ms and peaked at 74,032 IOPS with a latency of 2.14ms. The VM.DenseIO had sub-millisecond latency until about 51K IOPS and peaked at 75,666 IOPS with a latency of 2ms. Both BMs made it until about 295K IOPS before breaking 1ms. The BM.Standard peaked at 306,955 IOPS with a latency of 1.14ms. The BM.DenseIO peaked at about 295K IOPS with a latency of 893μs.

In the Oracle 80-20 with NVMe, the VM.DenseIO peaked at 108,483 IOPS with a latency of 195μs. The BM.DenseIO peaked at 956,326 IOPS with a latency of 158μs.

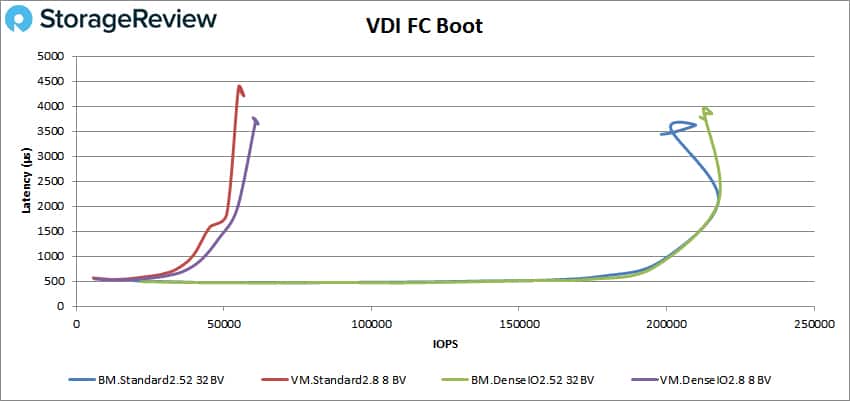

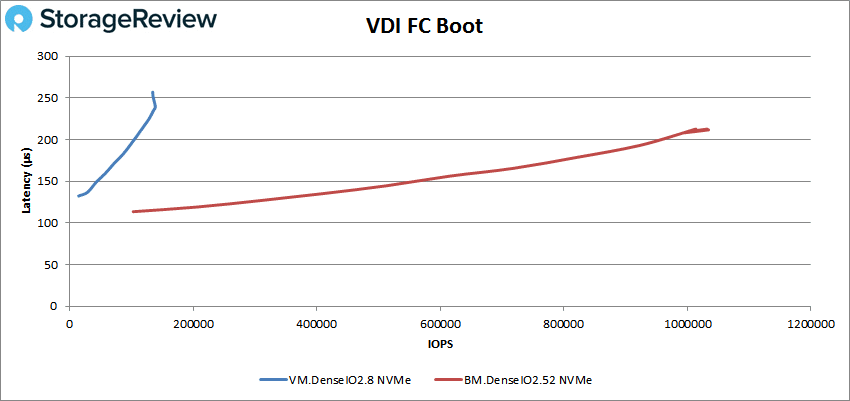

Next we looked at VDI Full Clone. For the boot with BV, VM.Standard broke 1ms just under 40K IOPS and peaked at 56,057 IOPS with a latency of 4.2ms. VM.DenseIO held sub-millisecond latency until roughly 43K IOPS and peaked at 61,570 IOPS with a latency of 3.6ms. Both BMs had sub-millisecond latency until just over the 200K IOPS threshold. Both peaked at about 220K IOPS with a latency of 2.1ms before dropping off in performance.

For Full Clone boot with NVMe, the VM.DenseIO peaked at about 136K IOPS with a latency of 235μs. The BM.DenseIO peaked at 1,032,322 IOPS with a latency of 213μs.

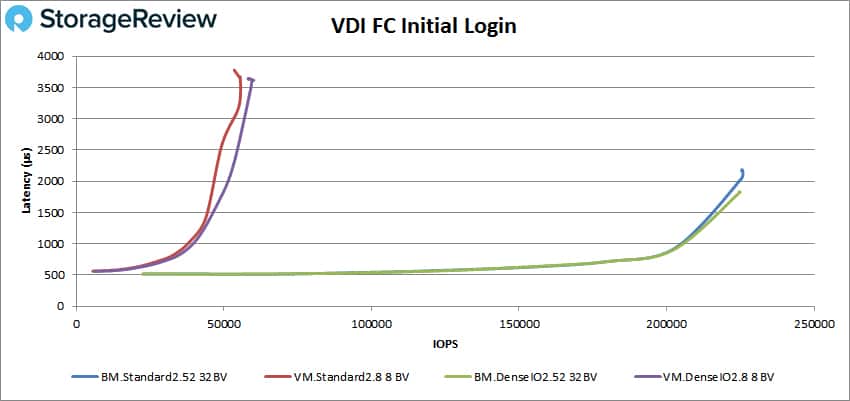

With VDI Full Clone Initial Login with BV, both VMs made it to about 41K IOPS with sub-millisecond latency, with the VM.Standard peaking at 55,522 IOPS with a latency of 3.7ms and the VM.DenseIO peaking at 59,560 IOPS with 3.6ms latency. Both BMs broke sub-millisecond latency right around 203K IOPS (with Standard going before DenseIO). BM.Standard peaked at about 225K IOPS with 2.04ms latency and the BM.DenseIO peaked at 224,385 IOPS with a latency of 1.8ms.

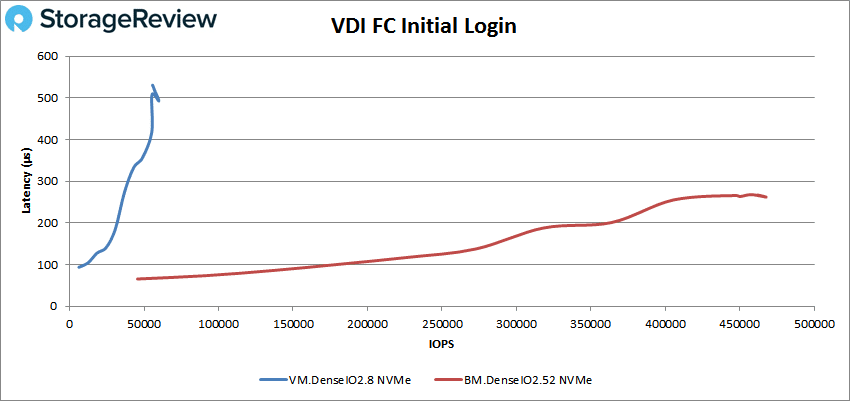

For VDI Full Clone Initial Login with NVMe, the VM.DenseIO peaked at 59,883 IOPS with a latency of 506μs. And the BM.DenseIO peaked at 467,761 IOPS with a latency of 262μs.

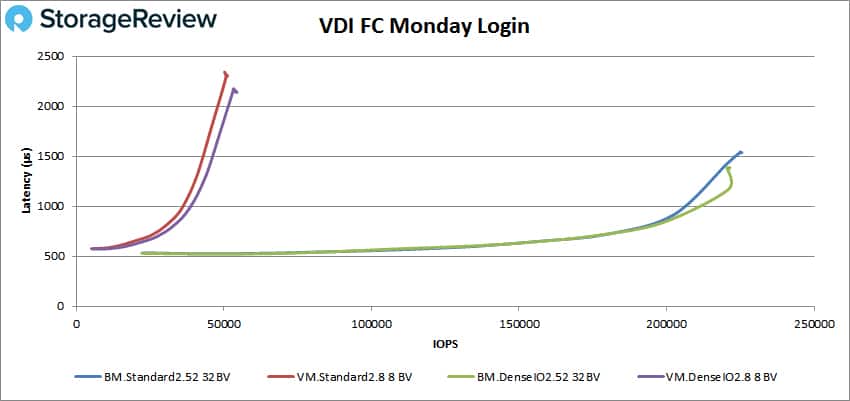

VDI Full Clone Monday Login with BV had the VM.Standard with sub-millisecond latency until just shy of 36K IOPS with a peak of 50,685 IOPS and a latency of 2.3ms. VM.DenseIO performed under 1ms until just north of 38K IOPS and peaked at 53,304 IOPS with 2.2ms latency. BM.Standard broke 1ms latency at about 205K IOPS and peaked at 224,764 IOPS with a latency of 1.5ms. BM.DenseIO went above 1ms around 210K with a peak of just over 220K IOPS and a latency of 1.2ms.

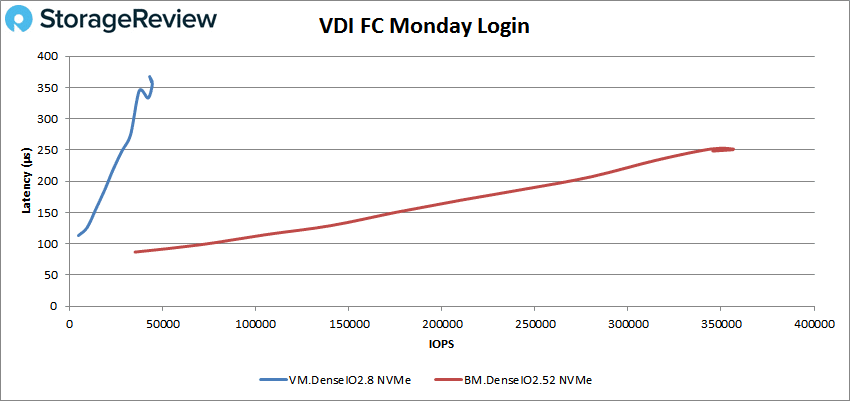

VDI Full Clone Monday Login with NVMe showed a peak performance of VM.DenseIO of 44,384 IOPS with a latency of 356μs. BM.DenseIO peaked at 356,691 IOPS with a latency of 252μs.

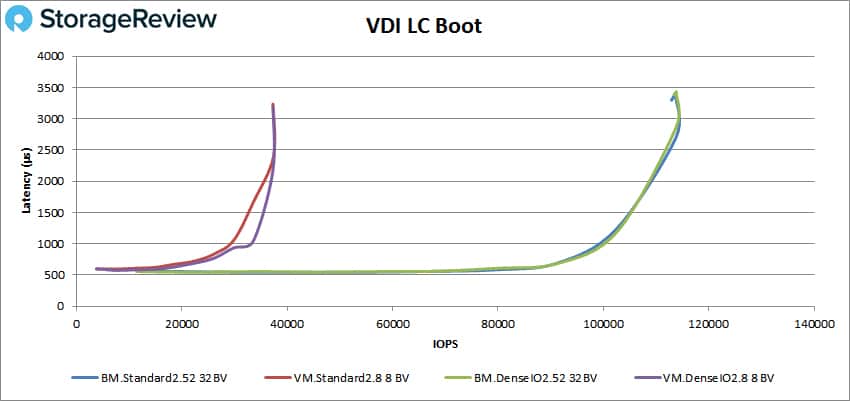

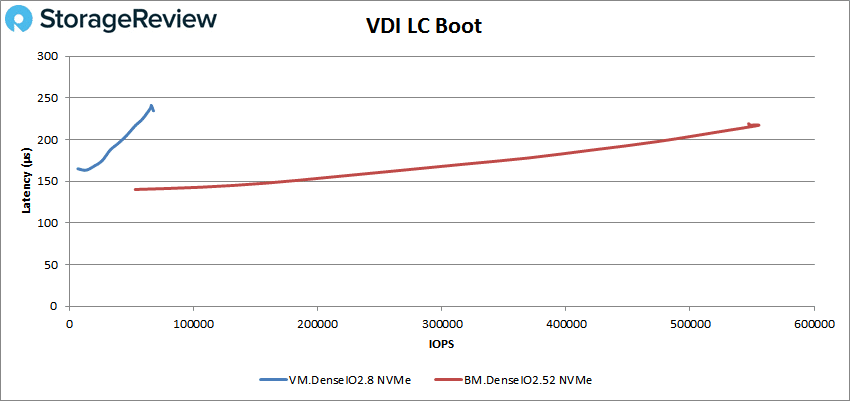

Our final selection of tests looks at VDI Linked Clone. Starting again with the boot test with BV, the VM.Standard had sub-millisecond latency until about 29K IOPS, peaking at about 38K IOPS with 2.4ms latency. VM.DenseIO made it until about 32K IOPS before breaking 1ms and peaked at about 38K IOPS as well with 2.16ms latency. Both BMs made it to roughly 100K IOPS before going over 1ms latency. Both had a peak performance of about 114K IOPS with 3ms latency.

With VDI Linked Clone Boot for NVMe, we saw the VM.DenseIO peak at 65,384 IOPS with a latency of 238μs. The BM.DenseIO peaked at 555,004 IOPS with a latency of 217μs.

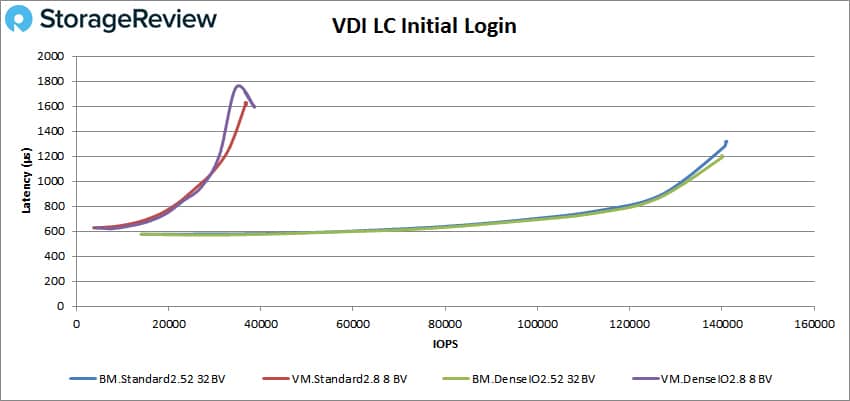

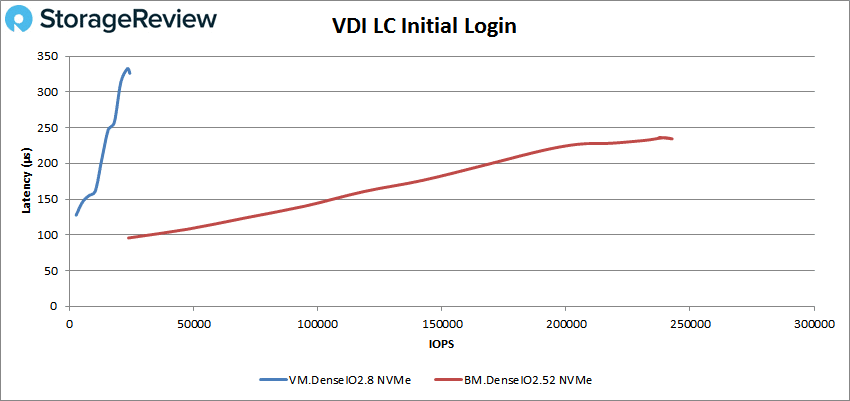

With Initial Login with BV, both VMs broke 1ms latency at roughly 28K IOPS, with the VM.Standard peaking at 36,682 IOPS with a latency of 1.6ms and the VM.DenseIO peaking at 38,525 IOPS with a latency of 1.6ms. Both BMs broke 1ms latency at around 132K IOPS with the BM.Standard peaking at 140,848 IOPS with a latency of 1.3ms and the BM.DenseIO peaking at 139,883 IOPS and 1.2ms latency.

Initial Login with NVMe saw a peak performance of 24,228 IOPS and 326μs for the VM.DenseIO and 242,778 IOPS with 234μs for the BM.DenseIO.

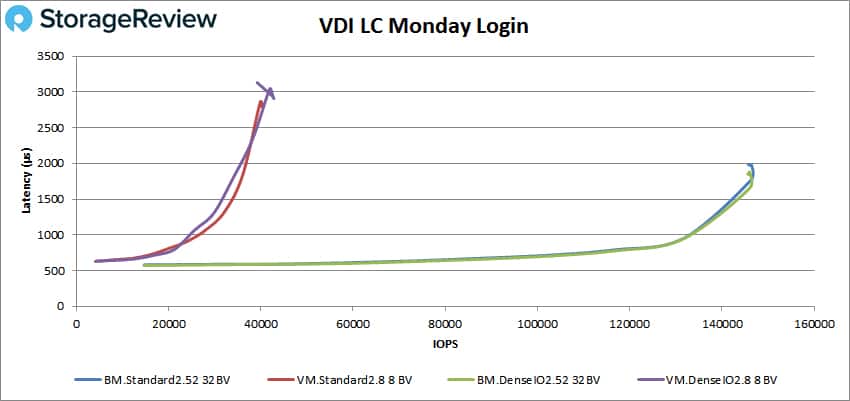

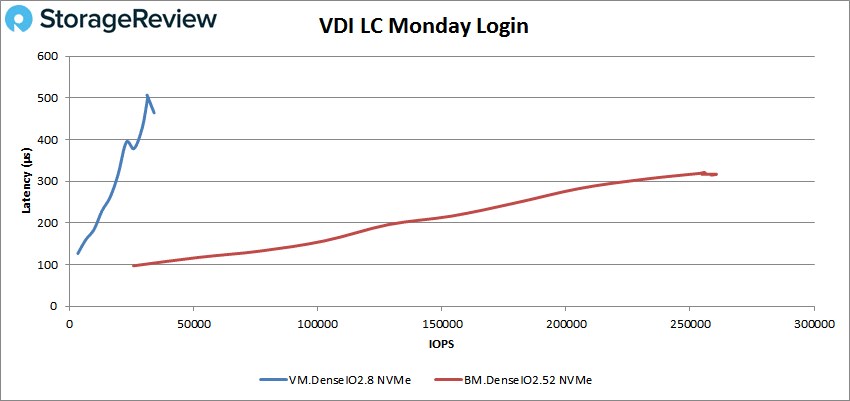

Finally, with VDI Linked Clone Monday Login with BV, the VM.Standard had sub-millisecond latency performance until about 27K IOPS with a peak of 39,874 IOPS and a latency of 2.86ms. VM.DenseIO broke 1ms at around 25K IOPS and peaked at 42,469 IOPS and 3ms latency. Both BMs had sub-millisecond latency until around 135K IOPS with both peaking at 146K IOPS, the BM.DenseIO having a latency of 1.6ms and the BM.Standard having a latency of 1.76ms.

With VDI Linked Clone Monday Login with NVMe, the VM.DenseIO peaked at 34,016 IOPS and a latency of 464μs. BM.DenseIO peaked at 260,527 IOPS and a latency of 317μs.
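Across the NVMe results, the gap between the VM.DenseIO and BM.DenseIO shapes is remarkably consistent. A short sketch of that comparison, using peak IOPS figures quoted above for a handful of workloads (the dictionary and function names are just illustrative):

```python
# Peak NVMe IOPS as quoted in this review: (VM.DenseIO, BM.DenseIO).
NVME_PEAK_IOPS = {
    "4K random write": (412_207, 3_232_215),
    "SQL": (188_786, 1_684_869),
    "Oracle": (132_553, 1_043_104),
    "VDI LC boot": (65_384, 555_004),
    "VDI LC Monday login": (34_016, 260_527),
}


def bm_over_vm(results):
    """Return the bare metal to VM peak-IOPS ratio for each workload."""
    return {name: bm / vm for name, (vm, bm) in results.items()}


ratios = bm_over_vm(NVME_PEAK_IOPS)
for name, ratio in ratios.items():
    print(f"{name:<22} {ratio:.2f}x")
```

For these five workloads the ratios land between roughly 7.7x and 8.9x, which is in line with the difference in NVMe device count between the two shapes.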

Conclusion

Oracle’s Cloud Infrastructure takes one of the main issues with the Cloud–performance or lack thereof–and addresses it with its bare metal instances. Oracle offers bare metal and virtual compute instances as well as NVMe versions with up to 25TB of NVMe storage for performance unlike anything else seen in the cloud. It takes more than NVMe storage to hit Oracle’s quoted performance of up to 5.1 million IOPS; the instances also have up to 52 OCPUs, 768GB RAM, dual 25GbE NICs and up to 51TB of locally attached NVMe storage. This level of performance is used primarily for use cases such as mission-critical database applications, HPC workloads, and I/O intensive web applications.

On the performance side of things, we ran our VDBench tests for both bare metal (BM) and VM shapes using both the local NVMe storage (DenseIO) and network block storage (Standard). The performance, simply put, blew us away. For each test we produced two sets of charts, as the latency gap between the local NVMe and block volume results was so great that the charts would be difficult to read if all were on one set. In terms of how good storage performance on these instances is compared to traditional storage, they rival many of the best shared storage options on the market, let alone cloud alternatives. The attached BVs, which are hosted over iSCSI and backed up, offer a strong mix of throughput and bandwidth at low latency. To put this in context, we saw many tests with 32 BVs attached on the 52 OCPU instances rival the performance of all-flash storage arrays we’ve tested in our lab. Some might actually have been slightly faster, which is pretty impressive considering we’re comparing a cloud instance against a $250k+ AFA, FC switching, and multiple compute hosts.

What makes the Oracle Cloud bare metal instances really incredible, though, is the locally attached NVMe storage. The DenseIO2.8 had 1 device, while the DenseIO2.52 had 8, giving these instances performance measured in the millions of IOPS. The instance with 1 NVMe SSD saw random 4K read speed top out at 569K IOPS, while the instance with 8 had performance skyrocket to 4.4M IOPS. Bandwidth was no joke either; the smaller instance saw a 2.5GB/s read peak, while the larger one topped out above 20GB/s. Be sure to have a backup plan in place; the NVMe shapes use locally attached storage, after all, and that data needs to be protected.
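The device counts make for a rough scaling check. The sketch below divides the bare metal 4K random read peak by its eight devices and compares the result with the single-device VM peak, then with Oracle’s quoted 5.1 million IOPS ceiling. Treat it as back-of-the-envelope only: the two shapes also differ in OCPU count, so this is not a controlled per-device comparison.

```python
# Peak 4K random read IOPS from this review.
VM_ONE_DEVICE_IOPS = 569_534       # VM.DenseIO, 1 NVMe device
BM_EIGHT_DEVICE_IOPS = 4_419_490   # BM.DenseIO, 8 NVMe devices
ORACLE_QUOTED_IOPS = 5_100_000     # Oracle's "up to" figure

per_device = BM_EIGHT_DEVICE_IOPS / 8
efficiency = per_device / VM_ONE_DEVICE_IOPS
share_of_quote = BM_EIGHT_DEVICE_IOPS / ORACLE_QUOTED_IOPS

print(f"per-device IOPS on bare metal: {per_device:,.0f}")
print(f"scaling vs. single-device VM:  {efficiency:.1%}")
print(f"share of quoted 5.1M IOPS:     {share_of_quote:.1%}")
```

By this measure the eight-device shape delivers about 97% of perfect linear scaling from the single-device result, and a little under 87% of Oracle’s quoted maximum in our 4K random read test.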

Oracle has built out top-spec servers and storage in the cloud; the only thing that can rival their bare metal instances is building out a top-spec server hosted locally, along with all of the other components and services to support it. As with all cloud solutions, Oracle offers the fluidity to turn your instances on and off and the flexibility to adjust storage requirements as needed. With these instances, the obvious issue that will come up is cost. The expediency of getting an instance online within Oracle Cloud vs. the effort and expense required to set up comparable hardware in your own data center is perhaps the key deciding factor. While NVMe attached storage is expensive, there’s no arguing with the performance benefits that we saw. If you have a business that is impacted by the processing time on large data sets, there is no easier or faster solution to get workloads like analytics complete than the NVMe-based shapes we used. And it’s not that the standard attached block shapes were bad; the NVMe shapes were just so unreal that they eclipse the rest. The bottom line is that forward-thinking businesses that can derive measurable value from high-performance cloud should definitely be evaluating what Oracle has going on. There are many choices when going to the cloud, but there’s nothing that is as fast as what we’ve seen with the Oracle Cloud bare metal instances, making these solutions a clear and deserving winner of our first Editor’s Choice Award granted to a cloud services provider.
