AMD and IBM collaborate to deliver advanced AI infrastructure to open-source AI research company Zyphra.

IBM and AMD have entered a multi-year collaboration to deliver advanced AI infrastructure to Zyphra, an open-source AI research and product company based in San Francisco. Under the agreement, IBM will provide a large, dedicated cluster of AMD Instinct MI300X GPUs on IBM Cloud for training frontier multimodal foundation models, positioning the deployment among the most ambitious generative AI training environments available today.

Collaboration Driving Momentum

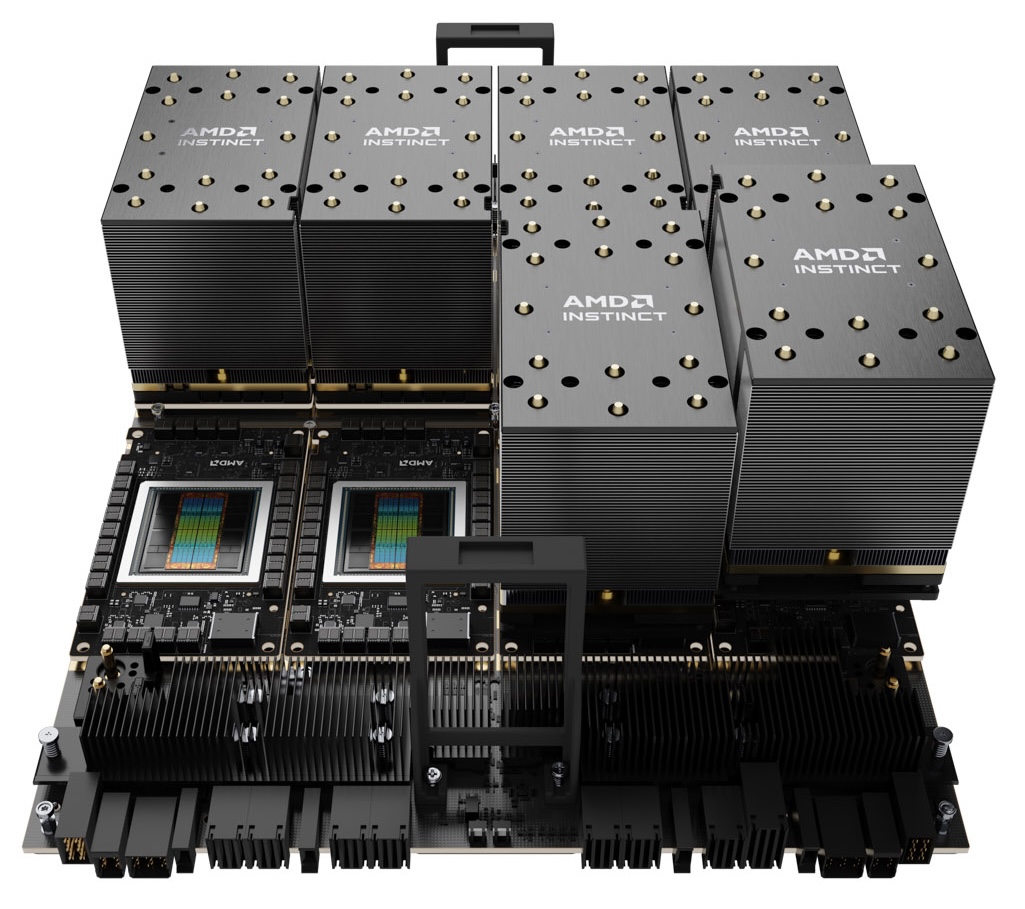

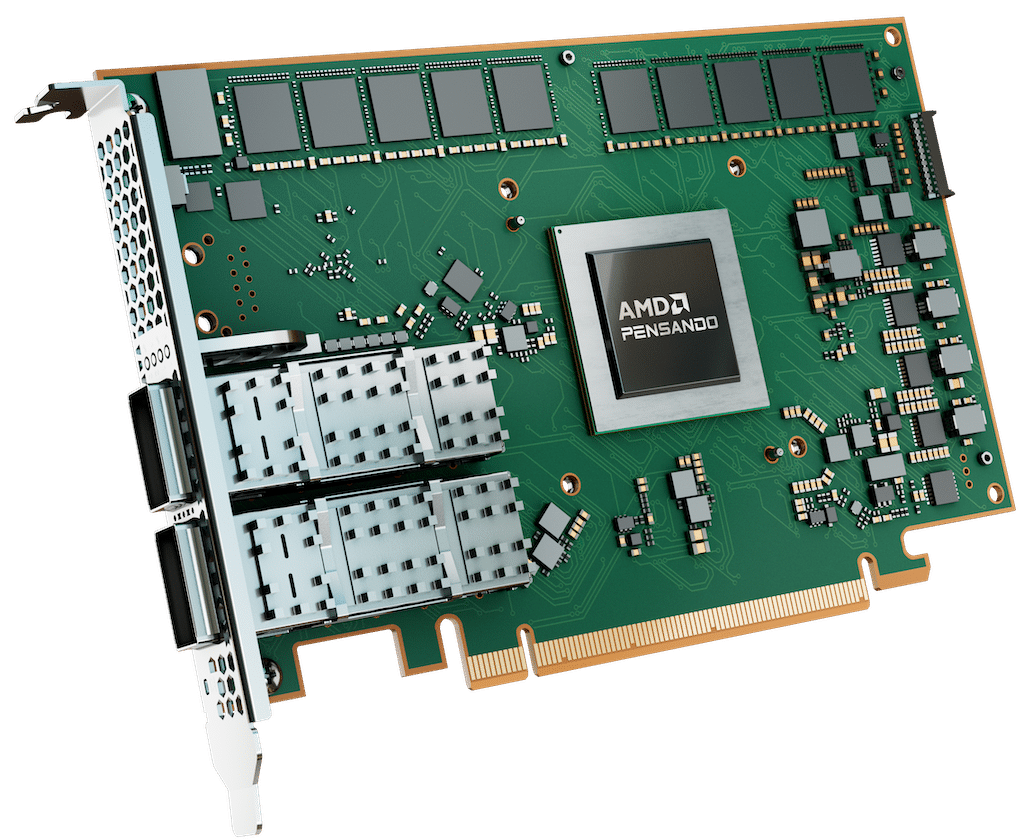

One of the most critical aspects of this collaboration is the introduction of enterprise-level scale to public cloud GPU infrastructure. This initiative represents the first large-scale, dedicated training cluster on IBM Cloud using AMD Instinct MI300X GPUs. The inclusion of AMD Pensando Pollara 400 AI NICs and Pensando Ortano DPUs adds further depth by creating a network fabric optimized for high-throughput training workloads, complete with data-plane acceleration and efficient east-west networking. For IT administrators and CTOs, the setup highlights a pathway to run GPU-intensive AI training jobs as a managed cloud service, without sacrificing the scalability, orchestration, and SLAs expected in enterprise deployments.

Equally significant is the momentum behind the open ecosystem. Zyphra is not building in isolation, but instead operating with an open-source and open-science ethos. The company is utilizing its infrastructure to conduct research in neural architectures, long-term memory systems, and continual learning techniques. These avenues are not only beneficial for AI in general but also provide enterprises with greater transparency, reproducibility, and licensing clarity than traditional black-box models. The open-source dimension may create downstream opportunities for organizations evaluating AI investment strategies but wary of opaque, proprietary solutions.

Another key factor is the alignment of the supply chain and the roadmap. Securing GPU capacity at scale has become one of the most significant bottlenecks in AI development. Zyphra’s engagement with IBM and AMD included early collaboration on product roadmaps and delivery schedules, enabling reliable access to accelerators alongside rapid deployment timelines. The initial cluster went live in September, with a deliberate plan for capacity expansion in 2026. For enterprise technology leaders, this signals a forward-looking approach to planning the GPU roadmap. One that balances near-term experimentation with the ability to scale into more complex model training cycles over time.

The Zyphra Program

Zyphra recently secured a Series A funding round at a $1 billion valuation. This is an early indicator of investor confidence in its mission. With that capital, the company intends to establish a leading “super intelligence” research lab grounded in the principles of open-source and open science. The new infrastructure on IBM Cloud is central to that effort. Powered by AMD Instinct accelerators, Zyphra’s models span language, vision, and audio modalities, converging into a pipeline that underpins Maia, its general-purpose superagent. The intended outcome is a platform designed to augment the knowledge workers of enterprises by automating workflows, surfacing relevant information, and enhancing overall productivity.

The technical arrangement with IBM and AMD ensures that Zyphra can grow its computational footprint as training requirements intensify. Rather than treating infrastructure as a static asset, the collaboration emphasizes continual scaling, aligning GPU supply, cloud orchestration, and advanced networking into one integrated solution. This forward compatibility is a critical differentiator for AI companies aiming to train larger and more sophisticated models while incurring predictable costs and maintaining reliable access to these models.

Architecture Highlights

The center of the deployment is compute. At scale, the AMD Instinct MI300X GPUs bring a high-performance, high-bandwidth memory architecture designed to handle the large data flows required in multimodal AI training. Each GPU cluster is optimized for dense floating-point workloads and is tuned to the performance-per-watt metrics that enterprises increasingly consider when evaluating operational costs.

Networking and the data plane are also central to the story. By utilizing AMD Pensando Pollara 400 AI NICs and Ortano DPUs, Zyphra’s environment can distribute workloads more efficiently across thousands of GPUs, eliminating the bottlenecks traditionally associated with east-west traffic in large clusters. The hardware offloads accelerate network throughput, enabling policy management, telemetry, and security enforcement, ensuring that workloads perform predictably even under heavy demand.

At the substrate layer, IBM Cloud provides the orchestration environment with enterprise-grade features for security, governance, and compliance. These capabilities enable Zyphra to navigate a landscape where enterprise AI must meet increasingly stringent requirements regarding data sovereignty, auditability, and service continuity. In effect, IBM Cloud complements AMD’s compute and networking stack with the operational controls that CTOs expect in production-quality deployments.

From Zyphra’s vantage point, this engagement represents the first successful implementation of a fully integrated stack, from compute accelerators through networking fabric, running entirely on IBM Cloud with AMD silicon. Zyphra’s leadership views this infrastructure as a springboard for creating frontier models in an open and enterprise-friendly context, with the larger ambition of influencing how superintelligence is developed and applied.

Krithik Puthalath, CEO and Chairman of Zyphra, highlighted that their collaboration with AMD and IBM Cloud marks the first successful integration of a full-stack training platform, spanning from compute to networking, on IBM Cloud. Zyphra will lead the development of frontier models using AMD silicon, aiming to advance open-source and enterprise super intelligence.

Alan Peacock, GM of IBM Cloud, emphasized the importance of rapidly and efficiently scaling AI workloads to achieve better ROI for both established enterprises and emerging companies. He highlights IBM’s support for Zyphra’s strategic growth by collaborating with AMD to develop scalable, cost-effective AI infrastructure that accelerates model training.

AMD, in turn, positions the collaboration as a milestone in AI infrastructure design. Philip Guido, EVP and Chief Commercial Officer, points to the integration of IBM’s established enterprise cloud ecosystem with AMD’s compute acceleration technology as a model for the industry. For AMD, the agreement highlights its leadership not only in high-performance compute but also in enabling real-world AI outcomes through systems capable of inference-efficient, multimodal work at scale.

Operating Model

The first phase of this deployment entered service in early September, making Zyphra one of the earliest organizations to access a large-capacity AMD Instinct MI300X cluster on IBM Cloud. This immediate availability addresses the near-term demands for training large multimodal models, enabling Zyphra to begin iterating quickly.

Looking forward, the collaboration sets the stage for expanded compute and networking resources in 2026. This phased rollout acknowledges the reality of scaling AI workloads: training requirements will continue to grow in tandem with dataset size, model complexity, and experimentation cadence. CTOs monitoring the evolution of multimodal AI will view the 2026 expansion as a strategic commitment to capacity planning, ensuring that Zyphra, IBM, and AMD remain aligned for the long term.

Strategy

The Zyphra engagement builds on a broader strategic alignment between IBM and AMD. Last year, the two companies began deploying AMD Instinct MI300X accelerators as a service on IBM Cloud, highlighting commitments around reliable performance, power efficiency, and enterprise-scale delivery. By moving from a service-oriented deployment to a dedicated, large-scale cluster, the Zyphra program illustrates a progression toward more customized and capacity-rich client solutions.

In parallel, IBM and AMD are expanding their collaboration into next-generation architectures such as quantum-centric supercomputing. IBM brings global leadership in quantum systems and algorithms, while AMD contributes high-performance compute and AI acceleration expertise. This combined roadmap is designed to push the boundaries of what is possible in large-scale computation, further reinforcing the long-term vision of an integrated enterprise infrastructure that spans today’s GPUs and tomorrow’s quantum processors.

Outcomes for IT and CTOs

Training efficiency and total cost of ownership will be the foremost considerations. Enterprises should closely monitor how effectively the MI300X and Pensando scale across large, multimodal workloads, particularly in key metrics such as tokens per second per GPU, interconnect performance, and per-job cost predictability.

Integration patterns also bear attention. The Zyphra deployment will showcase how data staging, checkpointing, observability, and feature stores integrate within IBM Cloud. For organizations evaluating their AI roadmaps, these patterns can serve as reference architectures.

Security and governance are also central. With standard models often spanning billions of parameters and petabytes of data, IT leaders will want to evaluate how programmable NICs and DPUs contribute to security isolation, telemetry, and granular policy enforcement inside multi-GPU clusters.

Hybrid and multicloud flexibility represent another dimension. While Zyphra’s environment currently runs on IBM Cloud, the architectural choices, like optimized NICs, DPUs, and orchestration layers, are designed to accommodate hybrid environments. For enterprises, this provides a potential blueprint for maintaining ROI through workload portability, data locality, and resilience across multiple clouds.

Finally, IT decision-makers should watch for Zyphra’s open-source releases. As a company committed to open science, Zyphra is expected to share training recipes, models, and benchmarks. These will provide enterprises with evaluation opportunities for fine-tuning domain-specific models, building retrieval augmented generation (RAG) pipelines, or assessing the efficiency of multimodal AI approaches in production workloads.

Key Takeaway

For technical decision-makers in enterprise IT, the Zyphra deployment represents more than a single client win. It serves as a reference implementation of how GPU supply, networking offload, and enterprise cloud infrastructure can align to create a sustainable pipeline for training frontier AI models. With IBM Cloud handling orchestration and AMD silicon providing dense compute and advanced networking, the collaboration resets expectations for performance, cost, and reliability in large-scale training.

Engage with StorageReview

Newsletter | YouTube | Podcast iTunes/Spotify | Instagram | Twitter | TikTok | RSS Feed