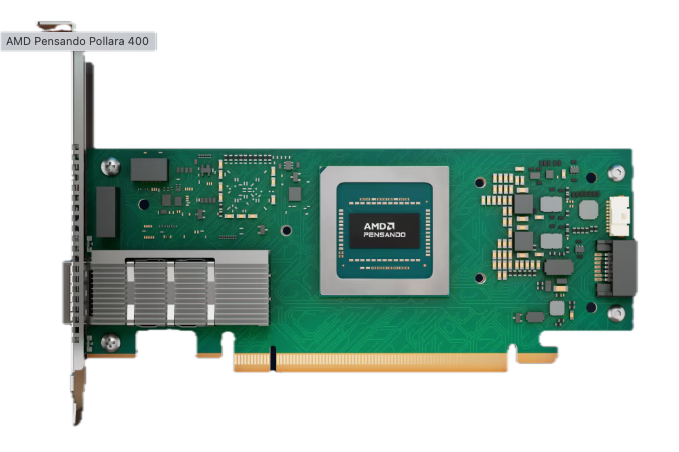

AMD has announced the availability of the Pensando Pollara 400, which it describes as the industry’s first fully programmable AI Network Interface Card (NIC). Designed to accelerate AI workloads, the card is compatible with the emerging Ultra Ethernet Consortium (UEC) standards and is optimized for GPU-to-GPU communication in data centers. AMD positions the Pollara 400 AI NIC as a significant step toward scalable, high-performance infrastructure for AI/ML workloads, generative AI, and large language models.

As AI evolves, organizations face the challenge of building computing infrastructure that delivers optimal performance while remaining flexible enough to meet future demands. A critical factor is efficiently scaling out the inter-node network that carries GPU-to-GPU traffic between servers. AMD says the Pollara 400 AI NIC reflects its commitment to preserving customer choice and reducing total cost of ownership (TCO) without sacrificing performance, letting organizations build future-ready AI infrastructure while staying compatible with an open ecosystem.

Accelerating AI Workloads with Advanced Networking

Maximizing AI cluster performance is a top priority for cloud service providers, hyperscalers, and enterprises, yet many of them see the network as a bottleneck that limits GPU utilization. Raw data transfer speed matters, but it only pays off when the network is also optimized for the traffic patterns of modern AI workloads.

| Specification | Details |
|---|---|
| Max Bandwidth | 400 Gbps |
| Form Factor | Half-height, half-length (HHHL) |
| Host Interface | PCIe Gen 5.0 x16 |
| Ethernet Interface | QSFP112 (NRZ/PAM4 SerDes) |
| Ethernet Speeds | 25/50/100/200/400 Gbps |
| Ethernet Configurations | Up to 4 ports: 1 x 400G, 2 x 200G, 4 x 100G, 4 x 50G, or 4 x 25G |
| Management | MCTP over SMBus |
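As a quick sanity check on the table, the arithmetic below confirms that every listed port configuration stays within the 400 Gbps maximum, and that a PCIe 5.0 x16 host link offers roughly 504 Gbps of raw bandwidth per direction (16 lanes at 32 GT/s with 128b/130b encoding), which is above the 400 Gbps line rate. This is a minimal Python sketch; the names `PORT_CONFIGS`, `aggregate_gbps`, and `pcie_gen5_x16_gbps` are illustrative and not from AMD, and the PCIe figure ignores transaction-layer protocol overhead, so usable host throughput is lower than the raw number.

```python
# Illustrative arithmetic on the specification table (names are hypothetical).

# Port configurations from the table: (number of ports, speed per port in Gbps).
PORT_CONFIGS = {
    "1 x 400G": (1, 400),
    "2 x 200G": (2, 200),
    "4 x 100G": (4, 100),
    "4 x 50G": (4, 50),
    "4 x 25G": (4, 25),
}

MAX_BANDWIDTH_GBPS = 400


def aggregate_gbps(ports: int, speed_gbps: int) -> int:
    """Total Ethernet bandwidth of a port configuration."""
    return ports * speed_gbps


def pcie_gen5_x16_gbps() -> float:
    """Raw PCIe 5.0 x16 bandwidth per direction, after 128b/130b line encoding.

    Does not subtract TLP/DLLP protocol overhead, so real throughput is lower.
    """
    lanes = 16
    gigatransfers_per_lane = 32
    return lanes * gigatransfers_per_lane * 128 / 130


if __name__ == "__main__":
    for name, (ports, speed) in PORT_CONFIGS.items():
        total = aggregate_gbps(ports, speed)
        print(f"{name}: {total} Gbps (within max: {total <= MAX_BANDWIDTH_GBPS})")
    print(f"PCIe Gen5 x16 raw: {pcie_gen5_x16_gbps():.1f} Gbps per direction")
```

The 4 x 50G and 4 x 25G configurations use only part of the card's 400 Gbps, so the port count, not the total bandwidth, is the limiting factor there.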

The Pensando Pollara 400 AI NIC addresses these network bottlenecks with intelligent load balancing, congestion management, fast failover, and loss recovery. These features are designed to keep networking and compute resources fully utilized, enabling higher uptime, faster job completion, and better reliability at scale. As AI workloads grow in size and complexity, AMD positions the Pollara 400 AI NIC as a way to eliminate bottlenecks and unlock the full potential of AI infrastructure.

A Future-Ready, Programmable Solution

The Pollara 400 AI NIC is built on AMD’s P4-programmable architecture, a fully programmable hardware pipeline that AMD says offers unmatched flexibility. This programmability lets customers adopt new standards, such as those defined by the UEC, or build custom transport protocols tailored to their specific workloads. Whereas fixed-function hardware typically needs a new generation to support emerging features, the Pollara 400 lets organizations upgrade their AI infrastructure without waiting for new hardware.

Key features of the Pollara 400 include support for multiple transport protocols, such as RoCEv2, UEC RDMA, and other Ethernet protocols, ensuring compatibility with various workloads. Advanced capabilities like intelligent packet spraying, out-of-order packet handling, and selective retransmission optimize bandwidth utilization and reduce latency, which are critical for training and deploying large AI models. Path-aware congestion control and rapid fault detection ensure near-wire-rate performance and minimize GPU idle time, even during transient congestion or network failures.
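To illustrate why packet spraying, out-of-order handling, and selective retransmission work together, here is a toy model. It is a minimal Python sketch, assuming a simple round-robin spraying policy and a receiver that records delivered sequence numbers in a set; the names `spray`, `Receiver`, and `transfer` are hypothetical and do not reflect AMD’s P4 pipeline or the UEC transport specification. Packets sprayed across paths arrive interleaved, so the receiver must accept them in any order, and when one path fails only the missing sequence numbers are resent instead of everything after the first gap.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Receiver:
    """Hypothetical receiver that accepts packets in any order and tracks gaps."""
    expected_count: int
    received: set[int] = field(default_factory=set)

    def accept(self, seq: int) -> None:
        # Out-of-order arrival is fine: just record the sequence number.
        self.received.add(seq)

    def missing(self) -> list[int]:
        # Selective retransmission: report only the sequence numbers not yet seen.
        return [s for s in range(self.expected_count) if s not in self.received]


def spray(num_packets: int, num_paths: int) -> dict[int, list[int]]:
    """Assign packets round-robin across paths (a simple stand-in for packet spraying)."""
    paths: dict[int, list[int]] = {p: [] for p in range(num_paths)}
    for seq in range(num_packets):
        paths[seq % num_paths].append(seq)
    return paths


def transfer(num_packets: int, num_paths: int,
             failed_path: int | None = None) -> tuple[list[int], int]:
    """Spray packets, drop those on a failed path, then resend only the gaps.

    Returns the list of retransmitted sequence numbers and the final count
    of distinct packets the receiver holds.
    """
    rx = Receiver(num_packets)
    paths = spray(num_packets, num_paths)
    # Deliver path by path in reverse order so arrivals are out of order.
    for path in reversed(range(num_paths)):
        if path == failed_path:
            continue  # simulate loss of every packet on one path
        for seq in paths[path]:
            rx.accept(seq)
    lost = rx.missing()
    # Retransmit only the lost packets. A path-aware sender would steer these
    # away from failed_path; this sketch does not model the retry path.
    for seq in lost:
        rx.accept(seq)
    return lost, len(rx.received)


if __name__ == "__main__":
    print(transfer(8, 4, failed_path=1))
```

For example, `transfer(8, 4, failed_path=1)` sprays packets 0 to 7 across four paths, loses packets 1 and 5 on the failed path, and resends only those two, returning `([1, 5], 8)`.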

The Open Ecosystem Advantage

AMD’s open ecosystem approach ensures vendor-agnostic compatibility, enabling organizations to build AI infrastructure that meets current demands while remaining scalable and programmable for future needs. Because load balancing and congestion handling move into the NIC, AMD says this strategy reduces capital expenditures (CapEx) by removing the need for costly large-buffer, cell-based switching fabrics, while still delivering high performance.

According to AMD, the Pensando Pollara 400 AI NIC has already been validated in some of the world’s largest scale-out data centers, and cloud service providers (CSPs) have chosen it for its combination of programmability, high bandwidth, low latency, and extensive feature set. By enabling extensible infrastructure within an open ecosystem, AMD aims to help organizations future-proof their AI environments while delivering immediate performance benefits.
