The JetStor 1600S is a 3U, 16-bay NAS capable of storing up to 384TB of data (if optional SAS JBODs are employed). The 1600S supports NAS, Fibre Channel, and iSCSI connectivity and almost every RAID level. It also supports application features like VMware VAAI, HA (High Availability), thin provisioning, app support, and data backup. Most of the major components that come with the JetStor 1600S are field-replaceable, reducing potential downtime.

The 1600S allows multiple drives to be designated as hot spares, so that data can continue moving through the system when a drive error is detected. Since drives are hot-swappable, capacity can be expanded while the system is still running, and data is automatically rebuilt upon installation of any new drive. JetStor uses the proNAS 3.0 OS, which brings a GUI for ease of use, along with mobile applications for NAS management.

JetStor sells the 1600S in a wide variety of configurations, ranging from sixteen 2TB hard drives for $11,000 to all-SSD configurations (900GB) for $27,000.

Specifications

- CPU Model: Intel Quad Core Xeon 1.8G or above, dual processor

- Cache Memory: 32GB DDR3 SDRAM

- Max Number of Drives: 16

- External ports: USB 2.0 port X1, USB 3.0 port X2, eSATA port X1

- Compatible Drive Types:

  - 3.5″ SATA (1-4TB)

  - 3.5″ SAS (300GB-4TB)

- RAID levels: 0, 0+1, 1, 3, 5, 6

- Size (WxDxH): 19″, 29″, 5.25″

- Weight:

- LAN: Gigabit x2

- Fan: x2

- Power Input: 100 ~ 240 V AC

- Protocols Supported: TCP/IP, SMB/CIFS, NFS, SNMP, FTP/SFTP/FXP, HTTP, HTTPS, Telnet, SSH, AFP, WebDAV, Bonjour, TFTP

- OS Support: Windows 98/ME/NT/2000/XP/2003/Vista/2008/Win7/Windows2012/Win8, VMware/Citrix/Hyper-V, VMware VAAI support

- Warranty: 3 years

Design and Build

The JetStor NAS 1600S is a 3U 16-bay storage system designed around 3.5″ HDDs or 2.5″ HDD/SSDs. It features hot-swappable drive caddies that have a basic locking mechanism and two LED status lights. They slide into the front of the array and latch in securely. The system also has a retractable screen displaying the status of the NAS with some management functions.

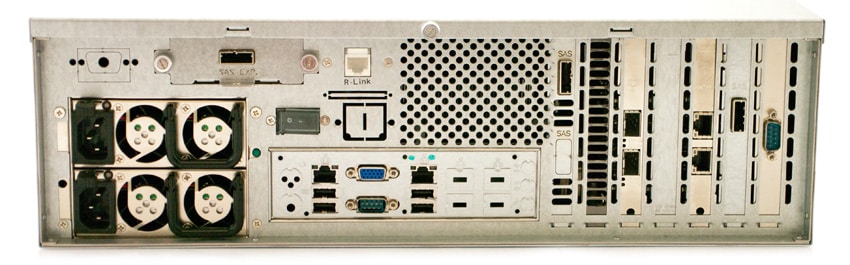

The rear of the chassis has two AC power ports, a SAS port (to which JBODs can be added), two on-board LAN ports, four USB ports, two serial ports, one VGA port, as well as add-on cards featuring additional connectivity. Our unit features two additional 1GbE LAN ports as well as two 10GbE ports for faster connectivity.

Management

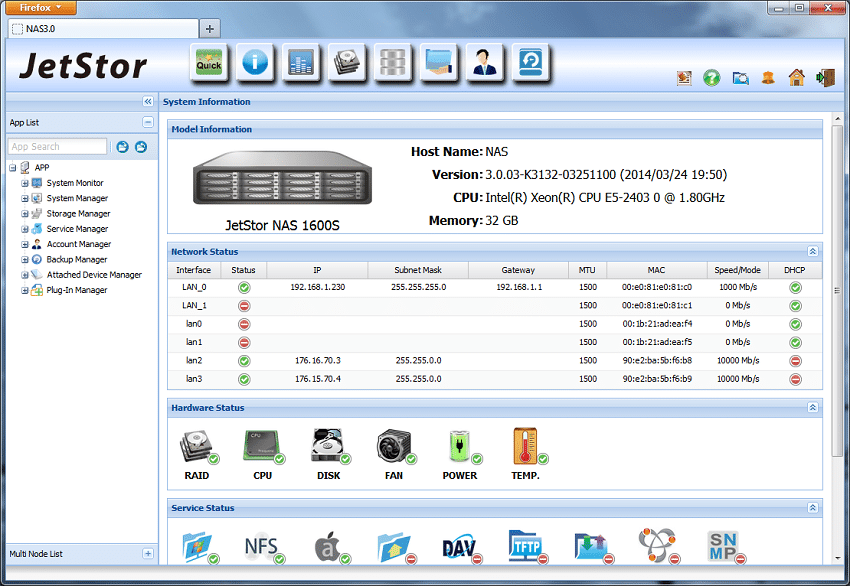

As far as management software goes, JetStor’s NAS 3.0 interface is visually appealing and fairly user-friendly, but not as feature-packed or refined as others in the enterprise storage space. Immediately upon logging in, users can check the status of RAID, disks, the CPU, the LAN interfaces, the fan, power, system temperature, and services like Samba, NFS, and AFP, to name a few.

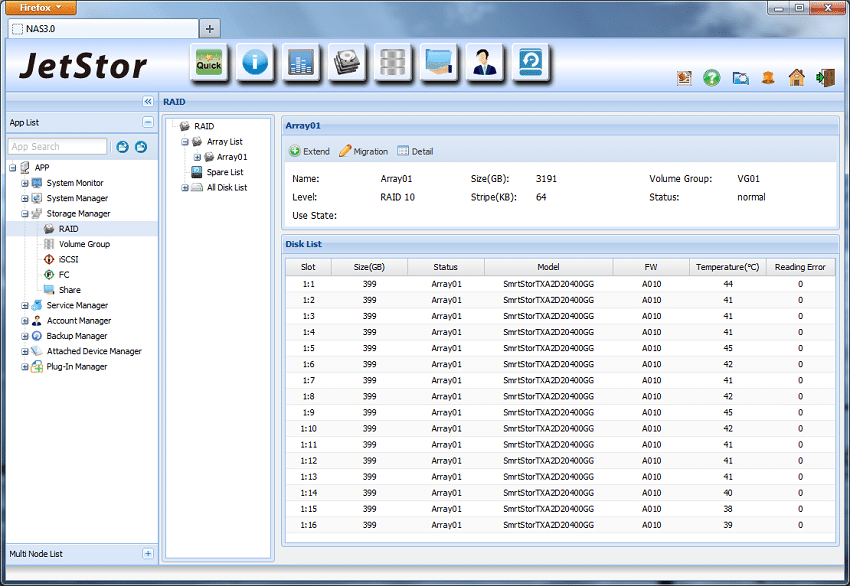

During our initial setup, the system had to be located at its factory-set static IP address; many other units instead use DHCP so that they appear quickly in discovery utilities. Creating a volume group was easy enough, with the common RAID types available, although this setting can’t be changed down the line without fully resetting the entire platform. When the volume is first created, home directories are made that can’t be deleted. JetStor did this to protect users from themselves, but it makes starting over a chore if that’s what a user intends to do.

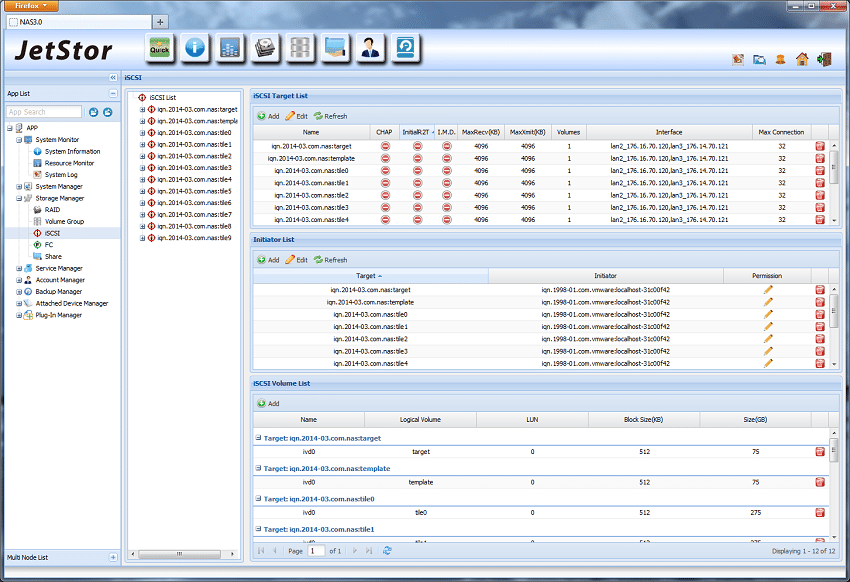

Creating CIFS or NFS shares and setting permissions is straightforward. Creating iSCSI LUNs is simple as well, although the NAS 3.0 software doesn’t incorporate initiator group settings, so you need to create individual masking options for each LUN and initiator. For a large group in a virtualized cluster, this can get tedious. Removing iSCSI LUNs can also be problematic: the user interface won’t let you delete a LUN with active connections, but it also won’t let you disable a LUN to stop those connections. In our setup we had to power down our ESXi hosts to remove unwanted LUNs.
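To see why per-LUN masking gets tedious, the sketch below counts the entries an administrator would have to create by hand when every initiator needs access to every LUN. The LUN names, IQNs, and host count are hypothetical and only illustrate the arithmetic; nothing here calls the NAS 3.0 software.

```python
from itertools import product


def masking_entries(luns, initiators):
    """Return one (lun, initiator) pair per masking rule created by hand."""
    return list(product(luns, initiators))


# Hypothetical example: four LUNs shared by an eight-host ESXi cluster.
luns = [f"lun{i}" for i in range(4)]
initiators = [f"iqn.1998-01.com.vmware:esxi{i:02d}" for i in range(8)]

entries = masking_entries(luns, initiators)
print(f"{len(luns)} LUNs x {len(initiators)} initiators = {len(entries)} entries")
```

With initiator groups, the same access could be expressed as one group of eight hosts mapped to four LUNs, which is why the feature's absence is felt most in larger clusters.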

Along with the standard interface comes an app for iOS and Android to let users manage the NAS remotely.

Testing Background and Comparables

We tested both SMB and iSCSI performance using RAID10 and RAID50 configurations of the SanDisk Optimus Eco 400GB SSD as well as the Seagate Cheetah 600GB HDD.

Volume configurations tested for this review:

SanDisk Optimus Eco 400GB

- RAID10 SMB (Four 25GB test files presented through four shared folders)

- RAID10 iSCSI (Four 25GB Instant Allocated iSCSI LUNs presented through 4 iSCSI targets)

- RAID50 SMB (Four 25GB test files presented through four shared folders)

- RAID50 iSCSI (Four 25GB Instant Allocated iSCSI LUNs presented through 4 iSCSI targets)

Seagate Cheetah 600GB (15,000RPM)

- RAID10 SMB (Four 25GB test files presented through four shared folders)

- RAID10 iSCSI (Four 25GB Instant Allocated iSCSI LUNs presented through 4 iSCSI targets)

- RAID50 SMB (Four 25GB test files presented through four shared folders)

- RAID50 iSCSI (Four 25GB Instant Allocated iSCSI LUNs presented through 4 iSCSI targets)
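For a rough sense of what these layouts trade away in capacity, the following sketch estimates usable space for each drive type. It assumes all 16 bays are populated and that RAID50 is built from two equal RAID5 groups; the review does not state how the volume groups were actually divided, so treat the drive and group counts as placeholders.

```python
def raid10_usable_gb(drives: int, drive_gb: int) -> int:
    """RAID10 mirrors every drive, so half of the raw capacity is usable."""
    if drives < 4 or drives % 2:
        raise ValueError("RAID10 needs an even number of drives, at least 4")
    return drives // 2 * drive_gb


def raid50_usable_gb(drives: int, drive_gb: int, groups: int = 2) -> int:
    """RAID50 stripes across RAID5 groups; each group loses one drive to parity."""
    if groups < 2 or drives % groups or drives // groups < 3:
        raise ValueError("RAID50 needs 2+ equal groups of at least 3 drives")
    return (drives - groups) * drive_gb


BAYS = 16  # assumption: every bay populated
for name, size_gb in (("SanDisk Optimus Eco", 400), ("Seagate Cheetah", 600)):
    r10 = raid10_usable_gb(BAYS, size_gb)
    r50 = raid50_usable_gb(BAYS, size_gb)
    print(f"{name}: RAID10 {r10}GB usable, RAID50 {r50}GB usable")
```

Under these assumptions RAID50 keeps 14 of 16 drives' worth of capacity against 8 of 16 for RAID10, which is the usual reason to accept its heavier write penalty.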

We publish an inventory of our lab environment, an overview of the lab’s networking capabilities, and other details about our testing protocols so that administrators and those responsible for equipment acquisition can fairly gauge the conditions under which we have achieved the published results. None of our reviews are paid for or overseen by the manufacturer of equipment we are testing.

Lenovo ThinkServer RD630 Testbed

- 2 x Intel Xeon E5-2690 (2.9GHz, 20MB Cache, 8-cores)

- Intel C602 Chipset

- Memory – 16GB (2 x 8GB) 1333MHz DDR3 Registered RDIMMs

- Windows Server 2008 R2 SP1 64-bit, Windows Server 2012 Standard, CentOS 6.3 64-Bit

- Boot SSD: 100GB Micron RealSSD P400e

- LSI 9211-4i SAS/SATA 6.0Gb/s HBA (For boot SSDs)

- LSI 9207-8i SAS/SATA 6.0Gb/s HBA (For benchmarking SSDs or HDDs)

- Emulex LightPulse LPe16202 Gen 5 Fibre Channel (8GFC, 16GFC or 10GbE FCoE) PCIe 3.0 Dual-Port CFA

Mellanox SX1036 10/40Gb Ethernet Switch and Hardware

- 36 40GbE Ports (Up to 64 10GbE Ports)

- QSFP splitter cables 40GbE to 4x10GbE

Application Performance Analysis

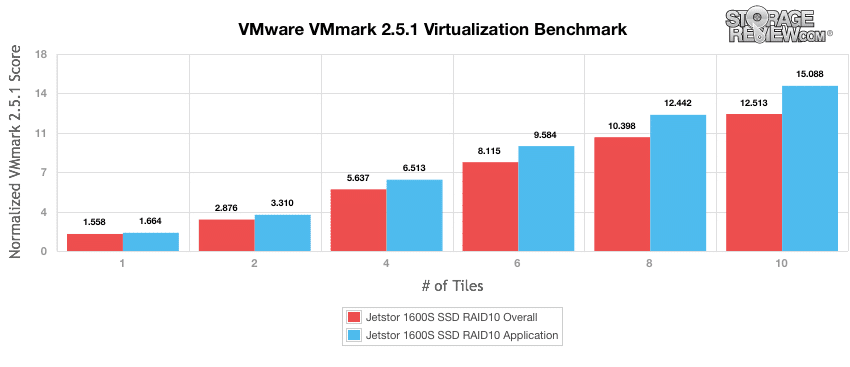

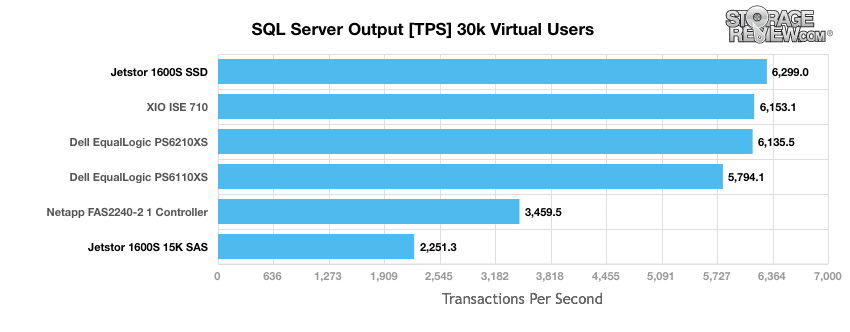

Our first two benchmarks of the JetStor NAS 1600S are the VMware VMmark Virtualization Benchmark and our Microsoft SQL Server OLTP Benchmark which both simulate application workloads similar to those which the 1600S and its comparables are designed to serve.

The StorageReview VMmark protocol utilizes an array of sub-tests based on common virtualization workloads and administrative tasks with results measured using a tile-based unit. Tiles measure the ability of the system to perform a variety of virtual workloads such as cloning and deploying of VMs, automatic VM load balancing across a datacenter, VM live migration (vMotion) and dynamic datastore relocation (storage vMotion).

Although this was the first system tested with our new platform, we saw that the 1600S was able to maintain its performance with each additional load up to 10 tiles.

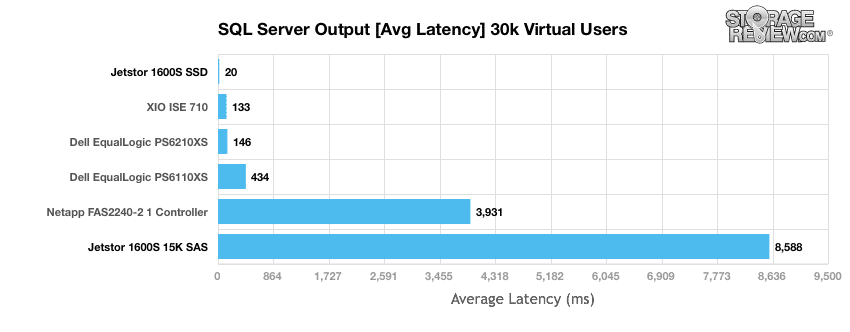

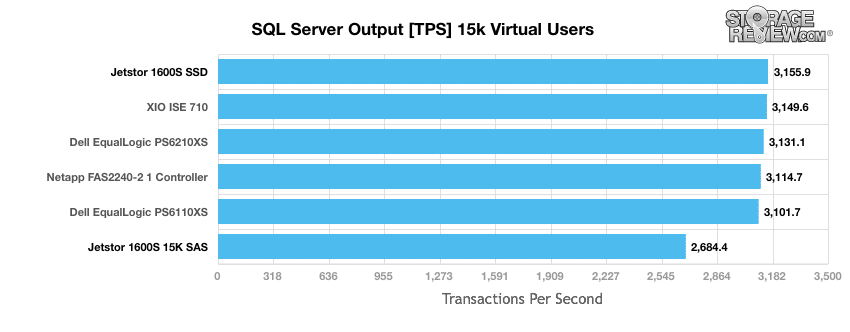

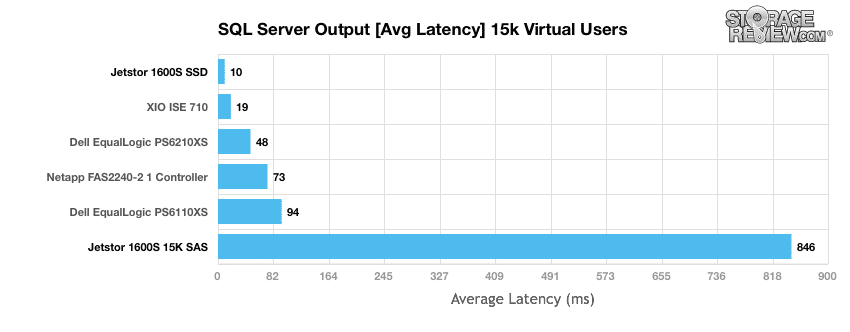

StorageReview’s Microsoft SQL Server OLTP testing protocol employs the current draft of the Transaction Processing Performance Council’s Benchmark C (TPC-C), an online transaction processing benchmark that simulates the activities found in complex application environments. The TPC-C benchmark comes closer than synthetic performance benchmarks to gauging the performance strengths and bottlenecks of storage infrastructure in database environments. Our SQL Server protocol uses a 685GB (3,000 scale) SQL Server database and measures the transactional performance and latency under a load of 30,000 virtual users, and then again with a half-size database under a load of 15,000 virtual users.

With a workload of 30,000 virtual users, the 1600S’s 16 15K SAS HDDs in RAID10 had the fewest transactions per second (2,250TPS), although the NetApp FAS2240-2 performed somewhat poorly as well (3,460TPS) with 12 drives in RAID6. The 1600S with 16 SAS SSDs in RAID10 had the best performance, with 6,300TPS.

In average latency at the same load, the 1600S with SSDs was the fastest of all the configurations tested (20ms), while the 1600S with 15K SAS HDDs was the slowest by a large margin (8,600ms). The next slowest system was the NetApp FAS2240-2 at 3,900ms.

With a workload of 15,000 virtual users, the 1600S with 15K SAS HDDs was again outclassed by the other systems tested, achieving only 2,680TPS (the next lowest array was the Dell EqualLogic PS6110XS with 3,100TPS). The 1600S with SSDs performed the best, with 3,155TPS.

The 1600S with 15K SAS HDDs exhibited the highest average latency by far at 846ms, which is consistent with its lower TPS results from the benchmark. The next highest array was the Dell EqualLogic PS6110XS with 94ms, and the fastest system was the 1600S with SSDs (10ms).

Enterprise Synthetic Workload Analysis

The StorageReview Enterprise Synthetic Workload Analysis includes common sequential and random profiles intended to reflect real-world activity. These profiles were chosen for their similarity to historical benchmark protocols and to ease comparison against widely published values, such as 4K read and write speed and the 8K 70/30 benchmark, which is often used to evaluate enterprise storage.

- 4K (Random)

  - 100% Read or 100% Write

- 8K (Sequential)

  - 100% Read or 100% Write

- 8K 70/30 (Random, Uncached)

  - 70% Read, 30% Write

- 8K 70/30 (Random, Cached)

  - 70% Read, 30% Write

- 128K (Sequential)

  - 100% Read or 100% Write

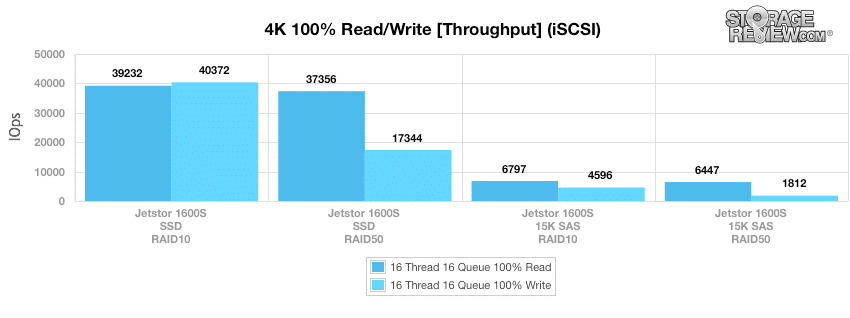

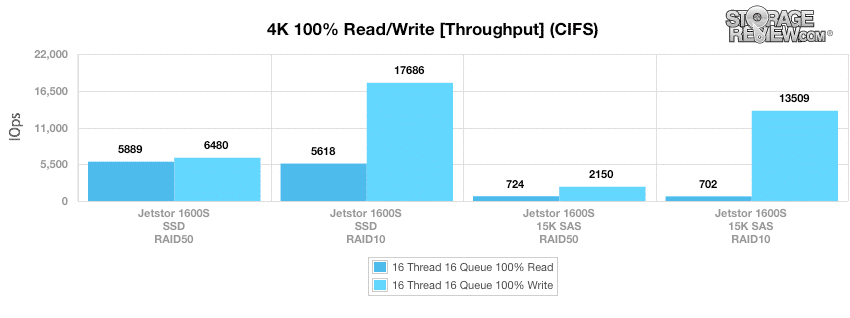

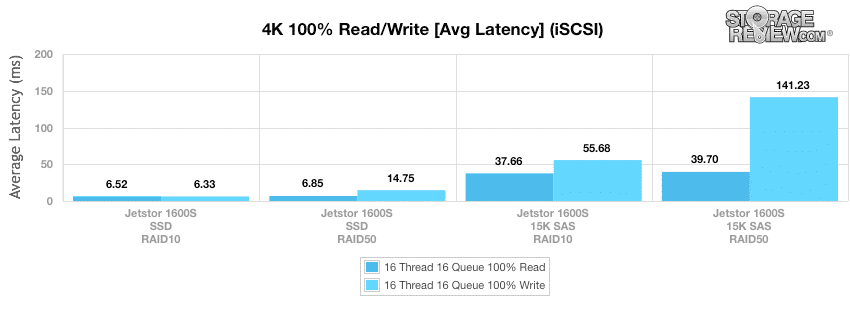

Our first test measures fully random 4K read and write performance. The top performer in this first benchmark was the SSD RAID10 in the iSCSI configuration in both read and write functions (39,000 and 40,000IOPS, respectively), and the close second was the same drive configured in RAID50 (37,000 and 17,000IOPS, respectively). The other drives tested were fairly even, with the exception of the good write performances of the 15K SAS RAID10 CIFS and SSD RAID10 CIFS and the poor read performances of the 15K SAS RAID10 CIFS and 15K SAS RAID50 CIFS.

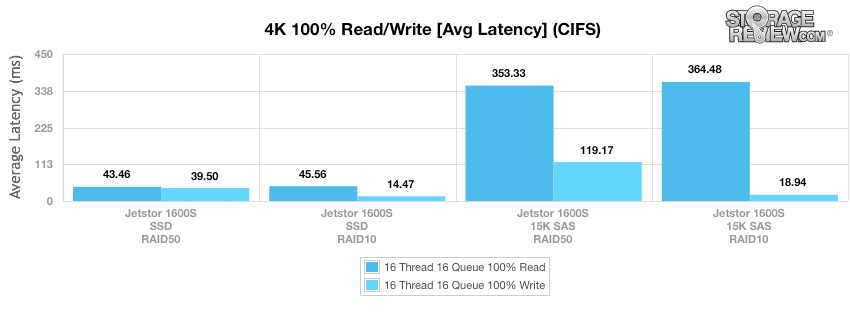

Consistent with the results of the throughput analysis, the SSD RAID10 and RAID50 iSCSI configurations were the fastest performers overall in the average latency measurement: RAID10 posted 6.5ms read and 6.3ms write, while RAID50 posted 6.8ms read and 14.8ms write. The slowest read performances were seen in the 15K SAS RAID10 and RAID50 CIFS configurations, with 364.5 and 353.3ms, respectively.
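The throughput and average latency figures can be cross-checked against each other with Little's law, which relates outstanding I/Os, throughput, and latency. The sketch below assumes the 4K test kept 16 threads x 16 queue = 256 I/Os in flight in total; that is an interpretation of the workload description, not something the charts state.

```python
def expected_latency_ms(iops: float, threads: int = 16, queue_depth: int = 16) -> float:
    """Little's law: average latency = outstanding I/Os / throughput."""
    outstanding = threads * queue_depth  # assumed total I/Os in flight
    return outstanding / iops * 1000.0


# Rounded IOPS figures quoted in the text for the SSD iSCSI configurations.
quoted_iops = {
    "RAID10 read": 39_000,
    "RAID10 write": 40_000,
    "RAID50 read": 37_000,
    "RAID50 write": 17_000,
}

for label, iops in quoted_iops.items():
    print(f"{label}: {expected_latency_ms(iops):.1f} ms")
```

Under that assumption the estimates land within roughly 0.3ms of the reported 6.5, 6.3, 6.8 and 14.8ms, and small gaps are expected because the IOPS figures are rounded.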

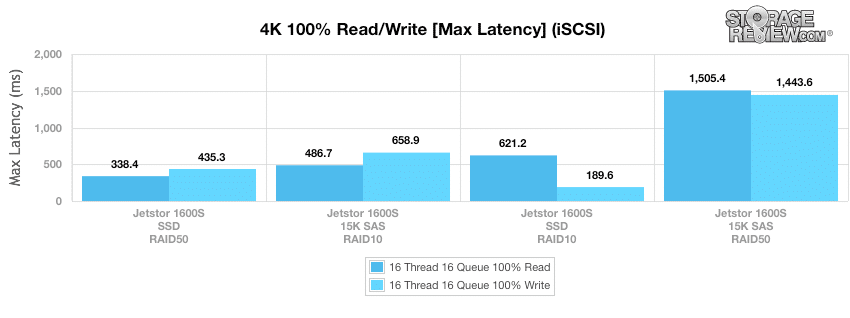

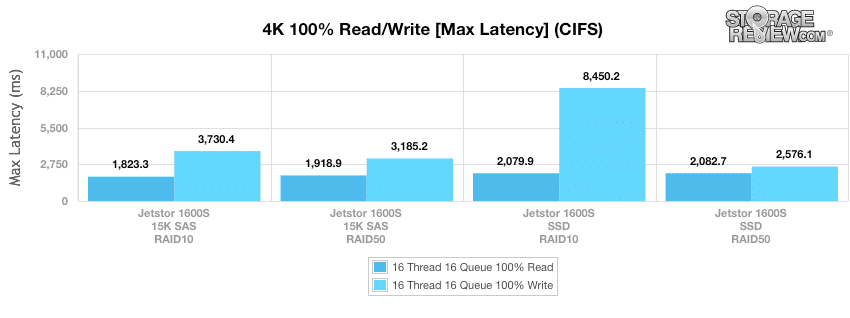

We saw very slow write performance from the SSD RAID10 CIFS configuration in the maximum latency measurement. Top performers in write functions were the SSD RAID10 and RAID50 in the iSCSI configuration (189.6 and 435.3ms, respectively), and the top performers in read functions were the SSD RAID50 iSCSI and 15K SAS RAID10 iSCSI configurations (338.4 and 486.7ms, respectively).

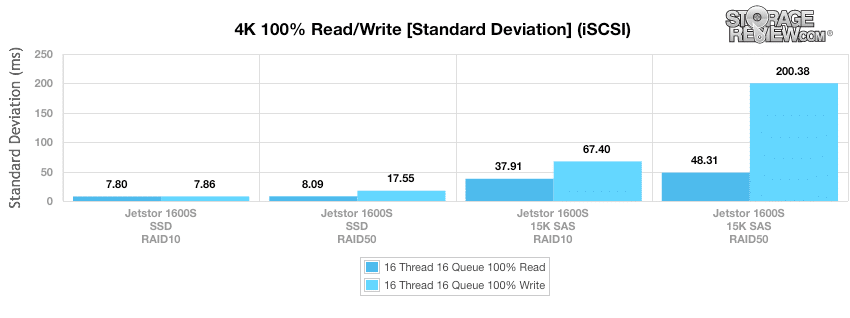

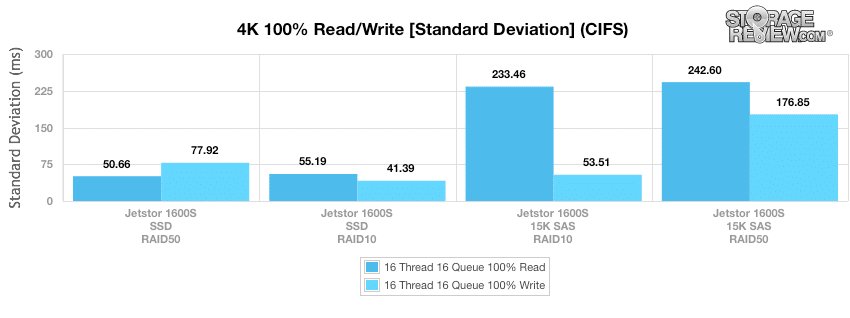

The most consistent performers, as measured by standard deviation, were the SSD RAID10 and RAID50 in the iSCSI configuration in both read and write functions (7.8, 7.9, 8.1 and 17.5ms, respectively) by quite a large margin. The worst write performance was the 15K SAS RAID50 iSCSI configuration (200.4ms) and the worst read performances were the 15K SAS RAID10 and RAID50 in the CIFS configuration (233.5 and 242.6ms, respectively).

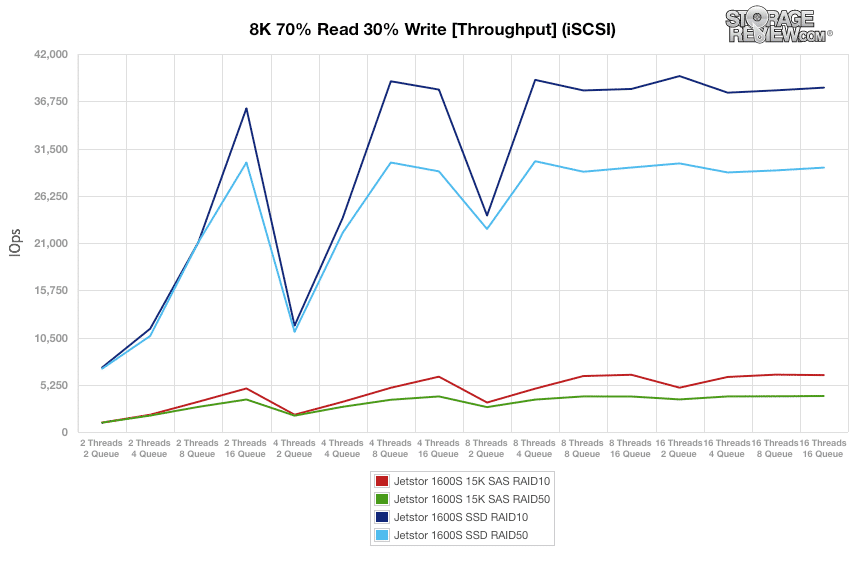

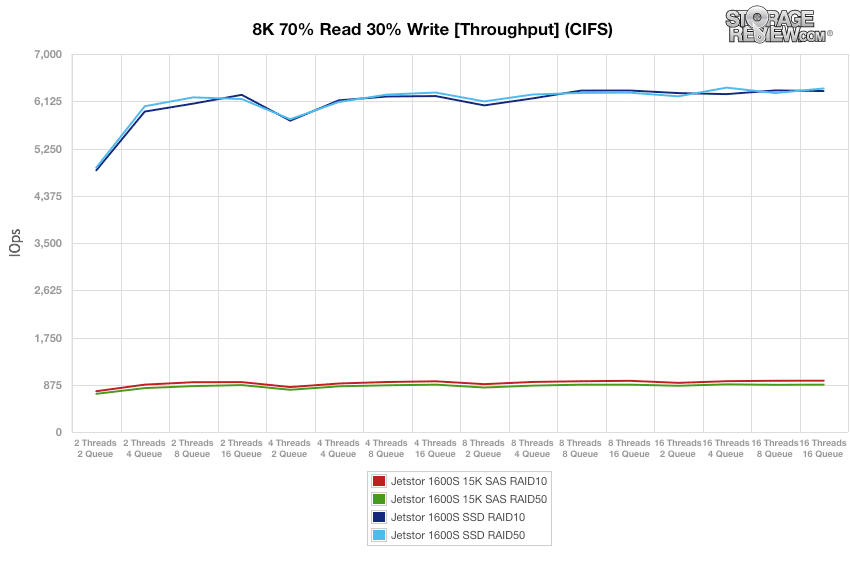

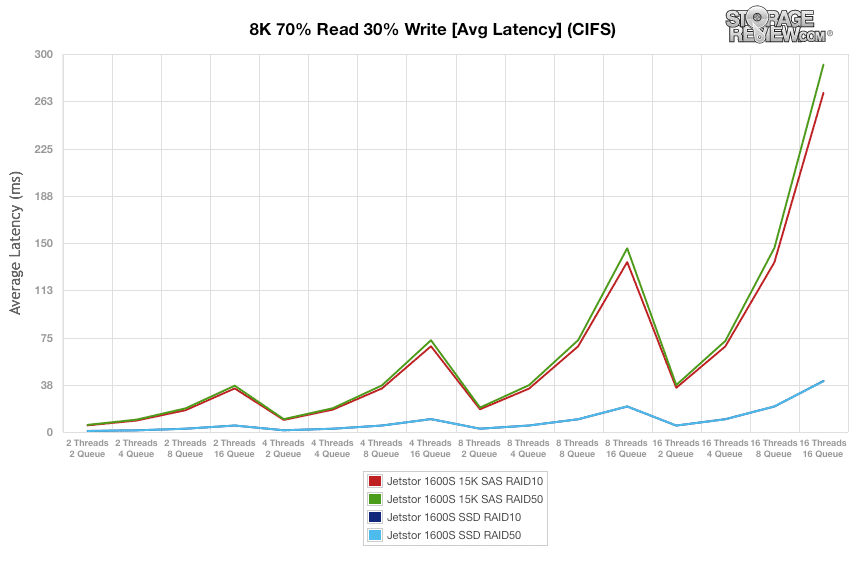

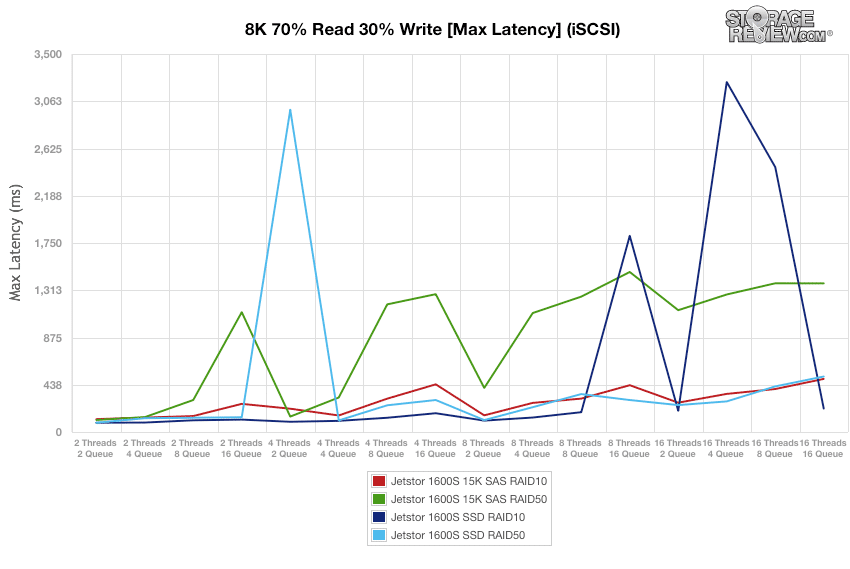

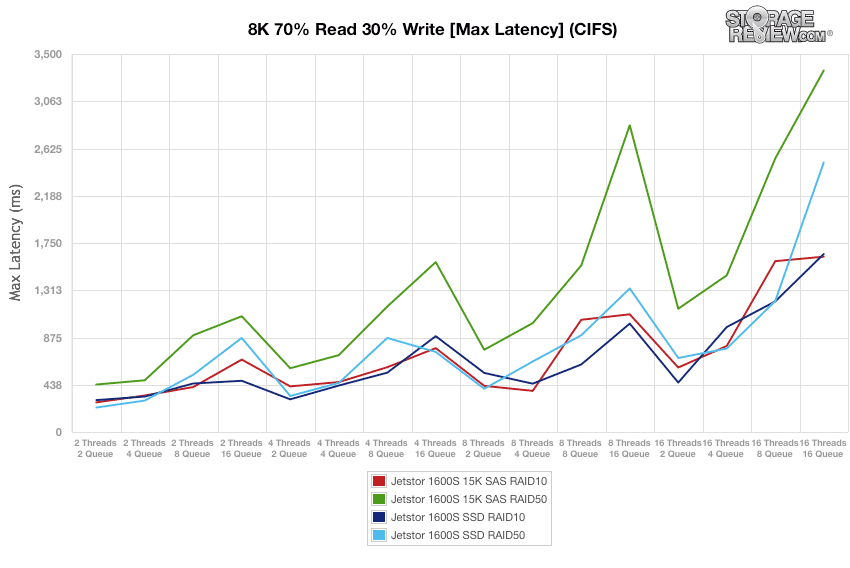

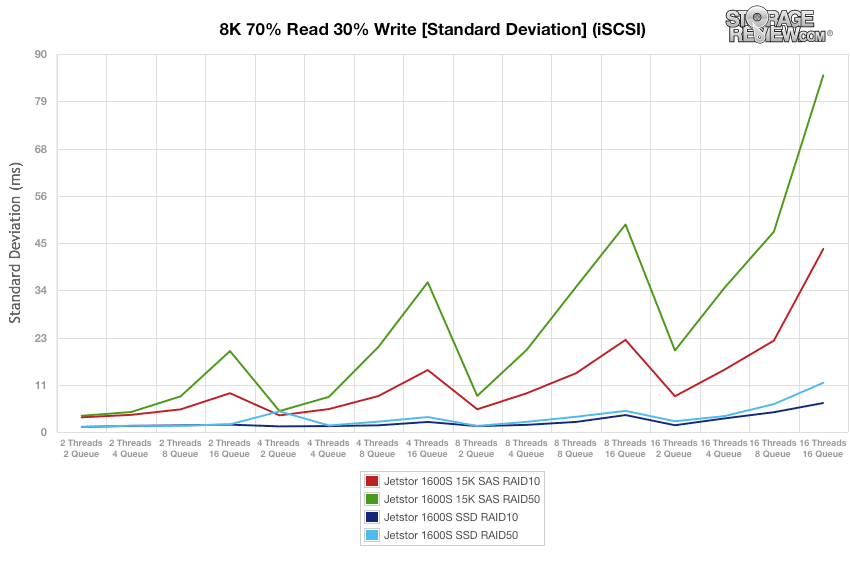

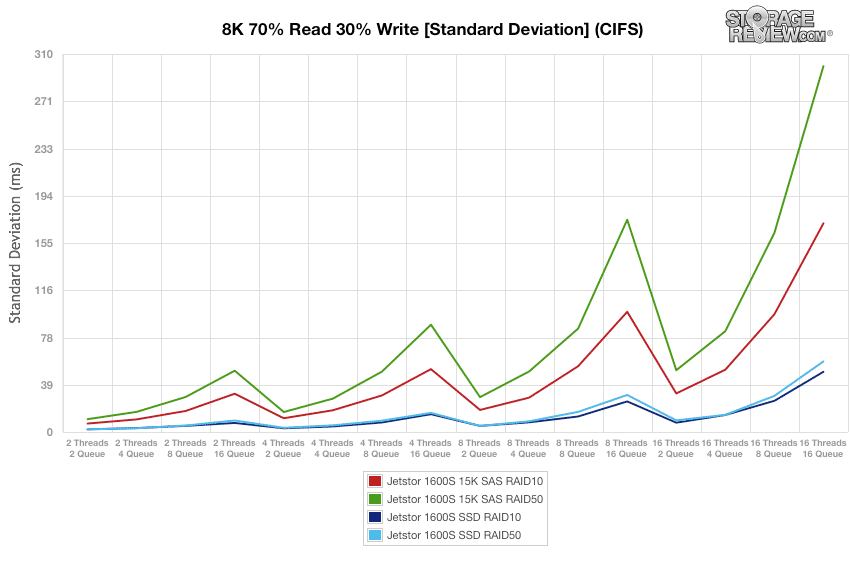

Compared to the fixed 16-thread, 16-queue maximum workload we performed in the 100% 4K write test, our mixed workload profiles scale the performance across a wide range of thread/queue combinations. In these tests we span workload intensity from 2 threads and 2 queue up to 16 threads and 16 queue. In the expanded 8K 70/30 test, the SSD RAID10 iSCSI configuration was the top performer, followed by the same drives in RAID50, although neither delivered very consistent performance. The worst performer was the 15K SAS RAID10 CIFS, although its performance was the most consistent.
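As an illustration of how such a sweep grows in intensity, the sketch below enumerates thread/queue combinations between the stated endpoints. The power-of-two steps (2, 4, 8, 16) are an assumption; the text only gives the first and last points of the range.

```python
from itertools import product

LEVELS = (2, 4, 8, 16)  # assumed steps between the stated 2 and 16 endpoints


def sweep(levels=LEVELS):
    """Return (threads, queue_depth, outstanding_ios) for every combination."""
    return [(t, q, t * q) for t, q in product(levels, repeat=2)]


for threads, queue, outstanding in sweep():
    print(f"{threads}T/{queue}Q -> {outstanding} outstanding I/Os")
```

The outstanding I/O count is the useful axis when reading the charts: throughput generally climbs with it until the array saturates, after which extra depth mostly adds latency.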

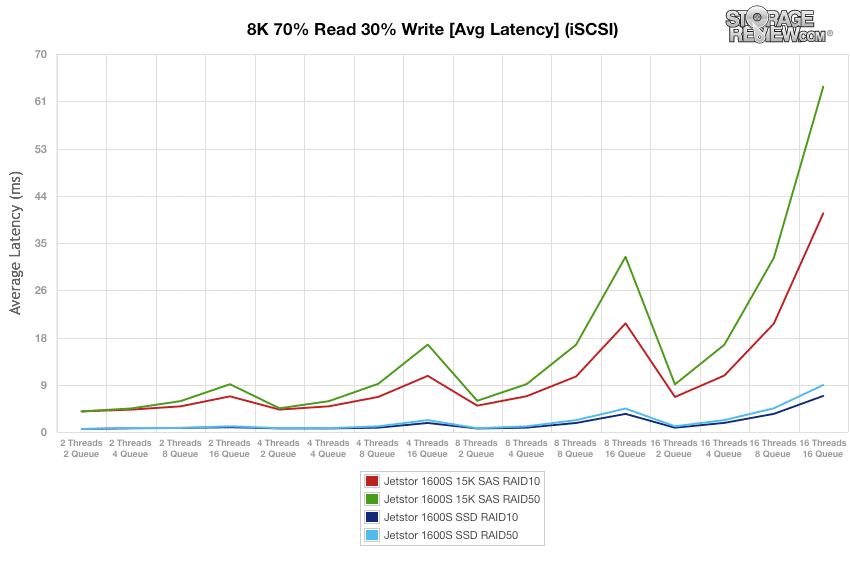

RAID10 vs. RAID50 didn’t seem to show much of a difference when looking at average latency; for the most part, both configurations of each drive produced similar numbers. The top performers were the SSD iSCSI drives and the worst performers were the 15K SAS CIFS drives.

All drives exhibited variable performances in the maximum latency benchmark. The most consistently fast drives were the SSD RAID50 iSCSI and 15K SAS RAID10 iSCSI configurations. Although the SSD RAID10 iSCSI performed better than all of the other drives at the beginning of the test, it had a few large latency spikes around the 15K SAS RAID50 CIFS range, which was the slowest performer.

The results of the standard deviation benchmark are similar to those of the throughput benchmark; the SSD RAID10 and RAID50 iSCSI configurations were the top performers and the 15K SAS RAID10 and RAID50 CIFS configurations had the least consistent performances.

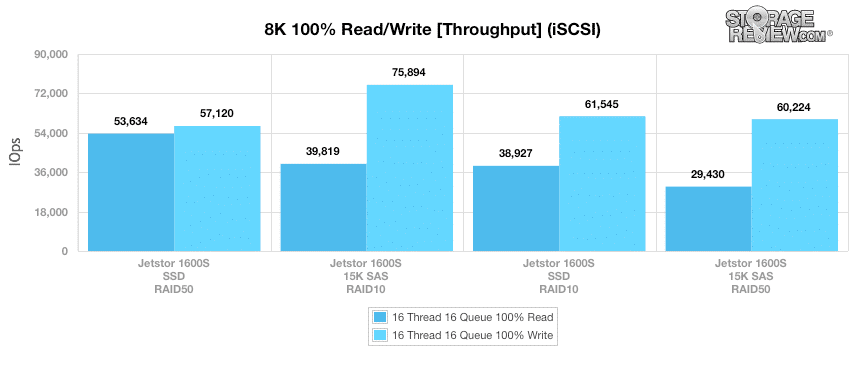

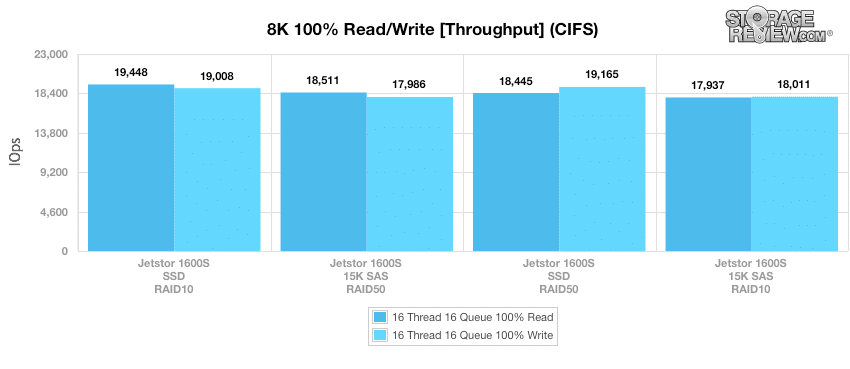

Our next workload looks at 8K sequential performance of the JetStor NAS 1600S. There was a clear divide between the CIFS and iSCSI configurations; all of the iSCSI drives performed better than all of the CIFS drives (and the CIFS drives’ performances were almost identical to each other). The 15K SAS RAID10 iSCSI had the best write performance (75,900IOPS) and the SSD RAID50 iSCSI had the best read performance (53,600IOPS).

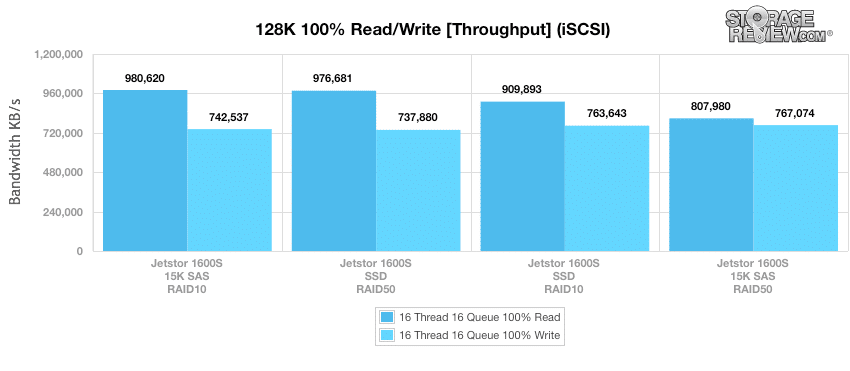

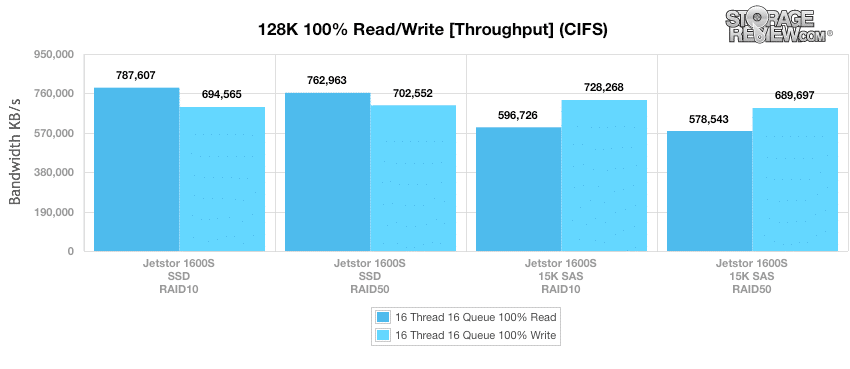

Keeping the sequential transfer type, we increased the I/O size to 128K in our next test. Performance was relatively even; for the most part, each configuration of each drive performed well, although there were some outliers. The 15K SAS RAID10 iSCSI and SSD RAID50 iSCSI drives had the best read performances (980,000 and 977,000KB/s, respectively) and the 15K SAS RAID10 and RAID50 CIFS drives had the worst (597,000 and 578,000KB/s, respectively). The write performances were very similar to each other, but the configuration that performed the best was the 15K SAS RAID50 iSCSI (767,000KB/s).
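To put the sequential results in network terms, the following sketch converts the quoted figures into link-level units. It assumes 1KB = 1,000 bytes for the KB/s figures and 8K = 8,192 bytes for the IOPS figures; the charts do not specify either convention, so the results are approximate.

```python
def kb_per_s_to_gbit_per_s(kb_per_s: float, bytes_per_kb: int = 1000) -> float:
    """Convert a KB/s throughput figure to gigabits per second."""
    return kb_per_s * bytes_per_kb * 8 / 1e9


def iops_to_mb_per_s(iops: float, block_bytes: int) -> float:
    """Convert IOPS at a fixed block size to megabytes (10^6 bytes) per second."""
    return iops * block_bytes / 1e6


# Best 128K sequential read and best 8K sequential write quoted in the text.
print(f"128K read: {kb_per_s_to_gbit_per_s(980_000):.2f} Gb/s")
print(f"8K write:  {iops_to_mb_per_s(75_900, 8 * 1024):.1f} MB/s")
```

At roughly 7.8Gb/s, the best 128K read result would occupy most of a single 10GbE link; the review does not state how many ports carried the traffic.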

Conclusion

The 16-drive capacity of the JetStor 1600S, along with additional JBODs, allows it to store a maximum of 384TB, making it suitable for enterprise-level usage. The interface that the 1600S implements allows users to easily create shares, set permissions, and check the status of RAID, the CPU, and the system temperature at a glance. For the most part, the 1600S performed best when equipped with SSDs and configured in RAID10 or RAID50 iSCSI.

In terms of performance, the JetStor NAS 1600S put its best foot forward with iSCSI; CIFS took a huge 6:1 hit in random mixed workloads. With SSDs in RAID10 over iSCSI, we saw random 4K throughput peak at about 40kIOPS read/write, with 8K 70/30 throughput topping out at 38kIOPS. By comparison, a group of 15K SAS HDDs, also in RAID10, managed 6.7kIOPS read and 4.6kIOPS write in 4K random throughput and 6.3kIOPS in our 8K 70/30 workload. In our application tests such as VMmark, the 1600S had no trouble supporting a load of 10 tiles with an all-SSD configuration. In our SQL Server test with SAS SSDs, the JetStor 1600S performed quite well, ranking at the top of our list, although the same tests with a 15K SAS HDD configuration came in at the bottom. Overall, for businesses looking to save money versus going with a top-tier brand, the JetStor NAS 1600S packs a lot of features and can offer strong performance, but for those looking for the refinement of a Tier 0/1 storage platform, the 1600S has room for improvement.

Pros

- Strong performance in application tests with SSDs

- Supported a workload up to 10-tiles in VMmark

Cons

- Poor CIFS performance for both SSD and HDD configurations

- Management WebGUI lacks refinement of others in the space

Bottom Line

The JetStor 1600S is a highly configurable NAS that posts impressive iSCSI performance and, with SSDs, handles SQL and VMmark very well. The system lacks the refinement found in top-tier brands, but the price point alone for this kind of hardware should win it some business.
