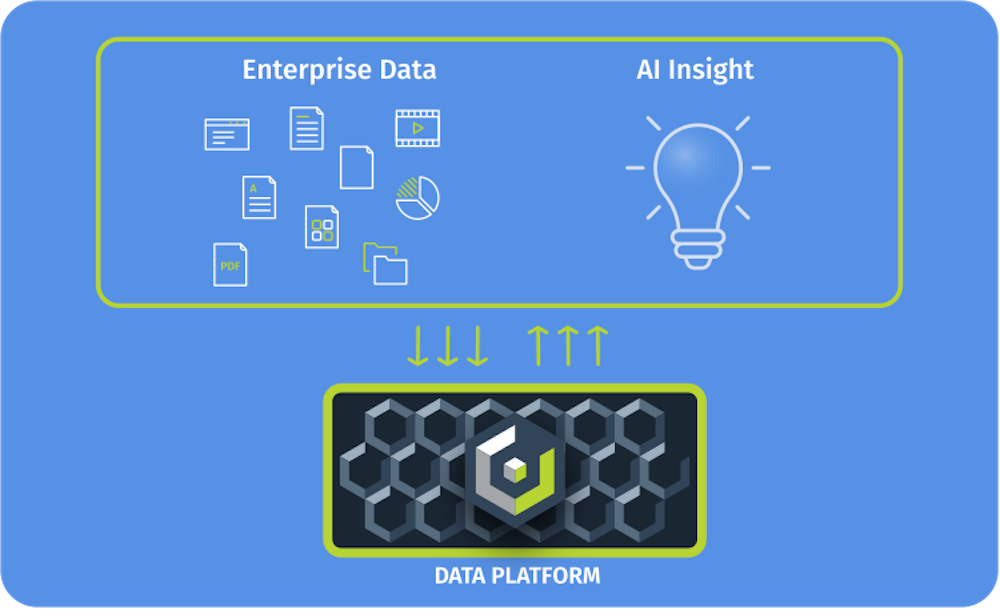

Cloudian has announced the general availability of the Cloudian HyperScale AI Data Platform, a turnkey stack that aligns closely with how customers want to deploy enterprise AI. Based on the NVIDIA AI Data Platform reference design, the solution turns decades of institutional knowledge stored in documents, PDFs, images, and spreadsheets into instantly accessible insights via a familiar chatbot-style interface. The intent is to enable organizations to stand up production-grade, compliant AI environments in days rather than months, while retaining complete control over their data.

Solving the Enterprise AI Deployment Dilemma

Most enterprise AI programs run into a familiar problem. To move quickly, they often rely on shared or public cloud environments that may not align with internal security, data sovereignty, or regulatory requirements. To retain control, they turn to custom, on-premises builds that involve multiple vendors, months of integration, and unclear paths to measurable business value.

At the same time, the bulk of enterprise knowledge is still effectively invisible to AI systems. Cloudian cites the commonly referenced figure that roughly 80 percent of institutional knowledge resides in unstructured content such as documents, reports, emails, images, and operational logs. This content often spans decades and contains critical process knowledge, customer context, and regulatory history. Without a practical way to ingest and query it, AI deployments struggle to deliver meaningful outcomes.

The HyperScale AI Data Platform is positioned to remove this trade-off. It bundles compute, storage, networking, and software into an integrated infrastructure that is operational in days and is designed specifically for enterprise document AI use cases. Rather than requiring significant data migration or complex integration work, the system is deployed on-premises or in controlled environments, allowing organizations to keep their data where it is while making it available to AI workloads.

The platform incorporates proven NVIDIA AI Blueprints for enterprise document AI, which provide reference workflows and pre-validated components for document processing, retrieval-augmented generation (RAG), and related tasks. This reduces risk compared with bespoke projects and anchors deployments in repeatable architectures.

NVIDIA’s Justin Boitano, Vice President of Enterprise AI Products, frames the need in infrastructure terms. He emphasizes that transforming enterprise data into AI insights requires more than scalable storage: it demands a tightly integrated, high-performance infrastructure stack that is adaptable to enterprise requirements. He points to the Cloudian HyperScale AI Data Platform, built on NVIDIA AI infrastructure, networking, and software, as a way for organizations to securely unlock critical knowledge, simplify implementation, and expand AI capacity as data volumes grow.

Immediate Business Impact with Predictable Economics

The platform is designed to deliver enterprise AI capabilities without the traditional performance and cost trade-offs that have characterized early AI deployments. At the storage layer, Cloudian brings its S3-native object storage together with RDMA acceleration for S3 operations. This combination is intended to remove the performance penalties typically associated with object storage in latency-sensitive AI and vector database scenarios.

Cloudian reports that this RDMA-accelerated S3 delivers up to 8x faster vector database performance than CPU-based solutions that rely on traditional file storage. For customers, the implication is that embeddings, similarity search, and retrieval operations can support real-time responses for thousands of concurrent users without shifting to bespoke, non-standard storage stacks.

The economic story is equally important for technical sales teams and architects. By offering the HyperScale AI Data Platform as an on-premises, integrated appliance, Cloudian aligns AI infrastructure spending with predictable CapEx rather than variable, often unpredictable cloud OpEx. The solution is designed to eliminate common AI cloud expenses, such as per-token inference fees and high cloud storage costs, which can be orders of magnitude higher than those in dense on-premises deployments.
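To make the CapEx-versus-OpEx argument concrete, the following is a minimal break-even sketch. Every figure in it (token volume, per-token price, storage price, appliance cost, on-premises running cost) is a hypothetical placeholder chosen for easy arithmetic, not Cloudian or cloud-provider pricing, and the function names are illustrative.

```python
import math


def monthly_cloud_cost(tokens_per_month, price_per_million_tokens,
                       stored_tb, price_per_tb_month):
    """Variable cloud spend: per-token inference fees plus storage fees."""
    inference = tokens_per_month / 1_000_000 * price_per_million_tokens
    storage = stored_tb * price_per_tb_month
    return inference + storage


def break_even_months(capex, monthly_onprem_cost, monthly_cloud):
    """Months until an up-front purchase is repaid by avoided cloud spend.

    Returns None when the cloud option is not more expensive per month,
    in which case the purchase never pays for itself on cost alone.
    """
    saving = monthly_cloud - monthly_onprem_cost
    if saving <= 0:
        return None
    return math.ceil(capex / saving)


# Hypothetical inputs, for illustration only.
cloud = monthly_cloud_cost(
    tokens_per_month=2_000_000_000,   # assumed inference volume
    price_per_million_tokens=5.0,     # assumed per-token fee
    stored_tb=500,                    # assumed data footprint
    price_per_tb_month=20.0,          # assumed cloud storage price
)
months = break_even_months(capex=300_000, monthly_onprem_cost=5_000,
                           monthly_cloud=cloud)
print(f"cloud: {cloud:,.0f}/month, break-even after {months} months")
```

With real quotes substituted for the placeholders, the same calculation shows how sensitive the payback period is to inference volume, which is typically the least predictable input.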

Because the platform is S3-native and built on Cloudian HyperStore, it can process and analyze data across on-premises environments, hybrid architectures, and distributed locations. This flexibility allows organizations to keep data where it sits while bringing it into AI workflows, rather than enforcing a large-scale migration to a single cloud or proprietary platform. For many regulated enterprises, that capability is central to maintaining data sovereignty and meeting internal governance requirements.

Enterprise Document AI

The initial focus of the HyperScale AI Data Platform is enterprise document AI. The built-in document AI application blueprint is designed to expose decades of institutional knowledge through conversational, natural-language queries. The user experience is intentionally similar to that of consumer chat interfaces, but grounded in an organization’s own documents and policies.

In healthcare, for example, the platform can be used to query patient records, clinical protocols, and internal guidelines while remaining aligned with HIPAA and other privacy mandates. Because the data never leaves the organization’s controlled environment, compliance and audit teams retain visibility into where information is stored and how it is accessed.

In financial services, institutions can search regulatory filings, internal policies, risk assessments, and contract repositories without sending sensitive information to external AI providers. This ability to keep regulatory content and customer data within a governed perimeter while still benefiting from modern retrieval-augmented generation is a core selling point for risk-conscious buyers.

From a technical perspective, the blueprint abstracts away much of the complexity associated with building document ingestion pipelines, indexing, vectorization, and retrieval workflows. Instead of designing this stack from scratch, customers rely on the reference design included in the platform and focus on tuning, access control, and governance.
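For readers unfamiliar with what such a blueprint abstracts away, here is a deliberately tiny sketch of the ingest, vectorize, retrieve, and prompt-assembly steps. It is not the NVIDIA blueprint or Cloudian's implementation: a term-frequency vector stands in for a real embedding model, an in-memory list stands in for a vector database, and the document IDs and texts are invented for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and split into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text):
    """Toy stand-in for an embedding model: a term-frequency vector."""
    return Counter(tokenize(text))


def cosine(a, b):
    """Cosine similarity between two sparse term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return dot / norm if norm else 0.0


def build_index(docs):
    """Ingestion step: vectorize each document once and keep it in memory."""
    return [(doc_id, text, embed(text)) for doc_id, text in docs.items()]


def retrieve(index, query, k=2):
    """Retrieval step: return up to k documents most similar to the query."""
    q = embed(query)
    scored = [(cosine(q, vec), doc_id, text) for doc_id, text, vec in index]
    scored.sort(key=lambda s: s[0], reverse=True)
    return [(doc_id, text) for score, doc_id, text in scored[:k] if score > 0]


def build_prompt(query, passages):
    """Augmentation step: ground the question in the retrieved passages."""
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return f"Answer using only the context below.\n{context}\nQuestion: {query}"


# Invented sample documents, for illustration only.
DOCS = {
    "policy-7": "Patient records must be retained for seven years after discharge.",
    "guide-2": "Warehouse forklifts require a daily safety inspection.",
    "risk-4": "Counterparty risk assessments are reviewed every quarter.",
}
INDEX = build_index(DOCS)

question = "How long are patient records retained?"
print(build_prompt(question, retrieve(INDEX, question, k=1)))
```

In a production deployment, each function would be replaced by a heavier component (document parsers, a GPU-backed embedding model, a vector database, and a language model that consumes the prompt), which is the integration work the reference design is meant to cover.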

Beyond Documents to Video and Analytics

Cloudian positions the HyperScale AI Data Platform as a foundation that will support multiple AI application blueprints over time, not just document-centric use cases. The roadmap includes support for video analysis, particularly in environments such as manufacturing floors, warehouses, logistics hubs, and construction sites, where real-time or near-real-time video intelligence can drive safety, quality, and operational efficiency.

Upcoming blueprints will focus on analyzing video streams for activity detection, anomaly detection, and operational monitoring, using the same integrated infrastructure. Future applications will also include broader data analytics and additional enterprise AI capabilities, delivered through the same hardware and software stack. This single-platform approach simplifies procurement and lifecycle management, since customers do not need to deploy separate point solutions for each new AI workload.

Platform Architecture and Hardware Foundation

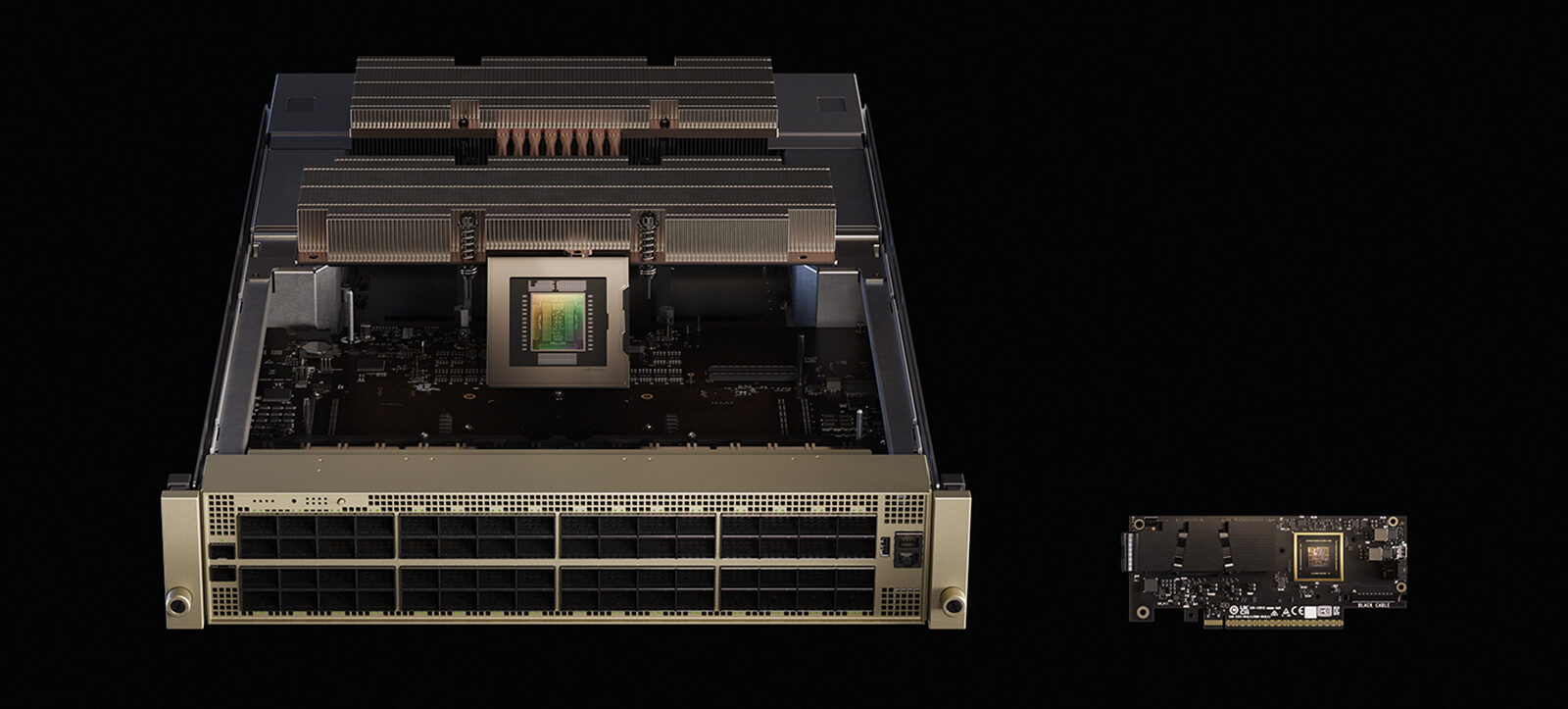

The entry-level configuration of the HyperScale AI Data Platform is built on the NVIDIA RTX PRO Server reference design. This system is engineered for AI workloads and can be configured with up to eight NVIDIA RTX PRO 6000 Blackwell Server Edition GPUs, providing substantial acceleration for training, fine-tuning, and inference across large unstructured datasets.

Compute is provided by dual Intel Xeon 6900 series CPUs, ensuring sufficient general-purpose processing for orchestration, data preprocessing, and control-plane services. The platform also incorporates NVIDIA BlueField-3 DPUs, which can offload networking, security, and storage tasks from host CPUs, and NVIDIA Spectrum-X Ethernet SuperNICs, which provide high-bandwidth, low-latency connectivity essential for AI and data-intensive workloads.

On the storage side, the platform uses Cloudian HyperStore with NVMe-based media to deliver high-throughput, low-latency S3-compatible object storage. The integrated RDMA path for S3 operations reduces protocol overhead and enables AI and vector workloads to access object data with latency closer to that of local block or file systems, while retaining the scalability benefits of object storage.

The architecture is designed for non-disruptive scaling from terabytes to exabytes as data requirements grow. This scaling capability is essential for customers who want to begin with limited-scope AI projects but have a clear path to global deployment without redesigning their infrastructure.

Cloudian CEO Michael Tso emphasizes that organizations need AI platforms that fit within their existing security and compliance frameworks rather than forcing those frameworks to adapt. He notes that HyperScale AI provides secure access to institutional knowledge through a familiar chatbot interface, while keeping data under the organization’s control and reducing deployment time from months to days.

Availability

The Cloudian HyperScale AI Data Platform is available immediately worldwide through Cloudian’s certified channel partner ecosystem.

Each system includes Cloudian HyperCare support, which provides remote management services and 24/7 technical assistance.
