Today Condusiv Technologies released V-locity 6.0, the latest version of its I/O reduction software for virtual environments. Condusiv also announced an updated IntelliMemory DRAM read caching engine, which the company says delivers three times the performance of the previous version. According to Condusiv, the gain comes from focusing on caching effectiveness rather than cache hits.

Like the company’s Diskeeper for physical servers, V-locity aims to improve performance for virtual servers. Condusiv claims that companies can see 50% to 300% faster application performance. We have yet to get our hands on either Diskeeper or V-locity in our lab to see what they can do. However, Condusiv has a long list of satisfied customers, with some impressive endorsements and cases where performance doubled or tripled and where substantial money was saved by not buying new hardware to address a problem.

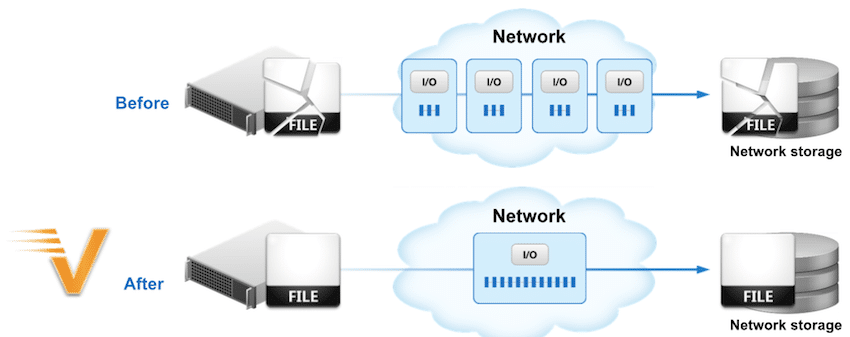

The latest version of V-locity uses two key technologies to reduce I/O from VM to storage: its IntelliWrite and IntelliMemory engines. IntelliWrite increases I/O density from VM to storage by adding a layer of intelligence to the Windows OS that eliminates I/O fracturing, so writes (and subsequent reads) are processed in a more contiguous and sequential manner. The number of I/O operations needed for any given workload is reduced, which in turn increases throughput, since more data is processed with each I/O operation. IntelliMemory DRAM read caching now focuses on serving only the smallest, random I/O, since this is generally what dampens overall system performance. IntelliMemory also now comes with a lightweight compression engine that expands the amount of data the DRAM can serve without visible CPU overhead.
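To make the I/O density argument concrete, here is a minimal Python sketch of the arithmetic only. It is not Condusiv's implementation: the `io_count` helper, the 64 KB maximum transfer size, and the example file layout are all assumptions chosen for illustration.

```python
import math

def io_count(extent_sizes_kb, max_io_kb=64):
    """Count the I/O operations needed to read a file laid out as the given extents.

    Assumptions (illustrative only): every extent needs at least one
    operation, and a single operation can move at most max_io_kb.
    """
    return sum(math.ceil(size / max_io_kb) for size in extent_sizes_kb)

# The same 256 KB file, laid out two ways.
fractured = [4] * 64   # 64 separate 4 KB extents
contiguous = [256]     # one 256 KB extent

print(io_count(fractured))   # 64 operations
print(io_count(contiguous))  # 4 operations
```

Under these assumptions, the contiguous layout moves the same data in one sixteenth of the operations, which is the sense in which fewer, larger I/Os raise throughput.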

Condusiv’s V-locity 6.0 with enhanced IntelliMemory DRAM read caching offers:

- Enhanced Performance – Condusiv states that Iometer testing reveals the latest version of IntelliMemory in V-locity 6.0 is 3.6X faster when processing 4K blocks and 2.0X faster when processing 64K blocks.

- Self-Learning Algorithms – IntelliMemory collects and accumulates data on storage access over extended periods of time and employs intelligent analytics to determine which blocks are likely to be accessed at different points throughout the day.

- Cache Effectiveness – By focusing on “cache effectiveness” rather than the commodity, capacity-intensive approach of “cache hits,” V-locity determines the best use of DRAM for caching by collecting a wide range of data points: storage access, frequency, I/O priority, process priority, type of I/O, nature of I/O (sequential or random), and time between I/Os. It then uses its analytics engine to identify which storage blocks will benefit most from caching, which also reduces “cache churn,” the repeated recycling of cache blocks.

- Data Pattern Compression (DPC) – V-locity’s very lightweight data compression engine doesn’t tax the CPU with visible overhead, eliminating the need for dedicated compute resources.
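Condusiv has not published how IntelliMemory scores blocks, so the following Python sketch is purely hypothetical. It illustrates the general idea of ranking cache candidates by benefit per unit of DRAM rather than by raw hit count, while admitting only small, random reads. The `BlockStats` fields, the 8 KB threshold, and the scoring formula are all assumptions.

```python
from dataclasses import dataclass

@dataclass
class BlockStats:
    block_id: int
    size_kb: int
    reads: int        # observed read count (hypothetical metric)
    is_random: bool   # True if the access pattern looked random

def effectiveness(stats, small_kb=8):
    """Hypothetical score: reads served per KB of DRAM spent.

    Large or sequential blocks score zero, mirroring the article's point
    that only the smallest, random I/O is cached.
    """
    if not stats.is_random or stats.size_kb > small_kb:
        return 0.0
    return stats.reads / stats.size_kb

def choose_blocks(candidates, budget_kb):
    """Greedily fill a DRAM budget with the highest-scoring blocks."""
    chosen, used = [], 0
    for s in sorted(candidates, key=effectiveness, reverse=True):
        if effectiveness(s) == 0.0:
            break  # everything after this point is ineligible
        if used + s.size_kb <= budget_kb:
            chosen.append(s.block_id)
            used += s.size_kb
    return chosen

candidates = [
    BlockStats(1, 4, 40, True),
    BlockStats(2, 64, 500, False),  # most reads, but large and sequential
    BlockStats(3, 4, 10, True),
    BlockStats(4, 8, 40, True),
]
print(choose_blocks(candidates, budget_kb=12))  # [1, 4]
```

Block 2 has by far the most reads, so a pure hit-count policy would favor it, yet it would consume far more DRAM than the budget allows and is the kind of large sequential I/O that storage already handles well.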

Availability

The latest version of V-locity with its new DRAM caching engine is expected to ship the first week of July 2015.
