Evozyne has used NVIDIA BioNeMo to generate high-quality proteins that speed drug design and help create a more sustainable environment. Evozyne creates products that solve high-impact problems in therapeutics and sustainability. NVIDIA BioNeMo is an AI-powered drug discovery cloud service and framework built on NVIDIA NeMo Megatron for training and deploying large biomolecular transformer AI models at supercomputing scale.

Evozyne created two proteins using a pre-trained AI model from NVIDIA. Both have significant potential in healthcare and clean energy: one aims to cure a congenital disease, and the other is designed to consume carbon dioxide to reduce global warming.

Evozyne co-founder Andrew Ferguson said:

“It’s been really encouraging that even in this first round the AI model has produced synthetic proteins as good as naturally occurring ones. That tells us it’s learned nature’s design rules correctly.”

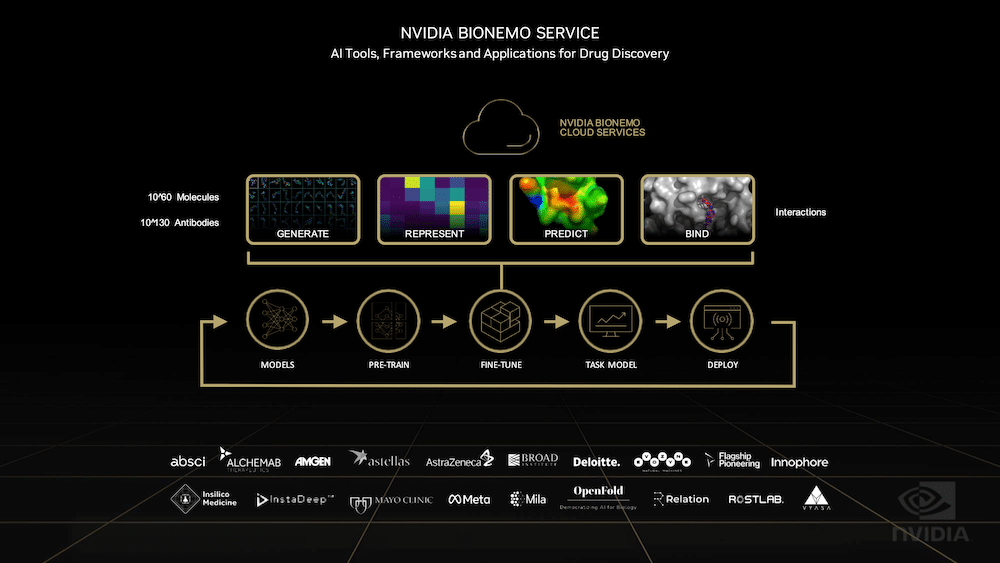

NVIDIA BioNeMo, part of the NVIDIA Clara Discovery collection, is a framework for training and deploying large biomolecular language models at supercomputing scale to help scientists better understand diseases and find therapies for patients. The large language model (LLM) framework will support chemistry, protein, DNA, and RNA data formats.

Just as AI is learning to understand human languages with LLMs, it is also learning the languages of biology and chemistry. NVIDIA BioNeMo helps researchers discover new patterns and insights in biological sequences and link those sequences to biological properties or functions, and even to human health conditions. Initial results indicate this is a new way to accelerate drug discovery.

NVIDIA BioNeMo also has a cloud API service supporting a growing list of pre-trained AI models.

A Transformational AI Model

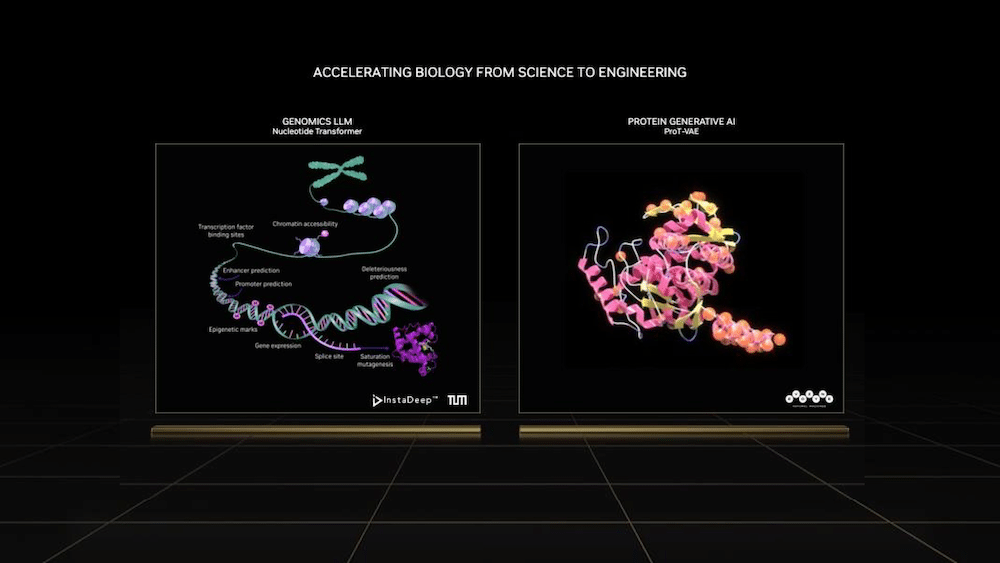

Evozyne used NVIDIA’s implementation of ProT5, a transformer model. The model is at the heart of Evozyne’s process, called ProT-VAE, a workflow that combines BioNeMo with a variational autoencoder that acts as a filter.

Evozyne’s Ferguson added:

“BioNeMo really gave us everything we needed to support model training and then run jobs with the model very inexpensively — we could generate millions of sequences in just a few seconds. Using large language models combined with variational autoencoders to design proteins was not on anybody’s radar just a few years ago.”

Learning Nature’s Ways

NVIDIA’s transformer model reads the amino acid sequences of millions of proteins, much like a student reading a book. Using the same techniques neural networks employ to understand text, it learns how nature assembles these powerful building blocks of biology. The model can then predict how to assemble new proteins suited to the functions Evozyne wants to address.
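As a rough illustration of what reading a protein "like text" can mean, the sketch below treats each amino acid as one token, converts a sequence to token IDs, and hides selected positions, the kind of fill-in-the-blank setup language models commonly learn from. The vocabulary, the special tokens, and the function names are hypothetical choices for this example; this is not BioNeMo's or ProT5's actual tokenizer or training objective.

```python
# Hypothetical vocabulary: three special tokens plus the 20 standard amino acids.
SPECIAL_TOKENS = ["<pad>", "<unk>", "<mask>"]
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"
VOCAB = {tok: idx for idx, tok in enumerate(SPECIAL_TOKENS + list(AMINO_ACIDS))}
ID_TO_TOKEN = {idx: tok for tok, idx in VOCAB.items()}


def encode(sequence: str) -> list[int]:
    """Map each residue (one letter) to a token ID; unknown letters become <unk>."""
    return [VOCAB.get(residue, VOCAB["<unk>"]) for residue in sequence.upper()]


def decode(ids: list[int]) -> str:
    """Map token IDs back to residue letters, keeping special tokens as-is."""
    return "".join(ID_TO_TOKEN[i] for i in ids)


def mask(ids: list[int], positions: list[int]) -> list[int]:
    """Return a copy with the given positions replaced by <mask> for the model to predict."""
    masked = list(ids)
    for pos in positions:
        masked[pos] = VOCAB["<mask>"]
    return masked


if __name__ == "__main__":
    ids = encode("MKTAYIAK")  # short made-up peptide, for illustration only
    print(ids)
    print(decode(mask(ids, [2, 5])))
```

Per-residue tokens keep the vocabulary tiny (about two dozen symbols), so the model's job shifts from learning words to learning which residues tend to appear together in context.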

Machine learning navigates the astronomical number of possible protein sequences and identifies the most useful ones. The traditional method of engineering proteins, called directed evolution, uses a slow, hit-or-miss approach, typically changing only a few amino acids in a sequence at a time. Contrast that with Evozyne’s approach, where half or more of the amino acids in a protein can be altered in a single round, the equivalent of making hundreds of mutations. Evozyne plans to use the new process to build a range of proteins that fight diseases and climate change.
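To put "astronomical" and "hundreds of mutations" in numbers, the short calculation below counts the possible sequences of a fixed length (20 choices per position, so 20 to the power of the length) and the number of substitutions implied by redesigning half of a protein. The 300-residue length is a hypothetical value chosen only for illustration, and the sketch counts point substitutions only, ignoring insertions and deletions.

```python
import math

# The 20 standard amino acids (one-letter codes).
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def sequence_space_log10(length: int, alphabet_size: int = len(AMINO_ACIDS)) -> float:
    """log10 of the number of distinct sequences of a given length (alphabet_size ** length)."""
    return length * math.log10(alphabet_size)


def substitutions(parent: str, variant: str) -> int:
    """Count positions where two equal-length sequences differ (Hamming distance)."""
    if len(parent) != len(variant):
        raise ValueError("sequences must have the same length")
    return sum(a != b for a, b in zip(parent, variant))


def substitutions_for_fraction(length: int, fraction: float) -> int:
    """Number of residues changed when the given fraction of a sequence is redesigned."""
    return math.ceil(length * fraction)


if __name__ == "__main__":
    length = 300  # hypothetical protein length, for illustration only
    print(f"about 10^{sequence_space_log10(length):.0f} possible sequences of length {length}")
    print("single substitution:", substitutions("MKTAYIAK", "MKTAYLAK"))
    print("redesigning half the protein:", substitutions_for_fraction(length, 0.5), "substitutions")
```

For a 300-residue protein this gives roughly 10^390 possible sequences, and altering half of it means about 150 substitutions in one round, compared with the handful per round typical of directed evolution.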

NVIDIA Played a Key Role

Joshua Moller, a data scientist at Evozyne, explained that NVIDIA “scaled jobs to multiple GPUs to speed up training,” helping the team get through entire datasets every minute. Ferguson said that by cutting the time to train large AI models from months to a week, the team could train models, some with billions of trainable parameters, that would otherwise have been impossible.

Evozyne is very optimistic about what the future holds.
