Researchers at Carnegie Mellon University have released a study on the in-field reliability of SSDs, titled “A Large-Scale Study of Flash Memory Failures in the Field.” The study was conducted using Facebook’s datacenters over the course of four years and millions of operational hours. It looks at how errors manifest and aims to help others develop novel flash reliability solutions.

As we have been saying for some time here at StorageReview, flash offers several performance benefits, density benefits, and lower power consumption, with the largest drawback being cost. While many SSDs claim good longevity and reliability, there haven’t been any major in-field studies until now.

Though Facebook uses many SSDs in its hot data tier, the study did not name specific suppliers or drives. It did note the type of platform the SSDs were used in, defined as the combination of the device capacity used in the system, the PCIe technology used, and the number of SSDs in the system. These were labeled platforms A-F. The platforms ranged in capacity from 720GB to 3.2TB and spanned two generations of PCIe (versions 1 and 2); some employed one SSD and others employed two, and the time in operation ranged from half a year to over two years.

The researchers used registers, similar to SMART data, to keep track of SSD operation. The raw values were collected once per hour, parsed into a form that could be curated into a Hive table, and then a cluster of machines performed a parallelized aggregation of the data stored in Hive using MapReduce jobs. From this, the researchers were able to monitor bit error rate, SSD failure rate and error count, the correlation between different SSDs (particularly in platforms that had more than one SSD), and the role of both internal and external factors.
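To make the collect-then-aggregate idea concrete, here is a minimal single-machine sketch in Python of a map and reduce over hourly records. It is not the researchers' pipeline: the record layout, the SSD names, the sample numbers, and the assumption that each record holds per-hour counts (rather than cumulative counters) are all hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from functools import reduce

# Hypothetical hourly records: (ssd_id, bits_read, bit_errors).
# Field names and values are invented for this sketch.
SNAPSHOTS = [
    ("ssd-a", 1_000_000, 2),
    ("ssd-a", 3_000_000, 0),
    ("ssd-b", 2_000_000, 1),
    ("ssd-b", 2_000_000, 3),
]

def map_snapshot(snapshot):
    """Map step: emit a (key, value) pair keyed by SSD."""
    ssd_id, bits_read, bit_errors = snapshot
    return ssd_id, (bits_read, bit_errors)

def reduce_counts(left, right):
    """Reduce step: sum the two counters element-wise."""
    return left[0] + right[0], left[1] + right[1]

def aggregate(snapshots):
    """Group mapped pairs by SSD, then reduce each group to totals."""
    groups = defaultdict(list)
    for key, value in map(map_snapshot, snapshots):
        groups[key].append(value)
    return {key: reduce(reduce_counts, values) for key, values in groups.items()}

def bit_error_rate(bits_read, bit_errors):
    """Errors per bit read; 0.0 when nothing was read."""
    return bit_errors / bits_read if bits_read else 0.0

totals = aggregate(SNAPSHOTS)
rates = {ssd: bit_error_rate(*counts) for ssd, counts in totals.items()}
```

In a real deployment the grouping and reduction would be spread across a cluster; the sketch only shows the shape of the computation that turns hourly records into a per-SSD error rate.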

Conclusions drawn from the study include:

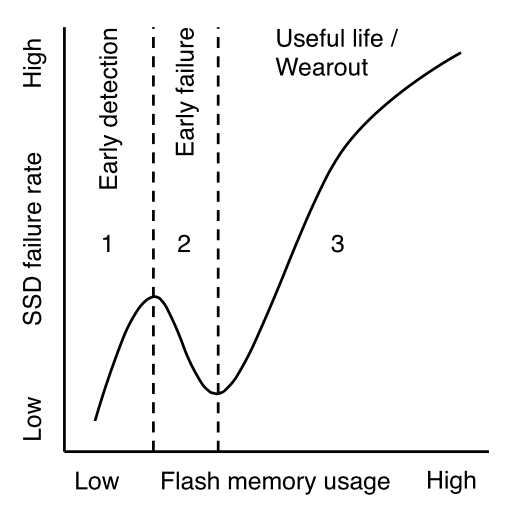

- SSDs go through several distinct failure periods – early detection, early failure, usable life, and wearout – during their lifecycle, corresponding to the amount of data written to flash chips.

- Read disturbance errors are not a predominant source of errors in the SSDs examined.

- Sparse data layout across an SSD’s physical address space (e.g., non-contiguously allocated data) leads to high SSD failure rates; dense data layout (e.g., contiguous data) can also negatively impact reliability under certain conditions, likely due to adversarial access patterns.

- Higher temperatures lead to increased failure rates, but do so most noticeably for SSDs that do not employ throttling techniques.

- The amount of data reported to be written by the system software can overstate the amount of data actually written to flash chips, due to system-level buffering and wear reduction techniques.

While the study doesn’t state that one drive, or type of drive, is better than another, it is still an interesting look at how SSDs perform in the real world under heavy usage. This study opens the door both to future studies of SSD usage and to new technology that could make SSDs more reliable or longer-lasting.

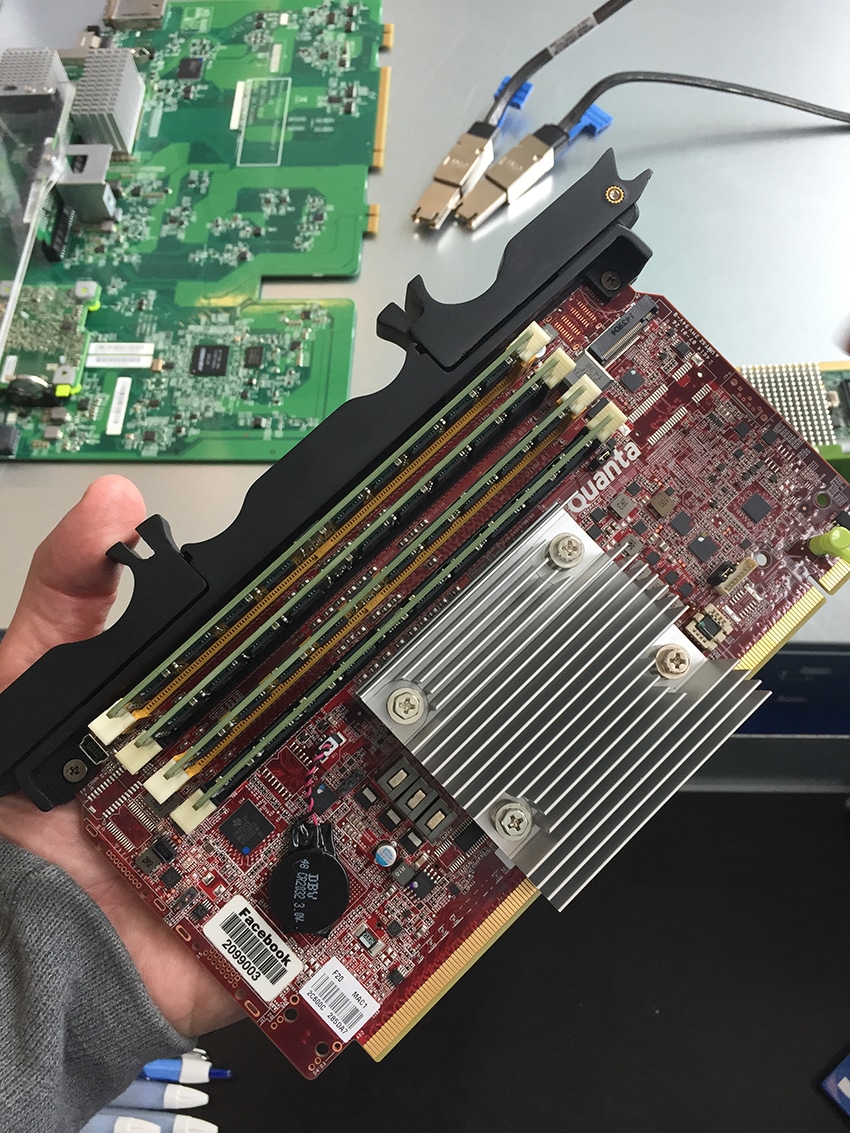

For its part, Facebook has continued to evolve its server designs to account for data points like those derived from the Carnegie Mellon study. Its recently announced Yosemite platform is a timely example of how Facebook is leveraging flash in form factors that are non-traditional, for the datacenter at least, to get the best performance profile with a heavy emphasis on TCO.
