IBM continues to evolve its Storage Scale System 6000 platform to address a familiar problem in modern data centers: data silos that slow down AI initiatives, increase infrastructure complexity, and strand valuable information. By unifying data across edge, core, and cloud through a single global namespace and Active File Management (AFM), the Storage Scale System 6000 is designed to give organizations a consistent, high-performance data platform that can feed GPUs and CPUs at scale. The result is a storage foundation that enables AI pipelines to start immediately and deliver results faster, rather than waiting for legacy storage constraints.

The latest updates center on two top-of-mind themes for organizations today: unlocking dark data and removing AI inference and training bottlenecks. IBM addresses these priorities with a new all-flash expansion enclosure, support for high-capacity QLC NVMe media, and an upgraded software stack that targets both performance and efficiency. Together, these changes position Storage Scale System 6000 as a dense, scalable, and AI-ready storage platform suitable for enterprise, HPC, and AI-as-a-service environments.

Rack-Level Capacity Tripled with High-Density QLC Flash

With its latest enhancements, IBM has tripled the maximum capacity of the Storage Scale System 6000 to 47PB per rack. This increase comes from support for industry-standard QLC NVMe SSDs in 30TB, 60TB, and 122TB configurations. Customers gain more flexibility in matching media type to workload needs, from performance-sensitive tiers to capacity-oriented AI repositories.

The expansion in drive options is especially relevant for AI and analytics pipelines that must store and process massive datasets without fragmenting them across multiple silos or platforms. By consolidating capacity into a single global namespace, Storage Scale System 6000 helps reduce management overhead while still delivering the performance characteristics required for GPU-heavy workloads.

IBM Storage Scale System 7.0.0: Software Enhancements for AI and Data Services

The release of IBM Storage Scale System 7.0.0 software introduces several key enhancements to improve data management efficiency, performance, and resilience in mixed-workload environments.

First, the platform now supports multi-flash tiering with industry-standard NVMe TLC and QLC flash drives within the same system. This capability allows organizations to align cost, performance, and endurance with workload profiles. Hot or latency-sensitive data can be kept on TLC-based tiers, while colder or capacity-focused datasets can reside on dense QLC media. The tiering model provides a more granular way to optimize TCO while maintaining predictable performance.

Second, IBM has introduced a dedicated data acceleration tier that uses high-performance NVMe-oF to feed applications at scale. This tier can deliver up to 340GB/s of throughput and up to 28 million IOPS. It is engineered for high compression efficiency and data density while consuming less power per unit of performance. For AI and HPC teams that need to keep expensive compute fully utilized, this acceleration tier is particularly valuable in minimizing idle GPU time caused by storage bottlenecks.

Third, the data protection framework has been enhanced with broader erasure coding options, including support for 16+2/3P configurations. These wider codes improve disk efficiency and overall storage utilization while also boosting performance and write throughput. The result is a data protection scheme that scales with the platform’s increased capacity and throughput, rather than becoming a bottleneck.

Finally, the software stack integrates with NVIDIA Spectrum-X Ethernet networking to accelerate AI training workflows. Spectrum-X can significantly reduce checkpointing time during AI foundation model training, resulting in shorter training cycles and improved infrastructure utilization. For large-scale training environments, this is a practical lever to reduce time-to-insight while retaining an Ethernet-based fabric.

All-Flash Expansion Enclosure

To meet the demands of workloads that operate on massive datasets, IBM has introduced the IBM Storage Scale System All-Flash Expansion Enclosure. This enclosure is optimized for high-performance AI training, inference, HPC, and other data-intensive workloads that require both high throughput and large, contiguous data sets.

By incorporating 122TB QLC NVMe SSDs, the Storage Scale System 6000 with the All-Flash Expansion Enclosure delivers more than 47PB of high-density, cost-effective flash capacity in a single 42U rack. For organizations looking to build AI factories or large-scale HPC clusters, this provides a clear path to consolidating storage into a smaller footprint without sacrificing performance.

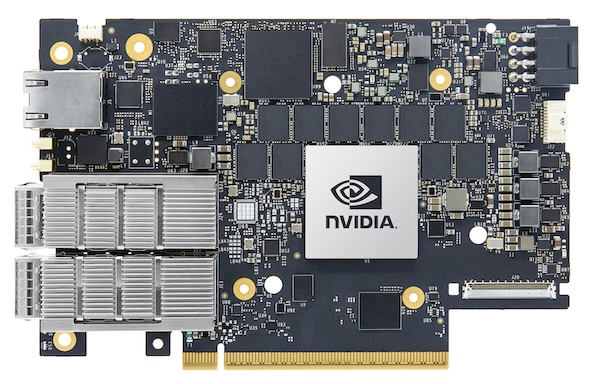

The enclosure is also architected to integrate with NVIDIA BlueField-3 DPUs for acceleration at scale. Network adapters in the solution can provide up to 400Gb/s of high-performance network throughput, along with NVMe-oF offload capabilities for the Storage Scale System. This offload reduces CPU overhead, enabling more host resources to be directed toward AI, analytics, or HPC processing instead of storage and protocol handling.

Each expansion enclosure delivers up to 100 GB/s of throughput and more than 3PB of raw flash capacity in a 2U form factor. This density and performance profile make it a strong fit for environments where rack space, power, and cooling are tightly constrained yet performance expectations are non-negotiable.

The enclosure can support up to 4 NVIDIA BlueField-3 DPUs and up to 26 dual-port QLC SSDs. This combination enables high aggregate bandwidth, rich connectivity options, and flexible scaling of both capacity and offload acceleration as workloads grow.

Multitenancy and Service Provider Use Cases

The scalability of the new All-Flash Expansion Enclosure also aligns with larger cache configurations and multitenant service provider requirements. IBM Storage Scale System 6000 supports highly configurable multitenancy at the cluster, file system, or fileset level.

For service providers and large AI factories, this design provides the tools to isolate workloads, enforce differentiated security policies, and allocate resources precisely to tenants or internal business units. Isolation can be implemented without sacrificing performance or complicating the operational model.

Whether the environment is a large supercomputing deployment, a traditional HPC cluster, or an AI-as-a-service platform, this architecture is built to support secure, scalable, and cost-efficient multitenant operations. Diverse data-intensive workloads can coexist on the same infrastructure while still meeting compliance, performance, and service-level expectations.

Enabling AI at Scale While Supporting Existing Workloads

IT infrastructure teams are pressured to scale AI initiatives while continuing to support existing enterprise workloads and legacy applications. The enhanced IBM Storage Scale System 6000 is designed to address both requirements with a single platform.

By unifying data across protocols, locations, and formats, the system enables globally dispersed teams to collaborate directly on shared data without copying or staging it into separate environments. AFM, IBM’s global caching and data orchestration layer, can now cache much larger working sets of data closer to GPUs, thanks to the tripled capacity and expanded enclosure options. This proximity helps eliminate data silos and reduces data movement overhead, leading to faster, more efficient AI pipelines.

Instead of maintaining separate storage silos for traditional workloads and AI, organizations can standardize on Storage Scale System 6000 as a common data plane. This approach simplifies operations, supports a broad range of protocols and access methods, and still delivers the performance required for modern AI and HPC workflows.

Availability

IBM Storage Scale System 7.0.0 software is scheduled to be available on December 9. General availability for the new IBM Storage Scale System All-Flash Expansion Enclosure is December 12.

Amazon

Amazon