At the 2025 OCP Global Summit, Intel emphasized AI inference by unveiling two key advancements: a new data center GPU called “Crescent Island” and a rack-scale reference design for Gaudi 3. Both developments align with the growing transition from model training to real-time, widespread inference, where factors such as latency, memory bandwidth, efficiency, and operational simplicity are now crucial.

From Static Training to Pervasive, Real-Time Inference

Intel’s CTO, Sachin Katti, summarized the transition by noting that as agentic AI becomes more widespread, inference turns continuous, context-rich, and increasingly system-intensive. To manage the growing token volumes, complex modality combinations, and strict SLA requirements, a heterogeneous infrastructure combining optimal silicon with an open, developer-centric software stack becomes necessary. In this environment, Intel’s Xe architecture data center GPUs offer the capacity and reliability needed as sequence lengths and token rates increase, while Gaudi 3 supports an open, scalable inference ecosystem with predictable total cost of ownership (TCO).

The key takeaway is not simply “faster chips.” Inference success hinges on systems integration: topology-aware memory, flexible interconnects, power and cooling that match utilization profiles, and orchestration that treats models, tokens, and streams as first-class citizens. Enterprises don’t want lock-in at precisely the moment they need to iterate across models, frameworks, and serving stacks.

Spanning PC, Edge, and Data Center

Intel argues it’s uniquely positioned to deliver end-to-end breadth, with AI PCs, industrial edge, and data center racks grounded in Xeon 6 CPUs, Gaudi 3 accelerators, and Intel GPUs. The common themes include:

- PCIe flexibility across diverse deployments: reuse existing server footprints where suitable, and scale to fabric-centric racks when required.

- Rack-scale designs that deliver predictable performance-per-watt, with liquid-cooling options where density and thermal requirements demand them.

- A comprehensive, integrated software platform aimed at ensuring seamless development across various silicon types and deployment levels.

Partnering with OCP supports the company’s preference for open, referenceable designs, which are easier to procure, validate, and scale in operations.

Crescent Island: A Data Center GPU Tuned for Inference Economics

Crescent Island is Intel’s upcoming data center GPU designed for air-cooled enterprise servers that need high token throughput without requiring advanced power or cooling solutions. Its focus is practical: prioritize cost efficiency and energy performance while delivering the memory capacity and bandwidth essential for modern inference tasks.

Highlights include:

- Xe3P microarchitecture focused on performance-per-watt. This aligns well with steady-state inference, where utilization is sustained and cost sensitivity is high.

- 160 GB LPDDR5X memory on-card. For LLM/RAG serving, long-context summarization, and multimodal pipelines, memory capacity and bandwidth often gate QPS and tail latency more than raw TOPS.

- Broad data type support, designed with “tokens-as-a-service” providers in mind. Mixed-precision/quantization flexibility is critical for squeezing throughput while preserving accuracy for production endpoints.

- Software readiness via Intel’s open, unified stack. Early optimization on Arc Pro B-Series GPUs aims to streamline developer workflows and minimize porting friction.

- Timeline: Customer sampling for Crescent Island is expected in the second half of 2026. For buyers planning 2026–2027 refresh cycles, that gives a practical window to pilot the software stack and model-serving patterns now.

Crescent Island is about inference economics at scale: air-cooled, capacity-forward, and perf/W focused. If your workloads are dominated by token-heavy serving with strict latency SLOs, the onboard 160 GB and efficient data types should translate into more concurrent sessions per watt and fewer cluster surprises as sequence lengths creep up.
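To make the capacity argument concrete, here is a rough sizing sketch of how many full-length sessions might fit on a single 160 GB card once weights are loaded. Only the 160 GB figure comes from the announcement. The model shape (80 layers, 8 KV heads, head dimension 128), the 70 GB of 8-bit weights, the FP16 KV cache, and the 10% runtime overhead are hypothetical assumptions chosen for illustration, not Crescent Island specifications or measurements.

```python
def kv_cache_bytes_per_token(num_layers, num_kv_heads, head_dim, bytes_per_elem):
    """Bytes of KV cache that one token occupies across all layers."""
    # Factor of 2: both keys and values are cached in every layer.
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_elem


def max_concurrent_sessions(card_gb, weights_gb, context_tokens,
                            per_token_bytes, overhead_fraction=0.10):
    """Full-length sessions that fit after weights and runtime overhead."""
    usable_bytes = card_gb * 1e9 * (1.0 - overhead_fraction) - weights_gb * 1e9
    if usable_bytes <= 0:
        return 0  # the weights alone do not fit in the usable budget
    return int(usable_bytes // (context_tokens * per_token_bytes))


# Hypothetical 70B-class model with grouped-query attention (assumed shape).
PER_TOKEN_BYTES = kv_cache_bytes_per_token(
    num_layers=80, num_kv_heads=8, head_dim=128, bytes_per_elem=2
)

for context in (8_192, 32_768):
    sessions = max_concurrent_sessions(
        card_gb=160,      # on-card memory, from the announcement
        weights_gb=70,    # assumed: roughly 70B parameters at 8 bits each
        context_tokens=context,
        per_token_bytes=PER_TOKEN_BYTES,
    )
    print(f"{context:>6} tokens/session -> {sessions} concurrent sessions")
```

Under these assumptions the card holds about 27 sessions at 8K context and about 6 at 32K, which shows why capacity, rather than raw TOPS, tends to set the concurrency ceiling as sequence lengths grow. Real serving stacks with paged KV caches and quantized caches will land on different numbers.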

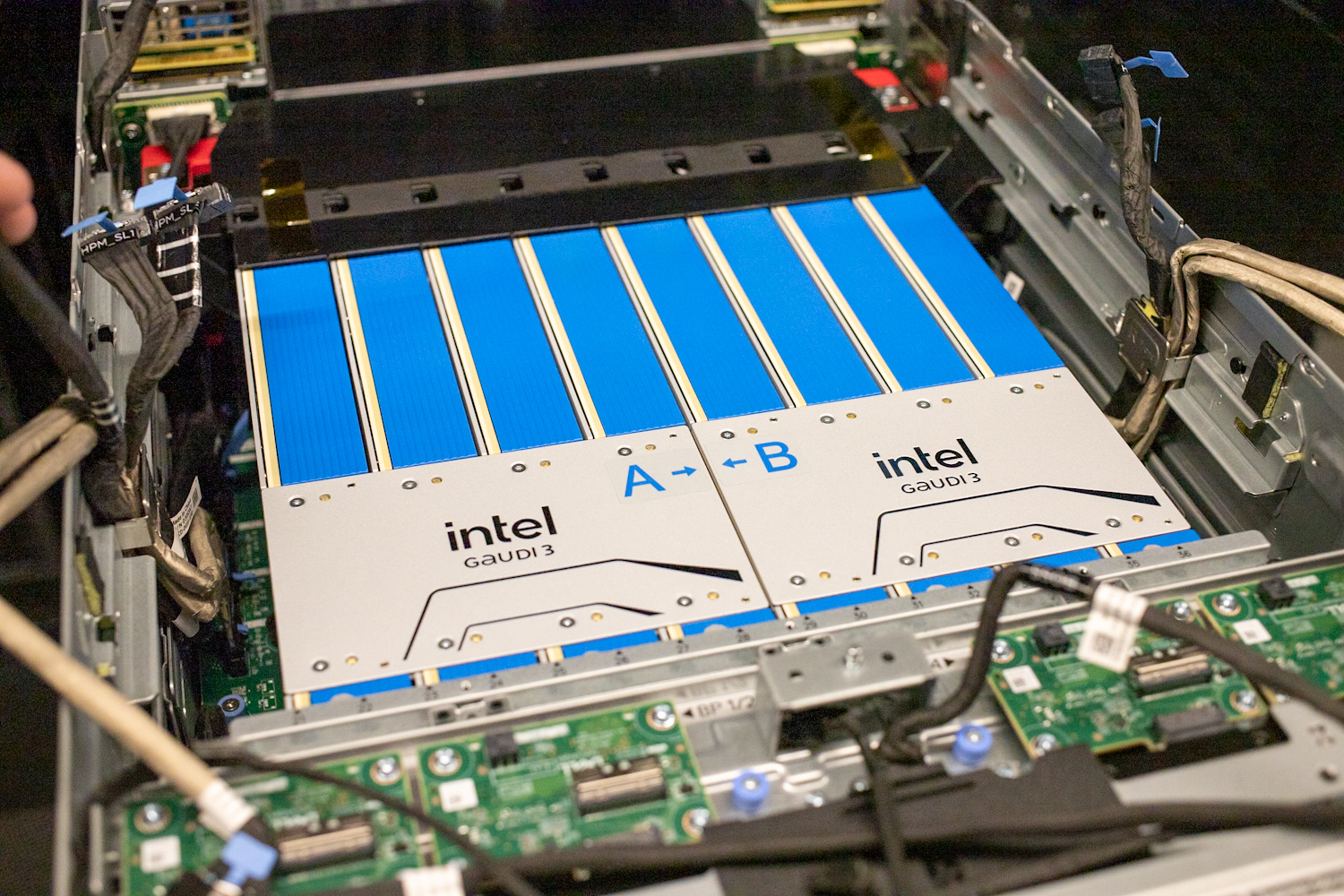

Gaudi 3: Referenceable Rack-Scale for Large Model and Real-Time Inference

Gaudi 3 extends from PCIe deployments to complete rack-scale configurations, offering a path to scale without forcing an all-at-once architectural bet. The new rack-scale reference design targets enterprises standardizing on large-model inference and latency-critical real-time systems.

Key elements:

- PCIe-to-rack path: start with PCIe cards in current servers and extend to racks as model sizes, concurrency, and SLA profiles dictate. This staged approach is a common enterprise strategy and helps minimize project risk.

- Up to 64 accelerators per rack with 8.2 TB of high-bandwidth memory (HBM). The key feature is the size of the memory domain: more parameters and longer contexts stay resident in HBM, resulting in fewer performance drops and more consistent tail latency.

- Liquid cooling options are essential at accelerator densities where air cooling alone limits sustained inference.

- Orchestration builds on an open software stack (Kubernetes, standard model servers, and popular frameworks), which simplifies integration with existing MLOps and service-mesh architectures.

In production inference, memory topology and consistent thermal management matter more than peak theoretical performance. The Gaudi 3 rack, with 8.2 TB of HBM across 64 accelerators, addresses practical needs such as loading multiple large model variants, managing long prompts, and handling bursts without thrashing. At these densities, liquid cooling is less an option than a requirement for sustaining QPS and latency under thermal load.
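The rack-level figures can be sanity-checked with simple arithmetic. The sketch below divides the quoted 8.2 TB across 64 accelerators, which works out to roughly 128 GB each, and then estimates how many model replicas a rack could hold. The model footprints (140 GB and 405 GB of weights) and the 25% of HBM reserved for KV cache and activations are hypothetical assumptions. The calculation also ignores interconnect topology and the common practice of sharding across power-of-two device counts.

```python
import math

RACK_HBM_TB = 8.2            # from the reference design
ACCELERATORS_PER_RACK = 64   # from the reference design


def hbm_per_accelerator_gb(rack_hbm_tb=RACK_HBM_TB,
                           accelerators=ACCELERATORS_PER_RACK):
    """Average HBM per accelerator in GB (decimal units)."""
    return rack_hbm_tb * 1000 / accelerators


def accelerators_per_replica(weights_gb, kv_reserve_fraction=0.25):
    """Accelerators needed to hold one copy of the weights.

    kv_reserve_fraction is an assumed share of each device's HBM that is
    kept free for KV cache and activations.
    """
    usable_gb = hbm_per_accelerator_gb() * (1.0 - kv_reserve_fraction)
    return math.ceil(weights_gb / usable_gb)


def replicas_per_rack(weights_gb, kv_reserve_fraction=0.25):
    """Whole model replicas that fit in one 64-accelerator rack."""
    return ACCELERATORS_PER_RACK // accelerators_per_replica(
        weights_gb, kv_reserve_fraction
    )


print(f"HBM per accelerator: about {hbm_per_accelerator_gb():.0f} GB")
for label, weights_gb in (("140 GB model", 140), ("405 GB model", 405)):
    print(f"{label}: {accelerators_per_replica(weights_gb)} accelerators "
          f"per replica, {replicas_per_rack(weights_gb)} replicas per rack")
```

With these assumed footprints, a 140 GB model needs 2 accelerators per replica (32 replicas per rack) and a 405 GB model needs 5 (12 replicas per rack). The point is the shape of the calculation: aggregate HBM determines how many variants can stay resident at once.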

Timeline

- Software: Intel’s unified, open software stack is being readied on Arc Pro B-Series GPUs to give developers an early window for optimization and CI/CD integration.

- Crescent Island: Customer sampling projected for 2H’26. Plan evaluations around software readiness, memory-driven serving profiles, and air-cooled datacenter constraints.

- Gaudi 3 racks: Reference designs specify up to 64 accelerators/rack, 8.2 TB of HBM, and liquid cooling. Align facilities and power/cooling envelopes early with your DC/colo teams.
