Answering the call for high-performance, green data centers, NVIDIA announced the liquid-cooled A100 PCIe GPU, the first in a line of liquid-cooled GPUs for mainstream servers. NVIDIA made the announcement during Computex 2022 in Taiwan.

As part of a growing movement to build data centers that deliver both high performance and energy efficiency, Equinix’s head of edge infrastructure, Zac Smith, is committed to doing his part toward climate neutrality. Zac manages more than 240 data centers for Equinix, a global service provider, so he understands the importance of shrinking the carbon footprint that drives climate change.

Zac said the 10,000 customers he supports count on his team to deliver more data and more intelligence, often with AI, and they want it delivered more sustainably.

Marking Progress in Efficiency

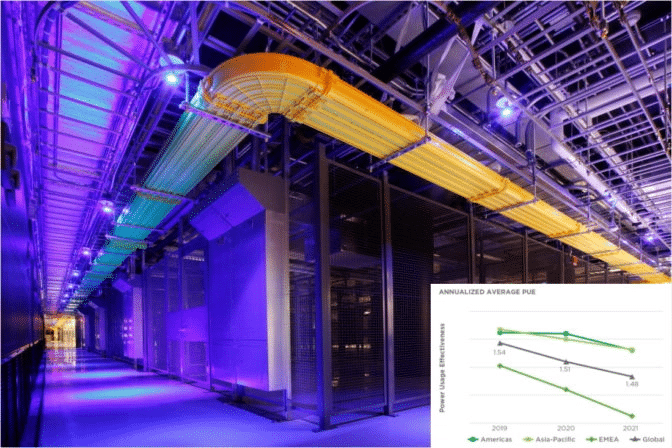

As of April, Equinix has issued $4.9 billion in green bonds. These are investment-grade instruments that Equinix will apply to reducing its environmental impact by optimizing power usage effectiveness (PUE), an industry metric of how much of the energy a data center uses goes directly to computing tasks.

Data center operators are trying to shave that ratio ever closer to the ideal of 1.0 PUE. Equinix facilities average a PUE of 1.48 today, and its best new data centers come in below 1.2.
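For reference, PUE is conventionally defined as the total energy a facility draws divided by the energy delivered to its IT equipment, which is why 1.0 is the ideal. Everything above 1.0 is non-computing overhead such as cooling. A quick worked example using the figures quoted above:

$$
\mathrm{PUE} = \frac{E_{\text{facility}}}{E_{\text{IT}}} \ge 1, \qquad \text{overhead per unit of IT energy} = \mathrm{PUE} - 1
$$

$$
1.48 - 1 = 0.48, \qquad 1.2 - 1 = 0.2
$$

In other words, a 1.48 average means roughly 0.48 kWh of overhead for every kWh of computing, versus under 0.2 kWh at Equinix's best new sites.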

Equinix is making steady progress in the energy efficiency of its data centers as measured by PUE (inset).

In January, Equinix opened a dedicated facility to pursue advances in energy efficiency. One part of that work focuses on liquid cooling. Liquid cooling was born in the mainframe era and is maturing in the age of AI. It’s now widely used inside the world’s fastest supercomputers in a modern form called direct-chip cooling. Liquid cooling is the next step in accelerated computing for NVIDIA GPUs, which, even when air-cooled, deliver up to 20x better energy efficiency than CPUs on AI inference and high-performance computing jobs.

If you switched all the CPU-only servers running AI and HPC worldwide to GPU-accelerated systems, you could save a whopping 11 trillion watt-hours of energy a year. That’s equal to the energy more than 1.5 million homes consume in a year.

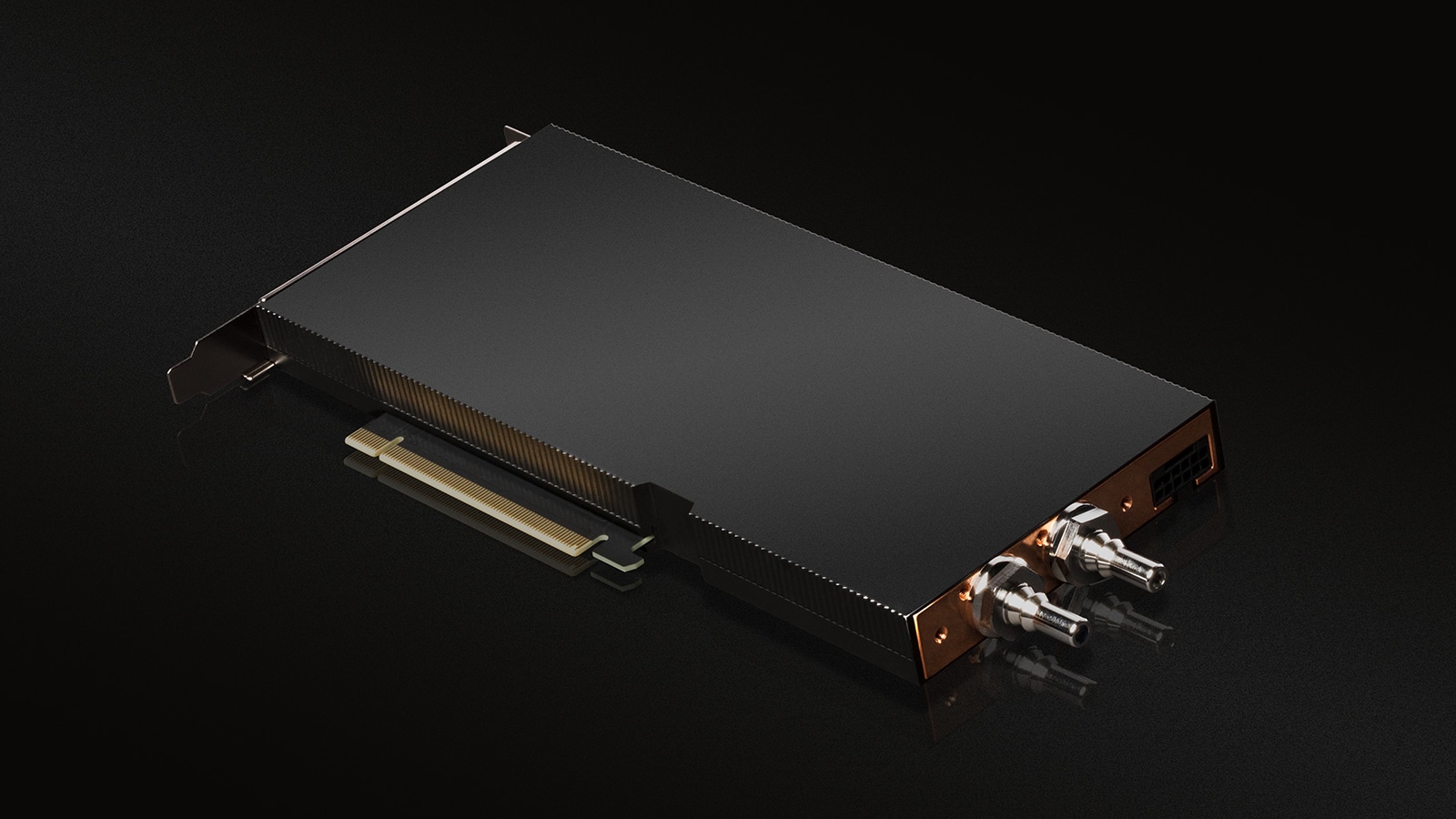

NVIDIA adds to its sustainability efforts by releasing its first data center PCIe GPU using direct-chip cooling. Equinix is qualifying the NVIDIA A100 80GB PCIe Liquid-Cooled GPU for use in its data centers as part of a comprehensive approach to sustainable cooling and heat capture. The GPUs are sampling now and will be generally available this summer.

Saving Water and Power

According to Zac, the NVIDIA PCIe GPU is the first liquid-cooled GPU introduced to the Equinix lab, and Equinix customers are looking for sustainable ways to harness AI.

Data center operators aim to eliminate chillers that evaporate millions of gallons of water a year to cool the air inside data centers. Liquid cooling instead promises closed systems that recirculate small amounts of fluid, focused on key hot spots.

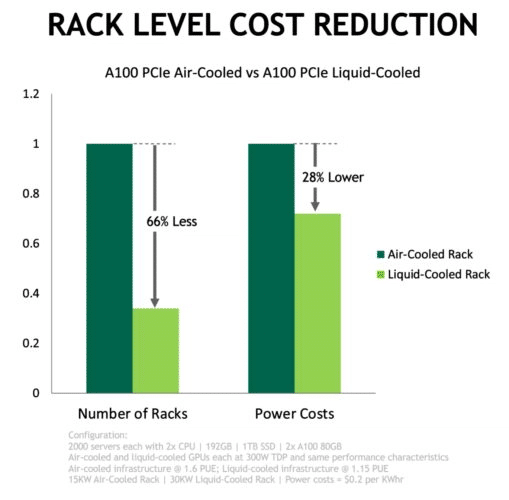

In separate tests, Equinix and NVIDIA found a data center using liquid cooling could run the same workloads as an air-cooled facility while using about 30 percent less energy. NVIDIA estimates the liquid-cooled data center could hit 1.15 PUE, far below 1.6 for its air-cooled cousin. Liquid-cooled data centers can also pack twice as much computing into the same space. That’s because the liquid-cooled A100 GPUs use just one PCIe slot, while the air-cooled A100 GPUs fill two.
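As a back-of-the-envelope check, the sketch below computes how much facility energy the PUE improvement alone would save, assuming both facilities run an identical IT load. The function names are hypothetical, and the calculation ignores any change in the IT equipment's own power draw, so it illustrates the arithmetic rather than reproducing how the 30 percent figure was measured.

```python
def facility_energy(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy implied by an IT load and a PUE (PUE = facility / IT)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_kwh * pue


def relative_saving(pue_baseline: float, pue_new: float, it_energy_kwh: float = 1.0) -> float:
    """Fractional drop in facility energy when only PUE changes (IT load held fixed)."""
    baseline = facility_energy(it_energy_kwh, pue_baseline)
    new = facility_energy(it_energy_kwh, pue_new)
    return 1.0 - new / baseline


if __name__ == "__main__":
    # Figures quoted in the article: 1.6 PUE air-cooled vs. 1.15 PUE liquid-cooled (NVIDIA estimate).
    saving = relative_saving(pue_baseline=1.6, pue_new=1.15)
    print(f"Facility energy reduction from PUE alone: {saving:.1%}")
```

Under these assumptions the PUE gap by itself works out to about 28 percent (1 − 1.15/1.6), close to the roughly 30 percent seen in the tests.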

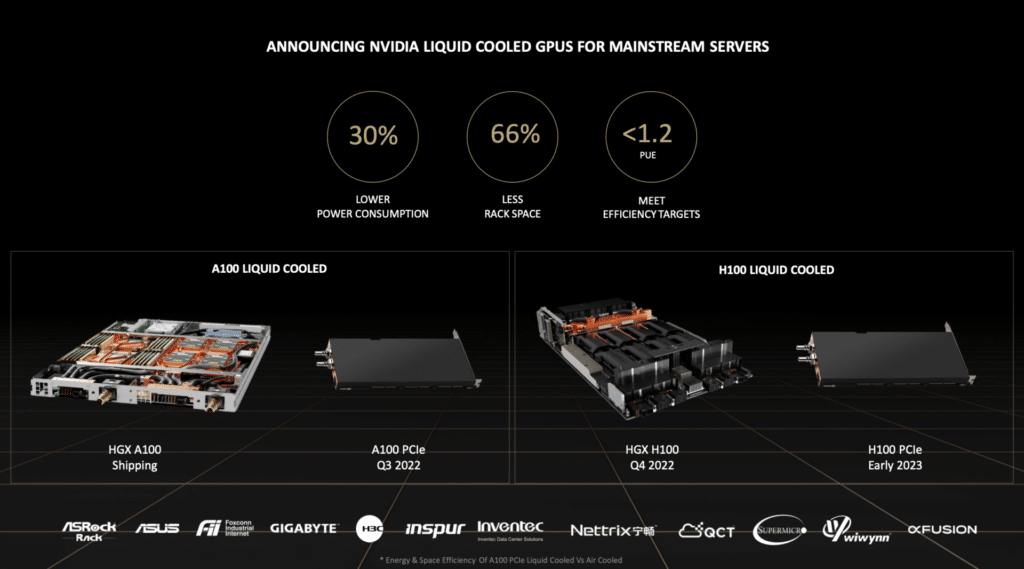

At least a dozen system makers plan to incorporate these GPUs into their offerings later this year. They include ASUS, ASRock Rack, Foxconn Industrial Internet, GIGABYTE, H3C, Inspur, Inventec, Nettrix, QCT, Supermicro, Wiwynn, and xFusion. NVIDIA also showed off HGX reference designs and a liquid-cooled H100 GPU.

A Global Trend

Regulations setting energy-efficiency standards are pending in Asia, Europe, and the U.S. That’s motivating banks and other large data center operators to evaluate liquid cooling. And the technology isn’t limited to data centers. Cars and other machines need it to cool high-performance systems embedded in confined spaces.

The Road to Sustainability

Zac described the debut of liquid-cooled mainstream accelerators as the start of a journey. NVIDIA plans to follow the A100 PCIe card next year with a version using the H100 Tensor Core GPU, based on the NVIDIA Hopper architecture. NVIDIA also intends to support liquid cooling in its high-performance data center GPUs and NVIDIA HGX platforms.

For fast adoption, today’s liquid-cooled GPUs deliver the same performance for less energy. In the future, NVIDIA expects these cards will offer the option of more performance for the same energy, something users say they want.

Learn more about the new A100 PCIe liquid-cooled GPUs.
